Introduction

In this article, we will look at an Android application that captures touch gestures. This module is the first part of a generic touch-based gesture recognition library.

Background

A gesture is a prerecorded touch-screen motion sequence. Gesture recognition is an active research area in the fields of pattern recognition, image analysis, and computer vision.

The application will have several modes of operation. One of the options the user can select is to capture and store a candidate gesture.

The aim is to build a generic C/C++ library that can store gestures in a user-defined format.

Gesture Registration Android Interface

The process of capturing and storing information about candidate gesture classes is called gesture registration.

In the present article, we will use the GestureOverlayView approach. A gesture overlay acts as a simple drawing board on which the user can draw gestures. The developer can modify several visual properties, such as the color and width of the stroke used to draw gestures, and register various listeners to follow what the user is doing.

To capture gestures and process them, the first step is to add a GestureOverlayView to the store_gesture.xml layout file.

<?xml version="1.0" encoding="utf-8"?>

......

......

<android.gesture.GestureOverlayView

android:id="@+id/gestures"

android:layout_width="fill_parent"

android:layout_height="fill_parent"

android:eventsInterceptionEnabled="true"

android:gestureStrokeType="multiple"

android:orientation="vertical" >

<LinearLayout

style="@android:style/ButtonBar"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:orientation="horizontal">

<Button

android:id="@+id/done"

android:layout_width="0dip"

android:layout_height="wrap_content"

android:layout_weight="1"

android:enabled="false"

android:onClick="addGesture"

android:text="@string/button_done" />

<Button

android:layout_width="0dip"

android:layout_height="wrap_content"

android:layout_weight="1"

android:onClick="cancelGesture"

android:text="@string/button_discard" />

</LinearLayout>

</android.gesture.GestureOverlayView>

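The layout also sets several properties of the gesture overlay. The gestureStrokeType attribute controls whether a gesture consists of a single stroke or of multiple strokes; unistroke gestures correspond to the value "single".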

Now, in the main activity file, we just need to set the content view to the layout file. In the present application, the name of the layout file is "activity_open_vision_gesture.xml".

Since we also need to capture and process gestures once they are performed, we add a gesture listener to the overlay. The most commonly used listener is GestureOverlayView.OnGesturePerformedListener, which fires whenever the user finishes drawing a gesture. Here we use a class, GesturesProcessor, that implements GestureOverlayView.OnGestureListener instead, which also reports the start, progress, and cancellation of a gesture.

Once the user has drawn the gesture, control enters the onGestureEnded method. Here we store a reference to the gesture and can then perform a host of activities such as storing or predicting.

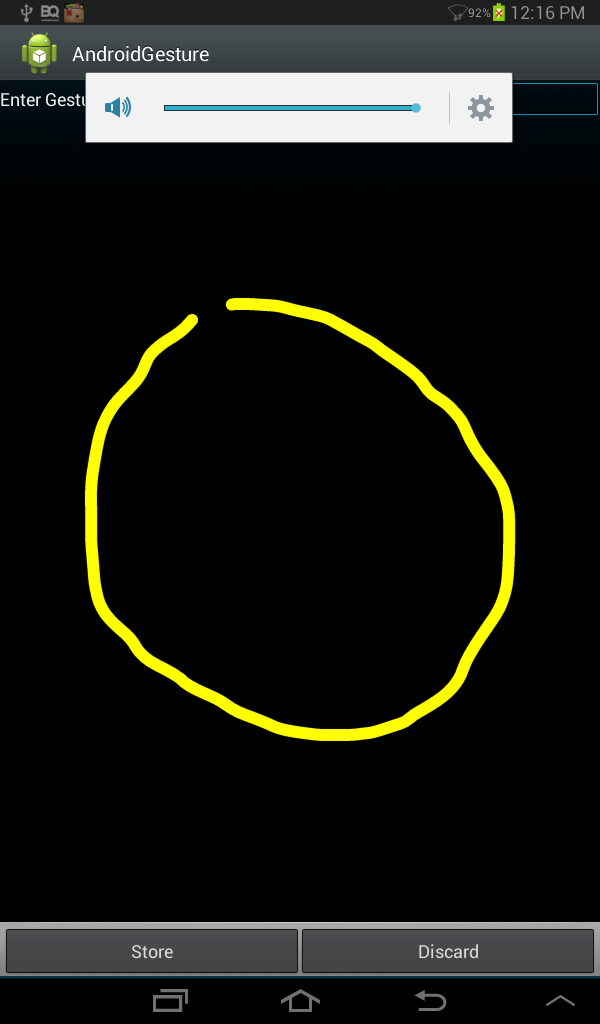

Below is an image of the UI Interface:

private class GesturesProcessor implements GestureOverlayView.OnGestureListener {

public void onGestureStarted(GestureOverlayView overlay, MotionEvent event) {

mDoneButton.setEnabled(false);

mGesture = null;

}

public void onGesture(GestureOverlayView overlay, MotionEvent event) {

}

public void onGestureEnded(GestureOverlayView overlay, MotionEvent event) {

mGesture = overlay.getGesture();

if (mGesture.getLength() < LENGTH_THRESHOLD) {

overlay.clear(false);

}

mDoneButton.setEnabled(true);

}

public void onGestureCancelled(GestureOverlayView overlay, MotionEvent event) {

}

}
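The length check in onGestureEnded can be illustrated without any Android classes. The following is a minimal sketch, assuming that the length of a stroke is the summed distance between consecutive points; the class name StrokeLengthCheck and the threshold value are hypothetical and are not taken from the application.

```java
/** Plain-Java illustration of the length check applied when a gesture ends. */
final class StrokeLengthCheck {
    // Hypothetical threshold in pixels; the application's LENGTH_THRESHOLD value is not shown in the article.
    static final float LENGTH_THRESHOLD = 120.0f;

    /** Sums the distances between consecutive points of an interleaved x,y array. */
    static float pathLength(float[] xy) {
        float length = 0f;
        for (int i = 2; i + 1 < xy.length; i += 2) {
            float dx = xy[i] - xy[i - 2];
            float dy = xy[i + 1] - xy[i - 1];
            length += (float) Math.hypot(dx, dy);
        }
        return length;
    }

    /** Returns true when the stroke is long enough to be kept as a gesture. */
    static boolean isLongEnough(float[] xy) {
        return pathLength(xy) >= LENGTH_THRESHOLD;
    }
}
```

A stroke that fails this check corresponds to the case in which the listener clears the overlay, which filters out accidental taps and very short swipes.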

Upon clicking the Done button, the program enters the "addGesture" callback function.

public void addGesture(View v) {

Log.e("CreateGestureActivity","Adding Gestures");

extractGestureInfo();

}

We define all the JNI interface functions in the class GestureLibraryInterface. It declares two JNI calls into the native C/C++ gesture library.

public class GestureLibraryInterface {

static{Loader.load();}

public native static void addGesture(ArrayList<Float> location,ArrayList<Long> time,String name);

public native static void setDirectory(String name);

}

The first step is to set the directory where the gestures will be stored by calling "setDirectory". This is done when the Android activity is initialized in the "onCreate" function.

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.store_gesture);

mDoneButton = findViewById(R.id.done);

eText = (EditText) findViewById(R.id.gesture_name);

GestureOverlayView overlay = (GestureOverlayView) findViewById(R.id.gestures);

overlay.addOnGestureListener(new GesturesProcessor());

GestureLibraryInterface.setDirectory(DIR);

}

The DIR constant passed to "setDirectory" is defined as a static field of the activity:

.....

private static final String DIR=Environment.getExternalStorageDirectory().getPath()+"/AndroidGesture/v1";

....

The extractGestureInfo function reads the gesture strokes and stores the point locations in an ArrayList, which is passed to the native C/C++ library through the JNI interface.
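The body of extractGestureInfo is not listed in this article. As a rough sketch of the flattening step it describes, the code below turns a list of strokes into the two ArrayList arguments expected by addGesture. The Stroke class is a hypothetical stand-in for the stroke data read from the overlay, and the class and method names are assumptions made for illustration only.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch: flatten recorded strokes into the lists handed to the native addGesture call. */
final class GestureFlattener {
    /** Hypothetical stand-in for one stroke: interleaved x,y values and one timestamp per point. */
    static final class Stroke {
        final float[] xy;
        final long[] timesMs;

        Stroke(float[] xy, long[] timesMs) {
            if (xy.length != 2 * timesMs.length) {
                throw new IllegalArgumentException("expected one timestamp per (x, y) pair");
            }
            this.xy = xy;
            this.timesMs = timesMs;
        }
    }

    /** Concatenates the x,y values of all strokes, in drawing order. */
    static ArrayList<Float> locations(List<Stroke> strokes) {
        ArrayList<Float> out = new ArrayList<>();
        for (Stroke s : strokes) {
            for (float v : s.xy) {
                out.add(v);
            }
        }
        return out;
    }

    /** Concatenates the per-point timestamps of all strokes, in drawing order. */
    static ArrayList<Long> times(List<Stroke> strokes) {
        ArrayList<Long> out = new ArrayList<>();
        for (Stroke s : strokes) {
            for (long t : s.timesMs) {
                out.add(t);
            }
        }
        return out;
    }
}
```

With these two lists, the call into the native library would take the form GestureLibraryInterface.addGesture(locations, times, name), where name is the text entered by the user.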

The JNI C/C++ code associated with the Java class is defined in the files GestureLibraryInterface.cpp and GestureLibraryInterface.hpp.

JNIEXPORT void JNICALL Java_com_openvision_androidgesture_GestureLibraryInterface_addGesture(JNIEnv *, jclass, jobject, jobject, jstring);

JNIEXPORT void JNICALL Java_com_openvision_androidgesture_GestureLibraryInterface_setDirectory(JNIEnv *, jclass, jstring);

float getFloat(JNIEnv *env,jobject value);

long getLong(JNIEnv *env,jobject value);
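The long native function names above follow the JNI naming convention: the prefix Java_, the mangled fully qualified class name, an underscore, and the mangled method name. The helper below is a small sketch of that rule for non-overloaded methods; the class JniNames is hypothetical and exists only to show how the names in the header are derived.

```java
/** Sketch of the JNI short-name rule for non-overloaded native methods. */
final class JniNames {
    /** Applies the JNI escape sequences to a class or method name. */
    static String mangle(String name) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < name.length(); i++) {
            char c = name.charAt(i);
            if (c == '.' || c == '/') {
                sb.append('_');                      // package separator
            } else if (c == '_') {
                sb.append("_1");                     // literal underscore
            } else if (c == ';') {
                sb.append("_2");
            } else if (c == '[') {
                sb.append("_3");
            } else if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') || (c >= '0' && c <= '9')) {
                sb.append(c);
            } else {
                sb.append(String.format("_0%04x", (int) c)); // other characters as lower-case hex
            }
        }
        return sb.toString();
    }

    /** Builds the native symbol name for a method of the given fully qualified class. */
    static String nativeName(String className, String methodName) {
        return "Java_" + mangle(className) + "_" + mangle(methodName);
    }
}
```

For example, nativeName("com.openvision.androidgesture.GestureLibraryInterface", "addGesture") yields the first function name declared in the header.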

The UniStrokeGesture Library consists of the following files:

- UniStrokeGestureLibrary

- UniStrokeGesture

- GesturePoint

The UniStrokeGestureLibrary class encapsulates all the properties of unistroke gestures. It contains methods for storing, retrieving, and predicting gestures, among others.

The "addGesture" JNI method calls the save routine implemented in the class to store the gestures.

The UniStrokeGestureLibrary consists of a sequence of objects of type UniStrokeGesture.

Each UniStrokeGesture object encapsulates all the properties of a single gesture class. The UniStrokeGesture class can store multiple sample instances of a gesture, since a unistroke gesture can be represented by multiple candidate instances.

A UniStrokeGesture contains a sequence of objects of type GesturePoint. Each GesturePoint represents an element of the UniStrokeGesture and is characterized by its location in a 2D grid.

void UniStrokeGestureRecognizer::save(string dir,vector<GesturePoint> points)

{

char abspath1[1000],abspath2[1000];

sprintf(abspath1,"%s/%s",_path.c_str(),dir.c_str());

int count=0;

int ret=ImgUtils::createDir((const char *)abspath1);

count=ImgUtils::getFileCount(abspath1);

sprintf(abspath2,"%s/%d.csv",abspath1,count);

ofstream file(abspath2,std::ofstream::out);

for(int i=0;i<points.size();i++)

{

GesturePoint p=points[i];

file << p.position.x <<",";

file << p.position.y << ",";

}

file.close();

generateBitmap(abspath2);

}
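The naming and file layout used by the save routine can be sketched in plain Java as follows. This is an illustration under stated assumptions rather than a port of the library: the class GestureCsvStore is hypothetical, it counts only the existing CSV files to pick the next file name (the native code calls ImgUtils::getFileCount, whose exact behavior is not shown here), and it writes the coordinates as one comma-separated line.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.stream.Stream;

/** Sketch: store a gesture as <dir>/<n>.csv and read it back. */
final class GestureCsvStore {
    /** Writes interleaved x,y values to the next free numbered CSV file in dir. */
    static Path save(Path dir, float[] xy) throws IOException {
        Files.createDirectories(dir);
        long count;
        try (Stream<Path> entries = Files.list(dir)) {
            count = entries.filter(p -> p.getFileName().toString().endsWith(".csv")).count();
        }
        StringBuilder line = new StringBuilder();
        for (float v : xy) {
            line.append(v).append(',');   // same "x,y,x,y," layout as the native routine
        }
        Path target = dir.resolve(count + ".csv");
        Files.write(target, line.toString().getBytes(StandardCharsets.UTF_8));
        return target;
    }

    /** Parses a file written by save back into interleaved x,y values. */
    static float[] load(Path file) throws IOException {
        String text = new String(Files.readAllBytes(file), StandardCharsets.UTF_8).trim();
        if (text.isEmpty()) {
            return new float[0];
        }
        String[] fields = text.split(",");  // the trailing comma yields no extra field
        float[] xy = new float[fields.length];
        for (int i = 0; i < fields.length; i++) {
            xy[i] = Float.parseFloat(fields[i].trim());
        }
        return xy;
    }
}
```

Numbering files by the current file count keeps each new sample of a gesture class in its own file inside the directory named after that class.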

Consider an example of gesture stored in CSV format:

1.CSV

The generateBitmap function loads the gesture points from the input CSV file and generates a bitmap image that is suitable for display.

void UniStrokeGestureRecognizer::generateBitmap(string file)

{

string basedir=ImgUtils::getBaseDir(file);

string name=ImgUtils::getBaseName(file);

string line;

float x,y;

cv::Mat image=cv::Mat(640,480,CV_8UC3);

image.setTo(cv::Scalar::all(0));

Point x1,x2,x3;

int first=-1;

int delta=20;

vector<GesturePoint> points;

points=loadTemplateFile(file.c_str(),"AA");

Rect R=boundingBox(points);

int i=0;

cv::circle(image,cv::Point((int)points[i].position.x,(int)points[i].position.y),3,cv::Scalar(255,255,0),-1,CV_AA);

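for(i=1;i<points.size();i++)

{

x2=cv::Point((int)points[i-1].position.x,(int)points[i-1].position.y);

x1=cv::Point((int)points[i].position.x,(int)points[i].position.y);

// NOTE: the drawing call was lost from this listing; a line segment joining consecutive points is assumed here.

cv::line(image,x2,x1,cv::Scalar(255,255,0),1,CV_AA);

}

R=Rect(max(0,R.x-delta),max(0,R.y-delta),R.width+2*delta,R.height+2*delta);

if(R.x+R.width>image.cols)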

R.width=image.cols-R.x-1;

if(R.y+R.height>image.rows)

R.height=image.rows-R.y-1;

Mat roi=image(R);

Mat dst;

cv::resize(roi,dst,Size(640,480));

string bmpname=basedir+"/"+name+".bmp";

cv::imwrite(bmpname,dst);

}
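The cropping step in generateBitmap pads the gesture's bounding box by a margin and then clips it to the image so that the region of interest stays valid. The sketch below shows that arithmetic in isolation. The class RoiPadding is hypothetical, and it clips exactly to the image border, whereas the listing above keeps one extra pixel of margin.

```java
/** Sketch: grow a bounding box by delta on each side and clip it to the image. */
final class RoiPadding {
    /** Returns {x, y, width, height} of the padded box, clipped to a cols x rows image. */
    static int[] padAndClip(int x, int y, int width, int height, int delta, int cols, int rows) {
        int nx = Math.max(0, x - delta);   // do not move past the left edge
        int ny = Math.max(0, y - delta);   // do not move past the top edge
        int nw = width + 2 * delta;
        int nh = height + 2 * delta;
        if (nx + nw > cols) {
            nw = cols - nx;                // shrink to end at the right edge
        }
        if (ny + nh > rows) {
            nh = rows - ny;                // shrink to end at the bottom edge
        }
        return new int[] {nx, ny, nw, nh};
    }
}
```

Without the clipping, a gesture drawn close to the border would produce a region that extends outside the image, which OpenCV rejects when the sub-image is extracted.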

Display the Gesture List

The next part of the application deals with displaying the gestures created in the above section in the Android UI.

Upon starting the application, all the BMP files in the template directory are loaded.

The gesture bitmaps are loaded asynchronously in the background.

A ListView is used to display the gesture bitmaps and their associated text.

The layout of each list item is defined in the file gestures_item.xml.

<TextView xmlns:android="http://schemas.android.com/apk/res/android"

android:id="@android:id/text1"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:gravity="center_vertical"

android:minHeight="?android:attr/listPreferredItemHeight"

android:drawablePadding="12dip"

android:paddingLeft="6dip"

android:paddingRight="6dip"

android:ellipsize="marquee"

android:singleLine="true"

android:textAppearance="?android:attr/textAppearanceLarge" />

The layout for the ListView is defined in the main layout file activity_open_vision.xml.

The displayGestures function is defined in OpenVisionGesture.java.

An object of type "AsyncTask", GesturesLoadTask, is defined in the main class file. It is invoked from the displayGestures function and loads the gesture list in the background.

A subclass of AsyncTask must implement doInBackground and can override the other callbacks:

- doInBackground - Main function executed in the background

- onPreExecute - Function invoked before the background task is executed

- onPostExecute - Function invoked after the background task has executed

- onProgressUpdate - Function that can be used to update UI contents while the background task is executing
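The division of work between these callbacks can be mimicked in plain Java. The sketch below is only an analogue, not the Android API: the class BackgroundLoader is hypothetical, a single-thread ExecutorService plays the role of the background thread, and the progress callback runs on the worker thread, whereas Android delivers onProgressUpdate on the UI thread.

```java
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Consumer;

/** Plain-Java analogue of the doInBackground / publishProgress / onPostExecute flow. */
final class BackgroundLoader {
    static final int STATUS_SUCCESS = 0;

    /** Processes each name on a worker thread and reports it through onProgress. */
    static int loadAll(List<String> names, Consumer<String> onProgress)
            throws InterruptedException, ExecutionException {
        ExecutorService worker = Executors.newSingleThreadExecutor();
        try {
            Future<Integer> result = worker.submit(() -> {
                for (String name : names) {
                    onProgress.accept(name);   // stands in for publishProgress(...)
                }
                return STATUS_SUCCESS;         // stands in for the doInBackground result
            });
            return result.get();               // value that onPostExecute would receive
        } finally {
            worker.shutdown();
        }
    }
}
```

Reporting each item as soon as it is ready is what lets a list fill in gradually instead of appearing only after the whole directory has been read.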

In the background task, the code walks through the gesture template directory and reads all the bitmap files.

An ArrayAdapter takes an array and converts its items into View objects to be loaded into the ListView container. We define an adapter that maintains NamedGesture objects, each containing a gesture name and identifier. The "getView" function in the ArrayAdapter class is responsible for converting each Java object to a View.

We maintain a list of bitmaps identified by an ID, as well as a list of gesture names that share the same IDs.

Whenever a bitmap is read, we update the lists and the GUI by calling the "publishProgress" function, which causes the onProgressUpdate function to be called on the main UI thread.

With this approach, the bitmaps populate the list progressively as they are loaded:

@Override

protected Integer doInBackground(Void... params) {

if (isCancelled()) return STATUS_CANCELLED;

if (!Environment.MEDIA_MOUNTED.equals(Environment.getExternalStorageState())) {

return STATUS_NO_STORAGE;

}

Long id=0L;

File list = new File(CreateGestureActivity.DIR);

File[] files = list.listFiles(new DirFilter());

for(int i=0;i<files.length;i++)

{

File[] list1=files[i].listFiles(new ImageFileFilter());

for(int k=0;k<list1.length;k++)

{

BitmapFactory.Options options = new BitmapFactory.Options();

options.inPreferredConfig = Bitmap.Config.ARGB_8888;

Bitmap bitmap = BitmapFactory.decodeFile(list1[k].getPath(), options);

Bitmap ThumbImage = ThumbnailUtils.extractThumbnail(bitmap, mThumbnailSize, mThumbnailSize);

final NamedGesture namedGesture = new NamedGesture();

namedGesture.id=id;

namedGesture.name = files[i].getName()+"_"+list1[k].getName();

mAdapter.addBitmap((Long)id, ThumbImage);

id=id+1;

publishProgress(namedGesture);

bitmap.recycle();

}

}

return STATUS_SUCCESS;

}
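The DirFilter and ImageFileFilter classes used in the loop are not listed in this article. A minimal sketch of what such filters could look like is given below, assuming one sub-directory per gesture name and bitmaps stored with a .bmp extension. The wrapper class GestureFileFilters and its list helper are hypothetical; the helper also guards against File.listFiles returning null when the directory does not exist.

```java
import java.io.File;
import java.io.FileFilter;
import java.util.Locale;

/** Sketch of the two filters used while scanning the gesture template directory. */
final class GestureFileFilters {
    /** Accepts only directories (assumed: one directory per gesture name). */
    static final class DirFilter implements FileFilter {
        @Override
        public boolean accept(File f) {
            return f.isDirectory();
        }
    }

    /** Accepts regular files whose name ends in .bmp, ignoring case. */
    static final class ImageFileFilter implements FileFilter {
        @Override
        public boolean accept(File f) {
            return f.isFile() && f.getName().toLowerCase(Locale.ROOT).endsWith(".bmp");
        }
    }

    /** Null-safe listing: returns an empty array when dir is missing or unreadable. */
    static File[] list(File dir, FileFilter filter) {
        File[] found = dir.listFiles(filter);
        return found != null ? found : new File[0];
    }
}
```

Using the null-safe helper in place of the direct listFiles calls would avoid a NullPointerException on the first launch, before any gesture has been stored.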

Once the adapter's add function is called, the ListView is updated: the "getView" function displays the gesture name and its associated bitmap.

@Override

public View getView(int position, View convertView, ViewGroup parent) {

if (convertView == null) {

convertView = mInflater.inflate(R.layout.gestures_item, parent, false);

}

final NamedGesture gesture = getItem(position);

final TextView label = (TextView) convertView;

label.setTag(gesture);

label.setText(gesture.name);

label.setCompoundDrawablesWithIntrinsicBounds(mThumbnails.get(gesture.id),null, null, null);

return convertView;

}

Code

The files in the ImgApp directory are part of the OpenVision repository, which is available on GitHub at www.github.com/pi19404/OpenVision.

The complete Android project can be found in the samples/Android/AndroidGestureCapture directory of the OpenVision repository. This is an Android project source package that can be imported directly into Eclipse and run. The application was tested on a mobile device running Android 4.1.2. Compatibility with other Android versions has not been tested or considered during development.

You need to have OpenCV installed on your system. The present application was developed on Ubuntu 12.04, and the paths in Android.mk are specified accordingly. For Windows or other operating systems, or if your OpenCV paths differ, modify the makefile accordingly.

The APK file can be downloaded from the links at the top of the article.