Contents

1. Background

2. Preparing the App

3. Text to Speech

4. Voice Recognition

4.1 Voice Recognition Intent

4.2 Using SpeechRecognizer class

5. Working With Audio Recorder

6. Audio Signal Processing

7. Conclusion

1. Background

Speech and audio are among the most important aspects of modern-day apps. Speech recognition and text to speech are features that make your apps more intuitive, and knowledge of audio recording and playback helps too. For instance, if you present a Toast to the user with a simple text, imagine how intuitive it would be to also speak it out.

Or imagine how intuitive it would be to associate voice commands with the menu options of your app. I am sure your users will find it more interesting and will love apps with such innovative features.

However, when I browse Google Play, not many apps leverage these features. A search on the internet also does not turn up many complete tutorials on Android speech and audio processing. Therefore I have decided to write a very basic tutorial to help you understand the principles of audio and speech processing in Android and to help you get started with it!

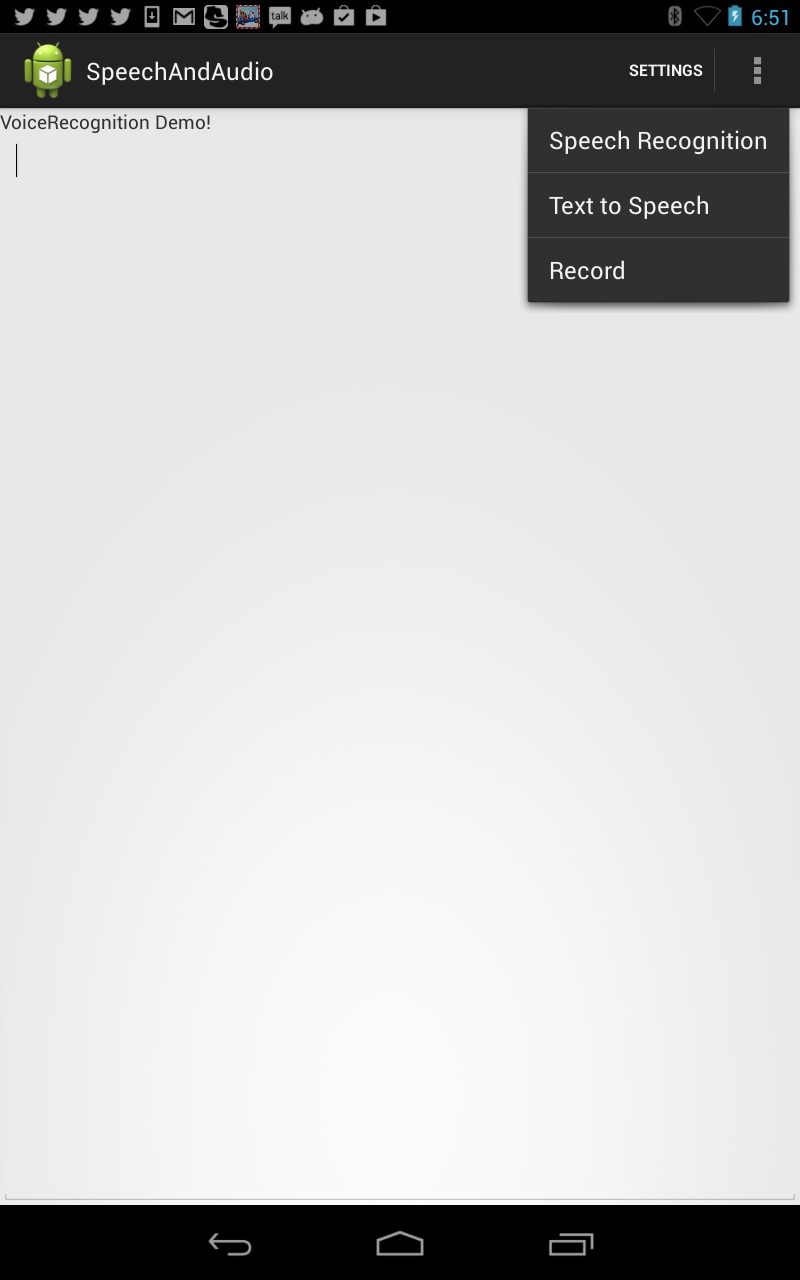

2. Preparing the App

As usual, we will keep our UI simple and focus on getting things to work. First, have a look at the UI.

Figure 2.1 Final UI Of the App

So our UI basically has an EditText that spans the form. It has a menu with three options. The first is for speech recognition (or voice recognition, as you may call it), the second is for speech synthesis or text to speech, and the third is for audio recording. When we select the Record menu item, its title changes to Stop, so the user can select the same item again to stop the recording.

We will first create an Android project in Eclipse with Minimum SDK 14 and Target SDK 14. We will name the project SpeechAndAudio, under the package com.integratedideas. I would, however, urge you to use your own package name.

Here is our res/layout/activity_main.xml

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

android:layout_width="fill_parent"

android:layout_height="fill_parent"

android:orientation="vertical" >

<TextView

android:id="@+id/tvMessage"

android:layout_width="fill_parent"

android:layout_height="wrap_content"

android:text="VoiceRecognition Demo!" />

<EditText

android:id="@+id/edWords"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:layout_weight="0.38"

android:gravity="top"

android:ems="10" >

<requestFocus />

</EditText>

</LinearLayout>

Observe the use of android:gravity="top" for the EditText. Without this line, the cursor sits in the middle of the control and typing starts from the middle.

As seen in figure 2.1, we must also have a menu with the elements shown. We have already learnt how to work with menus in our Android Tutorial on Content Management.

So we will open res/menu/main.xml and modify it as given below.

<menu xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:tools="http://schemas.android.com/tools"

tools:context="com.integratedideas.speechandaudio.MainActivity" >

<item

android:id="@+id/menuVoiceRecog"

android:orderInCategory="100"

android:showAsAction="never"

android:title="Speech Recognition"/>

<item

android:id="@+id/menuTTS"

android:orderInCategory="100"

android:showAsAction="never"

android:title="Text to Speech"/>

<item

android:id="@+id/menuRecord"

android:orderInCategory="100"

android:showAsAction="never"

android:title="Record"/>

</menu>

We have three menu items, menuVoiceRecog, menuTTS and menuRecord, for speech recognition, text to speech and audio recording respectively. Let us also declare an EditText field edWords in MainActivity and initialize it with findViewById, using the edWords id from activity_main.xml.

Let us also update our MainActivity.java

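import android.app.Activity;

import android.content.Intent;

import android.os.Bundle;

import android.view.Menu;

import android.view.MenuItem;

import android.widget.EditText;

import android.widget.Toast;

public class MainActivity extends Activity {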

EditText edWords;

private void showToast(String message)

{

Toast.makeText(getApplicationContext(), message, Toast.LENGTH_SHORT)

.show();

}

@Override

protected void onCreate(Bundle savedInstanceState)

{

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

edWords=(EditText)findViewById(R.id.edWords);

}

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data)

{

super.onActivityResult(requestCode, resultCode, data);

}

@Override

public boolean onCreateOptionsMenu(Menu menu) {

getMenuInflater().inflate(R.menu.main, menu);

return true;

}

@Override

public boolean onOptionsItemSelected(MenuItem item) {

int id = item.getItemId();

return super.onOptionsItemSelected(item);

}

}

Note that we have added the onActivityResult method because we will be working with Intents. With our app environment ready, it is time to go straight to the speech synthesis, or text to speech, part.

Also observe that we have added a simple method called showToast(), which takes a string and shows it in a Toast. A Toast is a short-lived message in Android. It appears near the bottom of the screen, stays visible for a moment and then vanishes, which makes it a great way of letting the user know the result of an action.

3. Text to Speech

Text to Speech (TTS) is a technique by which a system synthesizes speech for given sentences: an artificial voice reads out the words in a natural way.

The android.speech.tts package has a TextToSpeech class that handles TTS. You create it with a Context and an OnInitListener. Once the engine reports that it is initialized, you set the Locale (the language you want the engine to speak) with setLanguage(). After that, you can call the speak method to speak out the text you pass to it.

So let us declare a TextToSpeech object in the class and create it, together with its OnInitListener, in the onCreate method. You will need to import android.speech.tts.TextToSpeech and java.util.Locale.

TextToSpeech tts;

@Override

protected void onCreate(Bundle savedInstanceState)

{

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

edWords=(EditText)findViewById(R.id.edWords);

tts=new TextToSpeech(getApplicationContext(), new TextToSpeech.OnInitListener() {

@Override

public void onInit(int status)

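{

// Set the language only once the engine reports a successful initialization

if (status == TextToSpeech.SUCCESS)

{

tts.setLanguage(Locale.US);

}

}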

});

}

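I have used Locale.US, but java.util.Locale defines other constants too, such as Locale.UK, Locale.FRENCH or Locale.GERMAN. In Eclipse, type Locale followed by a dot and content assist lists them. Note that setLanguage() returns TextToSpeech.LANG_MISSING_DATA or TextToSpeech.LANG_NOT_SUPPORTED if the engine cannot speak the requested language.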

tts has two settings that change the way speech is generated: pitch (setPitch) and speech rate (setSpeechRate). Pitch controls how high or low the voice sounds, and speech rate controls how fast it is spoken. Both are multipliers where 1.0f is normal. For pitch, lower values give a lower tone and higher values a higher tone. For speech rate, 0.5f is half the normal speed and 2.0f is twice the normal speed.
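To make the multiplier semantics concrete, here is a small plain-Java sketch that maps a 0 to 100 slider position onto a rate or pitch multiplier. In this mapping 50 is the normal value (1.0f), 0 is half and 100 is double. The slider and the SpeechRateMapper class are hypothetical and not part of the Android API. On a device you would pass the result to tts.setSpeechRate() or tts.setPitch().

```java
// Hypothetical helper (not an Android API): maps a 0..100 slider position to a
// TextToSpeech multiplier where 50 -> 1.0f (normal), 0 -> 0.5f and 100 -> 2.0f.
final class SpeechRateMapper {
    private SpeechRateMapper() {}

    static float sliderToMultiplier(int progress) {
        // Clamp out-of-range input so callers cannot produce extreme multipliers
        int p = Math.max(0, Math.min(100, progress));
        // 2^((p - 50) / 50): an exponential curve, so halving and doubling
        // are the same distance from the middle of the slider
        return (float) Math.pow(2.0, (p - 50) / 50.0);
    }

    public static void main(String[] args) {
        for (int p : new int[] {0, 25, 50, 75, 100}) {
            System.out.println(p + " -> " + sliderToMultiplier(p));
        }
    }
}
```

The exponential curve is a design choice. A linear mapping would put "half speed" and "double speed" at very different distances from normal.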

So let us now modify the onOptionsItemSelected method and integrate speech synthesis.

public boolean onOptionsItemSelected(MenuItem item) {

int id = item.getItemId();

switch(id)

{

case R.id.menuVoiceRecog:

break;

case R.id.menuTTS:

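tts.setPitch(.8f); // a little lower than the normal pitch (1.0f)

tts.setSpeechRate(.1f); // one tenth of the normal rate (1.0f), i.e. very slow speech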

tts.speak(edWords.getText().toString().trim(), TextToSpeech.QUEUE_FLUSH, null);

break;

case R.id.menuRecord:

break;

}

return super.onOptionsItemSelected(item);

}

As you can see, we call the speak method and pass the text in edWords as the first parameter. The engine speaks it out with the pitch and rate set above. If you do not set them, TTS uses its default values.

Now build the application and run it on your device. I would not bother testing this feature in the emulator, because at the end of the day you will want to test it on a real device.

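4. Voice Recognition

Voice recognition can be implemented in two ways: first, by starting a speech recognition Intent, and second, by using the SpeechRecognizer class, which talks to the recognition service directly without showing a dialog.

4.1 Voice Recognition Intent

The Intent-based method is triggered from the menu. When you want some speech to be recognized, you start a speech recognition Intent. It stays active while you keep speaking and then returns the set of words it identified.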

So let us fill in the menuVoiceRecog case in the onOptionsItemSelected method:

case R.id.menuVoiceRecog:

Intent intent = new Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH);

intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE_MODEL,

RecognizerIntent.LANGUAGE_MODEL_FREE_FORM);

intent.putExtra(RecognizerIntent.EXTRA_PROMPT, "Voice recognition Demo...");

startActivityForResult(intent, REQUEST_CODE);

break;

Whenever we start an activity for a result, we need a request code so that in onActivityResult we can tell which request's result has arrived. So let us declare an int constant in the class:

static final int REQUEST_CODE=1;

When you select this menu option, the system's speech recognition dialog appears and prompts you to speak. Its result is delivered to onActivityResult once you stop speaking.

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data)

{

if (requestCode == REQUEST_CODE && resultCode == RESULT_OK)

{

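ArrayList<String> matches = data.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS);

// The list is ordered best match first; guard against a missing or empty result

if (matches != null && !matches.isEmpty())

{

showToast(matches.get(0));

edWords.setText(edWords.getText().toString().trim()+" "+matches.get(0));

}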

}

super.onActivityResult(requestCode, resultCode, data);

}

The Intent returns the candidate results as a list under the key EXTRA_RESULTS. Element 0 is the best match, element 1 is the second-best match, and so on. Each element is a complete phrase, so even if you speak several words, the whole utterance arrives as the single string in element 0. We take this string and append it to our EditText.
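If you want to be stricter than always trusting element 0, you can also read confidence scores. On API 14 and above, the recognizer may return RecognizerIntent.EXTRA_CONFIDENCE_SCORES. This is a float array parallel to EXTRA_RESULTS, with values from 0.0 to 1.0, or -1 when a score is unavailable. The following plain-Java sketch assumes you have already pulled the list and the array out of the result Intent. The RecognitionPicker class and its minConfidence threshold are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: chooses the recognition result to use and appends it
// to existing text, mirroring what onActivityResult does with edWords.
final class RecognitionPicker {
    private RecognitionPicker() {}

    static String pickBest(List<String> matches, float[] scores, float minConfidence) {
        if (matches == null || matches.isEmpty()) {
            return null; // nothing was recognized
        }
        String top = matches.get(0); // the recognizer orders results best-first
        // A negative score means "not available", so only reject known low scores
        if (scores != null && scores.length > 0 && scores[0] >= 0f && scores[0] < minConfidence) {
            return null;
        }
        return top;
    }

    static String append(String existing, String addition) {
        if (addition == null || addition.trim().isEmpty()) {
            return existing; // nothing to add
        }
        String base = existing == null ? "" : existing.trim();
        return base.isEmpty() ? addition.trim() : base + " " + addition.trim();
    }

    public static void main(String[] args) {
        List<String> matches = Arrays.asList("hello world", "hello word");
        String best = pickBest(matches, new float[] {0.82f, 0.11f}, 0.5f);
        System.out.println(append("Greeting:", best));
    }
}
```

In onActivityResult you might call pickBest(matches, data.getFloatArrayExtra(RecognizerIntent.EXTRA_CONFIDENCE_SCORES), 0.5f) and append only when it returns a non-null value.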

Figure 2.2 : Result of Voice Recognition through Intent

You can see that "all the citizens" was misrecognized as "i am in cities".

4.2 Using SpeechRecognizer class

Having worked with the Intent, which is of little practical use, it's time to create a process that continuously listens for voice and keeps recognizing it.

You might have noticed that when you trigger speech recognition this way, a recognition dialog pops up over your app, which can be really irritating. To run speech recognition without that UI, you can use a SpeechRecognizer object. Create it with the factory method SpeechRecognizer.createSpeechRecognizer(Context) and register a listener with setRecognitionListener(). Its methods must be called from the application's main thread.

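You also need a class that implements RecognitionListener to handle the events related to speech recognition. Declare it as an inner class of MainActivity so that it can reach edWords. It logs with a TAG string, so also declare one in MainActivity, for example private static final String TAG = "SpeechAndAudio";.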

class listener implements RecognitionListener

{

public void onReadyForSpeech(Bundle params)

{

Log.d(TAG, "onReadyForSpeech");

}

public void onBeginningOfSpeech()

{

Log.d(TAG, "onBeginningOfSpeech");

}

public void onRmsChanged(float rmsdB)

{

Log.d(TAG, "onRmsChanged");

}

public void onBufferReceived(byte[] buffer)

{

Log.d(TAG, "onBufferReceived");

}

public void onEndOfSpeech()

{

Log.d(TAG, "onEndofSpeech");

}

public void onError(int error)

{

Log.d(TAG, "error " + error);

}

public void onResults(Bundle results)

{

Log.d(TAG, "onResults " + results);

ArrayList<String> data = results.getStringArrayList(SpeechRecognizer.RESULTS_RECOGNITION);

// Results are ordered best match first; the list may be null or empty

if (data != null && !data.isEmpty())

{

edWords.setText(edWords.getText().toString()+" "+data.get(0));

}

}

public void onPartialResults(Bundle partialResults)

{

Log.d(TAG, "onPartialResults");

}

public void onEvent(int eventType, Bundle params)

{

Log.d(TAG, "onEvent " + eventType);

}

}

The handler we care about is onResults, where we obtain the recognized word (or words) and append it to the edWords control.

Declare a SpeechRecognizer field named sr in MainActivity and initialize it, for example in onCreate, as follows:

sr= SpeechRecognizer.createSpeechRecognizer(this);

sr.setRecognitionListener(new listener());

Finally, in the menuVoiceRecog case of onOptionsItemSelected, sr can be made to start listening:

Intent intent = new Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH);

intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE_MODEL,RecognizerIntent.LANGUAGE_MODEL_FREE_FORM);

intent.putExtra(RecognizerIntent.EXTRA_CALLING_PACKAGE, getPackageName()); // identifies the calling app

intent.putExtra(RecognizerIntent.EXTRA_MAX_RESULTS,5);

sr.startListening(intent);

The only significant difference from the approach in 4.1 is that we pass the Intent to sr.startListening() instead of calling startActivityForResult(). The results then arrive in the listener's onResults instead of onActivityResult.


NOTE:

Remember, this solution does not work reliably on Android 4.1 (Jelly Bean) and above. From that version onwards, starting speech recognition plays a beep, and after a pause of about 4 seconds the recognizer stops listening on its own. In that case you must extend RecognitionService and check whether there is a silence period. Then, in the error handler, mute the sound for about half a second and restart recognition. This hack is not ideal for a beginner's tutorial and is out of scope for this article.
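Whichever workaround you choose, the listener's onError receives a numeric code, and a restart loop needs to decide which codes are worth retrying. Below is a plain-Java sketch. The constants mirror the values of SpeechRecognizer.ERROR_*; they are copied only so the sketch compiles off the device, and in the app you should use the SpeechRecognizer constants directly. The rule of restarting only after a speech timeout or a no-match is an assumption for illustration, not Android's behaviour, and the sketch does not deal with the beep.

```java
// Values mirror android.speech.SpeechRecognizer.ERROR_* (copied for off-device use).
final class RecognizerErrors {
    static final int ERROR_NETWORK_TIMEOUT = 1;
    static final int ERROR_NETWORK = 2;
    static final int ERROR_AUDIO = 3;
    static final int ERROR_SERVER = 4;
    static final int ERROR_CLIENT = 5;
    static final int ERROR_SPEECH_TIMEOUT = 6;
    static final int ERROR_NO_MATCH = 7;
    static final int ERROR_RECOGNIZER_BUSY = 8;
    static final int ERROR_INSUFFICIENT_PERMISSIONS = 9;

    private RecognizerErrors() {}

    // Readable name for the number that onError(int error) receives
    static String name(int error) {
        switch (error) {
            case ERROR_NETWORK_TIMEOUT: return "ERROR_NETWORK_TIMEOUT";
            case ERROR_NETWORK: return "ERROR_NETWORK";
            case ERROR_AUDIO: return "ERROR_AUDIO";
            case ERROR_SERVER: return "ERROR_SERVER";
            case ERROR_CLIENT: return "ERROR_CLIENT";
            case ERROR_SPEECH_TIMEOUT: return "ERROR_SPEECH_TIMEOUT";
            case ERROR_NO_MATCH: return "ERROR_NO_MATCH";
            case ERROR_RECOGNIZER_BUSY: return "ERROR_RECOGNIZER_BUSY";
            case ERROR_INSUFFICIENT_PERMISSIONS: return "ERROR_INSUFFICIENT_PERMISSIONS";
            default: return "UNKNOWN(" + error + ")";
        }
    }

    // Assumed policy: silence or an unmatched utterance is normal while listening
    // continuously, so restart; network, permission or busy errors are not retried.
    static boolean shouldRestart(int error) {
        return error == ERROR_SPEECH_TIMEOUT || error == ERROR_NO_MATCH;
    }

    public static void main(String[] args) {
        for (int e = 1; e <= 9; e++) {
            System.out.println(e + " " + name(e) + " restart=" + shouldRestart(e));
        }
    }
}
```

In the listener, onError could log RecognizerErrors.name(error) and restart listening only when shouldRestart(error) is true.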

5. Working With Audio Recorder

You need to give the app permission to record audio. You also need write access to external storage to be able to store the recorded audio.

So edit your manifest file and add the following lines:

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>

<uses-permission android:name="android.permission.RECORD_AUDIO"/>

To store our audio files in one of the SD card folders, we first create a folder inside the Music folder of the SD card. We already know how to set up an app directory in external storage.

try

{

Log.d("Starting", "Checking up directory");

File mediaStorageDir = new File(Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_MUSIC), "SpeechAndAudio");

if (! mediaStorageDir.exists())

{

if (! mediaStorageDir.mkdirs()) // mkdirs() also creates the Music folder itself if it does not exist yet

{

Log.e("Directory Creation Failed",mediaStorageDir.toString());

}

else

{

Log.i("Directory Creation","Success");

}

}

}

catch(Exception ex)

{

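// getMessage() can be null and Log.e() rejects a null message, so log the exception itself

Log.e("Directory Creation", "Could not create directory", ex);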

}

filePath=Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_MUSIC).getPath()+"/"+"SpeechAndAudio/";
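The same directory setup can be written with File objects instead of string concatenation, which avoids missing or doubled path separators. This is a plain-Java sketch. RecordingDirs is a hypothetical helper, and on the device musicDir would be Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_MUSIC).

```java
import java.io.File;

// Hypothetical helper: builds <musicDir>/<appFolder> and paths inside it.
final class RecordingDirs {
    private RecordingDirs() {}

    // Returns the app directory, creating it and any missing parents if needed.
    // Returns null if the directory cannot be created.
    static File ensureAppDir(File musicDir, String appFolder) {
        File dir = new File(musicDir, appFolder);
        if (dir.isDirectory() || dir.mkdirs()) {
            return dir;
        }
        return null;
    }

    // Joins directory and file name without worrying about "/" separators
    static File recordingFile(File dir, String fileName) {
        return new File(dir, fileName);
    }

    public static void main(String[] args) {
        File music = new File(System.getProperty("java.io.tmpdir"), "Music");
        File dir = ensureAppDir(music, "SpeechAndAudio");
        System.out.println(dir == null
                ? "could not create directory"
                : recordingFile(dir, "test.3gp").getPath());
    }
}
```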

Having set up the directory to store the files, we will go straight to implementing recording. Here is our workflow:

1) When Record is selected in the menu, the menu title changes to "Stop".

2) When recording starts, a MediaRecorder object is initialized to record the audio stream to a file. We create the file name from the current time stamp.

3) Once recording is stopped, we play the just-recorded file using a MediaPlayer object.
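For step 2, the file name pattern matters. With hh (the 12-hour clock) and no seconds, a recording made at 1 PM gets the same name as one made at 1 AM, and two recordings in the same minute overwrite each other. Here is a sketch of a safer name builder in plain Java. RecordingNames is a hypothetical helper name.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;

// Hypothetical helper: builds a time-stamped .3gp file name for a recording.
final class RecordingNames {
    // HH is the 24-hour clock (hh would print 01 for both 1 AM and 1 PM);
    // seconds keep two recordings made in the same minute apart.
    private static final String PATTERN = "yyyyMMdd_HHmmss'.3gp'";

    private RecordingNames() {}

    static String fileNameFor(Date when) {
        // SimpleDateFormat is not thread-safe, so a new instance is created per call.
        // Locale.US keeps the digits ASCII regardless of the device language.
        return new SimpleDateFormat(PATTERN, Locale.US).format(when);
    }

    public static void main(String[] args) {
        System.out.println(fileNameFor(new Date()));
    }
}
```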

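Here is the code for the menuRecord case of the onOptionsItemSelected method. It uses fields declared in MainActivity: the Strings filePath, fileName and fname, a MediaRecorder recorder and a MediaPlayer player.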

case R.id.menuRecord:

if(item.getTitle().toString().equals("Record"))

{

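// HH = 24-hour clock; seconds avoid overwriting two recordings made in the same minute

fileName = new SimpleDateFormat("yyyyMMdd_HHmmss'.3gp'", Locale.US).format(new Date());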

fname=filePath+fileName;

item.setTitle("Stop");

recorder = new MediaRecorder();

recorder.setAudioSource(MediaRecorder.AudioSource.MIC);

recorder.setOutputFormat(MediaRecorder.OutputFormat.THREE_GPP);

recorder.setOutputFile(fname);

recorder.setAudioEncoder(MediaRecorder.AudioEncoder.AMR_NB);

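try {

recorder.prepare();

// Start only after a successful prepare(); start() on an unprepared recorder throws IllegalStateException

recorder.start();

} catch (Exception e) {

Log.e("In Recording", "prepare() failed", e);

// Undo the state change so the next click starts a fresh recording

recorder.release();

recorder = null;

item.setTitle("Record");

}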

}

else

{

item.setTitle("Record");

recorder.stop();

recorder.release();

recorder = null;

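player = new MediaPlayer();

// Free the player's resources once playback has finished

player.setOnCompletionListener(new MediaPlayer.OnCompletionListener() {

@Override

public void onCompletion(MediaPlayer mp) {

mp.release();

}

});

try {

player.setDataSource(fname);

player.prepare();

player.start();

} catch (Exception e) {

Log.e("Player Exception", "prepare() failed", e);

player.release();

}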

}

break;

So now all your recorded files will be inside sdcard/Music/SpeechAndAudio folder which you can play offline too!

6. Audio Signal Processing

One of the important parts of working with speech and audio is processing the audio or speech signal itself. Digital signal processing algorithms range from simple pitch detection to shifting the fundamental frequency of the signal. Google has recently released an NDK-based audio signal processing library called patchfield. However, there is another excellent audio signal processing library, written purely in Java, called TarsosDSP.
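Before reaching for a library, it helps to see what a pitch detector does. The sketch below uses plain time-domain autocorrelation. It compares the buffer with shifted copies of itself and takes the shift (lag) with the strongest match as the period, so pitch = sampleRate / lag. This is a simpler technique than the FFT_YIN estimator used with TarsosDSP below, and the class and parameter names are hypothetical.

```java
// Hypothetical sketch: autocorrelation pitch estimation (not TarsosDSP's algorithm).
final class AutocorrelationPitch {
    private AutocorrelationPitch() {}

    // Returns the estimated pitch in Hz, or -1 if the buffer is (near) silent.
    // minHz/maxHz bound the search so only plausible periods are tested.
    static float estimate(float[] buffer, float sampleRate, float minHz, float maxHz) {
        int minLag = (int) Math.floor(sampleRate / maxHz);
        int maxLag = Math.min(buffer.length - 1, (int) Math.ceil(sampleRate / minHz));

        double energy = 0;
        for (float s : buffer) {
            energy += (double) s * s;
        }
        if (energy < 1e-6 || minLag < 1 || minLag >= maxLag) {
            return -1f; // silence or an impossible search range: no pitch
        }

        int bestLag = -1;
        double bestCorr = 0;
        for (int lag = minLag; lag <= maxLag; lag++) {
            // Similarity between the buffer and itself shifted by 'lag' samples
            double corr = 0;
            for (int i = 0; i + lag < buffer.length; i++) {
                corr += (double) buffer[i] * buffer[i + lag];
            }
            if (corr > bestCorr) {
                bestCorr = corr;
                bestLag = lag;
            }
        }
        // One period lasts bestLag samples, so the frequency is sampleRate / bestLag
        return bestLag > 0 ? sampleRate / bestLag : -1f;
    }

    public static void main(String[] args) {
        float sampleRate = 22050f;
        float[] buffer = new float[1024];
        for (int i = 0; i < buffer.length; i++) {
            buffer[i] = (float) Math.sin(2 * Math.PI * 441 * i / sampleRate);
        }
        System.out.println(estimate(buffer, sampleRate, 50f, 1000f) + " Hz");
    }
}
```

This brute-force version costs buffer length times number of lags per buffer and is prone to octave errors on real voices. That is why libraries such as TarsosDSP provide more robust estimators like YIN.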

You can go to the /latest folder and download TarsosDSP-Android-latest-bin.jar. Copy this library into the libs folder of your project, then clean and build your project to get started with audio signal processing in Android.

Here is a simple yet very effective pitch detection example. First we add a menu item with the id menuAudioProcess to res/menu/main.xml. In the onOptionsItemSelected method we add a case for this menu option:

case R.id.menuAudioProcess:

AudioDispatcher dispatcher = AudioDispatcherFactory.fromDefaultMicrophone(22050,1024,0);

PitchDetectionHandler pdh = new PitchDetectionHandler() {

@Override

public void handlePitch(PitchDetectionResult result,AudioEvent e)

{

final float pitchInHz = result.getPitch();

runOnUiThread(new Runnable() {

@Override

public void run()

{

TextView text = (TextView) findViewById(R.id.tvMessage);

text.setText("" + pitchInHz);

}

});

}

};

AudioProcessor p = new PitchProcessor(PitchEstimationAlgorithm.FFT_YIN, 22050, 1024, pdh);

dispatcher.addAudioProcessor(p);

new Thread(dispatcher,"Audio Dispatcher").start();

break;

Once you select this option, start speaking. The text view shows the detected pitch in Hz. When you stop speaking, no pitch is detected and the value becomes negative (-1), as shown in the image below.

Figure 8.1: Pitch Detection using Audio Signal Processing with TarsosDSP in Android
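Raw Hz values are hard to read, so the handler could show the nearest musical note instead. This plain-Java sketch uses the equal-temperament relation MIDI = 69 + 12·log2(f / 440), where A4 = 440 Hz is MIDI note 69. PitchNames is a hypothetical helper. It treats non-positive values, such as the negative value shown when no pitch is detected, as "no pitch".

```java
import java.util.Locale;

// Hypothetical helper: formats a pitch in Hz together with its nearest note name.
final class PitchNames {
    private static final String[] NAMES =
        {"C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"};

    private PitchNames() {}

    // Nearest MIDI note number in equal temperament; A4 = 440 Hz = note 69
    static int toMidi(float hz) {
        return (int) Math.round(69 + 12 * (Math.log(hz / 440.0) / Math.log(2)));
    }

    static String describe(float hz) {
        if (hz <= 0) {
            return "no pitch"; // negative values mean nothing was detected
        }
        int midi = toMidi(hz);
        if (midi < 0) {
            return String.format(Locale.US, "%.1f Hz", hz); // below the MIDI range
        }
        // MIDI 60 is C4, so the octave number is midi / 12 - 1
        String note = NAMES[midi % 12] + (midi / 12 - 1);
        return String.format(Locale.US, "%.1f Hz (%s)", hz, note);
    }

    public static void main(String[] args) {
        System.out.println(describe(440f));
        System.out.println(describe(261.63f));
        System.out.println(describe(-1f));
    }
}
```

Inside run() you could then call text.setText(PitchNames.describe(pitchInHz)) instead of text.setText("" + pitchInHz).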

Taking this example as the starting point, you can explore the other signal processing features. All you need to do is add other processors to the dispatcher object, where each processor implements a different audio processing algorithm. The general way to work with any of the classes in TarsosDSP that implement AudioProcessor is to first create an AudioEvent from your audio data, and then call process on the AudioProcessor. Once the event has been processed, you can check the contents of the AudioEvent buffer or, in some cases, listen to particular events or callbacks, like the PercussionOnsetDetector's OnsetHandler and its handleOnset() method.
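To make the dispatcher and processor idea concrete without the library, here is a deliberately simplified plain-Java sketch of the same control flow. A dispatcher hands each buffer to a chain of processors in the order they were added. BufferProcessor, LevelMeter and MiniDispatcher are hypothetical types, not TarsosDSP's API. LevelMeter computes the RMS level in dB relative to full scale (20·log10(rms)), the kind of measurement a silence detector relies on.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical processor interface (NOT TarsosDSP's API), for illustration only.
interface BufferProcessor {
    // Return false to stop later processors in the chain from seeing this buffer
    boolean process(float[] buffer);
}

// Measures the RMS level of each buffer in dB relative to full scale (1.0)
final class LevelMeter implements BufferProcessor {
    private double lastDb = Double.NEGATIVE_INFINITY;

    @Override
    public boolean process(float[] buffer) {
        double sum = 0;
        for (float s : buffer) {
            sum += (double) s * s;
        }
        double rms = Math.sqrt(sum / buffer.length);
        lastDb = rms > 0 ? 20 * Math.log10(rms) : Double.NEGATIVE_INFINITY; // silence -> -inf
        return true;
    }

    double lastDb() {
        return lastDb;
    }
}

final class MiniDispatcher {
    private final List<BufferProcessor> chain = new ArrayList<>();

    void addProcessor(BufferProcessor p) {
        chain.add(p);
    }

    // Feed one buffer through every processor in the order they were added
    void dispatch(float[] buffer) {
        for (BufferProcessor p : chain) {
            if (!p.process(buffer)) {
                break;
            }
        }
    }

    public static void main(String[] args) {
        MiniDispatcher dispatcher = new MiniDispatcher();
        LevelMeter meter = new LevelMeter();
        dispatcher.addProcessor(meter);
        float[] buffer = new float[1024];
        for (int i = 0; i < buffer.length; i++) {
            buffer[i] = (float) (0.5 * Math.sin(2 * Math.PI * 441 * i / 22050.0));
        }
        dispatcher.dispatch(buffer);
        System.out.println(meter.lastDb() + " dBFS");
    }
}
```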

7. Conclusion

Audio signal processing and working with audio are very important aspects of multimedia apps in Android. Even though Android provides simple yet effective ways of doing this, not many tutorials on the internet explain how to do audio and sound related work in Android effectively.

I wanted to write a beginner's tutorial on audio and speech signal processing in Android that could serve as a starting point for audio processing. You can build smart applications by incorporating text to speech and pitch detection; for example, voice recognition might be triggered by pitch detection. So you can do some fun stuff with audio. I hope this tutorial encourages you to use audio features more in your apps.