Introduction

This article demonstrates how easy it is to work with OpenGL textures on iOS, along with common working patterns such as the singleton, MVC, outlets and tests. OpenGL is a useful technology, and it is also available on other platforms. The drawback is that on iOS the baseline is the rather old OpenGL ES 2.0 (2007), and the "new" version is OpenGL ES 3.0 (2012).

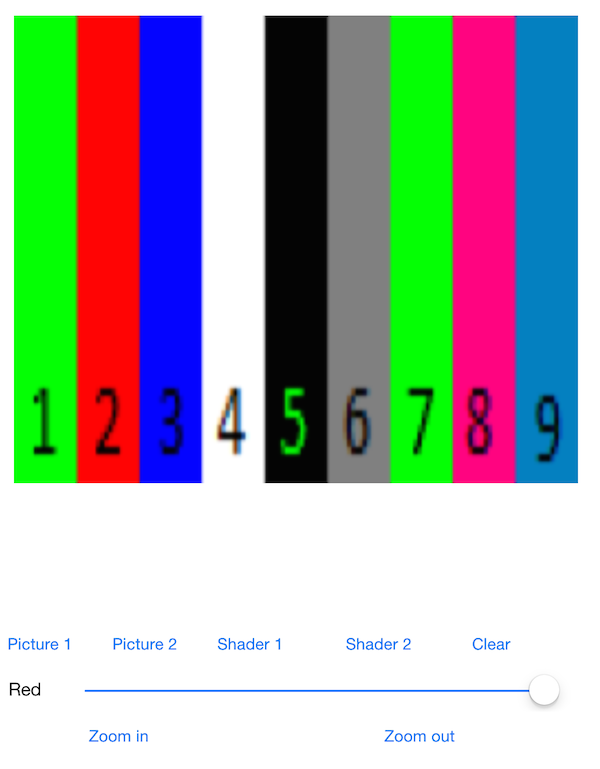

Screenshot: picture selection and a slider for the red filter effect.

Background

I wanted to understand OpenGL better because of its graphics performance and because it is portable enough to reuse in other projects.

Using the code

The included sample project is derived from Xcode's plain template and follows the MVC (Model-View-Controller) pattern, which really helps to separate the code into useful pieces. The ViewController class lives up to its name: it controls how the view works. The display link is important, because it is the callback from the display (more precisely, a timer synchronized with the screen refresh) that triggers new pixel output.

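```objc
- (void)startDisplayLinkIfNeeded
{
    // Create the display link only once; it then calls -display on the
    // OpenGL view every time the screen is about to refresh.
    if (!_displayLink) {
        self.displayLink = [CADisplayLink displayLinkWithTarget:_viewOpenGL selector:@selector(display)];
        [_displayLink addToRunLoop:[NSRunLoop mainRunLoop] forMode:NSRunLoopCommonModes];
    }
}
```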

The GUI is configured in Main.storyboard, where the controls are laid out. They are "connected" to the ViewController via outlets. I found a nice tutorial on this at mobiforge. But enough of the preliminaries.

Start with OpenGL

To make OpenGL easier to use, I designed a class OpenGLContext using a typical Objective-C design pattern: the singleton.

```objc
+ (instancetype)sharedInstance
{
    static OpenGLContext *theInstance = nil;
    static dispatch_once_t onceToken;
    // dispatch_once runs the block exactly once, even if several threads call this method.
    dispatch_once(&onceToken, ^{
        theInstance = [[OpenGLContext alloc] init];
    });
    return theInstance;
}
```
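For readers who want to reuse the idea on another platform, the same lazily created, thread-safe singleton can be sketched in plain C with POSIX `pthread_once`, which, like `dispatch_once`, guarantees that the initializer runs exactly once even when several threads race for it. The `Context` struct and the `context_shared` function are hypothetical stand-ins for OpenGLContext, not part of the sample project.

```c
#include <pthread.h>
#include <stdlib.h>

/* Hypothetical stand-in for the OpenGLContext class. */
typedef struct {
    int version; /* 0 until a rendering context has been created */
} Context;

static Context *shared_context = NULL;
static pthread_once_t shared_once = PTHREAD_ONCE_INIT;

/* Runs exactly once, no matter how many threads call context_shared(). */
static void context_create_shared(void)
{
    shared_context = calloc(1, sizeof *shared_context);
}

/* Counterpart of +sharedInstance: every call returns the same object. */
Context *context_shared(void)
{
    pthread_once(&shared_once, context_create_shared);
    return shared_context;
}
```

As with `dispatch_once`, the object is created on first use and never freed, which is the usual trade-off for a process-wide singleton.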

This object holds the EAGLContext, which is needed to work with OpenGL on iOS, as a property:

```objc
@property(strong, nonatomic) EAGLContext *context;
```

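Here is the algorithm to make it work: if we already have a context, we are done; otherwise we try to create an OpenGL ES 3.0 context, and if that fails, we fall back to an OpenGL ES 2.0 context. The important part is the last call, which sets the context as current. `setCurrentContext:` is a class method of EAGLContext; it makes the context current for the calling thread, so all following OpenGL calls on that thread are sent to it.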

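```objc
// Returns 1 if a context already exists, 0 if one was created, -1 on failure.
- (int)createContext
{
    if (self.context != nil) return 1;

    // Prefer OpenGL ES 3.0 ...
    self.context = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES3];
    if (!self.context) {
        NSLog(@"[Warn] Hardware doesn't support OpenGL ES 3.0 context");
        // ... and fall back to OpenGL ES 2.0.
        self.context = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES2];
        if (!self.context) {
            NSLog(@"[Error] failed to create an OpenGL ES 2.0 context");
            return -1;
        }
    }

    // Make the context current for this thread so that later OpenGL calls use it.
    [EAGLContext setCurrentContext:self.context];
    return 0;
}
```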
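The fallback logic itself does not depend on EAGL, so it can be sketched and unit-tested in plain C. In this sketch the `create_fn` callback, the `API_ES3`/`API_ES2` constants and the two fake creators are hypothetical; on iOS the callback would wrap `[[EAGLContext alloc] initWithAPI:]`, and making the context current is not modeled.

```c
#include <stddef.h>

/* Hypothetical API identifiers (stand-ins for kEAGLRenderingAPIOpenGLES3/2). */
enum { API_ES3 = 3, API_ES2 = 2 };

/* A creator returns a non-NULL handle on success, NULL if the API is unsupported. */
typedef void *(*create_fn)(int api);

/* Same contract as -createContext: 1 if a context already exists, 0 if one was
 * created (stored in *context, its API in *api), -1 if every attempt failed. */
int create_context(void **context, int *api, create_fn create)
{
    static const int preferred[] = { API_ES3, API_ES2 }; /* newest first */

    if (*context != NULL) return 1;

    for (size_t i = 0; i < sizeof preferred / sizeof preferred[0]; i++) {
        void *candidate = create(preferred[i]);
        if (candidate != NULL) {
            *context = candidate;
            *api = preferred[i];
            return 0;
        }
    }
    return -1;
}

/* Hypothetical fakes for testing: a device with only ES 2.0, and one with nothing. */
static int fake_handle;
void *fake_create_es2_only(int api) { return api == API_ES2 ? &fake_handle : NULL; }
void *fake_create_none(int api) { (void)api; return NULL; }
```

Keeping the preferred versions in a table makes adding or dropping a version a one-line change instead of another nested `if`.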

Textures in OpenGL

Textures are the bitmaps of OpenGL, so we need one to show some colored pixels. The code should look similar on other platforms.

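```objc
// width, height and colorData describe the loaded picture as RGBA bytes (4 per pixel).
GLuint texture = 0;
glPixelStorei(GL_UNPACK_ALIGNMENT, 1);  // uploads read client memory, so UNPACK applies
// No glEnable(GL_TEXTURE_2D): that fixed-function switch is not valid in OpenGL ES 2.0/3.0.
glGenTextures(1, &texture);
glActiveTexture(GL_TEXTURE0);           // select texture unit 0 (a unit, not the texture name)
glBindTexture(GL_TEXTURE_2D, texture);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
// Clamping without mipmaps also allows non-power-of-two sizes in OpenGL ES 2.0.
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, width, height, 0, GL_RGBA, GL_UNSIGNED_BYTE, colorData);
```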
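`glPixelStorei(GL_UNPACK_ALIGNMENT, n)` tells OpenGL that each row of the client pixel data starts at a multiple of n bytes (the default is 4). The hypothetical helpers below compute the row stride OpenGL assumes and a buffer size that is always large enough, which shows why the setting only matters when a row is not already a multiple of the alignment, for example with 3-byte RGB pixels.

```c
#include <stddef.h>

/* Bytes per row as OpenGL reads them: width * bytes_per_pixel rounded up to a
 * multiple of alignment (1, 2, 4 or 8, the values GL_UNPACK_ALIGNMENT accepts). */
size_t row_stride(size_t width, size_t bytes_per_pixel, size_t alignment)
{
    size_t row = width * bytes_per_pixel;
    return (row + alignment - 1) / alignment * alignment;
}

/* A client buffer size that is always large enough for glTexImage2D. */
size_t image_bytes(size_t width, size_t height, size_t bytes_per_pixel, size_t alignment)
{
    return height * row_stride(width, bytes_per_pixel, alignment);
}
```

With the default alignment of 4, a 3-pixel-wide RGB row occupies 12 bytes instead of 9; for the 4-byte RGBA pixels used here every row is already a multiple of 4, so the alignment of 1 is harmless.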

Diving into the shaders

Shaders are small programs that run on the GPU and manipulate the graphical output. There are two separate kinds: the vertex shader, which computes the coordinates of each vertex, and the fragment shader, which computes the color of each pixel. Before OpenGL can use them, they have to be compiled, linked into a program and loaded. This is done in the class OpenGLProgram.

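I also want to point out a tricky part of shaders: their variables have different qualifiers, which play a big role in how data flows. A `uniform` holds a value that stays the same for every vertex and pixel of a draw call and is set by the application; an `attribute` is a per-vertex input such as a position or a texture coordinate; and a `varying` is written by the vertex shader and interpolated across the triangle before the fragment shader reads it.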

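```glsl
#version 100

uniform mat4 modelViewProjectionMatrix; // declared but unused: positions arrive in clip space
attribute vec4 position;
attribute vec2 texcoord;
varying vec2 v_texcoord;

void main()
{
    gl_Position = position;    // pass the vertex position through unchanged
    v_texcoord = texcoord.xy;  // hand the texture coordinate to the fragment shader
}
```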
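The fragment shader for the red filter effect is not listed here. Assuming it scales the red channel of each texel by a factor and leaves the other channels alone, its per-pixel work can be mirrored on the CPU in C, which is handy as a reference value in unit tests. The function `apply_red_factor` and the exact formula are assumptions, not taken from the project.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical CPU reference for a red-filter fragment shader: scales the red
 * channel of tightly packed RGBA8 pixels by red_factor and leaves green, blue
 * and alpha untouched, like gl_FragColor = vec4(c.r * factor, c.g, c.b, c.a). */
void apply_red_factor(uint8_t *rgba, size_t pixel_count, float red_factor)
{
    for (size_t i = 0; i < pixel_count; i++) {
        float red = rgba[4 * i] * red_factor;
        if (red < 0.0f) red = 0.0f;           /* the GPU clamps colors written */
        if (red > 255.0f) red = 255.0f;       /* to an 8-bit framebuffer */
        rgba[4 * i] = (uint8_t)(red + 0.5f);  /* round to nearest */
    }
}
```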

The heart of my code is the slider's handler. Whenever the slider value changes, it is passed into an OpenGL program that manipulates how the texture is rendered.

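```objc
- (IBAction)onChange:(id)sender
{
    float value = [_slider value];
    NSLog(@"Slider = %f", value);

    // Format the value as text (e.g. @"0.50") for the RED_FACTOR entry.
    NSString *sFloat = [NSString stringWithFormat:@"%.2f", value];
    NSDictionary *dic = @{@"RED_FACTOR": sFloat};

    // Build a new program from "Shader3" with the modified fragment shader
    // and store it in slot 2 of the context's shader list.
    OpenGLProgram *shader = [[OpenGLProgram alloc] init];
    [shader loadShaders:@"Shader3" FragmentModify:dic];
    [[_openGLContext shaders] setObject:shader atIndexedSubscript:2];

    // Activate the new program, link the texture to its "pixel" variable and redraw.
    self.shader = [_openGLContext useProgramAtIndex:2];
    [_shader linkValue:"pixel" Texture:_textureOpenGL];
    [self.viewOpenGL display];
}
```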
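The `FragmentModify:` dictionary suggests that the slider value is spliced into the fragment shader source as text before compiling. Assuming `OpenGLProgram` replaces every occurrence of a key such as `RED_FACTOR` with its value, that step can be sketched in C; `replace_all` is a hypothetical helper, not part of the project.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: returns a newly allocated copy of source in which every
 * occurrence of key is replaced by value (caller frees). Returns NULL for an
 * empty key or when memory runs out. */
char *replace_all(const char *source, const char *key, const char *value)
{
    size_t key_len = strlen(key), value_len = strlen(value), count = 0;
    if (key_len == 0) return NULL;

    /* First pass: count non-overlapping matches to size the output. */
    for (const char *p = strstr(source, key); p != NULL; p = strstr(p + key_len, key))
        count++;

    size_t out_len = strlen(source) + count * value_len - count * key_len;
    char *out = malloc(out_len + 1);
    if (out == NULL) return NULL;

    /* Second pass: copy the text before each match, then the replacement. */
    char *dst = out;
    const char *src = source;
    for (const char *p = strstr(src, key); p != NULL; p = strstr(src, key)) {
        memcpy(dst, src, (size_t)(p - src));
        dst += p - src;
        memcpy(dst, value, value_len);
        dst += value_len;
        src = p + key_len;
    }
    strcpy(dst, src); /* remaining text and the terminating NUL */
    return out;
}
```

Rebuilding and relinking the program on every slider event is simple but comparatively expensive. A common alternative is to declare a `uniform float` in the fragment shader and update it with `glUniform1f` while the program is in use, so it is compiled only once.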

Testing in Xcode

Xcode has fine support for writing software tests, which I like to use. Take a look at it; it is really worth it. Especially with the measureBlock: API, performance testing is a piece of cake.
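XCTest's `measureBlock:` runs the block several times (ten by default) and reports the average duration. The same idea can be sketched in plain C with the C11 `timespec_get` wall clock; `measure_ns`, the `timing` struct and `example_work` are hypothetical names, and unlike XCTest this sketch reports the fastest and slowest run instead of a standard deviation.

```c
#include <stdint.h>
#include <string.h>
#include <time.h>

/* Hypothetical result type: statistics over all runs, in nanoseconds. */
typedef struct {
    double mean_ns;
    int64_t min_ns;
    int64_t max_ns;
} timing;

/* Wall-clock time via C11 timespec_get (not guaranteed to be monotonic). */
static int64_t now_ns(void)
{
    struct timespec ts;
    timespec_get(&ts, TIME_UTC);
    return (int64_t)ts.tv_sec * 1000000000 + ts.tv_nsec;
}

/* Runs work(arg) `runs` times (runs must be > 0), similar in spirit to measureBlock:. */
timing measure_ns(void (*work)(void *), void *arg, int runs)
{
    timing t = { 0.0, INT64_MAX, INT64_MIN };
    int64_t total = 0;
    for (int i = 0; i < runs; i++) {
        int64_t start = now_ns();
        work(arg);
        int64_t elapsed = now_ns() - start;
        total += elapsed;
        if (elapsed < t.min_ns) t.min_ns = elapsed;
        if (elapsed > t.max_ns) t.max_ns = elapsed;
    }
    t.mean_ns = (double)total / runs;
    return t;
}

/* Example workload: clear a buffer of at least 4096 bytes. */
void example_work(void *arg)
{
    memset(arg, 0, 4096);
}
```

A call such as `measure_ns(example_work, buffer, 10)` mirrors XCTest's default of ten iterations.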

Points of Interest

By diving into OpenGL I learned a lot about the complicated parts, which really helps when rendering large graphics with good performance.

Apple provides good documentation for OpenGL for Embedded Systems, known as OpenGL ES.

My next step is to work with Apple's new Metal technology, which sounds really great.

As ever, I provide a link to the famous Ray Wenderlich site, where a lot of interesting and well-explained material can be found.

History

Initial version.