Introduction

Windows Azure, Microsoft's Cloud Computing offering, has three types of storage available: blob, table, and queue. This article will demonstrate a semi-practical usage for each of these types to help you understand each and how they could be used. The application will contain two roles: a Web Role for the user interface, and a Worker Role for some background processing. The Web Role will have a simple Web application to upload images to blob storage and some metadata about them to table storage. It will then add a message to a queue, which will be read by the Worker Role to add a watermark to the uploaded image.

A Windows Azure account is not necessary to run this application since it will make use of the development environment.

Prerequisites

To run the sample code in this article, you will need:

Windows Azure Overview

Before I begin to build the application, a quick overview of Windows Azure and Roles is necessary. There are many resources available to describe these, so I won't go into a lot of detail here.

Windows Azure is Microsoft's Cloud Computing offering that serves as the development, service host, and service management environment for the Windows Azure Platform. The Platform consists of three pieces: Windows Azure, SQL Azure, and AppFabric.

- Windows Azure: Cloud-based Operating System which provides a virtualized hosting environment, computing resources, and storage.

- SQL Azure: Cloud-based relational database management system that includes reporting and analytics.

- AppFabric: Service bus and access control for connecting distributed applications, including both on-premise and cloud applications.

Windows Azure Roles

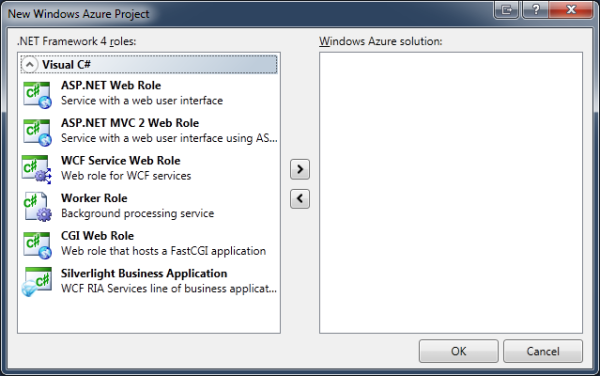

Unlike security related roles that most developers may be familiar with, Windows Azure Roles are used to provision and configure the virtual environment for the application when it is deployed. The figure below shows the Roles currently available in Visual Studio 2010.

Except for the CGI Web Role, these should be self-explanatory. The CGI Web Role is used to provide an environment for running non-ASP.NET web applications such as PHP. This provides a means for customers to move existing applications to the cloud without the cost and time associated with rewriting them in .NET.

Building the Azure Application

The first step is, of course, to create the Windows Azure application to use for this demonstration. After the prerequisites have been installed and configured, you can open Visual Studio and take the normal path to create a new project. In the New Project dialog, expand the Visual C# tree if it is not already expanded, and click Cloud. You will see one template available, Windows Azure Cloud Service.

After selecting this template, the New Cloud Service Project dialog will be displayed, listing the available Windows Azure Roles. For this application, select an ASP.NET Web Role and a Worker Role. After the roles have been added to the Cloud Service Solution list, you can rename them by hovering over the role to display the edit link. You can, of course, add additional Roles after the solution has been created.

After the solution has been created, you will see three projects in the Solution Explorer.

As this article is about Azure Storage rather than Windows Azure itself, I'll briefly cover some of the settings but leave more in-depth coverage for other articles or resources.

Under the Roles folder, you can see two items, one for each of the roles that were added in the previous step. Double-clicking an item, or right-clicking it and selecting Properties from the context menu, opens the Properties page for the given role. The image below is for the AzureStorageWeb Role.

The first section in the Configuration tab is used to select the trust level for the application. These settings should be familiar to most .NET developers. The Instances section tells the Windows Azure Platform how many instances of this role to create and the size of the Virtual Machine to provision. If this Web Role were for a high-volume web application, then selecting a higher number of instances would improve its availability. Windows Azure will handle the load balancing for all of the instances that are created. The VM sizes are as follows:

| Compute Instance Size | CPU | Memory | Instance Storage | I/O Performance |
|---|---|---|---|---|
| Extra Small | 1.0 GHz | 768 MB | 20 GB | Low |
| Small | 1.6 GHz | 1.75 GB | 225 GB | Moderate |
| Medium | 2 x 1.6 GHz | 3.5 GB | 490 GB | High |
| Large | 4 x 1.6 GHz | 7 GB | 1,000 GB | High |
| Extra Large | 8 x 1.6 GHz | 14 GB | 2,040 GB | High |

The Startup action is specific to Web Roles and, as you can see, allows you to designate whether the application is accessed via HTTP or HTTPS. The Settings tab should be familiar to .NET developers, and is where any additional settings for the application can be created. Any settings added here will be placed in the ServiceConfiguration and ServiceDefinition files since they apply to the service itself, not specifically to a role project. Of course, the projects also have the web.config and app.config files that are specific to them.

The EndPoints tab allows you to define the endpoints that will be exposed for the Role. In this case, the Web Role can be configured for HTTP or HTTPS with a specific port and, if appropriate, an SSL certificate.

Web Role

Since this article is meant to focus on Azure Storage, I'll keep the UI simple. However, with some styles, a simple interface can still look good with little effort.

There is nothing special about the web application; it is just like any other web app you have built. There is one class that is unique to Azure, however: the WebRole class. All Roles in Windows Azure must have a class that derives from RoleEntryPoint. This class is used by Windows Azure to initialize and control the application. The default implementation provides a bare-bones override for the OnStart method. If any actions need to be taken before the application starts, they should be handled here. Likewise, the Run and OnStop methods can be overridden to perform an action when the role runs and before it is stopped, respectively.

public override bool OnStart()

{

return base.OnStart();

}

One thing you will want to add here is the ability to restart the role when a change is made to the configuration file. Curiously, this was the default implementation with Windows Azure Tools for Visual Studio v1.1 but not with v1.3. This code assigns a handler for the RoleEnvironment.Changing event to allow the Role to be restarted if the configuration changes, such as when the instance count is increased or a new setting is added.

public override bool OnStart()

{

RoleEnvironment.Changing += RoleEnvironmentChanging;

return base.OnStart();

}

private void RoleEnvironmentChanging(object sender, RoleEnvironmentChangingEventArgs e)

{

if(e.Changes.Any(change => change is RoleEnvironmentConfigurationSettingChange))

{

e.Cancel = true;

}

}

Azure Storage

As I've said, there are three types of storage available with the Windows Azure Platform: blob, table, and queue.

Blob Storage

Binary Large Object, or blob, should be familiar to most developers and is used to store things like images, documents, or videos; something larger than a name or ID. Blob storage is organized by containers that can have two types of blob: Block and Page. The type of blob needed depends on its usage and size. Block blobs are limited to 200 GB, while Page blobs can go up to 1 TB. Note, however, that in development, storage blobs are limited to 2 GB. Blob storage can be accessed via RESTful methods with a URL such as: http://myapp.blob.core.windows.net/container_name/blob_name.

Although blob storage isn't hierarchical, a hierarchy can be simulated through naming. Blob names can contain /, so you can have names such as:

- http://myapp.blob.core.windows.net/container_name/2009/10/4/photo1

- http://myapp.blob.core.windows.net/container_name/2009/10/4/photo2

- http://myapp.blob.core.windows.net/container_name/2008/6/25/photo1

Here it appears that the blobs are organized by year, month, and day; however, in reality, the names of blobs are like 2009/10/4/photo1, 2009/10/4/photo2, and 2008/6/25/photo1.
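To make the idea of a simulated hierarchy concrete, here is a minimal sketch that derives "virtual directories" from a flat list of blob names by treating / as a delimiter. It does not use the Azure Storage API at all; the BlobNames class and VirtualDirectories method are hypothetical names of my own, used purely for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, not part of the Azure SDK: shows that "folders"
// are nothing more than shared prefixes of flat blob names.
public static class BlobNames
{
    // Returns the distinct virtual directories found directly under 'prefix'.
    public static List<string> VirtualDirectories(IEnumerable<string> blobNames, string prefix)
    {
        return blobNames
            .Where(name => name.StartsWith(prefix, StringComparison.Ordinal))
            .Select(name => name.Substring(prefix.Length))
            // Only names with a further '/' contribute a directory at this level.
            .Where(rest => rest.Contains("/"))
            .Select(rest => prefix + rest.Substring(0, rest.IndexOf('/') + 1))
            .Distinct()
            .OrderBy(dir => dir, StringComparer.Ordinal)
            .ToList();
    }
}
```

Calling BlobNames.VirtualDirectories with the three names above and an empty prefix yields the two "year" directories, while a prefix of "2009/10/" yields the single "day" directory beneath it.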

Block Blob

Although a Block blob can be up to 200 GB, if it is larger than 64 MB, it must be sent in multiple chunks of no more than 4 MB each. Storing a Block blob is also a two-step process; the blocks must be committed before the blob becomes available. When a Block blob is sent in multiple chunks, they can be sent in any order. The order in which the blocks are listed in the commit call determines how the blob is assembled. Thankfully, as we'll see later, the Azure Storage API hides these details so you won't have to worry about them unless you want to.
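The chunking described above can be sketched without touching the storage API. The following is only an illustration of the arithmetic: the BlockPlanner class is a hypothetical name, and the choice of a zero-padded counter as the block ID is my own assumption (the service expects Base64-encoded IDs of equal length within a blob).

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper, not part of the Azure SDK: plans how a payload
// would be divided into blocks of at most 4 MB.
public static class BlockPlanner
{
    public const int MaxBlockSize = 4 * 1024 * 1024; // the 4 MB limit quoted above

    // Returns (blockId, offset, length) for each block, in assembly order.
    public static List<Tuple<string, long, int>> Plan(long totalBytes)
    {
        var blocks = new List<Tuple<string, long, int>>();
        long offset = 0;
        int index = 0;
        while (offset < totalBytes)
        {
            int length = (int)Math.Min(MaxBlockSize, totalBytes - offset);
            // Assumption: a six-digit counter keeps every ID the same length.
            string id = Convert.ToBase64String(Encoding.UTF8.GetBytes(index.ToString("D6")));
            blocks.Add(Tuple.Create(id, offset, length));
            offset += length;
            index++;
        }
        return blocks;
    }
}
```

The blocks in the returned list could be uploaded in any order; it is the order of the IDs in the final commit that decides how the blob is put together.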

Page Blob

A Page blob can be up to 1 TB in size, and is organized into 512-byte pages within the blob. This means any point in the blob can be accessed for read or write operations by using the offset from the start of the blob. This is the advantage of using a Page blob rather than a Block blob, which can only be accessed as a whole.
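Because a Page blob is addressed in 512-byte pages, a write to an arbitrary byte range has to be widened to whole pages. The sketch below shows only that arithmetic; PageMath and its members are hypothetical names and no storage calls are involved.

```csharp
using System;

// Hypothetical helper, not part of the Azure SDK: page-boundary arithmetic.
public static class PageMath
{
    public const int PageSize = 512;

    // Rounds an offset down to the start of its page.
    public static long AlignDown(long offset)
    {
        return offset - (offset % PageSize);
    }

    // Rounds a position up to the next page boundary (unchanged if already aligned).
    public static long AlignUp(long position)
    {
        return ((position + PageSize - 1) / PageSize) * PageSize;
    }

    // Returns the page-aligned [start, end) range covering 'count' bytes at 'offset'.
    public static Tuple<long, long> CoveringRange(long offset, long count)
    {
        return Tuple.Create(AlignDown(offset), AlignUp(offset + count));
    }
}
```

For example, changing 100 bytes at offset 700 touches only the second page, so the covering range runs from 512 up to (but not including) 1024.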

Table Storage

Azure tables are not like tables from an RDBMS like SQL Server. They are composed of a collection of entities and properties, with properties further containing collections of name, type, and value. The thing to realize, and what may cause a problem for some developers, is that Azure tables can't be accessed using ADO.NET methods. As with all other Azure storage methods, RESTful access is provided: http://myapp.table.core.windows.net/TableName.

I'll cover tables in-depth later when getting to the actual code.

Queue Storage

Queues are used to transport messages between applications, Azure-based or not. Think of Microsoft Message Queuing (MSMQ) for the cloud. As with the other storage types, RESTful access is available as well: http://myapp.queue.core.windows.net/Queuename.

Queue messages can only be up to 8 KB; remember, a queue isn't meant to transport large objects, only messages. However, the message can be a URI to a blob or table entity. Where Azure Queues differ from traditional queue implementations is that they are not strict FIFO containers. Reading a message does not remove it; the message remains in the queue until it is explicitly deleted. When a message is read by one process, it is marked as invisible to other processes for a variable time period, which defaults to 30 seconds and can be no more than 2 hours; if the message hasn't been deleted by then, it becomes visible again and is available for processing. Because of this behavior, there is also no guarantee that messages will be in any particular order.
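The visibility behavior is easier to follow with a small in-memory model. The FakeQueue class below is entirely hypothetical and only imitates the semantics described above (get hides, delete removes, a timeout makes the message reappear); it is not how the service is implemented, and time is passed in explicitly so the behavior is easy to trace.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-in for illustration only.
public class FakeQueue
{
    private class Entry
    {
        public string Body;
        public DateTime VisibleAt;
    }

    private readonly List<Entry> entries = new List<Entry>();

    public void Add(string body, DateTime now)
    {
        entries.Add(new Entry { Body = body, VisibleAt = now });
    }

    // Returns the first visible message and hides it for the timeout.
    // The message is NOT removed; only Delete does that.
    public string Get(DateTime now, TimeSpan visibilityTimeout)
    {
        Entry entry = entries.FirstOrDefault(e => e.VisibleAt <= now);
        if (entry == null)
        {
            return null;
        }
        entry.VisibleAt = now + visibilityTimeout;
        return entry.Body;
    }

    // Removes the first message with the given body; returns false if none exists.
    public bool Delete(string body)
    {
        Entry entry = entries.FirstOrDefault(e => e.Body == body);
        return entry != null && entries.Remove(entry);
    }
}
```

If a consumer gets message "a" and never deletes it, a later Get made after the timeout has elapsed returns "a" again, possibly after "b" has already been handed out, which is why ordering cannot be relied upon.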

Building the Storage Methods

To start with, I'll add another project to the solution, a Class Library project. This project will serve as a container for the storage methods and implementation used in this solution. After creating the project, you'll need to add a reference to the Windows Azure Storage assembly, Microsoft.WindowsAzure.StorageClient.dll, which can be found in the Windows Azure SDK folder.

Since a CloudStorageAccount is necessary for any access, I'll create a base class to contain a property for it.

public static CloudStorageAccount Account

{

get

{

return CloudStorageAccount.FromConfigurationSetting

("Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString");

}

}

There are two ways to obtain the CloudStorageAccount object. Since the application is being run in a development environment, the property could simply return the static CloudStorageAccount.DevelopmentStorageAccount property. However, before deployment, this would need to be updated to an actual account. With the FromConfigurationSetting method used above, the account information is instead retrieved from the configuration file, similar to database connection strings in an app.config or web.config file. Before the FromConfigurationSetting method can be used, though, you must add some code to the OnStart method of each role. The Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString setting comes from the Settings tab and is stored in the ServiceConfiguration.cscfg file. The name is a bit long, so feel free to rename it in the Settings tab as you wish.

CloudStorageAccount.SetConfigurationSettingPublisher((configName, configSetter) =>

{

configSetter(RoleEnvironment.GetConfigurationSettingValue(configName));

RoleEnvironment.Changed += (sender, arg) =>

{

if(arg.Changes.OfType<RoleEnvironmentConfigurationSettingChange>()

.Any((change) => (change.ConfigurationSettingName == configName)))

{

if(!configSetter(RoleEnvironment.GetConfigurationSettingValue(configName)))

{

RoleEnvironment.RequestRecycle();

}

}

};

});

This code basically tells the runtime to use the configuration file for setting information, and also sets an event handler for the RoleEnvironment.Changed event to detect any changes to the configuration file. If a change is detected, the Role will be restarted so those changes can take effect. This code also makes the default RoleEnvironment.Changing event handler implementation unnecessary since they both do the same thing, restarting the role when a configuration change is made.

Setting up the Environment

Before you can start using the Azure Storage elements, they must first be created. Since this should only be done once, a good place to do so is in the OnStart method of each role. I'll add a call there to a method which creates the Queue, Blob container, and Table as necessary. The CreateIfNotExist methods return true if the element did not exist and was created. For this sample, though, it really doesn't matter, so I'll ignore the result.

public static void CreateContainersQueuesTables()

{

// Each call returns true only if the element had to be created.

bool created = Blob.CreateIfNotExist();

created = Queue.CreateIfNotExist();

created = Table.CreateTableIfNotExist(TABLE_NAME);

}

Implementing Blob Storage

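The first thing you need is a reference to a CloudBlobClient object to access the methods. There are two ways to do this: call the CreateCloudBlobClient extension method on the account, as shown here, or construct a CloudBlobClient directly from the account's blob endpoint and credentials. Both produce the same result; the first is just less typing, while the second gives more control over the creation.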

private static CloudBlobClient BlobClient

{

get

{

return Account.CreateCloudBlobClient();

}

}

Uploading the blob is a relatively easy task.

protected static CloudBlobContainer Blob

{

get { return BlobClient.GetContainerReference(BLOB_CONTAINER_NAME); }

}

public static string PutBlob(Stream stream, string fileName)

{

CloudBlob blobRef = Blob.GetBlobReference(fileName);

blobRef.UploadFromStream(stream);

return blobRef.Uri.ToString();

}

As you can see, the first step is to retrieve a reference to the container. With this container, the next step is getting a reference to the CloudBlob to upload the file into. If a CloudBlob already exists with the specified name, it will be overwritten. After obtaining a reference to the CloudBlob object, it's just a matter of calling the appropriate method to upload the blob. In this case, I'll use the UploadFromStream method since the file is coming from the ASP.NET FileUpload control as a stream; however, there are other methods depending on the environment and usage, such as UploadFile, which uses the path of a physical file. All of the upload and download methods also have asynchronous counterparts.

One thing to note here is that container names must be lowercase. If you try a name with capital letters, you will receive a rather cryptic and uninformative StorageClientException with the message "One of the request inputs is out of range." Further, the InnerException will be a WebException with the message "The remote server returned an error: (400) Bad Request."
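Since the exception gives so little to go on, it can help to check a container name before sending it to the service. Here is a minimal sketch based on the documented naming rules as I understand them (3 to 63 characters; lowercase letters, digits, and hyphens; starting and ending with a letter or digit; no consecutive hyphens). The ContainerNames class is a hypothetical helper, not part of the Storage API.

```csharp
using System.Text.RegularExpressions;

// Hypothetical helper, not part of the Azure SDK.
public static class ContainerNames
{
    // One or more runs of lowercase letters/digits, separated by single hyphens.
    private static readonly Regex Pattern =
        new Regex(@"\A[a-z0-9]+(-[a-z0-9]+)*\z");

    public static bool IsValid(string name)
    {
        return name != null
            && name.Length >= 3
            && name.Length <= 63
            && Pattern.IsMatch(name);
    }
}
```

With this in place, ContainerNames.IsValid("Photos") returns false before any request is made, which is a lot easier to diagnose than a 400 Bad Request.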

Implementing Table Storage

Of the three storage types, Azure Table Storage requires the most setup. The first thing necessary is to create a model for the data that will be stored in the table.

public class MetaData : TableServiceEntity

{

public MetaData()

{

PartitionKey = "MetaData";

RowKey = "Not Set";

}

public string Description { get; set; }

public DateTime Date { get; set; }

public string ImageURL { get; set; }

}

For this demonstration, the model is very simple, but, most importantly, it derives from TableServiceEntity which tells Azure the class represents a table entity. Although Azure Table Storage is not a relational database, there must be some mechanism to uniquely identify the rows that are stored in a table. The PartitionKey and RowKey properties from the TableServiceEntity class are used for this purpose. The PartitionKey itself is used to partition the table data across multiple storage nodes in the virtual environment, and, although an application can use one partition for all table data, it may not be the best solution for scalability and performance.
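As an illustration of spreading data across partitions, one option is to derive the PartitionKey from a property of the entity rather than using a single constant. The sketch below groups entities by year and month; this is only an assumption about what might suit image metadata, not what the sample application does (it uses a single "MetaData" partition), and PartitionKeys is a hypothetical helper.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: one partition per calendar month.
public static class PartitionKeys
{
    public static string ForDate(DateTime date)
    {
        // Invariant culture keeps the key stable regardless of server locale.
        return date.ToString("yyyy-MM", CultureInfo.InvariantCulture);
    }
}
```

Entities that are usually queried together (here, images from the same month) end up in the same partition, while different months can be served by different storage nodes.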

Windows Azure Table Storage is based on WCF Data Services (formerly, ADO.NET Data Services), so there needs to be some context for the table. The TableServiceContext class represents this, so I'll derive a class from it.

public class MetaDataContext : TableServiceContext

{

public MetaDataContext(string baseAddress, StorageCredentials credentials)

: base(baseAddress, credentials)

{

}

}

The constructor is also a place where the table could be created. However, since the table was already created in the RoleEntryPoint OnStart method, that code is commented out in the sample and left only for informational purposes.

Adding to the table should be very familiar to anyone who has worked with LINQ to SQL or Entity Framework. You add the object to the data context, then save all the changes. Note the RowKey here. Since I'm using the date with the filename, I need to make a slight modification because a RowKey can't contain "/" characters. I also want to first check whether the record already exists and update it if it does.

public void Add(MetaData data)

{

data.RowKey = data.RowKey.Replace("/", "_");

MetaData original = (from e in MetaData

where e.RowKey == data.RowKey

&& e.PartitionKey == Table.PARTITION_KEY

select e).FirstOrDefault();

if(original != null)

{

Update(original, data);

}

else

{

AddObject(StorageBase.TABLE_NAME, data);

}

SaveChanges();

}

If you are familiar with LINQ, then you expect FirstOrDefault to return null if no record can be found. However, with WCF Data Services and Azure Table Storage, if your query uses both RowKey and PartitionKey, it will throw a DataServiceQueryException with a 404 Resource Not Found as the InnerException. To solve this, you must set IgnoreResourceNotFoundException to true. A good place to do this is in the constructor.

public MetaDataContext(string baseAddress, StorageCredentials credentials)

: base(baseAddress, credentials)

{

IgnoreResourceNotFoundException = true;

}
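The Add method shown earlier replaces only the "/" character in the RowKey. As far as I am aware, the Table service also rejects the backslash, "#", "?", and control characters in PartitionKey and RowKey values, so a slightly more general version might look like the following sketch. KeySanitizer is a hypothetical helper, and replacing with an underscore is simply the same convention the Add method already uses.

```csharp
using System.Text;

// Hypothetical helper, not part of the Azure SDK.
public static class KeySanitizer
{
    // Characters that are not allowed in PartitionKey or RowKey values.
    private const string Disallowed = "/\\#?";

    public static string Sanitize(string key)
    {
        var result = new StringBuilder(key.Length);
        foreach (char c in key)
        {
            bool bad = Disallowed.IndexOf(c) >= 0 || char.IsControl(c);
            result.Append(bad ? '_' : c);
        }
        return result.ToString();
    }
}
```

Keep in mind that two different inputs can map to the same sanitized key (for example, "a/b" and "a_b"), so this is only safe when such collisions cannot occur or do not matter.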

Implementing Queue Storage

Queue storage is probably the easiest part to implement. Unlike Table storage, there is no need to set up a model and context, and unlike Blob storage, there is no need to be concerned with blocks and pages. Queue storage is only meant to store small messages, 8 KB or less. Adding a message to a Queue follows the same pattern as the other storage mechanisms. First, get a reference to the Queue, creating it if necessary, then add the message.

public void Add(CloudQueueMessage msg)

{

Queue.AddMessage(msg);

}

Retrieving a message from the Queue is just as easy.

public CloudQueueMessage GetNextMessage()

{

return Queue.PeekMessage() != null ? Queue.GetMessage() : null;

}

One thing to understand about Azure Queues is that they are not like other types of queues you may be familiar with, such as MSMQ. When a message is retrieved from the Queue, as above, it is not removed from it. The message remains in the queue; however, Windows Azure sets a flag marking it as invisible to other requests. If the message has not been deleted from the Queue within a period of time (the default is 30 seconds), it will again be visible to GetMessage calls. This behavior also means Windows Azure Queues are not strictly FIFO, as you might expect from other implementations.

At some point, you may want to get all of the messages in a Queue. Although the GetMessages method will work for this, there are some issues to be aware of. GetMessages takes a parameter specifying the number of messages to return, and the service accepts at most 32 per call. Specifying a number greater than the number of messages in the Queue will produce an Exception, so you need to know how many messages are in the Queue before retrieving them. Because of the dynamic nature of Queues as explained above, the best Windows Azure can do is give an approximate count. The CloudQueue.ApproximateMessageCount property would seem to be perfect for this. Unfortunately, this property always seems to return null; as far as I can tell, it is only populated after the queue's attributes have been fetched. To get the count directly, use the Queue.RetrieveApproximateMessageCount() method.

public static List<CloudQueueMessage> GetAllMessages()

{

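// GetMessages accepts between 1 and 32 messages per call, so clamp the count.

int count = Math.Min(Queue.RetrieveApproximateMessageCount(), 32);

return count > 0 ? Queue.GetMessages(count).ToList() : new List<CloudQueueMessage>();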

}
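Because a single GetMessages call can return no more than 32 messages, draining a larger queue means asking in batches. The following sketch shows only the batch-size arithmetic for a given approximate count; QueueBatches is a hypothetical helper and makes no storage calls. In real code, the count can change between calls, so the plan is a starting point rather than a guarantee.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the Azure SDK.
public static class QueueBatches
{
    public const int MaxBatch = 32; // per-call limit of GetMessages

    // Splits an approximate message count into request sizes of at most 32.
    public static List<int> Plan(int approximateCount)
    {
        var sizes = new List<int>();
        for (int remaining = approximateCount; remaining > 0; remaining -= MaxBatch)
        {
            sizes.Add(Math.Min(remaining, MaxBatch));
        }
        return sizes;
    }
}
```

For an approximate count of 70, the plan is three requests of 32, 32, and 6 messages; for a count of zero, no request is made at all.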

Worker Role

Now we can finally get to the Worker Role. To demonstrate how a Worker Role can be incorporated into a project, I'll use it to add a watermark to the images that have been uploaded. The Queue that was previously created will be used to notify this Worker Role when it needs to process an image and which one to process.

Just as with the Web Role, the OnStart method is used to set up and configure the environment. Worker Roles additionally override the Run method, which simply creates a loop and continues indefinitely. It's somewhat odd not to have an exit condition; instead, when the role is stopped, the loop is forcibly terminated, which may cause issues for any code running in it.

public override void Run()

{

Trace.WriteLine("AzureStorageWorker entry point called", "Information");

while(true)

{

PhotoProcessing.Run();

Thread.Sleep(5000);

Trace.WriteLine("Working", "Information");

}

}
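One way to avoid having the loop cut off mid-iteration is to give it an exit condition that a stop request can signal. The sketch below shows the pattern with plain .NET threading primitives; StoppableWorker is a hypothetical stand-in for a role class and does not derive from RoleEntryPoint, so treat it as an outline of the idea rather than drop-in role code.

```csharp
using System;
using System.Threading;

// Hypothetical stand-in for a worker role, for illustration only.
public class StoppableWorker
{
    private readonly ManualResetEvent stopRequested = new ManualResetEvent(false);
    private readonly ManualResetEvent loopExited = new ManualResetEvent(false);
    private readonly Action work;
    private readonly TimeSpan delay;

    public StoppableWorker(Action work, TimeSpan delay)
    {
        this.work = work;
        this.delay = delay;
    }

    public void Run()
    {
        try
        {
            do
            {
                work();
            }
            // WaitOne doubles as the sleep: it returns true as soon as a stop
            // is requested, and false when the delay simply elapses.
            while (!stopRequested.WaitOne(delay));
        }
        finally
        {
            loopExited.Set();
        }
    }

    // Signals the loop to finish and waits for the current iteration to complete.
    public bool OnStop(TimeSpan timeout)
    {
        stopRequested.Set();
        return loopExited.WaitOne(timeout);
    }
}
```

In a real role, the body of Run and OnStop could follow the same shape, with PhotoProcessing.Run as the work item, so that an image being watermarked is finished before the role shuts down.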

You can view the sample code for this article to see the details of PhotoProcessing.Run. It simply gets the blob indicated in the QueueMessage, adds a watermark, and updates the Blob storage.

Putting It All Together

Now that everything has been implemented, it's just a matter of putting it all together. Using the Click event for the Upload button on the ASPX page, I'll get the file that is being uploaded and the other pertinent details. The first step is to upload the blob so we can get the URI that points to it and add it to Table storage along with the description and date. The final step is adding a message to the Queue to trigger the worker process.

protected void OnUpload(object sender, EventArgs e)

{

if(FileUpload.HasFile)

{

DateTime dt = DateTime.Parse(Date.Text);

string fileName = string.Format("{0}_{1}",

dt.ToString("yyyy/MM/dd"), FileUpload.FileName);

string blobURI = Storage.Blob.PutBlob

(FileUpload.PostedFile.InputStream, fileName);

Storage.Table.Add(new Storage.MetaData

{

Description = Description.Text,

Date = dt,

ImageURL = blobURI,

RowKey = fileName

}

);

Storage.Queue.Add(new CloudQueueMessage(blobURI + "$" + fileName));

Description.Text = "";

Date.Text = "";

}

}
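On the worker side, the message built in OnUpload has to be split back into the blob URI and the file name. The sample's PhotoProcessing code is not shown in this article, so the following is only a sketch of how that parsing might look; PhotoMessage is a hypothetical class, and it assumes that neither part contains a "$" of its own, which is a limitation of the simple separator used above.

```csharp
using System;

// Hypothetical helper: parses the "blobURI$fileName" message body.
public class PhotoMessage
{
    public Uri BlobUri { get; private set; }
    public string FileName { get; private set; }

    public static bool TryParse(string body, out PhotoMessage message)
    {
        message = null;
        if (string.IsNullOrEmpty(body))
        {
            return false;
        }

        // Split on the first '$'; both sides must be non-empty.
        int separator = body.IndexOf('$');
        if (separator <= 0 || separator == body.Length - 1)
        {
            return false;
        }

        Uri uri;
        if (!Uri.TryCreate(body.Substring(0, separator), UriKind.Absolute, out uri))
        {
            return false;
        }

        message = new PhotoMessage { BlobUri = uri, FileName = body.Substring(separator + 1) };
        return true;
    }
}
```

A worker could pass the queue message's string content to PhotoMessage.TryParse and skip (or log) any message that fails to parse rather than throwing inside the processing loop.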

Conclusion

Hopefully, this article has given you an overview of what Windows Azure Storage is and how it can be used. There is, of course, much more to cover on this topic, which may be addressed in follow-up articles. In the meantime, here are some resources that can provide you with additional information and insight about Windows Azure and Windows Azure Storage.

Points of Interest

Names for Tables, Blob containers, and Queues seem to have a mixture of support for uppercase names. It would seem the best approach is to always use lowercase.

There are some quirks and differences between each version of the SDK and Visual Studio tools. As an example, the first version of this article was written with version 1.1, where calling SetConfigurationSettingPublisher was not an issue. Another bug I encountered is a strange System.ServiceModel.CommunicationObjectFaultedException that occurs when the web.config is read-only, as would be the case when using source control. See this link for more information.

History

- Initial posting: 5/24/10

- Updated: 1/1/11

- Windows Azure SDK and Windows Azure tools for Visual Studio v1.3 and .NET Framework 4.0