Download source-code

Introduction

Scanning for tokens is the first step to take before analyzing the syntax of an input source file. The previous article, Lexical analyzer, presented an example of scanner. Now this article is to present an example of the parser (or syntax analyzer). The whole assignment with following rules and requirements includes:

1/4: Lexical rules:

- Case insensitive

- All English letters (upper and lower), digits, and extra characters as seen below, plus whitespace

- Identifiers

- begin with a letter, and continue with any number of letters

- assume no identifier is longer than 8 characters

- Keywords (reserved), include: start finish then if repeat var int float do read print void return dummy program

- Relational operators, include: < > =!= => =< ==

- Other operators, include: = : + - * / %

- Delimiters, include: . ( ) , { } ; [ ]

- Numbers:

- any sequence of decimal digits, no sign

- assume no number is longer than 8 digits

- Other assumptions:

- No whitespace needed to separate tokens except when this changes the tokens (as x y vs xy)

- Comments start with // and end with new line

- Make any reasonable assumptions and necessary validations. For example, if found invalid symbols, show error message with line number, and then abort the program

This step corresponds to 'lexical analyzing' (or scanning) for tokens (also called symbols) from an input source file. Each token is a meaningful character string, such as a number, an operator, or an identifier, etc.

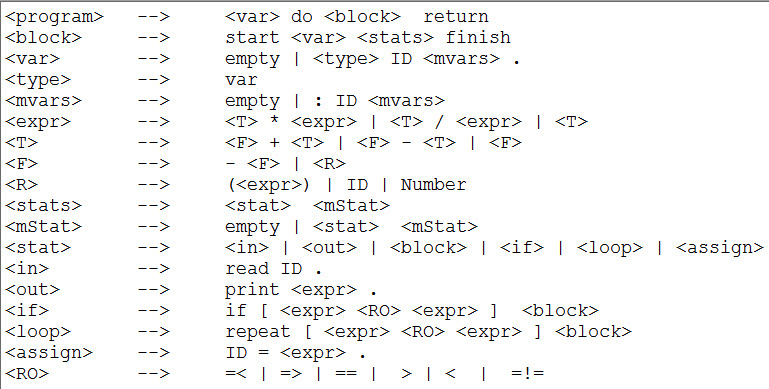

2/3: Syntax rules

This step corresponds to 'syntax analyzing' (or parsing). Top-down parsing is used here because it's easier to implement (but less powerful). We follow the left-most derivation, or LL(k) with k = 1 look-ahead to decide which derivation to follow (meaning by a single next character, it's possible to decide which rule to apply).

So, we need to verify if the above grammar rule is LL(1), or re-write the grammar as needed in an equivalent form.

3/4: Parse tree

After scanning and parsing OK, build the parse tree with each node has zero, one or more tokens. Each token has instance, token type, and line number.

4/4: Other requirements:

Invocation of the program is:

testParser [filename]

where input source file is filename.lan (extension .lan is implicit).

Program will read the same data from keyboard via redirecting from a file if filename is not specified.

For example, testParser < filename.lan

The plan

This is the Makefile that defines the dependency of program files

CC = gcc

PROG = testParser

$(PROG): main.o scanner.o parser.o

$(CC) -o $(PROG) main.o scanner.o parser.o

main.o : main.c token.h scanner.h parser.h

$(CC) -c main.c

scanner.o : scanner.c token.h scanner.h symdef.h

$(CC) -c scanner.c

parser.o : parser.c parser.h token.h

$(CC) -c parser.c

.PHONY: clean

clean:

/usr/bin/rm -rf core $(PROG) *.o

In the previous article, tokenizing was OK, but it didn't utilize the Token and TokenType data structure to serve the purpose of parsing and building the parse tree. So, the scanner now will be re-written. But first, let's look at the data structures defined in token.h:

#define MAX 9 // max length of each word string, not including '\0'

extern int lineNum;

typedef enum {

IDtk,

STARTtk, FINISHtk, THENtk, IFtk, REPEATtk, VARtk, INTtk, FLOATtk,

DOtk, READtk, PRINTtk, VOIDtk, RETURNtk, DUMMYtk, PROGRAMtk,

NUMBERtk,

EQUALtk, GREATERtk, LESStk, DIFFtk, GREATEREQtk, LESSEQtk,

ASSIGNtk, COLONtk, ADDtk, SUBTRACTtk, MULtk, DIVtk, REMAINDERtk,

DOTtk, LEFTPAtk, RIGHTPAtk, COMMAtk, LEFTBRACEtk, RIGHTBRACEtk,

SEMICOLONtk, LEFTBRACKETtk, RIGHTBRACKETtk,

NAtk, EOFtk

} TokenType;

struct tokenTag {

char str[MAX];

TokenType tokenType;

int lineNum;

struct tokenTag *next; };

typedef struct tokenTag Token;

extern int numToken;

extern Token *tokens;

As above, each Token has these elements: (1) token instance (str), (2) TokenType, (3) line number found from the input source file, and (4) a pointer used for parse tree later.

main.c

main() function performs necessary validations, such as command-line arguments, file existence, max length of words, invalid characters, etc. which are not changed from the previous article. After all of those things are OK, it calls the scanner to do the work:

printf("%10s \t Line number \t %s\n\n", "Token instance", "Token type");

numToken = 0; tokens = (Token *) malloc(numToken * sizeof(Token)); do {

numToken++;

tokens = (Token *)realloc(tokens, numToken * sizeof(Token));

tokens[numToken - 1] = scanner(filePtr);

printToken(tokens[numToken - 1]);

} while (tokens[numToken - 1].tokenType != EOFtk);

The array tokens (with size of numtoken) is (used just in case) to store all tokens found while the scanner traverses the input source file. Note EOFtk to terminate the scanner, not EOF.

After that, main() calls the parser, closes the file, and done:

parser(filePtr);

fclose(filePtr);

return 0;

The scanner (scanner.c)

symdef.h

First, for convenience, symdef.h contains the data definitions (based on the lexical rules):

char *keywords[15] = {"start", "finish", "then", "if", "repeat", "var",

"int", "float", "do", "read", "print", "void", "return", "dummy", "program"};

char *relationalOperators[] = {"==", "<", ">", "=!=", "=>", "=<"};

char otherOperators[6] = {':', '+', '-', '*', '/', '%'};

char delimiters[9] = {'.', '(', ')', ',', '{', '}', ';', '[', ']'};

char word[MAX];

int wi = 0;

char numStr[MAX];

int ni = 0;

Token scanner(FILE *) function

int numToken = 0; int lineNum = 1; Token *tokens = NULL;

Token scanner(FILE *filePtr) {

Token token;

char ch;

while ((ch = fgetc(filePtr)) != EOF) {

if (ch == '\n')

lineNum++;

if (ch == '/') {

if (fgetc(filePtr) == '/') {

while ((ch = fgetc(filePtr)) != '\n') {}

lineNum++;

} else

fseek(filePtr, -1, SEEK_CUR);

}

if (isalpha(ch)) {

word[wi++] = ch;

while (isalpha(ch = fgetc(filePtr))) {

word[wi++] = ch;

}

word[wi] = '\0';

wi = 0;

strcpy(token.str, word);

if (isKeyword(word)) {

token.tokenType = getTokenTypeOfKeyword(word);

} else {

token.tokenType = IDtk;

}

token.lineNum = lineNum;

fseek(filePtr, -1, SEEK_CUR);

return token;

}

else if (isdigit(ch)) {

numStr[ni++] = ch;

while (isdigit(ch = fgetc(filePtr))) {

numStr[ni++] = ch;

}

numStr[ni] = '\0';

ni = 0;

strcpy(token.str, numStr);

token.tokenType = NUMBERtk;

token.lineNum = lineNum;

fseek(filePtr, -1, SEEK_CUR);

return token;

}

else if (ispunct(ch)) {

if (isDelimiter(ch)) {

token.tokenType = getTokenTypeOfDelimiter(ch);

token.lineNum = lineNum;

char str[2];

str[0] = ch;

str[1] = '\0';

strcpy(token.str, str);

return token;

}

else if (isOtherOperators(ch)) {

token.tokenType = getTokenTypeOfOtherOperator(ch);

token.lineNum = lineNum;

char str[2];

str[0] = ch;

str[1] = '\0';

strcpy(token.str, str);

return token;

}

else if (isStartRelationalOperator(ch)) {

if (ch == '<' || ch == '>') {

token.lineNum = lineNum;

if (ch == '<') {

token.tokenType = LESStk;

strcpy(token.str, "<");

} else {

token.tokenType = GREATERtk;

strcpy(token.str, ">");

}

return token;

}

else if (ch == '=') {

if ((ch = fgetc(filePtr)) == '=' || ch == '>' || ch == '<') {

token.lineNum = lineNum;

if (ch == '=') {

token.tokenType = EQUALtk;

strcpy(token.str, "==");

} else if (ch == '>') {

token.tokenType = GREATEREQtk;

strcpy(token.str, "=>");

} else {

token.tokenType = LESSEQtk;

strcpy(token.str, "=<");

}

return token;

} else if (ch == '!') {

if ((ch = fgetc(filePtr)) == '=') {

token.lineNum = lineNum;

token.tokenType = DIFFtk;

strcpy(token.str, "=!=");

return token;

} else

fseek(filePtr, -1, SEEK_CUR);

} else

fseek(filePtr, -1, SEEK_CUR);

}

}

}

}

token.tokenType = EOFtk;

return token;

}

Note:

- The idea is to 'look one character ahead' to decide what next token is (or might be) going to be.

- fseek(filePtr, -1, SEEK_CUR) is to go back one character when needed.

- Besides the built-in functions provided (such as isalpha(ch), isdigit(ch), and ispunct(ch)), these are some supporting functions to check a character:

int isKeyword(char *str) {

int i;

int result = 0; for (i = 0; i < 15; i++) {

if (!strcasecmp(keywords[i], str))

result = 1;

}

return result;

}

int isDelimiter(char c) {

int i;

int result = 0; for (i = 0; i < 9; i++) {

if (delimiters[i] == c)

result = 1;

}

return result;

}

int isOtherOperators(char c) {

int i;

int result = 0; for (i = 0; i < 6; i++) {

if (otherOperators[i] == c)

result = 1;

}

return result;

}

int isStartRelationalOperator(char c) {

int i;

int result = 0; if (c == '=' || c == '<' || c == '>') {

result = 1;

}

return result;

}

Note: the number 6, 9, 15 (number of pre-specified other operators, delimiters, and keywords) were hard-coded which shouldn't be. They should be defined the way similiar to MAX in token.h instead.

Last three supporting functions for Scanner(FILE *):

TokenType getTokenTypeOfKeyword(char *word) {

if (strcasecmp(word, "start") == 0) return STARTtk;

if (strcasecmp(word, "finish") == 0) return FINISHtk;

}

TokenType getTokenTypeOfDelimiter(char ch) {

if (ch == '.') return DOTtk;

if (ch == '(') return LEFTPAtk;

}

TokenType getTokenTypeOfOtherOperator(char ch) {

if (ch == '=') return ASSIGNtk;

if (ch == ':') return COLONtk;

}

Functions for printing tokens are also defined in scanner.c.

The parser and parse tree

Follow the production rules (syntax grammar) above, parser.h declares two data structures:

void parser(FILE *);

typedef enum {

programNode, blockNode, varNode, mvarsNode, exprNode, xNode,

tNode, yNode, fNode, rNode, statsNode, mStatNode, statNode,

inNode, outNode, ifNode, loopNode, assignNode, roNode

} NodeType;

struct nodeTag {

NodeType nodeType;

Token *tokenPtr; struct nodeTag *child1; struct nodeTag *child2;

struct nodeTag *child3;

struct nodeTag *child4; };

typedef struct nodeTag Node;

Note: have a look at syntax rules and data types here in parser.h to make it easier reading the rest of this article.

parser.c

parser.c starts with:

Token tk = {"N/A", NAtk, 0};

to initialize the global token tk variable used throughout the parsing, and begin the function called by main():

void parser(FILE *filePtr) {

lineNum = 1; fP = filePtr;

rewind(fP);

tk = scanner(fP);

Node *root = NULL;

root = program();

if (tk.tokenType == EOFtk)

printf("Parse OK! \n");

else {

exit(1);

}

printf("\n*-----Parse Tree-----*\n");

printParseTree(root, 0);

return;

}

As we see, program() function corresponds to the first production rule (syntax grammar) above, and is defined as follow:

Node * program() {

Node *node = getNode(programNode);

node->child1 = var();

if (tk.tokenType == DOtk) {

tk = scanner(fP);

} else {

printf("ERROR: expect DOtk or VARtk, but received %s on line #%d \n", tk.str, tk.lineNum);

exit(1);

}

node->child2 = block();

if (tk.tokenType == RETURNtk) {

tk = scanner(fP);

return node;

} else {

printf("ERROR: expect RETURNtk, bu received %s on line #%d \n", tk.str, tk.lineNum);

exit(1);

}

}

Note: Node *getNode (NodeType) function returns a new node marked by NodeType argument passed to it:

Node *getNode(NodeType nodeType) {

Node *node;

node = (Node *) malloc(sizeof(Node));

node->nodeType = nodeType;

node->tokenPtr = NULL;

node->child1 = node->child2 = node->child3 = node->child4 = NULL;

return node;

}

Again, based on syntax rule, var() corresponds to the production rule with < var > and is defined as follow:

Node * var() {

Node *node = getNode(varNode);

if (tk.tokenType == VARtk) {

tk = scanner(fP);

if (tk.tokenType == IDtk) {

insertToken(node, tk);

tk = scanner(fP);

} else {

printf("ERROR: expect IDtk, but received %s on line #%d \n", tk.str, tk.lineNum);

exit(1);

}

node->child1 = mvars();

if (tk.tokenType == DOTtk) {

tk = scanner(fP);

return node;

} else {

printf("ERROR: expect DOTtk, but received %s on line #%d \n", tk.str, tk.lineNum);

exit(1);

}

}

else {

return node; }

}

Note:

- the production rule < type > is removed and replaced directly into the < var > production rule, like this:

< var > --> empty | var ID < mvars > .

- function insertToken(Node *, Token) will be showed shortly later.

- continue following the production rules defined by syntax grammar, var() will calls mvars(), program() calls block(), mvars() calls itself recursively, block() calls var() and stats(),... and so on for in(), f(), t(), assign(), loop(), etc. Finally, the ro() function:

Node * ro() {

Node *node = getNode(roNode);

if (tk.tokenType == LESSEQtk) {

insertToken(node, tk);

tk = scanner(fP);

return node;

} else if (tk.tokenType == GREATEREQtk) {

insertToken(node, tk);

tk = scanner(fP);

return node;

} else if (tk.tokenType == EQUALtk) {

insertToken(node, tk);

tk = scanner(fP);

return node;

} else if (tk.tokenType == GREATERtk) {

insertToken(node, tk);

tk = scanner(fP);

return node;

} else if (tk.tokenType == LESStk) {

insertToken(node, tk);

tk = scanner(fP);

return node;

} else if (tk.tokenType == DIFFtk) {

insertToken(node, tk);

tk = scanner(fP);

return node;

} else {

printf("ERROR: expect relational operator, but received %s on line #%d \n",

tk.str, tk.lineNum);

exit(1);

}

}

insertToken(Node *, Token) function is to insert a new Token at the end of linked-list of tokens of a tree node:

Token *getTokenPtr(Token tk) {

Token *tokenPtr = (Token *) malloc(sizeof(Token));

strcpy(tokenPtr->str, tk.str);

tokenPtr->lineNum = tk.lineNum;

tokenPtr->tokenType = tk.tokenType;

return tokenPtr;

}

void insertToken(Node *node, Token tk) {

Token *new = getTokenPtr(tk);

if (node->tokenPtr == NULL) {

node->tokenPtr = new;

} else {

Token *tmp = node->tokenPtr;

while (tmp->next != NULL) {

tmp = tmp->next;

}

tmp->next = new;

}

}

At this time, we are ready to print the parse tree.

char *nodeTypeStrArr[] = {

"<program>", "<block>", ... similar ... , "<assign>", "<ro>"

};

char *tokenStrArr[] = {

"IDtk",

"STARTtk", "FINISHtk", "THENtk", "IFtk",

... similar

"NAtk", "EOFtk"

};

void printParseTree(Node *root, int level) {

if (root == NULL) return;

printf("%*s" "%d %s ", level * 4, "", level, nodeTypeStrArr[root->nodeType]);

Token *tmp = root->tokenPtr;

int isTokenFound = 0; if (tmp != NULL) {

isTokenFound = 1;

printf("{Token(s) found: ");

}

while (tmp != NULL) {

int isLastToken = 0; printf("%s (%s, #%d)", tmp->str, tokenStrArr[tmp->tokenType], tmp->lineNum);

tmp = tmp->next;

if (tmp == NULL)

isLastToken = 1;

if (! isLastToken) {

printf(", and ");

}

}

if (isTokenFound) {

printf("}");

}

printf("\n");

printParseTree(root->child1, level + 1);

printParseTree(root->child2, level + 1);

printParseTree(root->child3, level + 1);

printParseTree(root->child4, level + 1);

}

Sample outputs

Scanner output:

Parsing a 'bad' (invalid syntax) file:

Parsing a valid file: