Advances in technology come with increased complexity. That is just the nature of how technology evolves. However, things get simpler for users as they are getting more demanding about alignment with their intentions. In “The Invisible Computer: Why Good Products Can Fail, the Personal Computer Is So Complex, and Information Appliances Are the Solution” Donald Normam says:

Most technology goes through cycles of development and change in both internal and external complexity. Often, the very first device is simple, but crude. As the device undergoes the early stages of development, its power and efficiency improve, but so does its complexity. As the technology matures, however, simpler, more effective ways of doing things are developed, and the device becomes easier to use, although usually by becoming more complex inside.

The memory management of the .NET framework is a good example of how complexity can be hidden in a layer of simplicity. However, when we assume that we don’t need to worry about how memory is managed and how garbage collection works, focusing on developing skills in syntax and classes, we risk taking bad design decisions that lead to performance and memory issues. Furthermore, the knowledge about how memory is managed helps us understand how the variables are behaving in our application. In this article, I cover the basics about this topic.

Stack

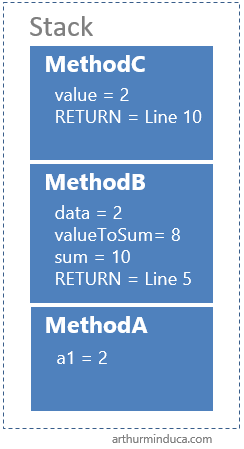

The stack stores the state of a method. Every time a method is called, the .NET creates a container (i.e. stack frame) that stores parameters, local variables and the address of the return point. When the method completes, the frame is removed and the thread continues in the return point defined in the stack frame. Also, each thread has its own stack.

void MethodA()

{

int a1 = 2;

MethodB(a1, 8);

}

void MethodB(int data, int valueToSum)

{

int sum = data + valueToSum;

MethodC(sum);

}

void MethodC(int value)

{

Console.WriteLine(value);

}

Obviously, the representation of the stack is simplified to ease understanding and the return point is not the code line number.

In the above example, if we consider that the thread starts executing the first line of MethodA and there is a breakpoint on the last line of MethodC, when the thread reaches the breakpoint, the stack will look like the image above.

As we can see, it’s like a pile of boxes: in order to access the contents of a box that is under another, you must first remove all the boxes that are above it. And since all the content in a box cannot be accessed from the outside, it can never have a global scope. In addition, memory in the stack is self-maintained. When a method exits, the whole stack frame is thrown out and memory is freed automatically.

Furthermore, as we can see, stacks do more than store variables: since it stores the return point, it keeps track of the execution flow.

Variables Stored in the Stack are Local by Nature

We cannot access variables from anywhere other than the last container (i.e., the top of the stack). So, when a new stack frame is created, variables declared in other frames cannot be accessed anymore. It means that types stored onto the stack are local by nature. When you pass a parameter, it is copied to the next stack frame in order to be accessed.

Content copy isn’t usually a big deal, unless you are copying large value types. If you pass large structs as parameter between method calls inside a big loop or recursive operation, you make successive copies of the struct, which leads to high copying overhead and the risk of running into performance issues.

Summary

- A stack frame grows as methods declare variables, and variables exist while the method that owns the frame is running.

- Everything stored in a stack has local scope.

- We do not need to worry about allocating and deallocating memory in the stack, because memory is managed automatically. When a method exits, the whole stack frame is disposed.

- Reading, allocating and deallocating memory is faster in a stack when compared to a heap. The operations are much simpler and efficiently organized by the CPU.

- Space is well managed by the CPU and memory is not fragmented.

- A stack is bound to a thread, and each thread handles one stack.

- There is a limit for the stack size, which is OS-dependent and defined when a thread is created.

- Variables cannot be resized.

Heap

Everything that is stored in the Heap can be accessed at any time. However, unlike the stack, heap memory is managed by a garbage collector (GC), resource responsible for optimizing available memory space and deallocating memory that is no longer referenced.

public class SomeClass

{

public SomeClass(int v)

{

this.value = v;

}

public int value { get; set; }

}

SomeClass a = new SomeClass(3);

SomeClass b = new SomeClass(1);

In above example, ‘a’ and ‘b’ are two instances of SomeClass. Because SomeClass is a reference type, it is stored in the Heap. On the other hand, ‘a’ and ‘b’ are declared on the stack as references to this heap memory. Special attention to the “value” property of SomeClass. Despite being an int32, it is also stored in the heap because it is part of the reference type content.

When SomeMethod finishes, the stack frame is deleted and there will be no pointer referencing the objects in the heap. The heap then will be storing orphaned elements, which will be disposed during the next execution of the GC.

Summary

- No limit on memory size. Although the initial size is predefined on application startup, more space can be requested from the OS.

- Variables stored in the heap can be accessed globally.

- Memory is managed by the OS or the memory management library.

- As blocks are allocated and deallocated, memory might become fragmented.

- Heap is allocated for the application by the runtime and disposed when the application process exits.

- Allocation and deallocation in heap is more expensive when compared to stack.

Where Things Are Stored

Short and brief: Reference type is always stored in the heap. Value types are stored where they are declared. If we declare a value type inside a method, it is placed on the stack. If a value type is boxed or if it is a member of a reference type (as we can see in the example of SomeClass with a int property named value), it will be stored on the heap.

Value Types

struct | int16 | int32 | int64 |

uint16 | uint32 | uint64 | byte |

sbyte | float | double | decimal |

enum | bool | char | |

Reference Types

- class

- instances of object

- interface

- delegate

- string

Pointers

A pointer refers to the address of an item in the memory. “References types” are types that are accessed through a Pointer. They are managed by the CLR (Common Language Runtime) and take up space in memory as any other type.

Performance Issues in Boxing Operations

Boxing is the conversion of a value type to an object or interface. It is a computationally expensive process that should be avoided whenever possible.

int v = 10;

object obj = v;

int v2 = (int)obj

When a value type is boxed, a new object is allocated on the heap. This can be 20 times slower than a reference assignment. When unboxing, this operation can be 4 times slower than an assignment (msdn reference). Thus, iterating this operation in a loop can cause significant impact on performance.

Summary

- Accessing boxed values is slower. First, the pointer is accessed in the stack, then its content in the heap.

- Boxed values take up more memory, because since it is stored in the heap, a pointer is needed in the stack (which takes 32B or 64B).

- Unboxed variables are disposed with the stack frame. Boxed variables will be kept in memory until the GC is triggered.

- Boxing operation requires significant CPU time, because space must be allocated in the heap and the value type must be copied to it. Unboxing requires some CPU time to copy the content of the heap back to the stack.

When (and Why) Recursive Operations Can Be A Problem

When a method is called, a new stack frame is added to the call stack. Thus, recursive operations add new stack frames for each recursive call. The more recursive operations are expected in a call, the more expensive the overhead is. Moreover, as explained before, each method call involves copying the parameters to the stack frame of the next called method. This might be a problem when the method parameter is a large value type, such as some structs, and there are successive calls to this method.

And What About the Memory Consumption?

Earlier in this article, I said that we do not need to worry about allocating and deallocating memory in the stack, because memory is managed automatically. When a method exits, the whole stack frame is disposed. However, the stack grows and grows while operations are chained in recursive calls. Remember that each method retains its own stack frame with local variables, copies of parameters that were passed, and everything else that remains until the recursive operation is over and the method finishes.

If You Go With Recursion, Use Tail Call Whenever Possible

A Tail call occurs when the last statement of a method is a method call.

public int TailRecursiveFunction(...)

{

return TailRecursiveFunction(...);

}

Here is the magic: when the thread gets to the point of the tail call, there will be no need to add a new frame to the stack, copy values, etc. In this particular scenario, since most of the state of the current frame will no longer be necessary, many compilers optimize performance by reusing the current stack frame for the next method execution. Methods call in tail positions can be parsed to efficient “goto” statements, which is far more efficient than the traditional recursive operation.

Related Readings