Introduction

In this article, I will benchmark Neuroph, JOONE and Encog, the three major open source neural network frameworks for Java. Encog also has a .NET version. I will give all three frameworks the same task and measure how long each takes to complete it. For this article, I am comparing the following versions. As versions evolve, I will try to keep this article up to date.

I began creating this benchmark for a project I am working on at the university. My professor analyzes geothermal data that he receives daily. We have had a neural network for some time that we retrain weekly, and it is currently based on JOONE. As my last article shows, the source code needed to do nearly anything in JOONE is much more complex than it needs to be. JOONE is also no longer actively supported, and it does not make proper use of modern multicore machines. We wanted to see if we could get our one-day training cycle down to a more reasonable level.

So I created this benchmark to help us choose a replacement. We found that both Neuroph and Encog offered upgrade paths from JOONE. First, I will review the results. Then I will show you the code that produced them.

Neural networks train in iterations. Each iteration moves the neural network closer to the desired output. When benchmarking neural networks, there are really two things to consider. First, how effective is each iteration? Second, how long does each iteration take to process?

In my last article, I evaluated how effective Neuroph, JOONE and Encog were with their training iterations. JOONE was the worst, taking over 5,000 iterations to learn the simple XOR operation. Neuroph fared much better, taking only 613 iterations. However, the real winner was Encog, which uses much more advanced training methods than the other two. Encog took only 18 iterations to complete.

As a result, you must consider both factors. For example, when I was originally comparing just JOONE and Neuroph, I found that Neuroph is much slower per iteration than JOONE, but Neuroph's more advanced training more than makes up for the slowness, since Neuroph needs only a fraction of the iterations that JOONE does. However, Encog decimates both JOONE and Neuroph. Encog can make effective use of multiple cores and even your graphics card (GPU) to speed up processing. Yet even with both of these features disabled, Encog completes an iteration in a fraction of the time JOONE or Neuroph needs. Further, Encog can train to a lower error rate in fewer iterations. As a result, it is a double win for Encog.
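Put as a rough formula, total training time is the number of iterations needed multiplied by the time each iteration takes. As a worked example, dividing the single-threaded 50-iteration timings from the benchmark results later in this article by 50 gives the per-iteration cost for that particular task:

```latex
T_{\text{total}} \approx N_{\text{iter}} \times t_{\text{iter}}

t_{\text{iter}}^{\text{Encog}} = \frac{3.1280\,\text{s}}{50} = 0.06256\,\text{s}
\qquad
t_{\text{iter}}^{\text{Neuroph}} = \frac{39.7450\,\text{s}}{50} = 0.7949\,\text{s}
```

These per-iteration figures depend on the network and training set size, so they apply only to the benchmark task; the XOR iteration counts from my last article come from a different task and should not be multiplied by them.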

We implemented Encog as a replacement for our JOONE solution. The Encog framework is very easy to use: I was able to rip out the JOONE code and replace it with Encog in about a day. Thanks to Encog's speed and highly efficient training, our one-day process now takes 20 minutes. As a result, we are upgrading our project to Encog.

I can’t release my professor’s code to demonstrate this, but I am releasing our benchmarking code, in the hopes that it might be useful to others. This article shows the results we got.

Benchmarking Task

To benchmark the neural networks, I created the following sample task:

| Setting | Value |
|---|---|
| Input Neurons | 10 |
| Output Neurons | 10 |
| Hidden Layer 1 Neurons | 20 |
| Transfer Function | TANH |
| Training Set Size | 100,000 elements |
| Training Iterations | 50 |
| Training Method | Backpropagation with momentum |

It is important to use backpropagation with momentum to keep the comparison fair. Encog supports much more advanced methods than this, as covered in my previous article. However, we needed a training method that all three frameworks support, so that they are all doing essentially the same work. This way we can compare how efficient each framework is.
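For reference, the textbook form of the backpropagation-with-momentum weight update is shown below, where η is the learning rate, α is the momentum coefficient, and E is the training error. This is the generic rule; the exact parameterization (for example, batch versus per-element updates) may differ between the three frameworks.

```latex
\Delta w_{ij}(t) = -\eta \, \frac{\partial E}{\partial w_{ij}} + \alpha \, \Delta w_{ij}(t-1)
\qquad
w_{ij}(t+1) = w_{ij}(t) + \Delta w_{ij}(t)
```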

Benchmarking Computer

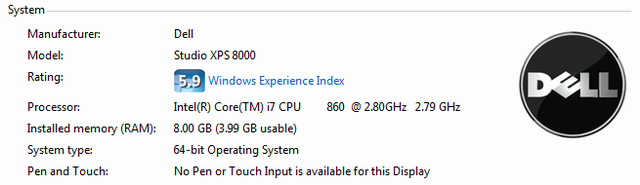

The computer used is a Dell Studio XPS 8000 with an Intel Core i7 860 at 2.8 GHz, a quad-core processor with hyper-threading. The screenshot above shows the system information.

This system was used for all benchmarking in this article.

Benchmark Results

The results of this benchmark are shown here. All results are in seconds. Obviously, the lower the value, the better.

| Test | Time (seconds) |
|---|---:|
| Encog, multithreaded | 0.9520 |
| Encog, single-threaded | 3.1280 |
| JOONE, multithreaded | 26.0430 |
| JOONE, single-threaded | 17.8510 |
| Neuroph, single-threaded | 39.7450 |

A chart that demonstrates this is shown here:

One very interesting thing to note here is single-threaded versus multithreaded performance. Multithreading allows the benchmark to take advantage of a multicore CPU. Only Encog and JOONE support multithreaded training, and running JOONE in multithreaded mode actually slows it down on a multicore machine (26.0430 seconds versus 17.8510 seconds single-threaded). This is a strong case for testing your code on multicore architectures. Just because you use threads does NOT mean your application will scale.

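Encog pretty much decimated the competition here. Multithreaded, Encog is about 40 times as fast as Neuroph and about 19 times as fast as JOONE's best (single-threaded) time. Even when Encog is forced to use a single thread, it beats the others by a wide margin: roughly 13 times as fast as Neuroph and 6 times as fast as JOONE.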
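Speedup claims like these can be checked directly from the results table: a speedup is just the slower elapsed time divided by the faster one. The small helper below does that arithmetic; the class and method names are illustrative, and the constants are copied from the table.

```java
public class SpeedupRatios {
    // Elapsed times in seconds for 50 iterations, from the benchmark results table.
    static final double ENCOG_MT = 0.9520;
    static final double ENCOG_ST = 3.1280;
    static final double JOONE_MT = 26.0430;
    static final double JOONE_ST = 17.8510;
    static final double NEUROPH_ST = 39.7450;

    // How many times faster the "fast" run is than the "slow" run.
    static double speedup(double slowSeconds, double fastSeconds) {
        return slowSeconds / fastSeconds;
    }

    static void report(String label, double ratio) {
        System.out.printf("%-48s %5.1fx%n", label, ratio);
    }

    public static void main(String[] args) {
        report("Encog (MT) vs Neuroph", speedup(NEUROPH_ST, ENCOG_MT));
        report("Encog (MT) vs JOONE (ST, its best time)", speedup(JOONE_ST, ENCOG_MT));
        report("Encog (ST) vs Neuroph", speedup(NEUROPH_ST, ENCOG_ST));
        report("Encog (ST) vs JOONE (ST)", speedup(JOONE_ST, ENCOG_ST));
        report("Encog MT vs Encog ST", speedup(ENCOG_ST, ENCOG_MT));
        // A ratio above 1 here means multithreading made JOONE slower.
        report("JOONE slowdown from multithreading", speedup(JOONE_MT, JOONE_ST));
    }
}
```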

Feature Grid

I also built a grid comparing the features of the three frameworks. Encog appears to be ahead here too, especially in training options.

Neural Network Types

JOONE Neuroph Encog

ADALINE * *

ART1 *

BAM * *

Boltzmann Machine *

CPN * *

Elman SRN * *

Perceptron * * *

Hopfield * *

Jordan SRN * *

NEAT *1 *2

RBF * *

Recurrent SOM *

Kohonen/SOM * *

NeuroFuzzy Perceptron *

Hebbian Network * *

*1 Experimental in preview release (release ???).

*2 Supported in Encog 2.4 (currently in beta, with release planned for late June 2010).

Activation/Transfer Functions

JOONE Neuroph Encog

Sigmoid * * *

HTAN * * *

Linear * * *

SoftMax * *

Step * * *

Bipolar/Sgn *

Gaussian * *

Log * * *

Sin * *

Logarithmic * *

Ramp * *

Trapezoid *

Randomization Techniques

JOONE Neuroph Encog

Range * * *

Gaussian *

Fan-In * *

Nguyen-Widrow *

Training Techniques

JOONE Neuroph Encog

Annealing *

Auto Backpropagation * *

Backpropagation * * *

Binary Delta Rule *

Resilient Prop * *

Hebbian Learning * *

Scaled Conjugate Gradient *

Manhattan Update *

Instar/Outstar * *

Kohonen * * *

Hopfield * *

Levenberg Marquardt (LMA) *

Genetic *

Instar * *

Outstar * *

ADALINE *

Other Features

JOONE Neuroph Encog

Multithreaded Training * *

GPU/OpenCL *

Platforms Java Java Java/.NET/Silverlight

Year Started 2001 2008 2008

Implementing the Benchmark

Now that we have reviewed the results of the benchmark, I will show you how it was actually constructed.

The Benchmarkable Interface

I began by creating a simple interface, named Benchmarkable. This interface defines a benchmark that I will use with Encog, Neuroph and JOONE. The interface is shown here:

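```java
public interface Benchmarkable {

    // Build the framework's network and training objects (not timed).
    void prepareBenchmark(double[][] input, double[][] ideal);

    // Train for the given number of iterations and return the final error rate (timed).
    double benchmark(int iterations);
}
```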

As you can see, there are two methods. The first, prepareBenchmark, is called with the input and ideal arrays; I am only benchmarking the frameworks using supervised training. This method lets the framework create the objects it needs to train the neural network. We do not want to count setup time: neural networks can train for days, so I really do not care if one framework takes a few seconds longer to set up. The actual benchmark is done inside the benchmark method, which does not return until the framework has finished training. We measure how long this method takes to execute. It also returns the final error rate.
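To make the contract concrete, here is a hypothetical, framework-free implementation of Benchmarkable: one tanh hidden layer, tanh outputs, and batch backpropagation with momentum (one weight update per pass over the training set). It is not code from Encog, Neuroph or JOONE, and the class name, fixed random seed, batch update scheme and returned mean squared error are all assumptions made for this sketch. The interface is repeated so the file compiles on its own.

```java
import java.util.Random;

// The article's interface, repeated here so this file compiles on its own.
interface Benchmarkable {
    void prepareBenchmark(double[][] input, double[][] ideal);
    double benchmark(int iterations);
}

// Hypothetical baseline (not Encog, Neuroph or JOONE code):
// one tanh hidden layer, tanh outputs, batch backpropagation with momentum.
public class PlainBackpropBenchmark implements Benchmarkable {
    private final int inputCount, hiddenCount, outputCount;
    private final double learningRate, momentum;
    private final Random random = new Random(42); // fixed seed for repeatable runs

    // Each weight row ends with a bias weight; dw1/dw2 hold the previous changes.
    private double[][] w1, w2, dw1, dw2;
    private double[][] input, ideal;

    public PlainBackpropBenchmark(int inputCount, int hiddenCount, int outputCount,
                                  double learningRate, double momentum) {
        this.inputCount = inputCount;
        this.hiddenCount = hiddenCount;
        this.outputCount = outputCount;
        this.learningRate = learningRate;
        this.momentum = momentum;
    }

    @Override
    public void prepareBenchmark(double[][] input, double[][] ideal) {
        this.input = input;
        this.ideal = ideal;
        w1 = randomMatrix(hiddenCount, inputCount + 1);
        w2 = randomMatrix(outputCount, hiddenCount + 1);
        dw1 = new double[hiddenCount][inputCount + 1];
        dw2 = new double[outputCount][hiddenCount + 1];
    }

    // Returns the mean squared error measured during the last pass
    // (before that pass's weight update), or NaN if iterations is 0.
    @Override
    public double benchmark(int iterations) {
        double mse = Double.NaN;
        double[] hidden = new double[hiddenCount];
        double[] outDelta = new double[outputCount];
        for (int it = 0; it < iterations; it++) {
            double[][] g1 = new double[hiddenCount][inputCount + 1];
            double[][] g2 = new double[outputCount][hiddenCount + 1];
            double errorSum = 0;
            for (int s = 0; s < input.length; s++) {
                double[] x = input[s];
                for (int h = 0; h < hiddenCount; h++) {           // forward: hidden layer
                    double sum = w1[h][inputCount];
                    for (int i = 0; i < inputCount; i++) sum += w1[h][i] * x[i];
                    hidden[h] = Math.tanh(sum);
                }
                for (int o = 0; o < outputCount; o++) {           // forward: output layer
                    double sum = w2[o][hiddenCount];
                    for (int h = 0; h < hiddenCount; h++) sum += w2[o][h] * hidden[h];
                    double y = Math.tanh(sum);
                    double err = ideal[s][o] - y;
                    errorSum += err * err;
                    outDelta[o] = err * (1 - y * y);              // tanh'(net) = 1 - y^2
                    for (int h = 0; h < hiddenCount; h++) g2[o][h] += outDelta[o] * hidden[h];
                    g2[o][hiddenCount] += outDelta[o];
                }
                for (int h = 0; h < hiddenCount; h++) {           // backward: hidden layer
                    double back = 0;
                    for (int o = 0; o < outputCount; o++) back += outDelta[o] * w2[o][h];
                    double delta = back * (1 - hidden[h] * hidden[h]);
                    for (int i = 0; i < inputCount; i++) g1[h][i] += delta * x[i];
                    g1[h][inputCount] += delta;
                }
            }
            applyMomentumUpdate(w1, dw1, g1, input.length);
            applyMomentumUpdate(w2, dw2, g2, input.length);
            mse = errorSum / ((double) input.length * outputCount);
        }
        return mse;
    }

    // change = learningRate * (mean negative gradient) + momentum * previous change
    private void applyMomentumUpdate(double[][] w, double[][] prev, double[][] g, int n) {
        for (int r = 0; r < w.length; r++) {
            for (int c = 0; c < w[r].length; c++) {
                double change = learningRate * g[r][c] / n + momentum * prev[r][c];
                w[r][c] += change;
                prev[r][c] = change;
            }
        }
    }

    private double[][] randomMatrix(int rows, int cols) {
        double[][] m = new double[rows][cols];
        for (double[] row : m) {
            for (int c = 0; c < cols; c++) row[c] = random.nextDouble() * 2 - 1; // [-1, 1)
        }
        return m;
    }
}
```

With the article's harness, this could be timed with something like `time("Plain Java baseline", new PlainBackpropBenchmark(Benchmark.INPUT_COUNT, 20, Benchmark.OUTPUT_COUNT, 0.1, 0.3))`; the learning-rate and momentum values there are illustrative only.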

Generating Data

Training data is generated using the generate method of the GenerateData class. You can see the method signature here.

```java
public void generate(final int count, final int inputCount,
        final int idealCount, final double min, final double max) {
```

The count parameter specifies how many training set elements should be created. Each training set element is a pair of arrays. The inputCount parameter specifies how big the input array should be, and the idealCount parameter specifies how big the ideal (output) array should be. The two arrays are allocated first.

```java
this.input = new double[count][inputCount];
this.ideal = new double[count][idealCount];
```

We will now loop over the specified count and generate random training data. Random training data is fine for this purpose. A neural network cannot learn to predict random numbers, so it will never reach a good error rate. The error rate measures how far the actual output of the neural network is from the ideal output provided. We are not measuring how much a framework is able to decrease the error; we will do that later. For now, we just want to see how long it takes each framework to execute 50 iterations. Neural networks are trained in iterations; each iteration attempts to reduce the error rate. Normally you would use far more than 50 iterations, but 50 is enough to see the relative speed of the frameworks.
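One common way to express that error rate is the mean squared error (MSE) between actual and ideal outputs. The sketch below assumes MSE averaged over every output neuron of every training element; the three frameworks may each compute their reported error slightly differently, and the class name is illustrative.

```java
public final class ErrorRate {
    private ErrorRate() {}

    // Mean squared error over all training elements and all output neurons.
    public static double mse(double[][] actual, double[][] ideal) {
        if (actual.length != ideal.length) {
            throw new IllegalArgumentException("row count mismatch");
        }
        double sum = 0;
        int n = 0;
        for (int i = 0; i < actual.length; i++) {
            if (actual[i].length != ideal[i].length) {
                throw new IllegalArgumentException("column count mismatch");
            }
            for (int j = 0; j < actual[i].length; j++) {
                double d = ideal[i][j] - actual[i][j];
                sum += d * d;
                n++;
            }
        }
        return n == 0 ? 0.0 : sum / n;
    }

    public static void main(String[] args) {
        double[][] ideal = {{1.0, -1.0}, {0.5, 0.0}};
        double[][] actual = {{0.5, -1.0}, {0.5, 0.5}};
        System.out.println(mse(actual, ideal)); // prints 0.125
    }
}
```

Back in generate, the outer loop runs once per training element: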

```java
for (int i = 0; i < count; i++) {
```

Next we generate the random input and ideal data.

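```java
    // Fill element i's input array with random values between min and max.
    for (int j = 0; j < inputCount; j++) {
        input[i][j] = randomRange(min, max);
    }
    // Fill element i's ideal (expected output) array the same way.
    for (int j = 0; j < idealCount; j++) {
        ideal[i][j] = randomRange(min, max);
    }
} // closes the loop over training elements
} // closes generate
```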
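Putting the fragments together, a complete GenerateData class might look like the sketch below. The article does not show randomRange, the fields, or the getters, so those are assumptions: here randomRange returns a uniformly distributed value in [min, max) using java.util.Random, and an extra constructor accepts a seeded Random for repeatable data.

```java
import java.util.Random;

// Sketch of GenerateData consistent with the fragments above; randomRange,
// the fields and the getters are assumed, not taken from the original code.
public class GenerateData {
    private final Random random;
    private double[][] input;
    private double[][] ideal;

    public GenerateData() {
        this(new Random());
    }

    public GenerateData(Random random) {
        this.random = random;
    }

    // Uniformly distributed value in [min, max).
    private double randomRange(final double min, final double max) {
        return min + random.nextDouble() * (max - min);
    }

    public void generate(final int count, final int inputCount,
            final int idealCount, final double min, final double max) {
        this.input = new double[count][inputCount];
        this.ideal = new double[count][idealCount];
        for (int i = 0; i < count; i++) {
            for (int j = 0; j < inputCount; j++) {
                input[i][j] = randomRange(min, max);
            }
            for (int j = 0; j < idealCount; j++) {
                ideal[i][j] = randomRange(min, max);
            }
        }
    }

    public double[][] getInput() {
        return input;
    }

    public double[][] getIdeal() {
        return ideal;
    }
}
```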

The following code prepares and times the benchmark. It generates random training data, passes the arrays to prepareBenchmark, and then times a 50-iteration call to benchmark.

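```java
import java.text.NumberFormat; // at the top of the file that contains this method

// Generates random data, prepares the framework, then times 50 training iterations.
public static void time(String title, Benchmarkable run) {
    GenerateData data = new GenerateData();
    data.generate(10000, Benchmark.INPUT_COUNT, Benchmark.OUTPUT_COUNT, -1, 1);
    double[][] input = data.getInput();
    double[][] ideal = data.getIdeal();

    // Setup is deliberately excluded from the measured time.
    run.prepareBenchmark(input, ideal);

    long started = System.currentTimeMillis();
    run.benchmark(50);
    long finish = System.currentTimeMillis();

    // Convert elapsed milliseconds to seconds.
    long elapsed = finish - started;
    double e2 = elapsed / 1000.0;

    // Print the elapsed time with exactly four decimal places.
    NumberFormat f = NumberFormat.getNumberInstance();
    f.setMinimumFractionDigits(4);
    f.setMaximumFractionDigits(4);
    System.out.println(f.format(e2) + " seconds for " + title);
}
```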

Timing is done by reading System.currentTimeMillis() immediately before and after the call to benchmark, so the setup work in prepareBenchmark is excluded.
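The time method measures with System.currentTimeMillis(). One possible variation, sketched below, uses System.nanoTime() instead, which the Java documentation describes as intended for measuring elapsed time and which is not tied to the wall clock, so system clock adjustments do not distort it. For runs lasting whole seconds the difference is small. The NanoTimer class and timeSeconds helper are illustrative names, not part of the original benchmark code.

```java
public final class NanoTimer {
    private NanoTimer() {}

    // Runs the task once and returns the elapsed time in seconds,
    // measured with the monotonic System.nanoTime() clock.
    public static double timeSeconds(Runnable task) {
        long start = System.nanoTime();
        task.run();
        long elapsedNanos = System.nanoTime() - start;
        return elapsedNanos / 1_000_000_000.0;
    }

    public static void main(String[] args) {
        double seconds = timeSeconds(() -> {
            double acc = 0;
            for (int i = 0; i < 5_000_000; i++) acc += Math.sqrt(i);
            // Printing acc keeps the JIT from discarding the loop as dead code.
            System.out.println("checksum " + acc);
        });
        System.out.printf("%.4f seconds for sqrt loop%n", seconds);
    }
}
```

Inside the time method, the two currentTimeMillis() calls around `run.benchmark(50)` could then be replaced by `double e2 = NanoTimer.timeSeconds(() -> run.benchmark(50));`.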

The benchmark times each framework as it runs through its training iterations. If you would like to see how to program each of the three frameworks, see my last article, which covers that.

Conclusion

As you can see from my two articles, the clear winner is Encog. It provides a clean, easy-to-use API and stunning performance. In these benchmarks, neither Neuroph nor JOONE came close to Encog's speed.

Neuroph is an interesting project with a very helpful following on SourceForge. I think Neuroph has a great deal of potential, but it is just not as advanced a project as Encog. One of Neuroph's goals is ease of use, and looking at the internals of its code, it succeeds: I can follow Neuroph's internal code much more easily than Encog's. If you are going to be working inside the framework, this might be important. However, when using each framework's API, I really do not see much difference in complexity between Encog and Neuroph, as illustrated in my previous article.

JOONE is technically faster than Neuroph per iteration. However, if you use automatic backpropagation in Neuroph, JOONE's speed advantage matters much less, and the two end up with approximately the same overall training time. Due to JOONE's lack of support and the complexity of its interface, I really cannot recommend JOONE.