Introduction

This article illustrates one way for "Things" to communicate information to a third party through a Cloud-enabled app hosted on Azure. Specific implementations may vary, but the pattern is generic and will be common to many of them.

Background

As I was writing my entry for the Intel RealSense(tm) Challenge 2014, I realized I had a problem. I had envisioned the project as a three-tiered system, and the component connecting the other two would be hosted on IIS. However, this would have required a more complex installation than the reviewers were likely to be familiar with.

So I decided to look into Cloud solutions. Since I was already working with C# and Visual Studio 2013 Community edition, I wondered how easy it would be to migrate my original idea to an Azure-hosted Web Service.

Turns out it was very easy.

Using the Code

The project is divided into three parts:

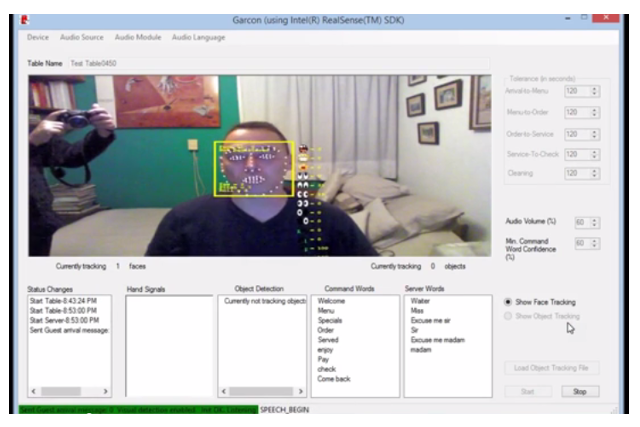

Garcon: Uses an Intel RealSense(tm) device to detect different actions (verbal and visual). Whenever an action is detected, the Garcon sends a signal to the central component (a Workflow Service on Azure). In the image below, the main view of the Garcon can be seen (without the camera itself, of course).

Maitre: Two workflows, embedded in an Azure Workflow Service. They keep track of the current state of the table and, if a valid change is detected or an appropriate situation comes up, they send a signal to the Watcher.

The transitions (for instance, Client Called) contain the interaction points between the remote client ("Thing") and the Workflow. These need to be defined as callable interfaces.

To preserve context, each of these interfaces receives an input parameter. When that parameter is used in the correlation definition, it lets the Workflow route the call to the correct instance.

Watcher: Just displays the information it receives. Because it is so simple, it can easily be adapted to other platforms. For instance, instead of a Windows Forms application, a smartphone could subscribe to the service and display messages to the waiter carrying it, alerting him to a condition at the tables he is responsible for.

As I mentioned in the Background section, my first task was converting the Workflow Service to the Azure platform. This turned out to be very simple. I opened the project and selected Project -> Convert to Microsoft Azure Cloud Service Project from the menu.

This adds a Cloud Service project to the solution. When you right-click it, you can either package it or publish it directly to your Azure cloud.

To upload your service, you need to create a new Azure Cloud Service (next two images):

Once you have followed these steps, your Cloud Service exists. You can now go to your Azure portal and download the Azure SDK, which lets you debug and work with your Azure sites. Your Cloud Service's landing page should look similar to the image below:

Use the "Install a Windows Azure SDK" link to download the tools. Once installed, you can debug your project by right-clicking your Azure service project and choosing Debug. This should launch the Azure Emulator (Compute and Storage Emulator).

In the image, you'll note that my project was launched on port 81 instead of the usual port 80. This is because IIS is running on my machine, which prevents the emulator from using the default port. You may have IIS running, or port 80 may already be in use by some other server (for instance, the Apache HTTP Server). In either case, keep this in mind and adjust your client's configuration accordingly.
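Before you launch the emulator, it can help to check whether port 80 is already taken on your machine. The snippet below is a minimal sketch and is not part of the original project. `PortProbe` and `IsPortFree` are hypothetical names, and the check simply tries to bind a TCP listener on all interfaces.

```csharp
using System.Net;
using System.Net.Sockets;

// Hypothetical helper (not from the original project): reports whether a TCP
// port can currently be bound on all local interfaces.
public static class PortProbe
{
    public static bool IsPortFree(int port)
    {
        var listener = new TcpListener(IPAddress.Any, port);
        try
        {
            // Start() binds and listens; it throws if another process
            // (IIS, Apache, ...) already holds the port.
            listener.Start();
            return true;
        }
        catch (SocketException)
        {
            // Port in use, or this process is not allowed to bind it.
            return false;
        }
        finally
        {
            // Release the port again whether or not the bind succeeded.
            listener.Stop();
        }
    }
}
```

For example, if `PortProbe.IsPortFree(80)` returns `false`, expect the emulator to use a different port (81 in my case). A `false` result can also mean that the process lacks permission to bind that port, so treat it as a hint rather than a diagnosis.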

To be able to switch quickly from a production configuration to a debugging configuration, I added sections like these in my client's App.config (currently showing the Azure production configuration as active):

```xml
<client>
  <endpoint address="http://example.cloudapp.net/MyService.xamlx"
            binding="basicHttpBinding" bindingConfiguration="BasicHttpBinding_IStartTable"
            contract="MaitreService.IStartTable" name="BasicHttpBinding_IStartTable" />
</client>
```

Switching between configurations is easy. The important thing is to remember that your client's endpoints must be changed to match the environment you're working in.
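If you would rather not maintain two copies of every endpoint, one option is to derive the emulator address from the production address at start-up. The sketch below is an assumption on my part and is not what the project does. `EndpointSwitch`, the `127.0.0.1` host and port 81 are illustrative values, so use whatever address your emulator actually reports.

```csharp
using System;

// Hypothetical helper: maps a production endpoint address onto the local emulator.
public static class EndpointSwitch
{
    // Illustrative emulator location; adjust to what the Compute Emulator reports.
    public const string EmulatorHost = "127.0.0.1";
    public const int EmulatorPort = 81;

    // Returns the production address unchanged, or the same path on the emulator.
    public static Uri Resolve(Uri productionAddress, bool useEmulator)
    {
        if (!useEmulator)
        {
            return productionAddress;
        }

        // Keep scheme and path (e.g. /MyService.xamlx); swap only host and port.
        var builder = new UriBuilder(productionAddress)
        {
            Host = EmulatorHost,
            Port = EmulatorPort
        };
        return builder.Uri;
    }
}
```

Generated WCF proxies typically provide a constructor that takes an endpoint configuration name and a remote address. The result could therefore be passed as, for example, `new MaitreService.StartTableClient("BasicHttpBinding_IStartTable", EndpointSwitch.Resolve(productionUri, useEmulator).ToString())`. Whether `useEmulator` comes from a `#if DEBUG` block or an app setting is up to you.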

The workflow I implemented includes Receive activities, which expose the points in the workflow that depend on external input. Calls to these points only reach the right workflow instance if the correlation is kept alive. I implemented this by sending the initial workflow Id back to the caller (using a Send activity) and passing it as a parameter on each subsequent call to the workflow. (The process is well documented in the "Create a Workflow Service with Messaging Activities" tutorial on MSDN.)
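To make the correlation idea concrete outside of Workflow Foundation, the sketch below imitates it with a dictionary. The start call hands back an identifier, and every later call must present that identifier to reach the right instance. This is only an analogy. `TableRegistry`, `TableState` and the `Guid`-based id are hypothetical, and the real workflow runtime resolves instances through its correlation definitions rather than through code like this.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical per-table state that a workflow instance would hold.
public sealed class TableState
{
    public TableState(string callerAddress) { CallerAddress = callerAddress; }
    public string CallerAddress { get; }
    public int Guests { get; set; }
}

// Dictionary-based analogy of message correlation: the id returned by
// StartTable is the key that every later call must supply.
public sealed class TableRegistry
{
    private readonly ConcurrentDictionary<Guid, TableState> _instances =
        new ConcurrentDictionary<Guid, TableState>();

    public Guid StartTable(string callerAddress)
    {
        var id = Guid.NewGuid();
        _instances[id] = new TableState(callerAddress);
        return id; // handed back to the caller, like the workflow Id in the article
    }

    public void GuestsArrive(Guid tableId, int guests)
    {
        // An unknown id means the call cannot be correlated to any instance.
        if (!_instances.TryGetValue(tableId, out var state))
        {
            throw new InvalidOperationException($"No table instance with id {tableId}.");
        }
        state.Guests = guests;
    }

    public int GuestsAt(Guid tableId) => _instances[tableId].Guests;
}
```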

To import the proper Service References into my Garcon project, I right-clicked the project and chose Add -> Service Reference. The address you enter should correspond to the one you specified when you published your project to the Azure cloud.

Pressing OK generates the proxy classes for the Azure Workflow Service. As I said before, you'll have to review the App.config file to verify that the production and debug endpoints are equivalent. Beyond that, calling the service is a simple task. In my case, for instance, the connected camera ("Thing") starts a new workflow as soon as its front end has been launched:

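```csharp
// Start a new table workflow; the returned id correlates all later calls.
MaitreService.StartTableClient startProxy = new MaitreService.StartTableClient();
p_tableId = startProxy.StartTableService(0, GetMyIpAddress().ToString());
```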
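The listing calls `GetMyIpAddress()` without showing its implementation. One possible body, assuming that the first non-loopback IPv4 address the resolver reports for the local host is the one you want to register, could look like the sketch below. The method name comes from the article. The class name `NetworkInfo` and the body are my assumptions.

```csharp
using System.Linq;
using System.Net;
using System.Net.Sockets;

public static class NetworkInfo
{
    // Assumed body for the article's GetMyIpAddress(): first non-loopback IPv4
    // address of this host, falling back to loopback when none can be found.
    public static IPAddress GetMyIpAddress()
    {
        try
        {
            IPAddress[] addresses = Dns.GetHostAddresses(Dns.GetHostName());
            IPAddress ipv4 = addresses.FirstOrDefault(
                a => a.AddressFamily == AddressFamily.InterNetwork && !IPAddress.IsLoopback(a));
            if (ipv4 != null)
            {
                return ipv4;
            }
        }
        catch (SocketException)
        {
            // Host name could not be resolved; fall through to loopback.
        }
        return IPAddress.Loopback;
    }
}
```

On machines with several network adapters (VPNs, virtual switches), the first address is not necessarily the one the other components can reach. In that case, you might prefer to pick the address explicitly from configuration.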

This lets me start detecting events as soon as I need to and simplifies the code that communicates with the back end. The code to announce a new guest, for instance, is reduced to:

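```csharp
// Report the detected faces for this table without blocking the calling thread.
MaitreService.GuestsArriveClient guestServiceProxy = new MaitreService.GuestsArriveClient();
guestServiceProxy.GuestsArriveAsync(p_tableId, DetectedFaces);
```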

and is triggered by the camera's face detection thread.

I also had to consider the interaction between the MaitreService (Workflow) and the front end (Watcher). As hinted at in the introduction, this is a simple Observer pattern. To connect the Observers (Watcher) to the Subject (MaitreService), I am using a WCF duplex service.

- At the start of a Garcon ("Thing") program's life, I register it in a table of watchable "Subjects".
- When a Watcher is started, it asks for the list of "Subjects", and the user chooses one of them.
- From then on, the Maitre sends every reportable notification to all Observers (Watchers) registered for that "Subject" (Garcon).
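The three registration steps above can be modelled in memory. In the real system, each observer is a WCF duplex callback channel. Here it is a plain interface, and all the names (`ITableObserver`, `SubjectRegistry`, `RecordingObserver`) are hypothetical.

```csharp
using System.Collections.Generic;
using System.Linq;

// Stand-in for the duplex callback contract each Watcher would implement.
public interface ITableObserver
{
    void OnNotification(string subjectId, string message);
}

// Trivial observer that records what it receives (stands in for the Watcher UI).
public sealed class RecordingObserver : ITableObserver
{
    public List<string> Received { get; } = new List<string>();

    public void OnNotification(string subjectId, string message) =>
        Received.Add($"{subjectId}: {message}");
}

public sealed class SubjectRegistry
{
    // Subject (Garcon) id -> observers (Watchers) that chose it.
    private readonly Dictionary<string, List<ITableObserver>> _subjects =
        new Dictionary<string, List<ITableObserver>>();
    private readonly object _gate = new object();

    // Step 1: a Garcon registers itself as a watchable subject.
    public void RegisterSubject(string subjectId)
    {
        lock (_gate)
        {
            if (!_subjects.ContainsKey(subjectId))
            {
                _subjects.Add(subjectId, new List<ITableObserver>());
            }
        }
    }

    // Step 2: a Watcher lists the subjects and subscribes to one of them.
    public IReadOnlyList<string> ListSubjects()
    {
        lock (_gate) { return _subjects.Keys.ToList(); }
    }

    public void Subscribe(string subjectId, ITableObserver observer)
    {
        lock (_gate) { _subjects[subjectId].Add(observer); }
    }

    // Step 3: the Maitre pushes a notification to every observer of the subject.
    public void Notify(string subjectId, string message)
    {
        List<ITableObserver> targets;
        lock (_gate) { targets = _subjects[subjectId].ToList(); } // snapshot, then call outside the lock
        foreach (var observer in targets)
        {
            observer.OnNotification(subjectId, message);
        }
    }
}
```

Taking a snapshot before calling out keeps a slow observer from holding the lock. With real duplex channels, this matters because a callback can block or fault.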

I opted to start the Maitre Service's end of the WCF service manually. It looks like this:

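```csharp
using System;
using System.ServiceModel;
using Microsoft.WindowsAzure.ServiceRuntime;

// Unsecured TCP binding; sessions may stay idle for up to 12 hours before timing out.
NetTcpBinding binding = new NetTcpBinding(SecurityMode.None);
binding.ReceiveTimeout = new TimeSpan(12, 0, 0);

// Endpoint named "example" as declared for this role in the service definition.
RoleInstanceEndpoint externalEndPoint =
    RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["example"];
string endpoint = String.Format("net.tcp://{0}/example", externalEndPoint.IPEndpoint);

host = new ServiceHost(typeof(TableReporter), new Uri[] { new Uri(endpoint) });
host.AddServiceEndpoint(typeof(ITableReports), binding, endpoint);
// Nothing listens until host.Open() is called; host.Close() takes the endpoint down again.
```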

Starting the host manually lets me control the lifetime of the exposed endpoints, which ensures that no endpoint is visible while there are no active Garcons.
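The article does not show where the host is opened and closed. One way to get the behaviour described above, assuming that the host should exist only while at least one Garcon is registered, is to count the active Garcons. The host is then opened on the first registration and closed when the last Garcon leaves. `ReferenceCountedHost` and its `open`/`close` delegates are hypothetical. In the service, these delegates would wrap `host.Open()` and `host.Close()` on the `ServiceHost` built in the previous listing.

```csharp
using System;

// Hypothetical wrapper: opens the underlying host when the first Garcon
// registers and closes it when the last one leaves.
public sealed class ReferenceCountedHost
{
    private readonly Action _open;
    private readonly Action _close;
    private readonly object _gate = new object();
    private int _activeGarcons;

    public ReferenceCountedHost(Action open, Action close)
    {
        _open = open;
        _close = close;
    }

    public bool IsOpen { get; private set; }

    public void GarconStarted()
    {
        lock (_gate)
        {
            _activeGarcons++;
            if (_activeGarcons == 1)
            {
                _open();
                IsOpen = true;
            }
        }
    }

    public void GarconStopped()
    {
        lock (_gate)
        {
            if (_activeGarcons == 0)
            {
                return; // unbalanced call; nothing to release
            }
            _activeGarcons--;
            if (_activeGarcons == 0)
            {
                _close();
                IsOpen = false;
            }
        }
    }
}
```

A WCF `ServiceHost` cannot be reopened once it has been closed. The `open` delegate would therefore need to build a fresh host each time, rather than reuse the old instance.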

Points of Interest

The main issues I found while testing my project were unexpected timeouts on every part of the platform. The workflows, for one, are unloaded by default after one minute of idleness. My workflows run pretty much as they appear in the graphical designer, so I changed their unload/persist parameters in the corresponding Web.config:

```xml
<workflowIdle timeToUnload="24:00:00" timeToPersist="10:00:00"/>
```

The Watchers, on the other hand, stopped receiving updates after 4 minutes. Some digging in Azure's reference documentation revealed that the Load Balancer has a 4-minute idle timeout (not configurable). I opted to implement the first solution mentioned in the linked article, namely:

> [1] - Make sure the TCP connection is not idle. To keep your TCP connection active keeping sending some data before 240 seconds is passed. This could be done via chunked transfer encoding;send something or you can just send blank lines to keep the connection active.

In my case, it even improved the implementation. It gave the Watcher a reason to ask every 220 seconds whether the watched Subject was still alive.
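The keep-alive can be as simple as a timer whose period stays comfortably below the load balancer's 240-second idle limit. The sketch below assumes a hypothetical `ping` delegate that wraps whatever call the Watcher uses to check that its Subject is alive. The 220-second value is the one I used. The class itself is illustrative.

```csharp
using System;
using System.Threading;

// Periodically invokes a ping so the TCP connection never sits idle long
// enough for the load balancer to drop it.
public sealed class KeepAlive : IDisposable
{
    // Load balancer idle timeout for TCP connections (4 minutes).
    public static readonly TimeSpan IdleLimit = TimeSpan.FromSeconds(240);

    private readonly Timer _timer;

    // ping should catch its own exceptions: an unhandled exception in a
    // Timer callback terminates the process.
    public KeepAlive(Action ping, TimeSpan period)
    {
        if (period <= TimeSpan.Zero || period >= IdleLimit)
        {
            throw new ArgumentOutOfRangeException(nameof(period),
                "The period must be positive and shorter than the idle limit.");
        }
        // First ping after one period, then repeat at the same interval.
        _timer = new Timer(_ => ping(), null, period, period);
    }

    public void Dispose() => _timer.Dispose();
}
```

In the Watcher, usage might look like `using var keepAlive = new KeepAlive(() => subjectProxy.IsAlive(tableId), TimeSpan.FromSeconds(220));`, where `subjectProxy.IsAlive` is a hypothetical service operation.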

In my experience, the local Azure debugging environment behaves almost exactly like the actual staging area in the cloud. However, there are some points to watch:

- The addresses are obviously different. The debugging and production values in some configuration files (App.config, Web.config, etc.) will have to be changed depending on the scenario.

- Some timeouts, like the ones I mentioned above, don't apply to the local (debugging) scenario. This may lead to bugs that only appear once the service is uploaded to Azure. I recommend debugging your service directly in the Cloud before you release it.

You shouldn't leave remote debugging enabled, because it is not an optimal configuration for any project. It should, however, help you catch problems that local debugging would hide. The beauty of this is that you can connect remotely to your Cloud Service and still set breakpoints, watches and any other debugging configuration you're used to, with minimal effort.

History

- 2015-02-22: First completed draft

- 2015-03-09: Improved formatting, made some grammatical corrections and adjusted the width of my images