When we need authorization in a .NET application on our intranet, we tend to use the pre-configured ASP.NET membership database provided by Microsoft. During development, we have the need to refresh data on development from production on a daily basis. This article describes a solution to this problem. Let’s get started.

Prerequisites

- Microsoft SQL Server 2008 R2

- Business Intelligence Development Studio (BIDS)

- Microsoft SQL Server Management Studio 2008+ (SSMS)

Step 1: Dependencies

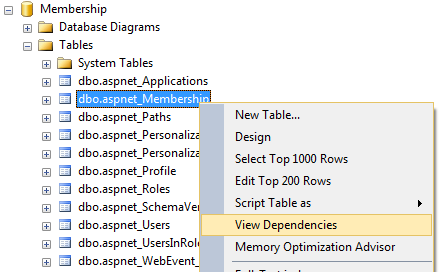

Let’s start out by mapping the dependencies of the supplied database. This will help us create a data load package which does not run into referential integrity issues by loading child tables before their parent(s) are available. This task is immensely repetitive especially in large databases. Fortunately, the membership database we discuss only consists of eleven tables. To find an object dependency, right-click on it in SSMS (Object Explorer > Databases > Database > Tables > Table) and select “View Dependencies”.

Within the Object Dependencies window, select “Objects on which [selected object] depends” (Object Dependencies > General). This will show all dependencies for the selected object in reverse order. Lower hierarchy items need to be loaded before a dependent table can reference it.

Our goal is to create a dependency tree for our database.

Completed Dependency Tree

No dependencies:

aspnet_WebEvent_Eventsaspnet_SchemaVersions

Dependencies:

aspnet_UsersInRoleaspnet_Profileaspnet_Membershipaspnet_PersonalizationPerUseraspnet_PersonalizationAllUsers

aspnet_Usersaspnet_Pathsaspnet_Roles

From the dependency tree, we can see that “Applications” needs to be loaded first, then “Users, Paths, and Roles” and so forth. With this information, we are now able to create a data load package.

Step 2: Creating a Data Load Package

We will develop a data load package in BIDS that first clears out old records and then inserts new ones from the production environment. For very large databases, you could adapt the script to only load new records and update old ones. For sanity’s sake however, we will remove all records before we sync new ones, since Membership databases tend to be small in size anyway. Begin by creating a new “Integration Services Project” in BIDS (File > New Project > Business Intelligence Projects > Visual Studio installed templates: Integration Services Project).

Add two OLE DB Connections by right-clicking in the Connection Managers window and selecting “New OLE DB Connection…”. One for our source database, one for our target database. Fill in the server name and database name for each. Additionally, set the property “DelayValidation” to True to allow for a dynamic connection string through scripting later.

Next, add a Sequence Container with three Execute SQL Tasks and connect them as shown below. Since we identified the dependencies in Step 1, we know that we can remove and load data in three phases (Each level in the hierarchy represents a phase that can be executed in sequence).

Now, we add SQL to remove most- to least-dependent table data. In our case, we remove UsersInRole, Profile, Membership, PersonalizationPerUser, and PersonalizationAllUsers in phase one. Do this by editing the SQLStatement within the Execute SQL Task Editor. Set the connection to the target database and give the task a name. Do this for all three tasks, removing only the respective table data in each phase.

At this point, we are ready to develop the data load portion of the package. Start by adding another Sequence Container with three Data Flow Tasks. This time, the sequence in which we load data is the opposite (least- to most-dependent). That means that we will start loading Applications, SchemaVersions, and WebEvent_Events first. Since SchemaVersions and WebEvent_Events have no dependencies in either direction, we will add them to phase one in this example.

Open phase one of the Load Membership sequence by double-clicking the Data Flow Task. From your toolbox, add an OLE DB Source and an OLE DB Destination to your Data Flow. Start configuring your source by double-clicking it. Select your source connection manager from the drop-down and set the Data access mode to “Table or view”. Then, select the table you would like to load. In this example, we are starting out with [dbo].[aspnet_Applications].

Next, connect your source and target components and double-click the target to configure it. Select the target OLE DB connection manager, set Data access mode to “Table or view – fast load”, and click OK. The column mappings should already be set up correctly (Input and Output columns are identical). To check, Open your OLE DB Destination Editor and click on the Mappings page.

Continue to add a separate source and destination component for each item (SchemaVersions, WebEvent_Events). The end result should look something like this:

Add the other source and destination components to phase two and three accordingly. At this point, you can take your new package for a spin. If everything went smoothly, we can see a successful output and data in our development environment.

There you have it. We started out by creating a dependency tree of our database, split the data load process into three phases (one for each level of the tree hierarchy), and created a data load package that can run as a scheduled job or from the command line. In a future article, we will take a look at merging two existing membership databases through SSIS and migrating a local ASP.NET membership database to SQL Server. Stay tuned for more updates. Let me know what you think in the comments!

References

Project on GitHub: https://github.com/mariotacke/asp.net-membership-tools CodeProject

Bonus: Scripting Our Solution

When working in a development environment which needs frequent data refreshes to multiple databases, we need a solution that accommodates this scenario quickly and safely. We can use the dtexec utility to run our package adhoc and in different environments. Let’s write a quick script for it (a simple .bat file will do the job):

dtexec /f "ASP.NET Membership Tools\bin\Refresh Membership.dtsx"

/conn "\"Source\"";"\"Data Source=source_server;Initial Catalog=source_database;

Integrated Security=SSPI;\"" /conn "\"Target\"";"\"Data Source=target_server;

Initial Catalog=target_database;Integrated Security=SSPI;\"" /reporting W

Here, we are executing dtexec with “/f” for file with our package location and modifying the two connections strings we set up earlier. By overwriting the connection string, we can quickly change the source and target of our data load package.