Introduction

Problem: There were many IoT devices demonstrated at Electronic Expo this year, from cars to smart mirrors to baby feeding bottles, and most of them were built with differing technologies and standards. On one hand, there are tons of new IoT devices on the market to play with; on the other hand, it is mind-boggling to work out which standards (or lack thereof) can connect all these devices in ways meaningful enough to make them worthwhile for end customers.

Solution: To begin to solve this challenge, we first have to acknowledge that no single program can connect all these IoT devices; this is largely due to both the infancy of IoT and the inherent protectionism of the industries producing devices for it. However, that does not mean we cannot build an easy-to-use, secure, fast, and economical platform for developers who want to control one or more types of IoT devices. With the right platform, developers can create interface programs that target the specific IoT devices they want to control without getting bogged down by the technical challenges and cost of the IoT platform itself. The right IoT developer platform would handle network and service availability monitoring, data hosting, scalability, operational continuity, reporting, a unified presentation of IoT devices, security, and outages caused by datacenter patching, to name just a few.

In this article, we will explain how we created a scalable IoT platform on Azure for developers and how we use Chef to apply updates to large-scale plug computer deployments.

Background

Basic Concept

Conceptually, if we were to control a smart coffee brewer, the simplest thing an interface program would do is turn it on and off. Assuming this is the only function needed, the simplest interface program would more or less consist of the following components:

- A local program that can recognize new and existing coffee brewers; this program runs on a plug computer with the Debian operating system, on the same network as the brewer.

- The local program monitors the smart coffee brewer and all other smart devices on the network and determines whether they are online.

- The local program sends brewer information, such as whether the brewer is on or off, its temperature, etc., to the user through REST services on a server in the Azure cloud.

- Web portals or apps supported by the server allow the user to control the brewer (on/off).

- A business logic component in the local program turns the brewer on or off based on the user's input from the web portal or app.
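The business logic component in the last item is the only brewer-specific piece of this picture. Here is a minimal sketch of it, assuming a hypothetical contract between the local program and the device code: `IDeviceInterface`, `ReadStatus`, and `Execute` are illustrative names, not the platform's published API, and the temperature is a fixed placeholder rather than a real sensor reading.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical contract between the local client program and a device interface program.
public interface IDeviceInterface
{
    string DeviceId { get; }
    IReadOnlyDictionary<string, string> ReadStatus();   // data shown to the end user
    bool Execute(string command);                       // commands sent from the portal/app
}

// Minimal brewer: the only business logic is turning it on or off.
public sealed class CoffeeBrewerInterface : IDeviceInterface
{
    private bool isOn;
    private readonly double temperatureC = 20.0;   // placeholder; a real brewer would report this

    public CoffeeBrewerInterface(string deviceId) => DeviceId = deviceId;

    public string DeviceId { get; }

    public IReadOnlyDictionary<string, string> ReadStatus() =>
        new Dictionary<string, string>
        {
            ["power"] = isOn ? "on" : "off",
            ["temperatureC"] = temperatureC.ToString("F1", CultureInfo.InvariantCulture)
        };

    public bool Execute(string command)
    {
        switch (command.Trim().ToLowerInvariant())
        {
            case "on":  isOn = true;  return true;
            case "off": isOn = false; return true;
            default:    return false;   // unknown command: reject rather than guess
        }
    }
}
```

Rejecting unknown commands keeps the device in a known state when the portal sends something the interface program was never registered for.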

Even in the simple series of steps described above, a developer has to deal with a host of technologies and complications such as networking, web services, data storage, scaling, web portal or app programming, and possibly much more. Combining these complexities with hundreds and potentially thousands of IoT devices to control, an IoT developer can quickly become overwhelmed.

Our firm looked at all the components that a typical IoT program would entail, extracted all the elements that commonly exist across most IoT programs, and built a platform enabling developers to focus exclusively on writing interface programs to the IoT devices. In order to explain how the platform works, we will continue with the previously mentioned coffee brewer. In the explanation that follows, the “brewer interface program” is the only code that a developer needs to write to make an IoT device available anywhere there is an internet connection:

- We first create a client program running on a “plug computer”, which, when plugged into a network, automatically configures itself, monitors all network devices, registers them, and tracks them even if their IP addresses change.

- The client program then executes the “brewer interface program” developed by any developer. In this case, the “brewer interface program” resides on a removable USB drive inserted into the plug computer; our platform reads it and installs it, making it ready for action.

- The “brewer interface program” exchanges brewer-specific information with our client program, which sends it through services hosted on Azure.

- Encrypted information received through the REST services creates messages on the Azure Service Bus Queue.

- A number of “worker roles” read and decrypt the messages and store them in Blob Storage, with metadata held in an Azure SQL Database.

- A traditional web portal and a mobile website display the brewer’s information to end users.

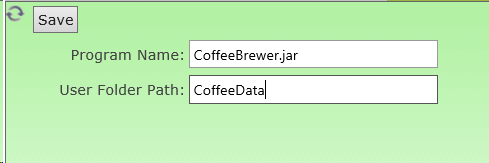
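The flow above encrypts messages before they reach the REST services and decrypts them in the worker roles, but the algorithm is not specified. The sketch below assumes AES-CBC with a random IV followed by an HMAC-SHA256 tag (encrypt-then-MAC), so a worker role can reject tampered messages before decrypting them. `MessageEnvelope` is a hypothetical helper, and key distribution to the plug computers is out of scope.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical envelope: [16-byte IV][AES-CBC ciphertext][32-byte HMAC-SHA256 over IV+ciphertext].
public static class MessageEnvelope
{
    private const int IvLen = 16, TagLen = 32;

    public static byte[] Seal(string json, byte[] encKey, byte[] macKey)
    {
        using var aes = Aes.Create();
        aes.Key = encKey;
        aes.GenerateIV();                               // fresh IV per message
        byte[] iv = aes.IV;
        byte[] plain = Encoding.UTF8.GetBytes(json);
        using var enc = aes.CreateEncryptor();
        byte[] cipher = enc.TransformFinalBlock(plain, 0, plain.Length);

        byte[] msg = new byte[IvLen + cipher.Length + TagLen];
        Buffer.BlockCopy(iv, 0, msg, 0, IvLen);
        Buffer.BlockCopy(cipher, 0, msg, IvLen, cipher.Length);
        using var hmac = new HMACSHA256(macKey);
        byte[] tag = hmac.ComputeHash(msg, 0, IvLen + cipher.Length);
        Buffer.BlockCopy(tag, 0, msg, IvLen + cipher.Length, TagLen);
        return msg;
    }

    public static string Open(byte[] msg, byte[] encKey, byte[] macKey)
    {
        if (msg.Length < IvLen + TagLen) throw new CryptographicException("Message too short.");
        int bodyLen = msg.Length - TagLen;

        // Verify the tag first, in constant time, before touching the ciphertext.
        using var hmac = new HMACSHA256(macKey);
        byte[] expected = hmac.ComputeHash(msg, 0, bodyLen);
        byte[] actual = new byte[TagLen];
        Buffer.BlockCopy(msg, bodyLen, actual, 0, TagLen);
        if (!CryptographicOperations.FixedTimeEquals(expected, actual))
            throw new CryptographicException("Message authentication failed.");

        using var aes = Aes.Create();
        aes.Key = encKey;
        byte[] iv = new byte[IvLen];
        Buffer.BlockCopy(msg, 0, iv, 0, IvLen);
        aes.IV = iv;
        using var dec = aes.CreateDecryptor();
        byte[] plain = dec.TransformFinalBlock(msg, IvLen, bodyLen - IvLen);
        return Encoding.UTF8.GetString(plain);
    }
}
```

Using separate keys for encryption and authentication avoids reusing one key for two purposes; an authenticated mode such as AES-GCM would be a reasonable alternative.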

Here is what the developer must do to get our client program to execute his/her interface program on the plug computer. The developer registers the “brewer interface program” on our web portal, telling us the program’s name and the information that needs to be exchanged with our client program. The developer also defines a series of commands associated with the coffee brewer, which allow the end user to control it through the web portal and mobile website. Here is an example of how to register a third party program with our platform:

Once this registration is done and the executable is loaded onto the plug computer, our platform automatically reads data from the user’s folder path and displays it in both a Tree View and a Map View. Clicking an IoT device in the Tree View shows the data sent by the brewer’s interface program. Here is an example of the Tree View:

In the Map View, we can see thousands of plug computers and tens of thousands of IoT devices. The example below demonstrates how the Map View can display large numbers of devices and use color to highlight those that need attention. This view is especially useful for monitoring devices across different geographic locations. After performance tuning and testing, we can confirm that displaying 2,000 plug computers with millions of interactive data points takes less than 12 seconds on a small Azure server with an S2 tier database. A large Azure server with a faster database can display the same number of data points 4 to 5 times faster. This means that if you manage devices globally, you can see at a glance where things are happening and which devices need your attention.

Here is the basic architecture of the platform:

Advantages

When using our platform, the developer needs to take care of four things:

- Write the interface program to the IoT device and put the interface program on a removable USB drive.

- Register each IoT interface program on our platform by specifying the program name and the information it exchanges.

- Define a series of commands the interface program can use to control the IoT device.

- Send displayable data to the web portal.
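The registration in the second and third items boils down to a small data structure: a program name, the fields exchanged with the client program, and the commands end users may send. Here is a sketch of such a record with basic validation; `InterfaceRegistration`, `DataField`, and the validation rules are hypothetical, since the portal's actual registration format is not shown.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical registration model: one exchanged data field and its display unit.
public sealed record DataField(string Name, string Unit);

public sealed record InterfaceRegistration(
    string ProgramName,
    IReadOnlyList<DataField> Fields,
    IReadOnlyList<string> Commands)
{
    // Returns a list of problems; an empty list means the registration looks usable.
    public IReadOnlyList<string> Validate()
    {
        var problems = new List<string>();
        if (string.IsNullOrWhiteSpace(ProgramName))
            problems.Add("Program name is required.");
        if (Fields.Count == 0)
            problems.Add("At least one data field must be exchanged.");

        // Field and command names are compared case-insensitively to avoid "Power" vs "power".
        problems.AddRange(Fields.GroupBy(f => f.Name, StringComparer.OrdinalIgnoreCase)
            .Where(g => g.Count() > 1)
            .Select(g => $"Duplicate field '{g.Key}'."));
        problems.AddRange(Commands.GroupBy(c => c, StringComparer.OrdinalIgnoreCase)
            .Where(g => g.Count() > 1)
            .Select(g => $"Duplicate command '{g.Key}'."));
        return problems;
    }
}
```

For the brewer, a registration might list the fields `power` and `temperature` and the commands `on` and `off`.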

Here is what the platform provides:

- Monitor the network status of the IoT devices and send alerts to the end user when the network or individual IoT devices go offline.

- Automatically provision, update, and reconfigure both the plug computers and the IoT interface programs as required for security patching, software upgrades, etc.

- Ensure business continuity by leveraging Azure capabilities such as scaling, data recovery, redundancy of network and cloud services, service bus redundancy in multiple regions, and offline storage on plug computer for network outages, which automatically synchronizes to the cloud when network connectivity is restored.

- Ensure performance and scalability for IoT data going to the cloud, which not all developers are equipped to tackle. Our platform can easily receive millions of messages through a large distributed network; in other words, we can connect thousands of plug computers, each hosting multiple third party IoT interface programs. If someone wants to read and/or control IoT devices in a large-scale deployment, all the plumbing is in place; all he/she needs to do is get enough plug computers and load the IoT interface program(s) onto them.

- Present a unified view of all IoT devices in both a Tree View and a Map View, capable of displaying thousands of plug computers and tens of thousands of IoT devices at a time for a single customer.

- Provide security through encryption/decryption of data in transit and of sensitive data at rest (e.g., passwords).
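The offline storage mentioned in the business continuity item is a store-and-forward pattern: messages are queued locally while the REST services are unreachable and replayed in their original order once connectivity returns. Here is a minimal in-memory sketch; `StoreAndForward` is hypothetical, the `trySend` delegate stands in for the real REST call, and a real plug computer would persist the queue to disk so it survives a reboot.

```csharp
using System;
using System.Collections.Generic;

public sealed class StoreAndForward
{
    private readonly Queue<string> pending = new Queue<string>();
    private readonly Func<string, bool> trySend;   // returns false while offline

    public StoreAndForward(Func<string, bool> trySend) => this.trySend = trySend;

    public int PendingCount => pending.Count;

    public void Publish(string message)
    {
        // Always enqueue first, so a new message can never overtake older buffered ones.
        pending.Enqueue(message);
        Flush();
    }

    // Sends buffered messages oldest-first and stops at the first failure,
    // keeping the remaining messages for the next attempt.
    public void Flush()
    {
        while (pending.Count > 0)
        {
            if (!trySend(pending.Peek())) return;   // still offline
            pending.Dequeue();
        }
    }
}
```

Stopping at the first failure, rather than skipping ahead, is what preserves ordering for the strict-FIFO data discussed later.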

Using the code

The code shown at the end of this article is an example of how to build a page that delivers downloadable files to a Chef client, which uses wget to receive them. You can paste the entire code into the code-behind of an .aspx page. Just remember to set up an Azure Blob Storage container with the right name (in this case, "cookbooks") and to define the blobpassword, oldblobpassword, and DataConnectionString settings in the service configuration.

Points of Interest

How we leverage Azure

Azure not only allows simple and easy development and deployment of web services, background workers, and websites; it also provides capabilities such as Service Bus Queues, Event Hubs, SQL Database in the cloud, and excellent scaling and data backup/restore capabilities.

Among the Azure offerings, Service Bus and Event Hubs both offer the ability to scale incoming data from IoT devices. Event Hubs offers a much simpler implementation and is the preferred route for bringing in IoT data because it scales out much further than Service Bus with less coding. We initially implemented Service Bus because of prior knowledge, but we have begun changing over to Event Hubs so we can scale more easily with less code. The remainder of our discussion focuses on Service Bus, but the same approach also works with Event Hubs.

After a lot of practice and experimentation with Azure offerings, we found that we could improve business continuity by taking advantage of how easy it is to create queues in Azure. Here is a simple example of how our platform uses Service Bus queues to provide business continuity, and how we provide high availability through round robin of queues across Azure data centers. Once you understand how this is implemented, the logic and technique can easily be extended to Event Hubs.

Why do we need to provide High Availability (HA) in Azure Service Bus Queues?

First, let me clarify some terminology so the following paragraphs are easier to read. For business critical data such as alerts, which require strict FIFO order, we use Service Bus queues. We use REST services to put messages (information from IoT devices) into the queue, and a series of background processors running on worker roles to receive messages from the queue. From here on, I’ll use background processor and worker role interchangeably.

Now, back to why HA should be considered for Service Bus Queues and Event Hubs. Azure Service Bus can suffer performance degradation while still meeting its up/down SLA. When this happens, data has trouble either going into the queue or being taken out of it. If you use REST services to deposit messages into the queue, the services will begin to fail. Although our plug computer will go into offline mode and store data locally until the REST services are available again, a more resilient solution is to switch automatically to a different region. This is possible because Azure Service Bus Queues rarely go down in all regions at the same time. Therefore, the question we need to address is, “How can queue processing automatically fail over to a different region when it has problems?”

Without further ado, here is how we fail over queues with round robin to data centers across the globe. First, note that a program can create its own queue dynamically as long as it knows the queue’s namespace and issuer name/key. While the queue creation code is very simple, the trick is to coordinate the sender and the listener so that both use the dynamically created queues consistently.
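One simple way to help the sender and the listener agree, without extra messages, is for both to derive the queue name from the same inputs. The sketch below assumes a hypothetical naming convention built from the plug computer ID and the ID of the namespace triplet in use; it restricts names to lowercase ASCII letters, digits, and hyphens, a conservative subset of what Service Bus queue names accept.

```csharp
using System;
using System.Linq;

// Hypothetical convention: REST service and background processor both call For(...)
// with the same plug computer ID and namespace ID, so they compute the same queue name.
public static class QueueNaming
{
    public static string For(string plugComputerId, int queueNamespaceId)
    {
        // Map anything other than ASCII letters/digits to '-' and trim stray hyphens.
        string safeId = new string(plugComputerId.ToLowerInvariant()
            .Select(c => c < 128 && char.IsLetterOrDigit(c) ? c : '-')
            .ToArray()).Trim('-');
        if (safeId.Length == 0)
            throw new ArgumentException("Plug computer ID has no usable characters.", nameof(plugComputerId));
        return $"plug-{safeId}-ns{queueNamespaceId}";
    }
}
```

Because the namespace ID is part of the name, a failover to another triplet automatically yields a different queue, while both sides still compute the same one.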

Information from IoT devices should be stored in the database in sequential order. This means that if there are issues retrieving messages from a queue, message ordering must be preserved until the messages are drained from that queue. When this happens, we should stop putting messages into the troubled queue; in many cases, when we can’t take messages from a queue, we can’t put messages into it anyway.

The solution consists of the following steps:

1. The background processor determines whether there are problems with a queue.

2. During an outage, the background processor notifies the REST services to create their own queues dynamically in another region, while it continues to listen on the troubled queue and tries to drain it until all messages are either processed normally or moved to the dead letter queue. Depending on the outage, this may take a few minutes or a few hours.

3. While step 2 is taking place, the REST services put messages into the newly created queues in a different region; these queues are guaranteed not to be in the same data center as the troubled queues.

4. If a REST service cannot put messages into its queue, it notifies its corresponding background processors that it will create a new queue in a different region.

5. This process continues until either the REST services find a data center where they can create queues and operate without problems, or they exhaust all available data centers, at which point human intervention is required.

6. Once the background processor finishes step 2, it follows the trail of the REST services, going to each queue they created and draining it, until it ends up on the same queue the REST services are now using.
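The interplay of the six steps can be checked with a small in-memory simulation. In this sketch, regions stand in for Service Bus namespaces, `MarkUnhealthy` stands in for the problem detection and notifications of steps 1 and 4, and the "trail" is the ordered list of regions the sender has used. `FailoverSimulation` and the region names are hypothetical; the real system coordinates through SQL tables rather than shared memory.

```csharp
using System;
using System.Collections.Generic;

public sealed class FailoverSimulation
{
    private readonly List<string> regions;                      // in priority order
    private readonly Dictionary<string, Queue<string>> queues = new Dictionary<string, Queue<string>>();
    private readonly HashSet<string> healthy = new HashSet<string>();   // regions accepting sends
    private readonly List<string> trail = new List<string>();   // regions the sender used, in order
    private int drainIndex;                                     // receiver's position in the trail

    public FailoverSimulation(IEnumerable<string> regionsByPriority)
    {
        regions = new List<string>(regionsByPriority);
        if (regions.Count == 0) throw new ArgumentException("At least one region is required.");
        foreach (var r in regions) { queues[r] = new Queue<string>(); healthy.Add(r); }
        trail.Add(regions[0]);
    }

    public string SenderRegion => trail[trail.Count - 1];
    public string ReceiverRegion => trail[drainIndex];

    // Stand-in for steps 1 and 4: this region's queue can no longer accept messages.
    public void MarkUnhealthy(string region) => healthy.Remove(region);

    // Steps 3 to 5: send to the current queue; if it is unhealthy, move to the next
    // region never used before. False means every region is exhausted (human needed).
    public bool Send(string message)
    {
        while (!healthy.Contains(SenderRegion))
        {
            string next = regions.Find(r => !trail.Contains(r));
            if (next == null) return false;
            trail.Add(next);
        }
        queues[SenderRegion].Enqueue(message);
        return true;
    }

    // Steps 2 and 6: drain the current queue completely (even a troubled one),
    // then follow the trail; null means the receiver has caught up and nothing is waiting.
    public string Receive()
    {
        while (true)
        {
            var q = queues[ReceiverRegion];
            if (q.Count > 0) return q.Dequeue();
            if (drainIndex == trail.Count - 1) return null;
            drainIndex++;
        }
    }
}
```

Because the sender never returns to a region it has left, every message in an earlier queue was sent before every message in a later one, so draining queues in trail order preserves the overall ordering.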

By following the six steps above, we guarantee the following outcomes:

- Preserve the order of the messages because background processors will not abandon any messages in queue.

- Gain a High Availability because we can fail over to different queues around the globe.

- Maintain the operational integrity of the distributed plug computers so that they can continue to send messages without going into offline mode.

- Minimize support, because issues resolve themselves without generating a support call. It is extremely rare for outages to happen at the same time across all Azure data centers.

Implementation

Once you understand how the six steps work logically, the actual implementation is fairly straightforward. The secret is to use a data store (e.g., SQL Database or Table Storage) that both the REST services and the background processors can access, binding them together. This is necessary because the REST services and background processors have no other way to communicate. Here are some details that illustrate how we did it.

In an Azure SQL database, we register a set of Service Bus namespaces, issuer names, and issuer keys (let’s call them triplets) to be used. Each triplet must come from a different data center.

Each triplet also needs a priority, which tells the REST services where to create queues first when multiple namespaces are available. Prioritization is based on cost, performance, and supportability. For example, if your operation is primarily in North America, you should probably rank North American data centers higher. Doing so can minimize latency and perhaps lower your cost (sending data across regions costs more).
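Choosing where to create the next queue then becomes a query over the registered triplets. Here is a sketch in which the record's property names are assumptions (the article names only the namespace, issuer name, issuer key, data center, and priority) and a lower priority number is assumed to mean "preferred".

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Illustrative row of the triplet table; property names are assumptions.
public sealed record QueueNamespaceTriplet(
    int Id, string Namespace, string IssuerName, string IssuerKey, string DataCenter, int Priority);

public static class TripletSelector
{
    // Enforces the rule that every triplet lives in a different data center.
    public static void EnsureDistinctDataCenters(IEnumerable<QueueNamespaceTriplet> triplets)
    {
        var dup = triplets.GroupBy(t => t.DataCenter).FirstOrDefault(g => g.Count() > 1);
        if (dup != null)
            throw new InvalidOperationException($"More than one triplet in data center '{dup.Key}'.");
    }

    // Highest-priority triplet whose data center has not been tried yet;
    // null when all are exhausted, which is where human intervention starts.
    public static QueueNamespaceTriplet NextCandidate(
        IEnumerable<QueueNamespaceTriplet> triplets, ISet<string> triedDataCenters) =>
        triplets.Where(t => !triedDataCenters.Contains(t.DataCenter))
                .OrderBy(t => t.Priority)
                .ThenBy(t => t.Id)      // deterministic tie-break
                .FirstOrDefault();
}
```

Excluding whole data centers, not just individual namespaces, is what guarantees that a new queue never lands in the same data center as the troubled one.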

In the next table, we pair up each plug computer, REST service, background processor, and queue triplet. When a failover happens, different parts of the system, such as the REST services and background processors, communicate with each other by modifying this table.

The Failover bit controls whether failover is allowed to take place. RestServiceQueueNameSpaceID shows which triplet the REST service is currently using; if it differs from QueueNamespaceID, a failover is in progress: the REST service has started using a different queue than the background processor.
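The rules in the previous paragraph can be expressed directly on a row of the pairing table. In this sketch, `QueueNamespaceID`, `RestServiceQueueNameSpaceID`, and `Failover` are the article's column names; the other columns and the two update methods are illustrative assumptions about how each side would modify the row.

```csharp
using System;

// Hypothetical row of the pairing table.
public sealed record QueuePairing(
    string PlugComputerId,
    string RestServiceId,
    string BackgroundProcessorId,
    int QueueNamespaceID,              // triplet the background processor is draining
    int RestServiceQueueNameSpaceID,   // triplet the REST service is sending to
    bool Failover)                     // whether failover is allowed for this pairing
{
    // The REST service has moved on while the background processor is still draining.
    public bool FailoverInProgress => RestServiceQueueNameSpaceID != QueueNamespaceID;

    // REST side: record the namespace it switched to, if failover is enabled.
    public QueuePairing WithRestServiceNamespace(int namespaceId) =>
        Failover
            ? this with { RestServiceQueueNameSpaceID = namespaceId }
            : throw new InvalidOperationException("Failover is disabled for this pairing.");

    // Processor side: record the queue it moved to after draining the previous one.
    public QueuePairing WithProcessorNamespace(int namespaceId) =>
        this with { QueueNamespaceID = namespaceId };
}
```

Each side writes only its own column, so the two never overwrite each other's progress; comparing the two columns is enough to tell whether a failover is still in progress.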

Another interesting way to use Azure is to combine it with Chef to achieve system-level provisioning at large scale for thousands of plug computers. Here is the operational requirement that calls for using Chef with Azure. If a plug computer resides in New York and the developer lives in California, how would the developer apply OS updates to the plug computer? One might be able to remote into a single plug computer, but imagine scaling this approach to thousands of them. What happens if the developer sold his interface program(s) to thousands of customers, and all of them need a security patch applied to their plug computers? You can solve this scaling problem with Chef and Azure.

Chef is a wonderful tool in the DevOps space that helps us achieve the configuration-as-code paradigm. In an ideal world, we could use Hosted Chef, where OpsCode hosts the server for us, and install the Chef client on each plug computer to fulfill the operational requirement mentioned above. However, Hosted Chef is cost prohibitive, so we considered a second option: hosting Chef Server on Azure ourselves, where the Chef Server software itself costs nothing. You can read how to set it up here: https://downloads.chef.io/chef-server/.

With the second option, we ran into another problem: to ensure high availability, we would have to pay $6 per month per client install. Managing 3,000 plug computers would therefore cost a whopping $18,000 per month. To avoid this, we considered a third option.

The Chef client can run standalone and periodically access REST services to download cookbooks, scripts, and executable interface programs. We store the cookbooks, scripts, and executables in Azure Blob Storage and use a web page to retrieve them. For example, one of the blob containers, called "cookbooks", is set up to host Chef cookbooks.

When the Chef client pulls cookbooks from blob storage through the web page (via wget), it needs to receive them as downloadable files. The concept is easy, but making a .NET page return a downloadable file requires some coding. Here is how it can be done:

DownloadBlob connects to the blob and downloads its contents into a memory stream, which ResponseFile then streams to the client, as shown here:

```csharp
using System;
using System.Collections.Specialized;
using System.IO;
using System.Threading;
using System.Web;
using Microsoft.WindowsAzure;                  // CloudStorageAccount (Storage Client 1.x)
using Microsoft.WindowsAzure.ServiceRuntime;   // RoleEnvironment
using Microsoft.WindowsAzure.StorageClient;    // CloudBlobClient, CloudBlobContainer, CloudBlockBlob

// Code-behind for the Cookbooks page. A Chef client downloads a file with e.g.
// wget "http://<your-host>/Cookbooks.aspx?blobpassword=<password>&file=<blob name>"
public partial class Cookbooks : System.Web.UI.Page
{
    private const string containerName = "cookbooks";

    protected void Page_Load(object sender, EventArgs e)
    {
        // Request.QueryString is already parsed. (Redirecting to the same URL when the
        // query string was missing, as the original did, caused an endless redirect loop.)
        NameValueCollection qscoll = Request.QueryString;

        // Accept the current or the previous password so the password can be rotated.
        string blobpassword = RoleEnvironment.GetConfigurationSettingValue("blobpassword");
        string oldblobpassword = RoleEnvironment.GetConfigurationSettingValue("oldblobpassword");
        string supplied = qscoll["blobpassword"];   // null when the parameter is missing

        bool authorized = !string.IsNullOrEmpty(supplied) &&
            (supplied == blobpassword || supplied == oldblobpassword);

        if (!authorized || !DownloadBlob(qscoll, containerName))
        {
            DownloadFailed();
        }
    }

    // Downloads the requested blob into memory and streams it to the client.
    private bool DownloadBlob(NameValueCollection qscoll, string containerName)
    {
        string filename = qscoll["file"];
        if (string.IsNullOrEmpty(filename))
        {
            return false;   // no file requested
        }

        try
        {
            // Parse() does not need the SetConfigurationSettingPublisher call
            // that CloudStorageAccount.FromConfigurationSetting() requires.
            var storageAccount = CloudStorageAccount.Parse(
                RoleEnvironment.GetConfigurationSettingValue("DataConnectionString"));
            CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();
            CloudBlobContainer blobContainer = blobClient.GetContainerReference(containerName);
            CloudBlockBlob blockBlob = blobContainer.GetBlockBlobReference(filename);

            using (var memStream = new MemoryStream())
            {
                blockBlob.DownloadToStream(memStream);
                fileDownload(filename, memStream);
            }
            return true;
        }
        catch (Exception ex)
        {
            // Fully qualified: inside a Page, "Trace" is the ASP.NET TraceContext.
            System.Diagnostics.Trace.TraceError("Blob download failed: " + ex.Message);
            return false;
        }
    }

    // Ends the request with a 500 status when authentication or the download fails.
    private void DownloadFailed()
    {
        Response.ClearHeaders();
        Response.ClearContent();
        Response.StatusCode = 500;
        Response.StatusDescription = "No file available to download";
        Response.Flush();
        throw new HttpException(500, "An internal error occurred in the Application");
    }

    // Not used by Page_Load; handy for creating the container on first deployment.
    private CloudBlobContainer GetContainer()
    {
        return SetContainerAndPermissions();
    }

    private CloudBlobContainer SetContainerAndPermissions()
    {
        try
        {
            var account = CloudStorageAccount.Parse(
                RoleEnvironment.GetConfigurationSettingValue("DataConnectionString"));
            CloudBlobContainer blobContainer =
                account.CreateCloudBlobClient().GetContainerReference(containerName);
            blobContainer.CreateIfNotExist();
            return blobContainer;
        }
        catch (Exception ex)
        {
            throw new Exception("Error while creating the containers: " + ex.Message, ex);
        }
    }

    private void fileDownload(string fileName, Stream memStream)
    {
        Page.Response.Clear();
        ResponseFile(Page.Request, Page.Response, fileName, memStream, 1024000);
    }

    // Streams _memStream in 10 KB packets, throttled to roughly _speed bytes per second.
    // An open-ended "Range: bytes=N-" header (what "wget -c" sends) resumes at byte N.
    public static bool ResponseFile(HttpRequest _Request, HttpResponse _Response,
                                    string _fileName, Stream _memStream, long _speed)
    {
        try
        {
            using (var br = new BinaryReader(_memStream))   // also closes _memStream
            {
                _Response.AddHeader("Accept-Ranges", "bytes");
                _Response.Buffer = false;

                long fileLength = _memStream.Length;
                long startBytes = 0;
                int pack = 10240;                                                 // bytes per write
                int sleep = (int)Math.Floor((double)(1000 * pack / _speed)) + 1;  // ms between writes

                string rangeHeader = _Request.Headers["Range"];
                if (rangeHeader != null)
                {
                    // Only the start offset is honoured: "bytes=500-999" is served from 500 to the end.
                    string[] range = rangeHeader.Split(new char[] { '=', '-' });
                    startBytes = Convert.ToInt64(range[1]);
                    if (startBytes >= fileLength)
                    {
                        _Response.StatusCode = 416;   // Range Not Satisfiable
                        _Response.AddHeader("Content-Range", "bytes */" + fileLength);
                        return false;
                    }
                    _Response.StatusCode = 206;
                    _Response.AddHeader("Content-Range",
                        string.Format("bytes {0}-{1}/{2}", startBytes, fileLength - 1, fileLength));
                }

                _Response.AddHeader("Content-Length", (fileLength - startBytes).ToString());
                _Response.AddHeader("Connection", "Keep-Alive");
                _Response.ContentType = "application/octet-stream";
                _Response.AddHeader("Content-Disposition",
                    "attachment;filename=" + HttpUtility.UrlEncode(_fileName, System.Text.Encoding.UTF8));

                // Seeking also rewinds the stream, which DownloadToStream leaves at its end.
                br.BaseStream.Seek(startBytes, SeekOrigin.Begin);
                long remaining = fileLength - startBytes;
                while (remaining > 0 && _Response.IsClientConnected)
                {
                    byte[] chunk = br.ReadBytes((int)Math.Min(pack, remaining));
                    if (chunk.Length == 0) break;   // unexpected end of stream
                    _Response.BinaryWrite(chunk);
                    remaining -= chunk.Length;
                    Thread.Sleep(sleep);             // crude bandwidth throttle
                }
            }
            return true;
        }
        catch (Exception)
        {
            return false;
        }
    }
}
```
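The page above honours only the start offset of a Range header, which is enough for resumed wget downloads. If other clients need full single-range support, a stricter parser could replace the `Split` call. Here is a sketch, assuming the three single-range forms `bytes=first-last`, `bytes=first-`, and `bytes=-suffixLength`; `ByteRange.TryParse` is a hypothetical helper, and returning false lets the caller ignore the header and send the whole file with a 200.

```csharp
using System;
using System.Globalization;

public static class ByteRange
{
    // Parses a single "bytes=..." range against a file of the given length.
    // On success, start and end are inclusive byte offsets clamped to the file.
    public static bool TryParse(string header, long length, out long start, out long end)
    {
        start = 0;
        end = 0;
        const string prefix = "bytes=";
        if (header == null || length <= 0 ||
            !header.StartsWith(prefix, StringComparison.OrdinalIgnoreCase))
            return false;

        string spec = header.Substring(prefix.Length).Trim();
        if (spec.IndexOf(',') >= 0) return false;        // multiple ranges: not supported
        int dash = spec.IndexOf('-');
        if (dash < 0) return false;
        string first = spec.Substring(0, dash);
        string last = spec.Substring(dash + 1);

        if (first.Length == 0)                            // "bytes=-N": the last N bytes
        {
            if (!TryDigits(last, out long suffix) || suffix == 0) return false;
            start = Math.Max(0L, length - suffix);
            end = length - 1;
            return true;
        }

        if (!TryDigits(first, out start) || start >= length) return false;   // unsatisfiable start
        if (last.Length == 0) { end = length - 1; return true; }             // "bytes=N-"
        if (!TryDigits(last, out end) || end < start) return false;
        end = Math.Min(end, length - 1);                                      // clamp to the file
        return true;
    }

    // Digits only: no sign, whitespace, or thousands separators.
    private static bool TryDigits(string s, out long value) =>
        long.TryParse(s, NumberStyles.None, CultureInfo.InvariantCulture, out value);
}
```

With this helper, `Content-Length` becomes `end - start + 1` and the `Content-Range` header reports `start`, `end`, and the file length.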

Once the Chef client on the plug computer receives a cookbook along with its supporting scripts and executables, it can upgrade the plug computer wherever it is, with virtually no limit on how many clients can be managed. Better yet, the cost is negligible.

Closing comment

We are now focusing our energy on building a platform on Azure technology for all developers alike. The platform offers monitoring, security, ease of use and configuration, scalability, and data continuity. Most of all, it allows developers to stay focused on building awesome interfaces to IoT devices instead of doing enterprise platform development.

History

3/25/2015 - Added missing pictures and diagrams

3/30/2015 - Added performance notes on the map view