In the previous post in my blog series on implementing continuous delivery with VSO, we got as far as configuring Release Management with a release path. In this post, we cover the application deployment stage where we’ll create the items to actually deploy the Contoso University application. In order to achieve this, we’ll need to create a component which will orchestrate copying the build to a temporary location on target nodes and then we’ll need to create PowerShell scripts to actually install the web files to their proper place on disk and run the DACPAC to deploy any database changes. Note that although RM supports PowerShell DSC, I’m not using it here and instead I’m using plain PowerShell. Why is that? It’s because for what we’re doing here – just deploying components – it feels like an unnecessary complication. Just because you can doesn’t mean you should…

Sort out Build

The first thing you are going to want to sort out is build. VSO comes with 60 minutes of bundled build which disappears in no time. You can pay for more by linking your VSO account to an Azure subscription that has billing activated or the alternative is to use your own build server. This second option turns out to be ridiculously easy and Anthony Borton has a great post on how to do this starting from scratch here. However, if you already have a build server configured, it’s a moment’s work to reconfigure it for VSO. From Team Foundation Server Administration Console, choose the Build Configuration node and select the Properties of the build controller. Stop the service and then use the familiar dialogs to connect to your VSO URL. Configure a new controller and agent and that’s it!

Deploying PowerShell Scripts

The next piece of the jigsaw is how to get the PowerShell scripts you will write to the nodes where they should run. Several possibilities present themselves amongst which is embedding the scripts in your Visual Studio projects. From a reusability perspective, this doesn’t feel quite right somehow and instead I’ve adopted and reproduced the technique described by Colin Dembovsky here with his kind permission. You can implement this as follows:

- Create folders called Build and Deploy in the root of your version control for ContosoUniversity and check them in.

- Create a PowerShell script in the Build folder called CodeDeployFiles.ps1 and add the following code:

    Param(
        [string]$srcPath = $env:TF_BUILD_SOURCESDIRECTORY,
        [string]$binPath = $env:TF_BUILD_BINARIESDIRECTORY,
        [string]$pathToCopy
    )

    try
    {
        # Copy the named folder from the build's sources directory to its binaries directory
        $sourcePath = "$srcPath\$pathToCopy"
        $targetPath = "$binPath\$pathToCopy"

        # Create the target folder if the build has not already done so
        if (-not (Test-Path $targetPath)) {
            mkdir $targetPath
        }

        xcopy /y /e $sourcePath $targetPath
        Write-Host "Done!"
    }
    catch {
        Write-Host $_
        exit 1
    }

- Check CodeDeployFiles.ps1 in to source control.

- Modify the process template of the build definition created in a previous post as follows:

2. Build > 5. Advanced > Post-build script arguments = -pathToCopy Deploy

2. Build > 5. Advanced > Post-build script path = Build/CodeDeployFiles.ps1

To explain, Post-build script path specifies that CodeDeployFiles.ps1 created above should be run and Post-build script arguments feeds in the -pathToCopy argument which is the Deploy folder we created above. The net effect of all this is that the Deploy folder and any contents get created as part of the build.
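One thing worth knowing about the script above: xcopy is a native executable, so by default a failed copy does not raise a PowerShell exception and the catch block will not fire for it. Here is a minimal sketch of one way to surface such failures by checking $LASTEXITCODE after the copy. The function name Copy-DeployFolder is hypothetical and not part of the original script, and the /i switch is my addition so that xcopy treats a missing target as a directory.

```powershell
# Hypothetical helper, not part of the original post-build script.
function Copy-DeployFolder {
    param(
        [Parameter(Mandatory = $true)] [string]$SourcePath,
        [Parameter(Mandatory = $true)] [string]$TargetPath
    )

    # /y overwrite without prompting, /e include subfolders (even empty ones),
    # /i treat a missing target as a directory rather than prompting.
    xcopy /y /e /i $SourcePath $TargetPath | Out-Null

    # xcopy returns 0 on success; a non-zero code means no files were copied
    # or an error occurred, so turn that into a terminating error.
    if ($LASTEXITCODE -ne 0) {
        throw "xcopy failed with exit code $LASTEXITCODE"
    }
}
```

Inside the try block this would be called as Copy-DeployFolder -SourcePath "$srcPath\$pathToCopy" -TargetPath "$binPath\$pathToCopy", so that a failed copy reaches the catch block and fails the build.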

Create a Component

In a multi-server world, we’d create a component in RM from Configure Apps > Components for each server that we need to deploy to since a component is involved in ensuring that the build is copied to the target node. Each component would then be associated with an appropriately named PowerShell script to do the actual work of installing/copying/running tests or whatever is needed for that node. Because we are hosting IIS and SQL Server on the same machine, we only actually need one component. We’re getting ahead of ourselves a little but a side effect of this is that we will use only one PowerShell script for several tasks which is a bit ugly. (Okay, we could use two components but that would mean two build copy operations which feels equally ugly.)

With that noted, create a component called Drop Folder and add a backslash (\) to Source > Builds with application > Path to package. The net effect, once the deployment has taken place, is a folder called Drop Folder on the target node containing a copy of the original drop folder’s contents. As long as we don’t need to create configuration variables for the component, it can be reused in this basic form. It probably needs a better name though.

Create a vNext Release Template

Navigate to Configure Apps > vNext Release Templates and create a new template called Contoso University\DAT>DQA based on the Contoso University\DAT>DQA release path. You’ll need to specify the build definition and check Can Trigger a Release from a Build. We now need to create the workflow on the DAT design surface as follows:

- Right-click the Components node of the Toolbox and Add the Drop Folder component.

- Expand the Actions node of the Toolbox and drag a Deploy Using PS/DSC action to the Deployment Sequence. Click the pen icon to rename to Deploy Web and Database.

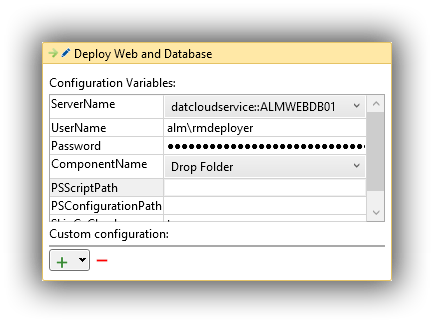

- Double click the action and set the Configuration Variables as follows:

ServerName = choose the appropriate server from the dropdown.

UserName = the name of an account that has permissions on the target node. I’m using the RMDEPLOYER domain account that was set up for Deployment Agents to use in agent based deployments.

Password = password for the UserName

ComponentName = choose Drop Folder from the dropdown.

SkipCaCheck = true

- The Actions do not display very well so a complete screenshot is not possible but it should look something like this (note SkipCaCheck isn’t shown):

At this stage, we can save the template and trigger a build. If everything is working, you should be able to examine the target node and observe a folder called C:\Windows\DtlDownloads\Drop Folder that contains the build.

Deploy the Bits

With the build now existing on the target node, the next step is to actually get the web files in place and deploy the database. We’ll do this from one PowerShell script called WebAndDatabase.ps1 that you should create in the Deploy folder created above. Every time you edit this and want it to run, do make sure you check it in to version control. To actually get it to run, we need to edit the Deploy Web and Database action created above. The first step is to add Deploy\WebAndDatabase.ps1 as the value of the PSScriptPath configuration variable. We then need to add the following custom configuration variables by clicking on the green plus sign:

destinationPath = C:\inetpub\wwwroot\CU-DAT

websiteSourcePath = _PublishedWebsites\ContosoUniversity.Web

dacpacName = ContosoUniversity.Database.dacpac

databaseServer = ALMWEBDB01

databaseName = CU-DAT

loginOrUser = ALM\CU-DAT

The first section of the script will deploy the web files to C:\inetpub\wwwroot\CU-DAT on the target node, so create this folder if you haven’t already. Obviously, we could get PowerShell to do this but I’m keeping things simple. I’m using functions in WebAndDatabase.ps1 to keep things neat and tidy and to make debugging a bit easier if I want to only run one function.
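For anyone who would rather have PowerShell create the folder, something like the following sketch would do it. The function name New-FolderIfMissing is hypothetical and isn’t part of WebAndDatabase.ps1 as shown in this post.

```powershell
# Hypothetical helper: creates the folder only if it does not already exist.
function New-FolderIfMissing {
    param(
        [Parameter(Mandatory = $true)] [string]$Path
    )

    if (-not (Test-Path -Path $Path -PathType Container)) {
        # -Force also creates any missing parent folders
        New-Item -Path $Path -ItemType Directory -Force | Out-Null
        Write-Verbose "Created $Path" -Verbose
    }
}
```

It could be called as New-FolderIfMissing -Path $destinationPath before any files are copied.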

The first function is as follows:

    function copy_web_files
    {
        # Fail fast if any of the configuration variables is missing
        if ([string]::IsNullOrEmpty($destinationPath) -or [string]::IsNullOrEmpty($websiteSourcePath) -or
            [string]::IsNullOrEmpty($databaseServer) -or [string]::IsNullOrEmpty($databaseName))
        {
            throw "A required parameter is missing."
        }

        Write-Verbose "#####################################################################" -Verbose
        Write-Verbose "Executing Copy Web Files with the following parameters:" -Verbose
        Write-Verbose "Destination Path: $destinationPath" -Verbose
        Write-Verbose "Website Source Path: $websiteSourcePath" -Verbose
        Write-Verbose "Database Server: $databaseServer" -Verbose
        Write-Verbose "Database Name: $databaseName" -Verbose

        # $ApplicationPath is not defined in this script; it is assumed to point at the copied build on the target node
        $sourcePath = "$ApplicationPath\$websiteSourcePath"

        # Clear out the current web files, then copy the new set over
        Remove-Item "$destinationPath\*" -recurse
        Write-Verbose "Deleted contents of $destinationPath" -Verbose

        xcopy /y /e $sourcePath $destinationPath
        Write-Verbose "Copied $sourcePath to $destinationPath" -Verbose

        # Swap the tokens in the copied Web.config for this stage's values
        $webDotConfig = "$destinationPath\Web.config"
        (Get-Content $webDotConfig) | Foreach-Object {
            $_ -replace '__DATA_SOURCE__', $databaseServer `
               -replace '__INITIAL_CATALOG__', $databaseName
        } | Set-Content $webDotConfig
        Write-Verbose "Tokens in $webDotConfig were replaced" -Verbose
    }

    copy_web_files

The code clears out the current set of web files and then copies the new set over. The tokens in Web.config get changed in the copied set so the originals can be used for the DQA stage. Note how I’m using Write-Verbose statements with the -Verbose switch at the end. This causes the RM Deployment Log grid to display a View Log link in the Command Output column. Very handy for debugging purposes.
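To see the token swap in isolation, here is a small self-contained sketch that runs the same replacement pipeline against a temporary file. The connection string is an assumption made up for illustration and is not the actual Contoso University Web.config; the server and database values are the ones used for DAT above.

```powershell
# Sample values; in the real deployment these come from RM configuration variables.
$databaseServer = 'ALMWEBDB01'
$databaseName   = 'CU-DAT'

# Write a one-line stand-in for Web.config to a temporary file.
# The connection string shape is an assumption for illustration only.
$webDotConfig = Join-Path ([System.IO.Path]::GetTempPath()) 'Web.config.sample'
Set-Content -Path $webDotConfig -Value 'Data Source=__DATA_SOURCE__;Initial Catalog=__INITIAL_CATALOG__;Integrated Security=True'

# Same replacement pipeline as copy_web_files. The parentheses make sure the
# file is read completely before Set-Content starts writing to it.
(Get-Content $webDotConfig) | ForEach-Object {
    $_ -replace '__DATA_SOURCE__', $databaseServer `
       -replace '__INITIAL_CATALOG__', $databaseName
} | Set-Content $webDotConfig

Get-Content $webDotConfig
# Data Source=ALMWEBDB01;Initial Catalog=CU-DAT;Integrated Security=True
```

Bear in mind that -replace treats its first argument as a regular expression. These token names contain no special characters so they match literally, but a token containing characters such as a dot or brackets would need escaping.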

The second function deploys the DACPAC:

    function deploy_dacpac
    {
        # Fail fast if any of the configuration variables is missing
        if ([string]::IsNullOrEmpty($dacpacName) -or [string]::IsNullOrEmpty($databaseServer) -or
            [string]::IsNullOrEmpty($databaseName))
        {
            throw "A required parameter is missing."
        }

        Write-Verbose "#####################################################################" -Verbose
        Write-Verbose "Executing Deploy DACPAC with the following parameters:" -Verbose
        Write-Verbose "DACPAC Name: $dacpacName" -Verbose
        Write-Verbose "Database Server: $databaseServer" -Verbose
        Write-Verbose "Database Name: $databaseName" -Verbose

        # Build the command as a single line so that Invoke-Expression runs it as one statement
        $cmd = "& 'C:\Program Files (x86)\Microsoft SQL Server\120\DAC\bin\sqlpackage.exe' " +
               "/a:Publish /sf:'$ApplicationPath\$dacpacName' " +
               "/tcs:'server=$databaseServer;initial catalog=$databaseName'"

        Invoke-Expression $cmd | Write-Verbose -Verbose
    }

    deploy_dacpac

The code is simply building the command to run sqlpackage.exe – pretty straightforward. Note that the script is hardcoded to SQL Server 2014 – more on that below.
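As a possible way around the hardcoded path, the sketch below looks for sqlpackage.exe under every version folder and picks the highest-numbered one. The function name Find-SqlPackage is hypothetical, and the sketch assumes that other SQL Server versions use the same version\DAC\bin layout as the 120 folder above, which I haven’t verified for every release.

```powershell
# Hypothetical helper: returns the full path of the newest sqlpackage.exe found
# under $Root, or nothing if none is installed there.
function Find-SqlPackage {
    param(
        [string]$Root = "${env:ProgramFiles(x86)}\Microsoft SQL Server"
    )

    # Assumes a <version>\DAC\bin layout, as in the 120 path used by the script.
    Get-ChildItem -Path "$Root\*\DAC\bin\sqlpackage.exe" -ErrorAction SilentlyContinue |
        Where-Object { $_.Directory.Parent.Parent.Name -match '^\d+$' } |
        Sort-Object { [int]$_.Directory.Parent.Parent.Name } -Descending |
        Select-Object -First 1 -ExpandProperty FullName
}
```

The result could then be checked for null and used in place of the literal path when building $cmd.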

The final function deals with the Create login and database user.sql script that lives in the Scripts folder of the ContosoUniversity.Database project. This script ensures that the necessary SQL Server login and database user exist and is tokenised so it can be used in different stages – see this article for all the details.

    function run_create_login_and_database_user
    {
        # Fail fast if any of the configuration variables is missing
        if ([string]::IsNullOrEmpty($loginOrUser) -or [string]::IsNullOrEmpty($databaseServer) -or
            [string]::IsNullOrEmpty($databaseName))
        {
            throw "A required parameter is missing."
        }

        Write-Verbose "#####################################################################" -Verbose
        Write-Verbose "Executing Run Create Login and Database User with the following parameters:" -Verbose
        Write-Verbose "Login or User: $loginOrUser" -Verbose
        Write-Verbose "Database Server: $databaseServer" -Verbose
        Write-Verbose "Database Name: $databaseName" -Verbose

        # Swap the tokens in the SQL script for this stage's values
        $scriptName = "$ApplicationPath\Scripts\Create login and database user.sql"
        (Get-Content $scriptName) | Foreach-Object {
            $_ -replace '__LOGIN_OR_USER__', $loginOrUser `
               -replace '__DB_NAME__', $databaseName
        } | Set-Content $scriptName
        Write-Verbose "Tokens in $scriptName were replaced" -Verbose

        # Run the script against the target server
        $cmd = "& 'sqlcmd' /S $databaseServer /i '$scriptName' "
        Invoke-Expression $cmd | Write-Verbose -Verbose
        Write-Verbose "$scriptName was executed against $databaseServer" -Verbose
    }

    run_create_login_and_database_user

The tokens in the SQL script are first swapped for passed-in values and then the code builds a command to run the script. Again, pretty straightforward.
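An alternative to building a string for Invoke-Expression is to pass the arguments to sqlcmd as an array, which keeps values containing spaces intact without any manual quoting. This is a sketch rather than what the script above does. The function name Get-SqlcmdArguments is hypothetical, and the -b switch is my addition so that a failing SQL script produces a non-zero exit code.

```powershell
# Hypothetical helper: builds the sqlcmd argument list as an array so that
# values containing spaces stay intact without any manual quoting.
function Get-SqlcmdArguments {
    param(
        [Parameter(Mandatory = $true)] [string]$DatabaseServer,
        [Parameter(Mandatory = $true)] [string]$ScriptName
    )

    # -S server, -i input file, -b return a non-zero exit code if the script fails
    return @('-S', $DatabaseServer, '-i', $ScriptName, '-b')
}

# Usage sketch (requires sqlcmd on the target node):
# $sqlcmdArgs = Get-SqlcmdArguments -DatabaseServer $databaseServer -ScriptName $scriptName
# & sqlcmd $sqlcmdArgs | Write-Verbose -Verbose
# if ($LASTEXITCODE -ne 0) { throw "sqlcmd failed with exit code $LASTEXITCODE" }
```

PowerShell passes each element of the array to the native command as a separate argument, so the script name with its spaces arrives as one value.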

Loose Ends

At this stage, you should be able to trigger a build and have all of the components deploy. In order to fully test that everything is working, you’ll want to create and configure a web application in IIS – this article has the details.

To achieve the stated aim of an initial pipeline with both a DAT and DQA stage, the final step is to actually configure all of the above for DQA. It’s essentially a repeat of DAT so I’m not going to describe it here but do note that you can copy and paste the Deployment Sequence.

One remaining aspect to cover is the subject of script reusability. With RM-TFS, there is an out-of-the-box way to achieve reusability with tools and actions. This isn’t available in RM-VSO and instead potential reusability comes via storing scripts outside of the Visual Studio solution. This needs some thought though since the all-in-one script used above (by necessity) only has limited reusability and in a non-demo environment, you would want to consider splitting the script and co-ordinating everything from a master script. Some of this would happen anyway if the web and database servers were distinct machines but there is probably more that should be done. For example, tokens that are to be swapped-out are hard-coded in the script above which limits reusability. I’ve left it like that for readability but this certainly feels like the sort of thing that should be improved upon. In a similar vein, the path to sqlpackage.exe is hard coded and thus tied to a specific version of SQL Server and probably needs addressing.
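As a sketch of how the hard-coded tokens might be lifted out, the function below takes the tokens as a hashtable of name and value pairs and applies them to any file. The name Set-FileTokens is hypothetical. Unlike the -replace operator used above, it uses String.Replace, which is a literal and case-sensitive replacement.

```powershell
# Hypothetical helper: replaces every token in $Tokens (name -> value) in the given file.
function Set-FileTokens {
    param(
        [Parameter(Mandatory = $true)] [string]$Path,
        [Parameter(Mandatory = $true)] [hashtable]$Tokens
    )

    # Read the whole file first (the parentheses), then rewrite it line by line.
    (Get-Content $Path) | ForEach-Object {
        $line = $_
        foreach ($name in $Tokens.Keys) {
            # String.Replace is a literal (non-regex) replacement
            $line = $line.Replace($name, [string]$Tokens[$name])
        }
        $line
    } | Set-Content $Path
}
```

The Web.config step in copy_web_files could then become Set-FileTokens -Path $webDotConfig -Tokens @{ '__DATA_SOURCE__' = $databaseServer; '__INITIAL_CATALOG__' = $databaseName }, with the hashtable supplied by a master script rather than written into the function.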

In the next post, we’ll look at executing automated web tests. In the meantime, if you have any thoughts on great ways to use PowerShell with RM-VSO, please do share in the comments.

Cheers – Graham

The post Continuous Delivery with VSO: Application Deployment with Release Management appeared first on Please Release Me.