A continuation of a series of blog entries that documents the design and implementation process of a library. The library is called, Network Alchemy[^]. Alchemy performs automated data serialization with compile-time reflection. It is written in C++ using template meta-programming.

Benchmark testing and code profiling is a phase that can often be avoided for the majority of development. That is, if you develop wisely. Selecting appropriate data structures and algorithms for the task at hand. Avoiding pre-mature optimization is about not getting caught up on the minute details before you even have a working system. That doesn't mean to throw out good decision making altogether. Well, I have reached the point in Alchemy, where I have a feature-set that is rich enough to make this a useful library. This entry chronicles my discoveries for how well Alchemy performs and the steps I have taken to find and improve the areas where improvement has been required.

Benchmark Tests

To get started, I simply wanted to capture the performance of a different collection of the Alchemy supported types in a few arranged structures.

Each of the tests are run across a pre-allocated buffer of 512 MB. The structures for each test will extract as many messages as they can from the 512 MB buffer. Each message that is extracted will:

- Deserialize the message from the buffer

- Convert the byte-order

- Serialize the message into a different buffer

This is a summary of the size of each structure and the number of iterations that were performed over the 512 MB block of memory:

| Test Name | Struct Size | Iterations |

| basic | 14 | 28247922 |

| packed | 7 | 76695844 |

| unaligned | 19 | 28256363 |

| complex | 86 | 6242685 |

| array | 1024 | 524288 |

| no_conversion | 64 | 8388608 |

Here is a brief overview of the tests that I selected to exercise the basic features of Alchemy. I will also continue to add more tests over time.

1) Basic

This message structure contains a collection of fundamental types, where all of the types are aligned on a minimum of a two-byte boundary.

C++

struct Basic

{

int32_t i32;

uint32_t u32;

int16_t i16;

uint16_t u16;

char ch;

uint8_t u8;

};

C++

HG_BEGIN_FORMAT(Basic,

HG_DATUM(int32_t, i32),

HG_DATUM(uint32_t, u32),

HG_DATUM(int16_t, i16),

HG_DATUM(uint16_t, u16),

HG_DATUM(char, ch),

HG_DATUM(uint8_t, u8)

);

2) Packed

The PackedBits test is not complete. Currently, I only define the bit-fields for Alchemy because I wanted to see how well they performed for basic serialization. Also, all of my test implementations follow a similar pattern with a simple loop. I will need to devise a test that accesses the different bit-field values from these structures to test the access speed of the data. For now, they are simply processed in bulk as integers.

C++

struct Packed

uint32_t set_a;

uint16_t set_b;

uint8_t set_c;

};

C++

HG_BEGIN_PACKED (uint32_t, SetA)

HG_BIT_FIELD (0, fifteen, 15)

HG_BIT_FIELD (1, two, 2)

HG_BIT_FIELD (2, five, 5)

HG_BIT_FIELD (3, eight, 8)

HG_BIT_FIELD (4, one, 1)

HG_END_PACKED

HG_BEGIN_PACKED (uint16_t, SetB) HG_BEGIN_PACKED (uint8_t, SetC)

HG_BEGIN_FORMAT(Packed,

HG_DATUM(SetA, set_a),

HG_DATUM(SetB, set_b),

HG_DATUM(SetC, set_c)

);

3) Unaligned

While I have seen most message structures have taken care to try to properly align the different parameter sizes on proper boundaries, it is still possible to receive data that is not properly aligned in memory. This test purposely offsets the alignment of the data structure so none of the fields are properly aligned.

For those that are unaware, the x86 architecture is very accommodating and will not crash for unaligned memory. It will happily perform two partial reads, then OR your data together. Of course, there is a huge speed penalty to rely on this behavior.

C++

struct Unaligned

{

char ch;

uint32_t u32_a;

uint32_t u32_b;

uint32_t u32_c;

int16_t i16_a;

int16_t i16_b;

int16_t i16_c;

};

C++

HG_BEGIN_FORMAT(Unaligned,

HG_DATUM(char, ch),

HG_DATUM(uint32_t, u32_a),

HG_DATUM(uint32_t, u32_b),

HG_DATUM(uint32_t, u32_c),

HG_DATUM(int16_t, i16_a),

HG_DATUM(int16_t, i16_b),

HG_DATUM(int16_t, i16_c)

);

4) Complex

Complex is the test that contains a bit of everything, except a vector. All of the previous types are included in the format as well as an extra 32-bit integer. Furthermore, the Basic structure defined from a previous test is defined as an array. The current number of elements in the array is 10. This can be changed at the top of the benchmark type definition file.

C++

struct Complex

{

uint32_t seq;

Basic basic[k_complex_basic_count];

Packed bits;

Unaligned unaligned;

};

C++

HG_BEGIN_FORMAT(Complex,

HG_DATUM(uint32_t, seq),

HG_ARRAY(Basic, k_complex_basic_count, basic),

HG_DATUM(Packed, bits),

HG_DATUM(Unaligned, unaligned)

);

5) Array

This array test was added fairly recently. It contains a single field that is an array of 32-bit integers. The current size of this array is 256 elements. Similar to the array in the complex test, this value can be changed by adjusting the global variable.

C++

struct Array

{

uint32_t items[k_array_test_count];

};

C++

HG_BEGIN_FORMAT(Array_test,

HG_ARRAY(uint32_t, k_array_test_count, items)

);

6) No Conversion

This scenario contains 16 individual 32-bit integers, and the byte-order conversion step is omitted from the test. However, the data is still read in, copied, then written out to a separate buffer. As you will see in the results, this is one that Alchemy has a lot of room for improvement.

C++

struct NoConversion

{

uint32_t ch_0;

uint32_t ch_1;

uint32_t ch_14;

uint32_t ch_15;

};

C++

HG_BEGIN_FORMAT(NoConversion,

HG_DATUM(uint32_t, ch_0),

HG_DATUM(uint32_t, ch_1),

HG_DATUM(uint32_t, ch_14),

HG_DATUM(uint32_t, ch_15)

);

What a Waste of Time!

I completed the first set of features, and even received feedback from a production environment and used it to improve my design and implementation. It was time to create some benchmarks. I wanted to verify that I created something unique and useful, and that any trade-offs that existed were well worth the cost.

Alchemy was designed to be very simple. It should be simple to define a packed buffer format for binary wire transfer, portably and safely between a variety of different computer architectures. Maintainability was the most valued quality for this library. For those qualities, I felt it would easily be worth a 10% premium over a convoluted and hand-written solution that was possibly more efficient.

I was not prepared for the results that I received the first time I ran my 4 simple benchmark tests.

Denial led me to run the same program without any changes at least three or four more times, "It'll straighten itself out, right?!"

What?

The results were consistent each and every time.

I calmly stood up from my desk, said out loud "What a f***ing waste of 18 months!", and I walked away in disgust.

Alchemy was over 100 times slower than the naïve implementations that I wrote by hand. 100 time slower than the type of implementations that I had grown sick of maintaining for at least 10 years.

Take a Walk, Breathe, Relax

I admit, I got a little extremely worked up. I was anticipating great things. I gave myself a little bit of room for disappointment just-in-case Alchemy was slower, but that margin was only 10%, not 2 orders of magnitude. Cognitive Dissonance doesn't sit well with me. So the new relaxed persona that I brought back to my desk, sat down and simply dug in to see what could possibly be the issue.

Was it a Debug Build?

No.

I already experienced that in the first phase of my grief. I did see that I had initially compiled it in debug then watched and waited, and waited as it slowly chugged through the tests. Then I thought "woah, I can't wait to see how long the hand-written version takes." I blinked, and it was done.

Yes, debug builds of Alchemy are extremely slow because of all of the layers of one-statement abstractions. I will give more details in the summary.

I reconfigured for release, and I quickly worked passed denial. I already told you about my experience with anger.

Like Comparing Apples and Oranges...

I really can't tell you what happened to both bargaining and depression. They're probably repressed memories now. Either way, when I returned, I had already accepted that I would need to investigate. I have also learned through years of experience that there is always a better way. Better in this case would mean faster.

What Did I Find?

After only five minutes into my search, I recognized that I had brought dynamic-memory of the buffers completely into the Hg::Message object. I was using completely static buffers on the stack for the hand-written variant. I reasoned that had to account for a decent portion of the discrepancies. So yes, I basically tested a comparison of two different circumstances, apples vs. oranges, static vs. dynamic memory.

What Did I Do?

I did not have any mechanism in place to actually allow the user to provide the buffer for the Hg::Message to work with. A use case example would be a buffer stored on the stack that the caller wanted the packed result to be copied directly into. I did have the concept of a Hg::StoragePolicy, which allows the user to manage the source of allocated memory as well as provide rules for how to interact with buffers. However, to implement that solution would be a kludge that would result in an awkward API for the user.

I decided to simply overload the data member function of the message object. This second version would allow the caller to pass in a buffer and it's size. With a few internal adjustments to the Hg::Message implementation, I was able to make both versions transparently call the function that performs all of the actual work.

It turned out that I did need to create a specialized Hg::StoragePolicy for static data and a specialized Hg::MessageBuffer implementation. However, with the overloaded member to accept static buffers supplied by the caller, I saw an immediate increase in performance, an order of magnitude to be precise.

Fantastic! Alchemy is only 10 times slower than the naïve approach. Ignorance truly is bliss, because I was thinking "Hey, it could be worse! Like, 100x as slow."

A Closer Inspection

After comparing the relative percentage differences in performance, it was clear my BitList implementation was absolutely killing performance. It was like an anchor holding back a drag-racer. Since I used BitList types in two of my tests, I decided to improve that implementation next.

This is the teaser, the full-details will be revealed in the write up for my third, and current implementation in use for the BitList type. Along the way, I also decided to rename it PackedBit. Because essentially that is what the data-type does, and it helps separate itself from the notion of bit-fields altogether.

I discovered the virtual anchor that was halting performance was the growing collection of references that were added to the PackedBits structure. There was one new reference for each sub-field that was added. I allow for a maximum of 32 sub-fields in the PackedBits structure. Therefore, the amount of unique data fields packed into a single structure was directly related to the decrease in performance.

After I resolved this issue, things were starting to look up again, because now I was only 4x slower. In percentages, 400% slower is 40x slower than I was expecting and hoping.

Time to Profile

You know you are in the optimization phase when you turn to a code profiler to determine what your program is spending all of its time doing. It turns out, the majority of the time for both runs is spent in standard library calls, especially ::memcpy. That wasn't a big surprise to me, although I was surprised that the amount of time was 60%.

Excluding standard calls and digging deeper, what did I find?

- Copies, lots of copies; by the copy constructor no-less.

- Empty object creation and initialization for fields that were never used.

- More copies. This time by other methods of duplicating data in Alchemy.

There Was a Single Cause for All Three Inefficiencies

What was the cause you ask?

Stupidity? No, and that's harsh.

More like ignorance, or not completely understanding a language feature. There were a few cases of oversight as well.

My Use of References

I like references, because they simplify code in many circumstances. Just like pointers, they have limitations and some scenarios to be aware of. However, if your data will live on the stack while it is passed into a function as a parameter, they are fantastic. I have even converted verified pointers to references to make my syntax and code cleaner.

Now realize that a reference looks and behaves exactly like a pointer in hardware. It is simply a bit of syntactic sugar that eases the semantics of our code. I was well aware of this during my development.

My slip up occurred on the template functions that I created to request fields by index. The compiler wasn't happy enough with me simply passing in a single number with no parameters to deduce which template instantiation I was actually after. So I relented, and added an unused parameter to the function declaration. I have noticed that in this context pointers-to-the-type were generally used.

I was stubborn and stuck with the references, because I like them better when I can use them. I initialized my code with a default instance of the field-type, and moved on to the next task. Here is the problem. A Datum entry is defined similarly to this inside of a message format structure:

C++

typedef

Hg::detail::DeduceProxyType

< 0,

format_type

>::type Proxylen;

typedef Proxylen::datum_type datum_len;

Proxylen len;

datum_len& FieldAtIndex(const datum_len&)

{

return *static_cast<datum_len *>(&len);

}

There are a few typedefs that simplify the declarations for data types and functions. One of them is the named-variable the user interacts with, which is actually a proxy object. Then there is a function called FieldAtIndex that facilitates the compile-time reflection, and allows the address of the field for this index in the TypeList to be returned. For a uint32_t, like in this example, the default constructor will basically create a zero filled buffer when called by the parent container.

My reasoning failed to account for the large nested structure types and arrays for which I added support. FieldAtIndex was another function that was reported as a bottle-neck by my profiler. When I stepped through the code in the debugger I realized my mistake.

References cannot be NULL. When I would instantiate the function as shown in the example below, a temporary object was being created, always. This is very inefficient for something that is never even referenced.

C++

template< size_t IDX>

Datum<IDX, format_type>& FieldAt()

{

typedef Datum < IDX,

format_type> datum_type_t;

return this_type::FieldAtIndex(datum_type_t());

}

Now I understand why pointers are used in this context. I modified my implementation to use a pointer in this declaration and I also changed the way this function is invoked.

C++

datum_len& FieldAtIndex(const datum_len*)

{ }

template< size_t IDX>

Datum<IDX, format_type>& FieldAt()

{

return this_type::FieldAtIndex((datum_type_t*)0);

}

I Search for Any Unnecessary Copies

I fired up the benchmark test again, and saw another enormous boost in performance. I continued to comb through the code looking for inefficiencies, then running the tests. Slowly continuing to improve the overall performance. Each iteration I made sure that I assigned values to references if they were only temporaries, or were going to be passed into another function that requires a reference. I found a handful of cases. These were primarily located in my specialized implementations of the byte_order, pack, and unpack calls.

Finally, I Could See Alchemy's Potential

I was much more pleased with the results after all of the adjustments that I made above. Some of them I was directed towards with the help of a profiler, others were realizations of inefficient techniques as I was perusing my code.

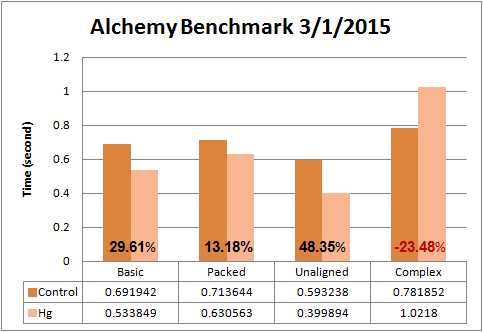

These are the first benchmarks that I released:

Three of the four tests perform very well now. The only thing that troubled me was the nested type fields were consistently 25%-33% slower. This is the performance that I lived with for the next few months. I figured that I would revisit optimizations after some time away from these improvements. The benchmark/optimization process up to this point was probably about 3 weeks.

One Last Change

For about four months, I just could not improve the performance of nested types beyond about 25% slower than the hand-written implementation. I tried many modifications, hoping to find something. As I started writing this article, I briefly looked over my code once more while adding a few more tests, and I found it!

There was one location where I was making an unnecessary copy, rather than taking a reference. This code is located in the byte-order conversion details. The functor that I create an pass into the ForEachType meta-function is initialized with an input and accepting output message. I was making a copy of the const input message rather than storing it as a reference.

The difference in changes is shown below:

C++

const from_message_type input;

explicit

ByteOrderConversionFunctor(

const from_message_type& from,

to_message_type& to)

: input(from)

, output(to)

{ }

const from_message_type& input;

Generally, the performance is comparable if not better than the hand-written implementation.

Alchemy FTW!

Current Results

This test reveals an area where Alchemy will need to focus at some point in the future. The extra processing that is performed just for the copy adds an enormous amount of overhead.

Slow Debug Mode

As far as debug-mode. Processing large sets of data is extremely slow because of all of the layers of abstraction. Most of the layers simply disappear as variables are directly assigned inline and the code becomes compiled almost to a level of a dedicated hand-optimized solution for each new type.

I have plans to make it simple to generate an Alchemy library that contains message data types and their conversion functions. These objects could then be compiled into a release build for use when debugging other regions of your applications.

Summary

I realize that the benchmarks that I have created are not a comprehensive test of the library. That is why I still have a few doubts about making claims of discovering the process of Cold Fusion for software. As a suggestion, one of my colleagues suggested I simply claim to have discovered Luke-warm Fusion for now. I would like to add quite a few more tests, as well as include some examples that are adapted from real-world situations. I will continue to update the status on GitHub as well as any notable improvements here at Code of the Damned.

I am working on a dedicated landing page for Alchemy to consolidate all of the information that I have catalogued up to this point. I will also place the latest performance results there when I activate that page.