Introduction

FlatMapper is a library for importing data from, and exporting data to, plain text files.

Plain text files are still widely used on legacy systems and remain a favorite format for human-to-system interfaces. This project was born from a need to read and write data from plain text files. I needed a lightweight module that would do just that, with no needless extra weight. Since most of the libraries I found at the time were either code-intrusive or had extra dependencies, I decided to write my own.

My goal was a library with a nice fluent API that is simple to use, fast, and has minimal dependencies and dependents. It must work with any POCO and should not be bloated with features that are not part of the core. I had a great time developing it (I still do).

Since the project that originated this library, I have already used it on two other projects.

Key Features

- Fast - Uses Static Reflection and Dynamic methods

- LINQ Compatible

- Supports character-delimited and fixed-length files

- Non-intrusive - You don't have to change your code. Any POCO will work

- No external dependencies

- Iterative reads - Doesn't need to load the entire file into memory

- Multi-line support (only for character-delimited, quoted fields)

- Nullable support

- Enum support

- Virtually any type is supported through FieldValueConverters

- Fluent Interface

- Per line/record Error handling

- Simple to use

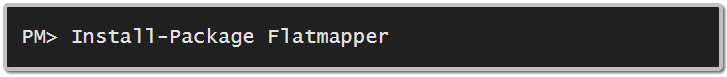

How to Install

To install FlatMapper, run the following command in the Package Manager Console:

    PM> Install-Package FlatMapper

How to Use

Before reading from or writing to files, we need to specify the layout of the file. This only needs to be done once.

Imagine the following scenario: we need to read and write instances of this class from and to text files:

    public class TestObject
    {
        public int Id { get; set; }
        public string Description { get; set; }
        public int? NullableInt { get; set; }
        public Gender? NullableEnum { get; set; }
        public DateTime Date { get; set; }
    }

In the following sections, you'll find out how to set up the file layout for both fixed-length and character-delimited files.

Fixed Length Layout

    var layout = new Layout<TestObject>.FixedLengthLayout()
        .HeaderLines(1)
        .WithMember(o => o.Id, set => set.WithLength(5).WithLeftPadding('0'))
        .WithMember(o => o.Description, set => set.WithLength(25).WithRightPadding(' '))
        .WithMember(o => o.NullableInt, set => set.WithLength(5).AllowNull("=Null").WithLeftPadding('0'))
        .WithMember(o => o.NullableEnum, set => set.WithLength(10).AllowNull("======NULL").WithLeftPadding(' '))
        .WithMember(o => o.Date, set => set.WithLength(19).WithFormat(new CultureInfo("pt-PT")));
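
To make the widths and padding in the layout above concrete, here is a minimal sketch of what one record line could look like. It does not use FlatMapper and does not claim to reproduce its internals: `FixedLengthSketch`, `FormatLine`, the string-typed enum argument, and the explicit `dd/MM/yyyy HH:mm:ss` date pattern are all assumptions made for illustration.

```csharp
using System;
using System.Globalization;

public static class FixedLengthSketch
{
    // Hypothetical helper (not part of FlatMapper): builds one fixed-length line
    // using the same field widths and padding characters as the layout above.
    public static string FormatLine(int id, string description, int? nullableInt,
                                    string nullableEnum, DateTime date)
    {
        // Id: 5 characters, left-padded with '0'
        string idField = id.ToString(CultureInfo.InvariantCulture).PadLeft(5, '0');
        // Description: 25 characters, right-padded with spaces
        string descriptionField = description.PadRight(25, ' ');
        // NullableInt: 5 characters, or the null marker "=Null"
        string intField = nullableInt.HasValue
            ? nullableInt.Value.ToString(CultureInfo.InvariantCulture).PadLeft(5, '0')
            : "=Null";
        // NullableEnum: 10 characters, or the null marker "======NULL"
        string enumField = (nullableEnum ?? "======NULL").PadLeft(10, ' ');
        // Date: assumed pattern that always yields 19 characters
        string dateField = date.ToString("dd/MM/yyyy HH:mm:ss", CultureInfo.InvariantCulture);

        return idField + descriptionField + intField + enumField + dateField;
    }

    public static void Main()
    {
        string line = FormatLine(7, "First item", null, "Male",
                                 new DateTime(2015, 3, 1, 14, 30, 0));
        Console.WriteLine(line);
        Console.WriteLine(line.Length); // 5 + 25 + 5 + 10 + 19 = 64
    }
}
```

The sketch does not truncate values that exceed their field width; it only shows how every record ends up with the same length.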

Delimited Layout

    var layout = new Layout<TestObject>.DelimitedLayout()
        .WithDelimiter(";")
        .WithQuote("\"")
        .HeaderLines(1)
        .WithMember(o => o.Id, set => set.WithLength(5))
        .WithMember(o => o.Description, set => set.WithLength(25))
        .WithMember(o => o.NullableInt, set => set.AllowNull("NULL"))
        .WithMember(o => o.NullableEnum, set => set.AllowNull("NULL"))
        .WithMember(o => o.Date, set => set.WithFormat(new CultureInfo("pt-PT")));

With this setup, it is also possible to have multi-line fields, as long as they are quoted.
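
Why quoting is what makes multi-line fields possible can be shown with a small stand-alone sketch. This is not FlatMapper's parser; `QuotedFieldSketch` and `Parse` are hypothetical names, and the code assumes a single-character delimiter and quote and ignores escaped quotes.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class QuotedFieldSketch
{
    // Hypothetical helper (not FlatMapper's parser): splits text into records and fields.
    // Delimiters and line breaks that appear inside quotes stay part of the field value.
    public static List<List<string>> Parse(string text, char delimiter = ';', char quote = '"')
    {
        var records = new List<List<string>>();
        var fields = new List<string>();
        var current = new StringBuilder();
        bool inQuotes = false;

        foreach (char c in text)
        {
            if (c == quote)
            {
                inQuotes = !inQuotes;            // toggle state; the quote itself is dropped
            }
            else if (c == delimiter && !inQuotes)
            {
                fields.Add(current.ToString());  // end of field
                current.Clear();
            }
            else if (c == '\n' && !inQuotes)
            {
                fields.Add(current.ToString());  // end of field and end of record
                current.Clear();
                records.Add(fields);
                fields = new List<string>();
            }
            else if (c != '\r' || inQuotes)
            {
                current.Append(c);               // ordinary character (or quoted line break)
            }
        }

        // Flush the last record when the text does not end with a line break.
        if (current.Length > 0 || fields.Count > 0)
        {
            fields.Add(current.ToString());
            records.Add(fields);
        }
        return records;
    }

    public static void Main()
    {
        var records = Parse("1;\"line one\nline two\";NULL\n2;plain;NULL");
        Console.WriteLine(records.Count);        // 2
        Console.WriteLine(records[0][1]);        // prints "line one" and "line two" on two lines
    }
}
```

Without the quotes, the line break in the second field would be indistinguishable from the end of a record.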

Reading and Writing

Reading is iterative: data is read only when a new item is requested. This avoids loading the entire file into memory before parsing it; data is parsed on demand.

This library builds on the `Stream` class of the core framework. This way, there are no restrictions on the encoding, and freeing resources is outside the scope of the library: the caller is responsible for disposing of the stream.

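    // Verbatim string (@) so the backslashes in the path are not treated as escapes
    using (var fileStream = File.OpenRead(@"c:\temp\data.txt"))
    {
        var flatfile = new FlatFile<TestObject>(layout, fileStream);
        foreach (var objectInstance in flatfile.Read())
        {
            // each objectInstance is parsed only when the loop requests it
        }
    }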

    // Verbatim string (@) so the backslashes in the path are not treated as escapes
    using (var fileStream = File.OpenWrite(@"c:\temp\data.txt"))
    {
        var flatfile = new FlatFile<TestObject>(layout, fileStream);
        flatfile.Write(listOfObjects);
    }
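
The on-demand reading described in this section relies on a general C# technique: an iterator that yields one item at a time. The sketch below illustrates that technique with plain lines instead of mapped objects. It is not FlatMapper code; `LazyReadSketch`, `ReadLines`, and the `LinesRead` counter are hypothetical names used only to make the laziness visible.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Text;

public static class LazyReadSketch
{
    // Counts how many lines were actually pulled from the stream (demonstration only).
    public static int LinesRead;

    // Hypothetical helper: yields lines one by one instead of loading them all.
    public static IEnumerable<string> ReadLines(Stream stream)
    {
        // leaveOpen: true, so disposing of the stream stays the caller's job
        using (var reader = new StreamReader(stream, Encoding.UTF8, true, 1024, true))
        {
            string line;
            while ((line = reader.ReadLine()) != null)
            {
                LinesRead++;
                yield return line;   // execution pauses here until the next item is requested
            }
        }
    }

    public static void Main()
    {
        var bytes = Encoding.UTF8.GetBytes("a\nb\nc\nd\n");
        using (var stream = new MemoryStream(bytes))
        {
            // Only the first two lines are requested, so only two are read.
            var firstTwo = ReadLines(stream).Take(2).ToList();
            Console.WriteLine(string.Join(",", firstTwo)); // a,b
            Console.WriteLine(LinesRead);                  // 2
        }
    }
}
```

Because the sequence is lazy, it composes with LINQ operators such as `Take` or `Where` without reading the rest of the file.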

Error Handling

Optionally, you can control, per line/object instance, what happens when an error is thrown because of an unexpected format or for any other reason.

By passing a `Func<string, Exception, bool>` as the `handleEntryReadError` parameter of the `FlatFile<T>` constructor, that function is executed every time an input error occurs, receiving the line and the `Exception` that was thrown. If the function returns `true`, the `Exception` is ignored and the import continues. Otherwise, the `Exception` that triggered the call is rethrown.

    private bool HandleEntryReadError(string line, Exception exception)
    {
        Log.LogError("Error reading line: " + line, exception);
        return true; // ignore the error and continue with the next line
    }

    var flatfile = new FlatFile<TestObject>(layout, fileStream, HandleEntryReadError);
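
The contract of such a handler (return `true` to skip the line, anything else to rethrow) can be sketched independently of the library. The code below is only an illustration of that contract, not FlatMapper's implementation; `ErrorHandlingSketch` and `ParseAll` are hypothetical, and parsing integers stands in for mapping a line to an object.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

public static class ErrorHandlingSketch
{
    // Hypothetical stand-in for a reader: parses each line as an int and consults
    // the handler when a line cannot be parsed.
    public static IEnumerable<int> ParseAll(
        IEnumerable<string> lines,
        Func<string, Exception, bool> handleEntryReadError = null)
    {
        foreach (var line in lines)
        {
            int value;
            try
            {
                value = int.Parse(line, CultureInfo.InvariantCulture);
            }
            catch (Exception ex)
            {
                // Handler returned true: ignore this line and keep going.
                if (handleEntryReadError != null && handleEntryReadError(line, ex))
                    continue;
                throw; // no handler, or handler returned false: rethrow the original exception
            }
            yield return value;
        }
    }

    public static void Main()
    {
        var skipped = new List<string>();
        var values = ParseAll(new[] { "1", "x", "3" }, (line, ex) =>
        {
            skipped.Add(line); // a real handler would typically log the line and exception
            return true;
        }).ToList();

        Console.WriteLine(string.Join(",", values));  // 1,3
        Console.WriteLine(string.Join(",", skipped)); // x
    }
}
```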

Plays Well With Others

One of the core philosophies behind this library is that other libraries already excel at their own goals, so this should not be a do-everything library, but a single-purpose library that follows standards and plays well with others.

An example of that is how this library can work with Dapper to import data into, and export data from, a database.

    public void ImportData(IDbConnection connection)
    {
        using (var file = File.OpenRead(ImportFile))
        {
            var flatfile = new FlatFile<TestObject>(layout, file);
            var itemsEnumerable = flatfile.Read();
            // Dapper runs the command once per item in the sequence
            connection.Execute("spInsertItem", itemsEnumerable);
        }
    }

    public void ExportData(IDbConnection connection)
    {
        using (var file = File.OpenWrite(ImportFile))
        {
            var flatfile = new FlatFile<TestObject>(layout, file);
            var query = "select Id, Description, NullableInt from TestDataTable";
            var items = connection.Query<TestObject>(query);
            flatfile.Write(items);
        }
    }

Since it works with every POCO and there is no code intrusion of any kind, there is no reason why this wouldn't work just as well with Entity Framework or any other micro or full-fledged ORM.

Performance

Basic performance tests are available at https://github.com/kappy/FlatMapper.PerformanceTests.

For the test, I used a record with 3 properties (very similar to the previous examples) and 1,000,000 records.

The files generated were about 22 MB, and these were the results:

The test was run on an i7 Q740 @ 1.73 GHz with an SSD and 6 GB of RAM.

This test used the NuGet package. In recent tests, I discovered that a local build from source using VS 2015 yields a noticeable performance gain, possibly because of different compiler versions.

The Road to 1.0

Before the 1.0 release, there are a few things that I would like to have in the library.

- Async (for this to actually work, it will need to work in blocks, dropping the iterative reads feature)

Ideas

Some other ideas for after 1.0.

- Per line/record layout, using a discriminator as a base.

License and Contributions

Contributions are welcome, whether bug fixes, new features, or just filing issues with ideas and suggestions. Any feedback is appreciated.

This library is open source and licensed under the Apache License 2.0.

Thanks

- I need to thank my wife for all those nights when my attention was on the computer and not on her;

- My company, Mindbus, which not only allowed me to use my library on live projects but also helped me fine-tune it;

- My colleague Nuno Santos, the man who originally wrote the multi-line parser.