Introduction

I do a lot of database administration work for my customers, and quite often I receive requests like this:

Quote:

How big is our database today? How big was it yesterday? Last month? Last year?

When they ask this sort of question, non-technical people don't want to know the gigabyte size of the SQL Server master data file or the transaction log file. Those metrics don't mean anything to them. Instead, they want to know how many tables are in the database, how many rows are in the database (across all tables), and how many rows are in each table.

I created a simple script to calculate these metrics. (An application could be built to store a history of database size metrics, which would then be useful for charting and predicting database growth. I haven't got to that quite yet...)

This script has been useful in my own DevOps work too, especially when I'm looking at someone else's database for the first time and I want an overall impression of "weight distribution" across database entities.

Using the Code

It's a very basic script, without any rocket surgery. :)

CREATE TABLE #Counts

(

TableName VARCHAR(255) ,

RecordCount INT

);

EXEC sp_MSforeachtable 'INSERT #Counts (TableName, RecordCount) SELECT ''?'', COUNT(*) FROM ?';

SELECT COUNT(DISTINCT TableName) AS [Tables]

FROM #Counts;

SELECT SUM(RecordCount) AS [Rows]

FROM #Counts;

SELECT REPLACE(REPLACE(TableName, '[', ''), ']', '') AS TableName ,

RecordCount ,

CAST(CAST(100.0 * RecordCount / ( SELECT SUM(RecordCount) AS [Rows]

FROM #Counts

) AS DECIMAL(5, 2)) AS VARCHAR) + '%' AS Weight

FROM #Counts

ORDER BY TableName ,

RecordCount DESC;

SELECT REPLACE(REPLACE(TableName, '[', ''), ']', '') AS EmptyTableName ,

RecordCount

FROM #Counts

WHERE RecordCount = 0

ORDER BY TableName ,

RecordCount DESC;

DROP TABLE #Counts;

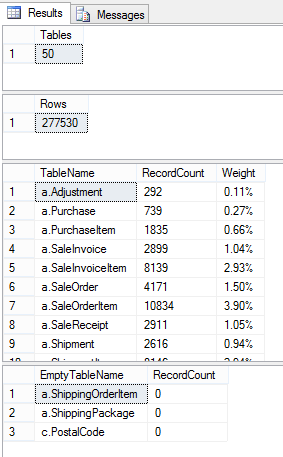

Here, we simply count the records per table and load the results into a temporary table. From this, we can derive:

- The number of tables in the database

- The number of rows in the database (across all tables)

- The number of rows in each table, and

- The empty tables (with no rows)

The output looks like this:

Points of Interest

Of course, the above script does not take into consideration the fact that a table might contain a small number of records and still heavily "outweigh" other tables in terms of disk space usage.

For example, a table containing one record that stores a binary copy of a 4GB video is obviously "bigger" (in terms of disk space) than a table containing one hundred records that store mailing addresses in plain text.

When this is an important consideration, you can use standard reports in SQL Server like "Disk Usage by Top Tables", or execute queries like this:

SELECT t.name AS TableName ,

i.name AS IndexName ,

SUM(p.rows) AS RecordCount ,

SUM(a.total_pages) AS PageCount ,

SUM(a.used_pages) AS UsedPages ,

SUM(a.data_pages) AS DataPages ,

( SUM(a.total_pages) * 8 ) / 1024 AS DiskSpaceMB ,

( SUM(a.used_pages) * 8 ) / 1024 AS UsedSpaceMB ,

( SUM(a.data_pages) * 8 ) / 1024 AS DataSpaceMB

FROM sys.tables t

INNER JOIN sys.indexes i ON t.object_id = i.object_id

INNER JOIN sys.partitions p ON i.object_id = p.object_id

AND i.index_id = p.index_id

INNER JOIN sys.allocation_units a ON p.partition_id = a.container_id

WHERE t.name NOT LIKE 'dt%'

AND i.object_id > 255

AND i.index_id <= 1

GROUP BY t.name ,

i.object_id ,

i.index_id ,

i.name

ORDER BY OBJECT_NAME(i.object_id);

And here is another useful script, when you need to query for the disk size of your data and log files. (Thanks to CodeProject member @PIEBALDconsult for this one.)

WITH CTE AS

(

SELECT Type, SUM(size) Size

FROM ( SELECT SUBSTRING(filename, LEN(filename) - 2, 3) Type ,

CAST(size / 128.0 / 1024.0 AS NUMERIC(20,2)) Size

FROM dbo.sysfiles ) T GROUP BY Type

)

SELECT @@servername AS ServerName ,

DB_NAME() AS DatabaseName ,

*

FROM ( SELECT Size DataSizeInGB FROM CTE WHERE Type = 'mdf' ) [Data]

CROSS JOIN

( SELECT Size LogSizeInGB FROM CTE WHERE Type = 'ldf' ) [Log]