Introduction

In this tutorial I will show how to load data from an Excel file to load in a

dimension table in an incremental way.

Background

Each time you want to populate your dimension table data you need to truncate the destination and reload from the source.

But if the table size is huge then it is not the best solution. So instead of delete insert, we will populate data by insert new row and update changed row.

What you need

This script was tested in SQL Server 2008.

Create project

First create a database and table from script.

use master

go

if db_id ('bi_Stage') is not null

drop database bi_Stage;

go

create database bi_Stage

on primary (name = 'bi_Stage'

, filename = 'E:\BI\DB\Stage\bi_Stage.mdf'

, size = 5 MB, filegrowth = 5 MB)

log on (name = 'bi_Meta_log'

, filename = 'E:\BI\DB\Stage\bi_Stage_log.ldf'

, size = 2 MB, filegrowth = 512 KB)

collate SQL_Latin1_General_CP1_CI_AS

go

alter database bi_Stage set recovery simple

go

alter database bi_Stage set auto_shrink off

go

alter database bi_Stage set auto_create_statistics off

go

alter database bi_Stage set auto_update_statistics off

go

SET ANSI_NULLS ON

GO

use bi_Stage

go

if exists

(select * from sys.tables

where name = 'chart_of_account')

drop table chart_of_account

go

BEGIN

CREATE TABLE [dbo].[chart_of_account](

[account_id] [int] NULL,

[parent_account_id] [int] NULL,

[account_code] [nvarchar](50) NULL,

[account_name] [nvarchar](150) NULL,

[account_type] [nvarchar](50) NULL

) ON [PRIMARY];

END

GO

SET ANSI_NULLS OFF

GO

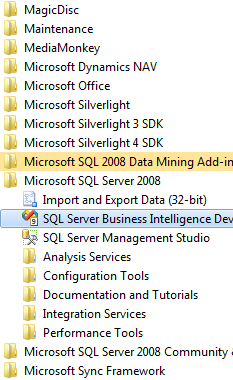

Then open SQL Server Business Intelligence Development Studio.

Then go to File->New->Project and select Integration Service Project.

Select "Data Flow Task" from "Control Menu" and drag it on "Control Flow" tab. Then double click it.

Now select "Excel Source" from "Data Flow Sources" and drag it on "Data Flow" tab. Then double click it to assign file source.

Click OK and then again click OK.

Select "Data Conversion" from "Data Flow Transformation" and drag it on "Control Flow" tab. Connect it from "Excel Source".

Then double click it. We need to convert data which is coming from source.

Rename "Output Alias" field, change "Data Type" value from default value to add prefix "src_" to distinguish, and change "Length". Click OK.

Select "OLE DB Source" from "Data Flow Transformation" and drag it on "Control Flow" tab. Then double click it.

We need to assign data source.

Click New button for create New connection or select from already existing connection.

Click New button.

Assign "Server Name", select "Authentication", select "Database", and click "OK".

From left tab select "Column" and then click OK.

Select "Sort" from "Data Flow Transformation" and drag it on "Control Flow" tab. Connect it from "Data Conversion". Then double click it.

Select column which needs to be sorted.

Select another "Sort" from "Data Flow Transformation" and drag it on "Control Flow" tab. Connect it from "OLE DB Source".

Then double click it. Select column which needs to be sorted.

Select "Merge Join" from "Data Flow Transformation" and drag it on "Control Flow" tab. Connect it from "Sort".

Select Join Type as "Left outer join" and select all fields from left panel and all fields from

right panel. Click OK. Connect "Merge Join" from "Sort Dest".

Select "Conditional Split" from "Data Flow Transformation" and drag it on "Control Flow" tab.

Connect it from "Merge Join". Right-click the Conditional Split and click Edit to open the Conditional Split Editor.

Expand the NULL Functions folder in the upper right of the Conditional Split Transformation Editor. Expand the Columns folder in the upper left

side of the Conditional Split Transformation Editor. Click in the "Output Name" column and enter "New" as the name of the first output.

From the NULL Functions folder, drag and drop the "ISNULL( <<expression>> )" function to the Condition column of the New Rows condition:

Next, drag account_id from the columns folder and drop it onto the "<<expression>>" text in the Condition column. "New" should now be defined by

the condition "ISNULL( [account_id] )". This defines the WHERE clause for new rows - setting it to "WHERE account_id Is NULL".

Type "Update" into a second Output Name column. Add the expression "((src_code != account_code) || (src_name != account_name) || (src_parent_id != parent_account_id) ||

(src_type != account_type))" to the Condition column for the Update Rows output. This defines our WHERE clause for detecting changed rows - setting it to

"WHERE ((src_code != account_code) || (src_name != account_name) || (src_parent_id != parent_account_id) || (src_type != account_type))". Note "||" is

used to convey "OR" in SSIS Expressions:

Change the "Default output name" from "Conditional Split Default Output" to "Unchanged".

Select "OLE DB Destination" from "Data Flow Destination" and Drag it on "Control Flow" tab.

Connect it from "Conditional Split" and select "New" and assign connection and map field.

Select "OLE DB Command" from "Data Flow Transformation" and Drag it on "Control Flow" tab.

Connect it from "Conditional Split" and select "Update" and assign "Connection Manager" and map field.

Click OK. Now we are ready to execute the SSIS.

If you execute the package with debugging (press F5), the package should succeed and appear as shown here:

Now Execute the command to test:

SELECT [account_id]

,[parent_account_id]

,[account_code]

,[account_name]

,[account_type]

FROM [bi_Stage].[dbo].[chart_of_account]

Now I have changed some data in Excel file and again execute the package with debugging (press F5), the package should succeed and appear as shown here:

Conclusion

I have used one more "Sort Dest" for sorting destination table. I think it will be better if we use "ORDER BY" clause in

"OLE DB Source" rather than use "Sort". Performance can be varied. I hope this might be helpful to you!

History

None so far.