After spending about 2 years with a few different graphing libraries trying to visualize big data quickly, I ended up rewriting someone else's library to make it fast enough. Doing that taught me a lot about what I didn't want in a graphing package. I wanted it to be simple, small, fast and easy to use. I wanted it to work well on large data sets instead of looking extra pretty on small ones.

Introduction

There are many packages for creating line graphs, and they are really nice and one could say "this is another one", but if you take a close look, they are really all different. Highcharts, Plotly, Bokeh and others are nice but just as someone might love Highcharts for one thing, and Bokeh for another, none of these and the 10 others I examined really fit mine. I lived with Highcharts but it wasn't a great fit for me.

It was written with the following features in mind:

- Small library size (very fast animatable page reloads)

- Fast graphing for large data sets: 10k points less than 20ms fast, 10-25x faster than others

- Fast enough graphing to stream data in

- Intuitive pan and zoom controls

- Phase (radian) polar graphs for signal visualization

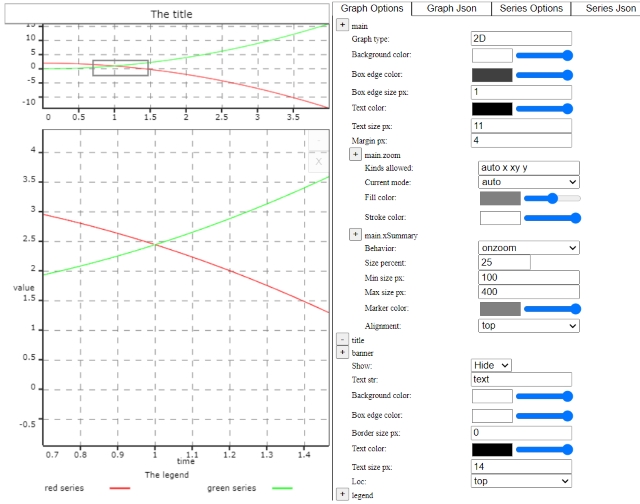

- A graph editor that outputs json

- Line and scatter graph centric, also heatmaps and polar for signals

- Intuitive fast zoom in/out and pan features

- Few dependencies - I use JQuery, that's it.

- Resize automatically to the frame the graph is in

- Very little user code to make a graph, no code needed for pan, zoom, resize

- Simple HTML supporting any browser, QT windows, C# and Xamarin, Android for multiplatform apps

This is not modern coffee script, or any of the modern approaches to JavaScript. I wrote it in a very old school way with lots of comments to make it easy to read and inspect. I wrote it from the mindset of wanting something that could be formally QA'd for defence or medical standards. That said, there are still a bunch of cool code techniques I used even though it's not that modern looking.

Cool Things

Data Series

I chose the data series class to be a set of segments, each segment has a min, max and cached values. This is great because as the data grows in size, the software needs to only look at the summary of the data (the mins/maxes of sets of data) to scale the points and new hunks of data can be appended. It also means as data becomes old (stale) when streaming the array isn't resizing in memory based on individual points, sets of points are discarded. This makes garbage collection smoother.

Data Hive

Often, users will have multiple graphs that are of the same data. For example, suppose you are graphing 5 series but they share the same x-series timestamps. Rather than have 5 sets of the x values, a mild indirection means fetched data is shared if it is the same data set just used in multiple graphs. This is pretty common in sensor data. Using less memory means storing, fetching and managing the garbage collection of less data. It also makes zoom/pan very fast.

Tree Edit

This is a common design pattern I use in C++, C#, Java, Python, etc. It basically walks an object and knows if the object pieces are colors, lines, regions, etc. It then knows how to create a simple UI widget to that piece and continue walking the object tree. This is a great way to create test, automation and debug UIs. In this case, it builds the editor for users to style their graphs.

Using the Code

Building a graph is pretty simple, test.html shows how to do that.

The HTML should look roughly like:

<div id='testLineGraph' class='ggGraph_line' style='width: 100%; height: 100%;'>

The JavaScript to initialize it all:

ggGraph.initialize();

ggGraph.setupTestData();

ggGraph.setupTestLineGraph('testLineGraph');

ggGraph.getGraph('testLineGraph').draw();

History

- 23rd March, 2022: Initial version