FFmpeg version 7.0 was released in April 2024. In this article, you can learn to build new FFmpeg command-line binaries from the git source and test-drive some of its new features such as the ability to dynamically generate QR codes on video.

Introduction

Last week, FFmpeg version 7.0 was released. In this article, I shall limit myself to changes in the FFmpeg command-line tools (CLI), not the FFmpeg libraries. However, of note among the new set of encoders, decoders, muxers, demuxers and filters is a native decoder for the H.266 codec (VVC, Versatile Video Coding). The new version also supports IAMF (Immersive Audio Model and Formats), an immersive or 3D sound alternative to Dolby Atmos. (Immersive sound supports any number of channels, particularly height channels in addition to surround channels.)

What is new in FFmpeg v7.0 CLI

Users of the FFmpeg command-line tools (ffmpeg, ffprobe and ffplay) would be interested in:

- Multi-threaded conversion: FFmpeg will perform muxing, demuxing, encoding, decoding and filtering on different threads. You will find improved performance only when there are multiple input sources and/or output destinations. If you are converting a single file from one format to another file with a different format, the ffmpeg command will not leverage this new ability.

- Loopback decoders: The processed pre-muxing output streams can be fed back as input to a filter.

- New filters: Among the new filters, there is one which can place a dynamically generated QR code over a video.

- Removal of deprecated API: Options such as -map_channel have been removed. See my previous article (on FFmpeg v6.0) for alternatives.

Compile FFmpeg 7 from source

Like last time, I followed the compilation steps given in the official FFmpeg wiki for the external libraries but when it was time to compile FFmpeg CLI, I took a slight detour.

If you blindly follow the wiki, you will not get access to new features such as the QR code filter. So, I studied the configure file in the ffmpeg_sources directory and made appropriate changes to the configure statement from the wiki. I enabled every external library that was not automatically detected but could be manually compiled or installed. (That is, in another terminal window, I compiled these external libraries manually or installed them using the package manager of my Linux distro.)
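Before running configure, it can help to check which external libraries pkg-config can already see. The following is only a sketch: the helper name missing_pkgs is hypothetical, the PKG_CONFIG override variable is a common convention rather than anything the wiki prescribes, and the module names (libqrencode, x264, x265, libass) are assumed to match what your distro or your own builds install.

```shell
# Print each pkg-config module that cannot be found, one per line.
# Returns non-zero if at least one module is missing.
# PKG_CONFIG may name an alternative pkg-config program (assumption: optional override).
missing_pkgs() {
    rc=0
    for mod in "$@"; do
        if ! "${PKG_CONFIG:-pkg-config}" --exists "$mod"; then
            printf '%s\n' "$mod"
            rc=1
        fi
    done
    return "$rc"
}

# Run the check only when pkg-config is installed. The subshell keeps the
# PKG_CONFIG_PATH change from leaking into the rest of the session.
if command -v "${PKG_CONFIG:-pkg-config}" >/dev/null 2>&1; then
    (
        export PKG_CONFIG_PATH="$HOME/ffmpeg_build/lib/pkgconfig${PKG_CONFIG_PATH:+:$PKG_CONFIG_PATH}"
        missing_pkgs libqrencode x264 x265 libass ||
            echo "Build or install the modules listed above before enabling them."
    )
fi
```

A module that is reported missing here will most likely make configure stop with an error if the matching --enable option is passed.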

I compiled my custom ffmpeg build thusly:

cd ~/ffmpeg_sources && \
wget -O ffmpeg-snapshot.tar.bz2 https://ffmpeg.org/releases/ffmpeg-snapshot.tar.bz2 && \
tar xjvf ffmpeg-snapshot.tar.bz2 && \
cd ffmpeg

cp VERSION VERSION.bak
echo -e "$(cat VERSION.bak) [$(date +%Y-%m-%d)] [$(cat RELEASE)] " > VERSION

PATH="$HOME/bin:$PATH" PKG_CONFIG_PATH="$HOME/ffmpeg_build/lib/pkgconfig" ./configure --prefix="$HOME/ffmpeg_build" --pkg-config-flags="--static" --extra-cflags="-I$HOME/ffmpeg_build/include" --extra-ldflags="-L$HOME/ffmpeg_build/lib" --extra-libs="-lpthread -lm" --ld="g++" --bindir="$HOME/bin" \
--enable-gpl --enable-version3 --enable-static --enable-nonfree \
--enable-libaom --enable-libass --enable-libbluray --enable-libbs2b --enable-libcaca --enable-libcdio --enable-chromaprint --enable-libdav1d --enable-libfdk-aac --enable-libflite --enable-libfontconfig --enable-libfreetype --enable-libfribidi --enable-frei0r --enable-libgme --enable-libharfbuzz --enable-libjxl --enable-liblensfun --enable-libmp3lame --enable-libopencore-amrwb --enable-opengl --enable-libopus --enable-libopenjpeg --enable-libopenmpt --enable-libsvtav1 --enable-libsmbclient --enable-libtheora --enable-libxvid --enable-libsnappy --enable-libmysofa --enable-libopencore-amrnb --enable-libmodplug --enable-libpulse --enable-libqrencode --enable-librtmp --enable-libshine --enable-librubberband --enable-libsoxr --enable-libspeex --enable-libtorch --enable-libtls --enable-libtwolame --enable-libvidstab --enable-libvorbis --enable-libv4l2 --enable-libvo-amrwbenc --enable-libvpx --enable-libwebp --enable-libx264 --enable-libx265 --enable-libxml2 --enable-libzimg --enable-libzvbi --enable-gcrypt --enable-gmp --enable-gnutls --enable-ladspa --enable-mediacodec --enable-mediafoundation --enable-openal --enable-opencl --enable-pocketsphinx

PATH="$HOME/bin:$PATH" make
make install && hash -r

The CLI tools will be copied to your $HOME/bin directory. Usually, this directory is included in the PATH environment variable, so you will be able to use the ffmpeg, ffprobe and ffplay executables right away. The documentation files, though, must be copied to system directories manually.
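To confirm that the freshly built binary really includes the new filters, you can search the list printed by ffmpeg -filters. This is a minimal sketch: has_filter is a hypothetical helper name, and it assumes the filter name appears in the second column of that listing.

```shell
# Succeed if the ffmpeg found on PATH lists the named filter.
# Assumes the filter name is the second whitespace-separated column.
has_filter() {
    ffmpeg -hide_banner -filters 2>/dev/null |
        awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Report on the filters used in this article, if ffmpeg is installed.
if command -v ffmpeg >/dev/null 2>&1; then
    for f in qrencode qrencodesrc tiltandshift; do
        if has_filter "$f"; then
            echo "$f: available"
        else
            echo "$f: missing"
        fi
    done
fi
```

If qrencode is reported as missing, the build was probably configured without --enable-libqrencode.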

Unlike last time (with version 6), someone had mercifully updated the RELEASE git file with the correct version number so there was no need to manually edit it.

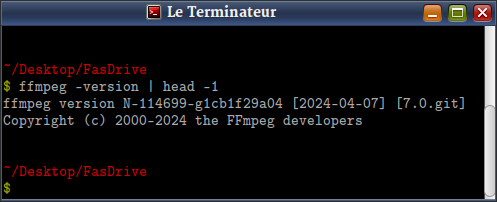

Thus, the output of the ffmpeg command with the -version option is more informative in my custom build.

qrencode filter

Using the qrencode filter, you can dynamically generate QR codes anywhere on your video. No need to mess with QR code image input files and overlay filters. This new filter does both — creating the QR code and overlaying it on the video — in memory.

ffplay -vf "qrencode=q=120:text=www.vsubhash.in:x=W-Q-20:y=20:Q=q+10" \
NamibiaCam-Ostrich-Party.mp4

If you want to show multiple QR codes at different moments in the video, just daisy-chain the filters with different timeline filter option values. Also, remember to pad the QR code or use a background colour that contrasts with the video (check the documentation for additional filter options) so that QR scanner apps do not get confused.
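The daisy-chaining described above might be sketched as follows. The helper name qr_at and the second text value are placeholders, and the sketch assumes that the qrencode filter accepts FFmpeg's generic enable timeline option, as the paragraph above implies. The text values must not contain characters that need filtergraph escaping, such as colons or commas.

```shell
# Emit one qrencode filter that is active only between two timestamps (in seconds).
# Arguments: text, start, end. Size and position mirror the earlier ffplay example.
qr_at() {
    printf "qrencode=text=%s:q=120:Q=q+10:x=W-Q-20:y=20:enable='between(t,%s,%s)'" "$1" "$2" "$3"
}

# Two QR codes shown at different moments, joined into one filter chain.
vf="$(qr_at www.vsubhash.in 0 5),$(qr_at ffmpeg.org 10 15)"
echo "$vf"

# Uncomment to preview the result:
# ffplay -vf "$vf" NamibiaCam-Ostrich-Party.mp4
```

Building the chain in a variable keeps each filter's quoting in one place, which matters once the timeline expressions contain commas.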

There is also a new qrencodesrc source filter. As it does not seem to have a duration option, you will have to find other means to trim it.

ffplay -f lavfi -i "qrencodesrc=text=WWW.VSubhash.IN:q=200,trim=end=5"

tiltandshift filter

This filter has been described as taking inspiration from tilt-and-shift photography. It works best with videos that have a static background, on which small changes can be sped up.

ffplay -vf "split[v1][v2];
[v2]tiltandshift[ts];
[v1][ts]vstack" \
Puerto-Sotogrande.mp4

Incidentally, this animation was also created using FFmpeg. FFmpeg seems like a one-stop multimedia shop! These days, I create all my videos using FFmpeg. Some of these FFmpeg commands may be as long as the Iliad but, no matter how many edits there are, FFmpeg gets invoked only once. It is that powerful!

Loopback decoders

With the new -dec option, you can take an encoded output stream (post-encoder, pre-muxer), decode it again and use it as an input stream for a filter.

Suppose that you want to compare the video quality for two different encoder settings. How would you do it?

ffmpeg -i The-Stupids-Trailer.mp4 \
-map 0:v:0 -c:v libx264 -crf 31 -preset medium \
-f null -t 20 - -dec 0:0 \
-map 0:v:0 -c:v libx264 -crf 31 -preset ultrafast \
-f null -t 20 - -dec 1:0 \
-filter_complex '[dec:0][dec:1]hstack[v]' \
-map '[v]' -c:v ffv1 -y loopback-decoder-test.mkv

Do remember that the ffv1 encoder is a lossless one. If you do not limit the time (like I have done, down to 20 seconds), your disk may run out of free space.
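If you repeat this comparison often, the command above can be wrapped in a small function. This is only a sketch built on the same options. The function name compare_presets and the DRY_RUN switch are my own inventions for illustration, not FFmpeg features.

```shell
# Encode the first video stream twice with two x264 presets and stack the
# decoded results side by side, using two loopback decoders.
# Arguments: input, preset A, preset B, seconds, output.
# Set DRY_RUN to a non-empty value to print the command instead of running it.
compare_presets() {
    cp_in=$1
    cp_a=$2
    cp_b=$3
    cp_secs=$4
    cp_out=$5
    set -- ffmpeg -i "$cp_in" \
        -map 0:v:0 -c:v libx264 -crf 31 -preset "$cp_a" -f null -t "$cp_secs" - -dec 0:0 \
        -map 0:v:0 -c:v libx264 -crf 31 -preset "$cp_b" -f null -t "$cp_secs" - -dec 1:0 \
        -filter_complex '[dec:0][dec:1]hstack[v]' \
        -map '[v]' -c:v ffv1 -y "$cp_out"
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Print the command first to check it; unset DRY_RUN to actually run it.
DRY_RUN=1
compare_presets The-Stupids-Trailer.mp4 medium ultrafast 20 loopback-decoder-test.mkv
```

The dry run prints the arguments without shell quoting, so it is meant for inspection rather than for copying and pasting.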

Removed options

Options that were deprecated in version 5.1, such as -map_channel, have been removed in version 7. However, most Linux distros will take quite a while to update to this new version. Kodi came out with a new version recently (after FFmpeg version 7 was released) but it has only updated to FFmpeg version 6. In my book Quick Start Guide to FFmpeg and my previous CodeProject article for version 6, I have provided alternatives to the -map_channel option. My FFmpeg binaries were built from the latest git source and my FFmpeg executables were newer than the release version. However, the loopback decoders are totally new to me. Refer to the HTML documentation generated by the build process (compilation) detailed above.
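One commonly suggested replacement for -map_channel is the pan audio filter. The sketch below is not necessarily the approach from my book or the earlier article. The helper name pan_from_channels and the file names are hypothetical, and the helper only handles mono and stereo outputs.

```shell
# Build a pan filter that routes the given input channel indices, in order,
# to the output channels. One index gives mono output; two give stereo.
pan_from_channels() {
    case $# in
        1) spec="pan=mono" ;;
        2) spec="pan=stereo" ;;
        *) echo "pan_from_channels: expected 1 or 2 channel indices" >&2
           return 1 ;;
    esac
    i=0
    for ch in "$@"; do
        spec="$spec|c$i=c$ch"
        i=$((i + 1))
    done
    printf '%s\n' "$spec"
}

# Swap the left and right channels of a stereo stream.
pan_from_channels 1 0   # prints: pan=stereo|c0=c1|c1=c0

# Uncomment to apply it (input.mp4 and swapped.mp4 are placeholder names):
# ffmpeg -i input.mp4 -af "$(pan_from_channels 1 0)" -c:v copy swapped.mp4
```

For layouts with more channels, the channelmap and channelsplit filters may be easier to work with than pan.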

History

Version 1: Created.

Version 2: Language cleanup and padded QR info.

Version 3: X-coordinate of QR code fixed. Alt text for images added.