Introduction

Mathematical operations and functions can have a negative

impact on the performance of an algorithm when the operands or arguments it

includes possess a considerable time complexity. For instance, the

multiplication of large numbers is a complex operation that is usually solved by

algorithms that bypass the disadvantage of the traditional sum and provide a

more effective and less time consuming method. The same happens with exponentiation

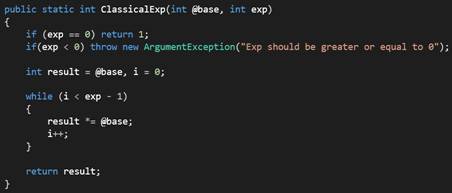

when the exponent is a large number. The classical algorithm for exponentiation

and the first that pops up into any programmer’s mind is pretty

straightforward; it loops from 1 to exp (where exp is the exponent to raise the

base) and multiplies the base by a variable result in each loop, similar to the

following code.
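The classical loop described above can be sketched as follows. This is a minimal illustration in Python, not the article's original listing; the function name `power_classical` and the check for a negative exponent are assumptions added for the example.

```python
def power_classical(base: int, exp: int) -> int:
    """Raise base to a non-negative integer exp using exp multiplications."""
    if exp < 0:
        raise ValueError("exp must be non-negative")
    result = 1
    # Loop from 1 to exp, multiplying the running result by the base each time.
    for _ in range(1, exp + 1):
        result *= base
    return result
```

The loop body runs exactly exp times, so the number of multiplications grows linearly with the exponent.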

The problem with the classical algorithm is its high cost when exp is a large number such as 10000 or 100000, because it performs one multiplication per unit of the exponent. A more effective alternative treats the exponent as a binary number. This approach is known as the binary exponentiation algorithm, and it needs O(log(exp)) multiplications, whereas the classical version needs O(exp).
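A minimal Python sketch of binary exponentiation, scanning the binary digits of the exponent from the most significant to the least significant. The function name `power_binary` and the use of `bin()` to obtain the digits are assumptions made for this example, not the article's original listing.

```python
def power_binary(base: int, exp: int) -> int:
    """Raise base to a non-negative integer exp, one loop step per binary digit."""
    if exp < 0:
        raise ValueError("exp must be non-negative")
    result = 1
    # bin(13) == '0b1101'; drop the '0b' prefix and read the digits left to right.
    for bit in bin(exp)[2:]:
        result *= result      # squaring doubles the exponent accumulated so far
        if bit == "1":
            result *= base    # a 1 digit adds one to the accumulated exponent
    return result
```

For an exponent of 100000 the loop runs 17 times (the number of binary digits), with at most two multiplications per iteration, instead of 100000 iterations in the classical version.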

The idea behind binary exponentiation is to use the binary representation of the exponent to reduce the number of iterations from exp to roughly log2(exp), one per binary digit. A loop scans the binary digits of the exponent from left to right (most significant first) and, at each digit, multiplies a variable result by itself. Before each step, result stores $\text{base}^{b'}$, where $b'$ is the value of the binary prefix read so far. Squaring it produces $(\text{base}^{b'})^2 = \text{base}^{2b'}$, and $2b'$ is that same prefix shifted one position to the left, that is, with a 0 appended. If the digit just read is a 1, it is also necessary to multiply result by base, which adds 1 to the exponent and gives $\text{base}^{2b'+1} = \text{base}^{b''}$, where $b''$ is the new prefix.
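To make the prefix argument concrete, the following sketch records the prefix value and the result after each digit, and checks at every step that result equals base raised to the prefix. The function name `trace_binary_power` and the variable `prefix` (standing for b' in the text) are hypothetical names chosen for this illustration.

```python
def trace_binary_power(base: int, exp: int) -> list:
    """Return (bit, prefix, result) after each step of left-to-right binary exponentiation."""
    steps = []
    result = 1
    prefix = 0  # integer value of the binary digits consumed so far (b' in the text)
    for bit in bin(exp)[2:]:
        result *= result      # base^(b') becomes base^(2*b')
        prefix *= 2           # the prefix is shifted one position to the left
        if bit == "1":
            result *= base    # base^(2*b') becomes base^(2*b' + 1)
            prefix += 1
        assert result == base ** prefix  # invariant described in the text
        steps.append((bit, prefix, result))
    return steps


for bit, prefix, result in trace_binary_power(2, 13):
    print(bit, prefix, result)
# prints:
# 1 1 2
# 1 3 8
# 0 6 64
# 1 13 8192
```

With exp = 13 (binary 1101) the prefixes are 1, 3, 6 and 13, so the final result is base^13 after four iterations.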