Introduction

This tip will be of interest for anyone looking for a way to perform traces with SQL Server Express in real-time much like SQL Server Profiler, instead of using a file output. Using a file output usually means implementing a test plan that gives sufficient coverage in one or more areas of an application or service, then examining the results when the test plan is completed. But sometimes, I want to test in a way that allows a much more dynamic approach, giving immediate feedback while examining varying cases of causes and effects. I find it more useful during the development phase of an application or service, and also for post development diagnostics.

Background

As many are aware, SQL Server Express does not come with SQL Server Profiler. When searching for ways to perform traces similarly with SQL Server Express, I found little or no documentation that would assist in writing a solution to perform the task in the same manner. Nearly all searches resulted in advice for configuring server-side traces using functions and stored procedures that come with the full and express versions of SQL Server, but all also without steps for getting the output trace data in real-time.

Microsoft's documentation for using these features is quite thorough, but also consistently indicate that the features are provided for performing server-side traces that output to a file, noting that the data in the files can be examined after tracing is complete. Generally speaking, if we want to examine SQL trace outputs in real-time from a client tool, we need to purchase the full version which is bit much for something I use for development on my own machine.

I recommend referring to Microsoft's own documentation: SQL Trace. I found this to be a very useful reference and much of what I learned from that documentation is applied in this solution.

As for the undocumented pieces that gets things to work the way I was most interested, I've been piecing together bits here-and-there over quite some time, from whatever I could glean from the blogosphere. I had yet to find a complete set of code that does this, so parts of the following is the result of trial and error along with some educated guesswork and maybe even a dash or two of luck.

Using the Code

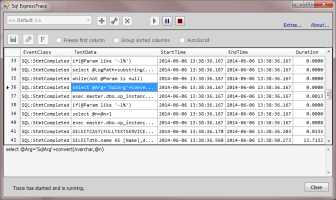

The C# code included with this tip can be used as-is by building the single solution. The solution contains two projects; The first project is SqlExpressTraceLib which defines a library that applies the concepts described in this tip. The second project is SqlExpressTraceUI, a Windows application that serves as a demo for applying the library interfaces to perform traces. Though I refer to it as a demo, I find it is currently complete enough to use regularly.

Points of Interest

The heart of the library is the TraceController class. This class provides the means to create, control and monitor the trace process on the server. When creating the trace, it applies trace definitions that include events, columns and filters in much the same way it would be done using the aforementioned documented stored procedures and functions. One key exception is that it sets certain parameters to null when using sp_trace_create, in particular the @trace_file parameter. This is the first of the undocumented steps:

using (SqlCommand command = new SqlCommand("sp_trace_create", connection))

{

command.CommandType = CommandType.StoredProcedure;

...

command.Parameters.Add("@traceid", SqlDbType.Int).Direction = ParameterDirection.Output;

command.Parameters.Add("@options", SqlDbType.Int).Value = 1;

command.Parameters.Add("@trace_file", SqlDbType.NVarChar, 1).Value = DBNull.Value;

...

command.ExecuteNonQuery();

...

}

The TraceController also provides the means to control the running states of the defined trace, as well as getting the trace data from the server. And this is where it really differs. Once the trace is started, the TraceController creates a SqlDataReader instance that reads data returned from sp_trace_getData, the second undocumented step. Note command.CommandTimeout = 0. Setting the command to never time out is not recommended for your average call to SQL Server. But then this is no average call:

SqlCommand command = new SqlCommand("sp_trace_getdata", connection);

command.CommandType = CommandType.StoredProcedure;

command.CommandTimeout = 0;

...

command.Parameters.Add("@traceid", SqlDbType.Int).Value = this._traceId;

command.Parameters.Add("@records", SqlDbType.Int).Value = 0;

...

return command.ExecuteReader(CommandBehavior.SingleResult);

There is an implication here that the command process on the server is kept open for the duration of the trace. Every time a specified event is recorded, data is returned. Until that happens, the call to the SqlDataReader.Read() method sits waiting. If it returns a false, the trace has either been closed or stopped(paused) on the server. To accommodate this waiting for trace events, I created the monitorServerTraceEvents method that runs on a separate thread. It's not quite as straight forward as getting one record with all the column data for a trace event, there are values to read and interpret to indicate when and if the data returned represents an event with column data. The monitorServerTraceEvents method as it is implemented pretty much explains it. But the real point here is that when a traced event data IS returned, it's one piece of column data at a time, in bytes.

The record class that we use to hold all of the column data is TraceRecord. There are some particulars about this otherwise would-be-simple class that handles issues regarding the event data returned from the server, as well as determining how the data needs to be displayed. When constructing a record that represents an event, each piece of column data for the event is applied with the TraceRecord.SetColumnValueFromBytes method. Here, the byte data is converted to the corresponding data type, and then to a formatted string. The column data types are provided through a static custom dictionary called TraceColumnsInfoList, indexed by column Ids and column Name.

public void SetColumnValueFromBytes(int columnId, byte[] bytes)

{

string stringVal = string.Empty;

switch (TraceInfo.TraceColumnsInfoList[columnId].DataType)

{

case TraceColumnDataType.Int32:

stringVal = BitConverter.ToInt32(bytes, 0).ToString();

break;

case TraceColumnDataType.String:

stringVal = Encoding.Unicode.GetString(bytes);

break;

case TraceColumnDataType.Int64:

long longVal = BitConverter.ToInt64(bytes, 0);

if (columnId == TraceInfo.TraceColumnsInfoList["Duration"].Id)

{

double dVal = (double)longVal / 1000000;

stringVal = dVal.ToString("0.0000");

}

else stringVal = longVal.ToString();

break;

...

Why convert all column data to a formatted string here and not in the data grid when displayed? Well, the particular nature of events and use of the columns means that columns might, or might not, be used by multiple events. I could have written a number of special cases for handling null or default values of primitive types when displayed, but in the end I found that a record which initializes fields to an empty string and overwrites some with received values was a simpler solution while keeping it all moving fast. The conversions to a formatted string will happen regardless of whether it is in the construction of the record or the display in the grid, so it's just as well doing it here where I can take out a few birds with one stone.

In the end, the performance was quite good. Good enough to cause me to reconsider changing the asynchronous hand-off of the recorded trace records to the client through an event, to be synchronous. That's because I found when I had accidentally set up a trace which rapidly recorded thousands of events on a single execution, the UI actually struggled to keep up. I may or may not make that change or tune performance in the UI, but I'll probably be making other tweaks here and there as needed.

This is a good place to note that it is always best to be conservative with the amount of data recorded in the trace. Even if performing server side traces to a file, all tracing adds pressure to the server and the greater the number of events and columns specified in the trace, the greater the pressure. This is also the reason I do not perform tracing on a shared developer SQL Server instance (don't even think of a production server!). I perform tracing on my local SQL Server Express instance only.

A class called TraceDefinition is provided to encapsulate all the data elements required to create the trace on the server. An instance of this is passed from the client UI to the TraceController when starting the trace. Recorded trace events are received in the client UI using event handlers, along with updates to the trace status. The client UI demo provided uses an extension to the TraceDefinition to allow other customizations, column order for example.

There is another thread that runs along side the trace monitoring thread used for monitoring the trace status on the server. I had decided to keep tabs on SQL Server's own opinion on the status, along side of the typical coded setting of the status based on actions performed. After all, regardless of whatever the application reports the status should be, if SQL Server says the trace is closed; it's closed. The reported status can and will be overruled by the output of this check. It's a small query that runs every half second and the effect on the server is nominal.

There are some other nice personal touches, cool stuff and particular implementations throughout the library and UI, all of which I would be happy to show and discuss; Sortable bindings, record grouping, row numbering, etc. But for this tip, I wanted just the most salient points around the trace configurations. Feel free to ask about anything appearing in the code in the Comments and Discussions, I'll be happy to answer what I can.

Notes, Issues

I noticed a few times that the application failed to resume after pausing a trace, though I have not seen this happen in quite a while. I never gave the issue full attention because of the lack of value in having a 'pause' feature in a utility like this. The feature is there only because the trace features on the server provide for it. But if not manually constructing and controlling traces using SQL commands, the UI makes stopping and starting a trace which actually closes and re-creates the trace perceptibly and, for all intents and purposes, functionally the same as pausing and resuming. And so I don't use the pause very often .

There is also a defined set of columns that are actually recordable for a particular event instead of all columns as it appears in the demo application. Sometime soon, I will modify accordingly, perhaps with grouping events by category.

This code was tested to work with SQL Server 2008 R2 Express and SQL Server 2012 Express. However earlier and latest versions may work as well.

History