Introduction

Nowadays, memory is no longer a scarce resource. Most desktops have several gigabytes of physical RAM, and virtual memory can extend the available space into the terabyte range. Consequently, most of our applications are tested in very comfortable conditions.

But what if your application can't afford to fail in real-life conditions? What if it is security-critical, or has to cope with the worst conditions? Can you then rely only on memory allocation debugging libraries that can't be used in release-grade executables?

The abundance of memory makes it difficult to test conditions of scarce resources. I propose here a small utility that consumes memory on a system, either all at once or gradually over time. The tip is written with Windows in mind, but the code is 100% standard C++ and therefore portable across platforms.

Background

Fortunately, there are many resources and excellent tools available to help you find memory leaks, hunt down memory mismanagement that causes segmentation faults or memory corruption, and monitor consumption patterns.

But there's much less literature on non-intrusive black-box testing. The typical scenario is to verify that an application terminates properly if critical memory can't be allocated during the initialization phase. The other scenario is to make sure that an application can cope with a lack of memory during a critical process.

For this purpose, I've written a simple and flexible command line utility in C++. It consumes memory according to a pattern given on the command line. The advantage is that it behaves like any other application on your PC, so it's able to reproduce real-life scenarios. You can use it to stress test your own code as well as any third-party application. Last but not least, the learning curve is minimal.

The principle is simple: the tool first allocates a given number of memory blocks, each having the same size. The allocation can be done as quickly as possible, or spread over a fixed amount of time, up to an hour. When allocation is finished, the tool releases the memory blocks. Allocation and deallocation are monitored in case you need the statistical details.
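To make the principle concrete, here is a minimal sketch of the allocate-then-release cycle in standard C++. It is not the MEMCONS source: the function name `consume` and its parameters are hypothetical, and the even spacing of allocations over the time frame is an assumption about how the pacing could be done.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <memory>
#include <new>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical helper, not taken from the MEMCONS source.
// Allocates up to `count` blocks of `block_bytes` each. If `total` is non-zero,
// block i is allocated no earlier than start + total * i / count, so the
// allocations are spread evenly over the time frame.
// The returned vector owns the blocks; destroying it releases them.
std::vector<std::unique_ptr<char[]>> consume(std::size_t count,
                                             std::size_t block_bytes,
                                             std::chrono::milliseconds total)
{
    assert(block_bytes > 0);
    using rep = std::chrono::milliseconds::rep;

    std::vector<std::unique_ptr<char[]>> blocks;
    const auto start = std::chrono::steady_clock::now();

    for (std::size_t i = 0; i < count; ++i) {
        if (total.count() > 0) {
            // Wait for this block's slot in the schedule.
            const auto offset =
                total * static_cast<rep>(i) / static_cast<rep>(count);
            std::this_thread::sleep_until(start + offset);
        }
        // nothrow new returns nullptr instead of throwing when memory runs out.
        std::unique_ptr<char[]> block(new (std::nothrow) char[block_bytes]);
        if (!block) break;
        try {
            blocks.push_back(std::move(block));
        } catch (const std::bad_alloc&) {
            break;  // no room left even for the bookkeeping
        }
    }
    // Note: the blocks are never written to. On systems that overcommit,
    // untouched pages may not be backed by real storage.
    return blocks;
}
```

A call such as `consume(4096, 1024 * 1024, std::chrono::milliseconds(30000))` would request 4096 blocks of 1 MB over roughly 30 seconds; letting the returned vector go out of scope releases everything.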

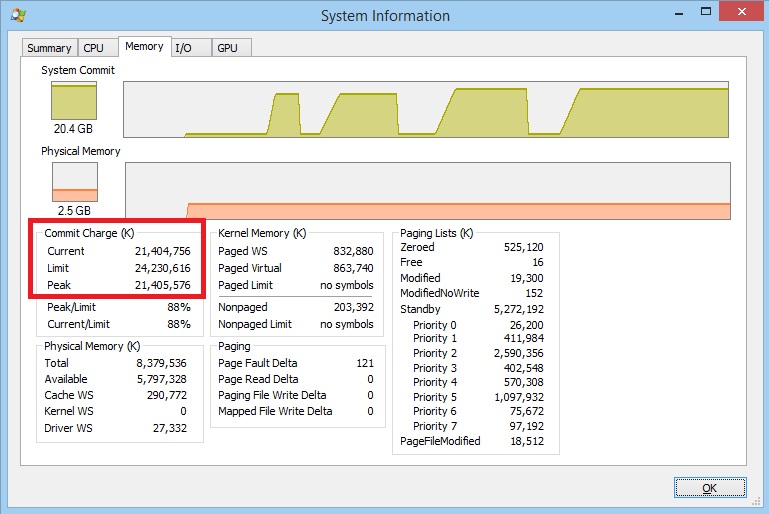

Example of successive runs monitored with Microsoft's Process Explorer:

You'll notice that the allocation process consumes virtual memory. The physical memory is managed by the operating system and used to optimize access to the virtual memory. If there's no virtual memory space left, subsequent allocations will fail, regardless of the physical memory.

Using the Code

Build the Executable

The download files contain an MSVC 2013 solution/project. All you have to do is load it and build it as a 32-bit or 64-bit executable. Use the 64-bit version if possible: it can consume much more memory at once.

You can then copy the final executable MEMCONS32.EXE or MEMCONS64.EXE to the location where you store your ad-hoc utilities.

If you want to compile the code on another system and/or with another compiler, it shouldn't be too difficult: the code is contained in a single C++ file and uses only the standard library.

Run It

You run the tool from the command line. The following will display the usage instruction and the possible options:

MEMCONS64 /H

To start testing the tool, you can for example allocate 4096 blocks of 1024 KB each, that is 4 GB in total, with:

MEMCONS64 /B:1024 /N:4096

For the curious, you'll also get performance statistics that show the time needed to allocate and deallocate the memory.

If you want to stress test your application with gradually increasing competition for memory, you can spread the allocation over a time frame expressed in milliseconds with /T. If you want the tool to wait before releasing the memory, just add /I:

MEMCONS64 /B:1024 /N:4096 /T:30000 /I

You can run the tool concurrently in several consoles, to simulate more complex patterns. Of course, if MEMCONS doesn't get memory, it doesn't abort! It stops trying to allocate anything and tells you how much memory it could grab.
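The "stop instead of abort" behavior can be sketched as follows. This is an illustration under assumptions, not the tool's actual code: `GrabResult`, `Allocator` and `grab` are hypothetical names, and the allocator is passed in as a parameter only so that a failure can be simulated without really exhausting the machine.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <functional>
#include <new>
#include <vector>

// Hypothetical summary of one run.
struct GrabResult {
    std::size_t blocks_obtained;  // blocks allocated before stopping
    std::size_t bytes_obtained;   // blocks_obtained * block size
    bool exhausted;               // true if an allocation failed early
};

// The allocator must return memory obtained from std::malloc, or nullptr.
using Allocator = std::function<void*(std::size_t)>;

// Tries to allocate `count` blocks. On the first failure it stops asking,
// records how far it got, and still releases everything it holds.
GrabResult grab(std::size_t count, std::size_t block_bytes,
                const Allocator& alloc)
{
    assert(alloc);  // an empty std::function would throw when called
    std::vector<void*> held;
    bool exhausted = false;

    for (std::size_t i = 0; i < count; ++i) {
        void* p = alloc(block_bytes);
        if (p == nullptr) {          // out of memory: stop, don't abort
            exhausted = true;
            break;
        }
        try {
            held.push_back(p);
        } catch (const std::bad_alloc&) {
            std::free(p);            // could not even record the block
            exhausted = true;
            break;
        }
    }

    const GrabResult result{held.size(), held.size() * block_bytes, exhausted};
    for (void* p : held) std::free(p);
    return result;
}
```

In normal use the allocator would simply be `[](std::size_t n) { return std::malloc(n); }`. A test can pass a lambda that returns `nullptr` after a few calls to check that the partial result is reported correctly.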

Usage Instructions

Usage: MEMCONS [options][/N:number-of-blocks]

Parameters and options:

/B:block-size size of each block in Kb (default: 1 Kb)

/N:number-of-blocks that will be allocated (mandatory)

/T:duration overall duration of the simulation (default: 0,as quick as possible)

/I wait or user interaction when memory is allocated

/H or /HELP display this help

/S or /SILENT don't display progress

/STAT show stats on individual allocations

/D dumps block details on screen
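For readers who want to port or extend the tool, the option syntax above could be parsed along these lines. This is only a sketch: the `Options` struct, `parse_options` and `total_bytes` are hypothetical names, and accepting lower-case option letters is an assumption, since the usage text only shows upper case.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <exception>
#include <string>
#include <vector>

// Hypothetical container for the documented options.
struct Options {
    unsigned long long block_kb = 1;     // /B, default from the usage text
    unsigned long long blocks = 0;       // /N, mandatory
    unsigned long long duration_ms = 0;  // /T, 0 = as quick as possible
    bool wait = false;                   // /I
    bool help = false;                   // /H or /HELP
    bool silent = false;                 // /S or /SILENT
    bool stats = false;                  // /STAT
    bool dump = false;                   // /D
};

// Accepts only a plain non-negative decimal number with no trailing text.
static bool parse_number(const std::string& text, unsigned long long& value)
{
    if (text.empty() || !std::isdigit(static_cast<unsigned char>(text[0])))
        return false;
    try {
        std::size_t used = 0;
        value = std::stoull(text, &used);
        return used == text.size();
    } catch (const std::exception&) {
        return false;  // out of range
    }
}

// Returns false on an unknown option, a bad number, or a missing /N.
bool parse_options(const std::vector<std::string>& args, Options& opt)
{
    for (const std::string& arg : args) {
        if (arg.size() < 2 || arg[0] != '/') return false;
        const std::size_t colon = arg.find(':');
        std::string key =
            arg.substr(1, colon == std::string::npos ? colon : colon - 1);
        for (char& c : key)  // assumption: options are case-insensitive
            c = static_cast<char>(std::toupper(static_cast<unsigned char>(c)));
        const std::string value =
            colon == std::string::npos ? std::string() : arg.substr(colon + 1);

        if (key == "B") {
            if (!parse_number(value, opt.block_kb) || opt.block_kb == 0) return false;
        } else if (key == "N") {
            if (!parse_number(value, opt.blocks) || opt.blocks == 0) return false;
        } else if (key == "T") {
            if (!parse_number(value, opt.duration_ms)) return false;
        } else if (key == "I") opt.wait = true;
        else if (key == "H" || key == "HELP") opt.help = true;
        else if (key == "S" || key == "SILENT") opt.silent = true;
        else if (key == "STAT") opt.stats = true;
        else if (key == "D") opt.dump = true;
        else return false;
    }
    return opt.help || opt.blocks > 0;
}

// Total request in bytes, e.g. /B:1024 /N:4096 gives 4 GB.
unsigned long long total_bytes(const Options& opt)
{
    assert(opt.blocks > 0);  // only meaningful when /N was given
    return opt.block_kb * 1024ULL * opt.blocks;
}
```

With the arguments `/B:1024 /N:4096 /T:30000 /I`, this sketch yields 1024 KB blocks, 4096 of them, a 30 second time frame and the wait flag set.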

Tips for Creating Test Conditions

The limits of Windows are quite large. For example, a 64-bit process can address up to 8 TB of user-mode virtual memory on x64 Windows, and up to 128 TB starting with Windows 8.1.

Unfortunately, if your virtual memory settings are automatic, you might end up consuming all your disk space if the timing profile lets the OS resize its paging file between the memory allocation calls.

To avoid this effect, you could set a maximum for the virtual memory. For this purpose, run the following Windows command:

SystemPropertiesAdvanced

Alternatively, you can get there by opening System from the System menu or the Control Panel, then choosing Advanced System Settings:

You then have to go to the performance settings, and check the virtual memory options:

Make sure to uncheck the automatic management option, and set a reasonable maximum size. You can set this back as soon as your test is over.

Points of Interest

The tool is easy to use. By pushing the system to its limit, I could effectively observe the behavior of some software under adverse circumstances.

However, be aware that if all the memory is consumed, it's gone for all processes! So be sure not to run other unrelated software during the test. I managed to freeze a famous, reliable browser, and saw a command line window disappear unexpectedly from the screen. This shows that stress testing is really not a luxury and should be done more consistently.

The tool also shows performance statistics of memory allocation and deallocation for the different scenarios. I could for example observe that, within the limits of the physical memory, deallocation is 2 to 3 times slower than allocation. As soon as you've reached those limits and the overall virtual memory has to be increased, the average allocation time can be multiplied by a factor of 10 to 20.
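If you want to reproduce this kind of measurement in your own code, a rough sketch with `std::chrono` could look like this. The names `Timing`, `time_blocks` and `average_us` are hypothetical and this is not how MEMCONS itself collects its statistics; the figures you get will depend heavily on the allocator, the OS and the machine.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstdlib>
#include <ratio>
#include <vector>

// Hypothetical result of one timed run.
struct Timing {
    std::size_t blocks;                            // blocks actually obtained
    std::chrono::steady_clock::duration alloc_total;
    std::chrono::steady_clock::duration free_total;
};

// Times the allocation phase and the release phase separately.
// Note: reserve() may itself throw for a huge `count`; that is left to the caller.
Timing time_blocks(std::size_t count, std::size_t block_bytes)
{
    assert(block_bytes > 0);
    using clock = std::chrono::steady_clock;

    std::vector<void*> held;
    held.reserve(count);  // keep bookkeeping growth out of the timed loop

    const auto t0 = clock::now();
    for (std::size_t i = 0; i < count; ++i) {
        void* p = std::malloc(block_bytes);
        if (p == nullptr) break;  // stop at the first failure
        held.push_back(p);
    }
    const auto t1 = clock::now();
    for (void* p : held) std::free(p);
    const auto t2 = clock::now();

    return Timing{held.size(), t1 - t0, t2 - t1};
}

// Average time per block in microseconds (0 if no block was obtained).
double average_us(std::chrono::steady_clock::duration total, std::size_t blocks)
{
    if (blocks == 0) return 0.0;
    return std::chrono::duration<double, std::micro>(total).count() /
           static_cast<double>(blocks);
}
```

Comparing `average_us(t.alloc_total, t.blocks)` with `average_us(t.free_total, t.blocks)` for different block sizes and counts gives a first idea of the allocation to deallocation ratio on a given system.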

A future version of MEMCONS could add some randomization, allocating randomly sized blocks and using a time distribution that is not necessarily linear. But for the time being, this first version gets most of the job done.

History