Introduction

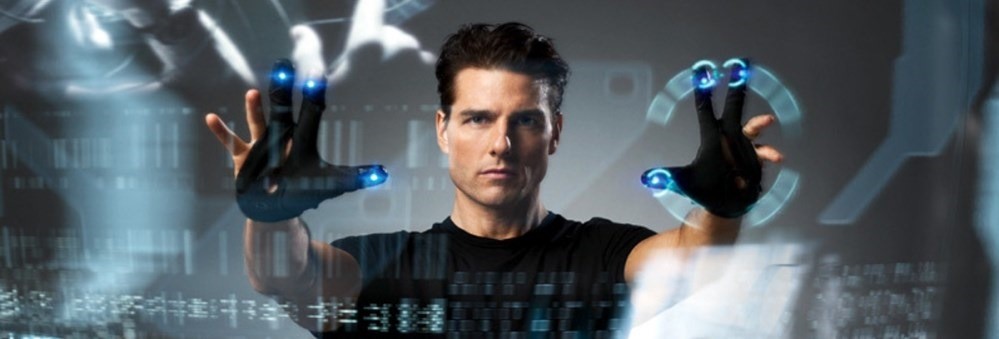

The future of human-computer interaction (HCI) is about to undergo a paradigm shift. Ever since the beginning of computing, scientists and engineers have worked toward creating 3D environments and virtual and augmented worlds that integrate the digital and physical spheres of our lives. Early science-fiction books and movies show that we have long dreamt of creating such environments and interactions.

Today, we live in very exciting times. We are getting closer to turning these science-fiction ideas into science facts; in fact, to some extent we already have. Virtual Reality (VR) is a broad field, and one of its most important aspects is the ability to interact with the digital world. This article concentrates on user interaction with the digital world using the hardware sensor developed by Leap Motion.

This article covers how to integrate and use the Leap Motion hand motion sensor with Unity 3D. We will not cover the basics of Unity 3D here; if this is your first time reading the Unity 3D articles, please refer to the following article series to get started:

- Unity 3D – Game Programming – Part 1

- Unity 3D – Game Programming – Part 2

- Unity 3D – Game Programming – Part 3

- Unity 3D – Game Programming – Part 4

- Unity 3D – Game Programming – Part 5

- Unity 3D – Game Programming – Part 6

- Unity 3D – Game Programming – Part 7

- Unity 3D – Game Programming – Part 8

- Unity 3D – Game Programming – Part 9

- Unity 3D – Game Programming – Part 10

Unity 3D Networking Article(s):

- Unity 3D - Network Game Programming

The articles listed above will give you a good starting foundation regarding Unity 3D.

NOTE: To experiment with the code provided in this article, you will need the Leap Motion hardware.

Introduction to Game Programming: Using C# and Unity 3D (Paperback) or (eBook) is designed and developed to help individuals who are interested in the field of computer science and game programming. It is intended to illustrate the concepts and fundamentals of computer programming, and it uses the design and development of simple games to illustrate and apply those concepts.

Paperback:

- ISBN: 9780997148404

- Edition: First Edition

- Publisher: Noorcon Inc.

- Language: English

- Pages: 274

- Binding: Perfect-bound Paperback (Full Color)

- Dimensions (inches): 6 wide x 9 tall

eBook (ePUB):

- ISBN: 9780997148428

- Edition: First Edition

- Publisher: Noorcon Inc.

- Language: English

- Size: 9.98 MB

Background

It is assumed that the reader of this article is familiar with programming concepts in general and has an understanding of, and experience with, the C# language. It is also recommended that the reader be familiar with object-oriented programming and design concepts. We will cover them briefly throughout the article as needed, but we will not go into detail, as they are separate topics altogether. We also assume that you have a passion for learning 3D programming and a basic theoretical understanding of 3D graphics and vector math.

Lastly, the article uses Unity 3D version 5.1, which is the latest public release as of the initial publication date. Most of the topics discussed in the series will also be compatible with older versions of the game engine.

Using the Code

Follow the instructions below; the setup is straightforward.

What is Leap Motion?

“In just one hand, you have 29 bones, 29 joints, 123 ligaments, 48 nerves, and 30 arteries. That’s sophisticated, complicated, and amazing technology (times two). Yet it feels effortless. The Leap Motion Controller has come really close to figuring it all out.”

The Leap Motion Controller senses how you naturally move your hands and lets you use your computer in a whole new way. It tracks all 10 fingers with a precision of up to 1/100th of a millimeter, which makes it dramatically more sensitive than existing motion control technology. That's how you can draw or paint mini masterpieces inside a one-inch cube.

Figure 1-Leap Motion Sensor

Leap Motion Setup and Unity 3D Integration

First, you will need to download the SDK for Leap Motion. You can get the latest version from developer.leapmotion.com; I am using SDK v.2.2.6.29154. After you download the SDK, go ahead and install the runtime. The SDK also supports other platforms and languages, which you are encouraged to look into.

Assuming you have already downloaded and installed Unity 5 on your machine, the next thing you will need for the Unity integration is the Leap Motion Unity Core Assets package from the Asset Store. At the time of this writing, the asset package was at version 2.3.1 (Leap Motion Unity Core Assets v2.3.1).

Figure 2-Leap Motion Core Assets

After downloading and installing the Leap Motion Core Assets, we can start creating our simple scene and learn how to interact with the assets and extend them for our own purposes.

Figure 3-Project Folder Structure for Leap Motion

Go ahead and create a new empty project and import the Leap Motion Core Assets into your project. After you have imported the Leap Motion Assets, your project window should look similar to the figure above.

Leap Motion Core Assets

In the Project window, focus mainly on the LeapMotion folder. The folders whose names contain OVR are geared toward using Leap Motion with the Oculus Rift virtual reality headset; we will cover these in future article(s).

Take the time to study the structure and, more importantly, the content of each folder. One of the main core assets you will want to become familiar with is the HandController. This is the main prefab that allows you to interact with the Leap Motion device in your scene. It is located in the Prefab folder within the LeapMotion folder, and it serves as the anchor point for rendering your hands in the scene.

Figure 4-HandController Inspector Properties

The HandController prefab has the Hand Controller script attached, which enables you to interact with the device. Take a look at some of the properties exposed in the Inspector window. You will notice that there are two hand properties for the graphical hand models rendered in the scene, and two more for the physics models, which provide the colliders. The benefit of this design is that you can create your own hand models and use them with the controller for visual representation, custom gestures, and so on.

NOTE: Unity and Leap Motion both use the metric system, but with different base units: a Unity unit is conventionally treated as one meter, whereas Leap Motion reports measurements in millimeters. This is not a big deal, but you need to be aware of it when you are working with coordinates.
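To make the unit difference concrete, here is a minimal, Unity-free C# sketch using System.Numerics. The class LeapUnitConversion and its method are hypothetical names for illustration. Besides scaling millimeters to meters, it negates Z, assuming the default (non-mirrored) setup, because Leap Motion uses a right-handed coordinate system while Unity uses a left-handed one. The Core Assets ship their own conversion extensions (such as ToUnityScaled, which appears in the second listing of this article); this sketch is not that code.

```csharp
using System.Numerics;

// Hypothetical helper for illustration only; not part of the Leap Motion Core Assets.
public static class LeapUnitConversion
{
    // Leap Motion reports millimeters; a Unity unit is conventionally one meter.
    public const float MillimetersToMeters = 0.001f;

    // Converts a Leap Motion position (millimeters, right-handed) into a
    // Unity-style position (meters, left-handed). Assumption: switching
    // handedness is done by negating Z, as for a non-mirrored setup.
    public static Vector3 LeapToUnityMeters(Vector3 leapMillimeters)
    {
        Vector3 meters = leapMillimeters * MillimetersToMeters;
        return new Vector3(meters.X, meters.Y, -meters.Z);
    }
}
```

For example, a fingertip reported at (100, 200, 50) mm ends up at approximately (0.1, 0.2, -0.05) in Unity units.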

Another key property is the Hand Movement Scale vector. It is used to change the range of motion of the hands without changing the apparent model size: the larger the scale, the farther your virtual hands travel in the scene for the same physical movement, so they can reach a larger area of the scene. A word of caution: read the documentation and specifications to find the right adjustments for the particular application you are working on.
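To see why a larger movement scale reaches farther without resizing the hands, here is a small arithmetic sketch in plain C#. It rests on our own simplifying assumption, not on the asset's documented implementation: the scale multiplies the hand's offset from the HandController component-wise before the anchor position is added, and the HandController itself is neither rotated nor scaled. HandMovementScaleSketch is a hypothetical name.

```csharp
using System.Numerics;

// Hypothetical sketch: how a per-axis movement scale stretches hand motion
// around an anchor point. Assumes an unrotated, unscaled anchor.
public static class HandMovementScaleSketch
{
    // anchor:        HandController position in the scene (Unity units).
    // localOffset:   tracked hand position relative to the device (Unity units).
    // movementScale: per-axis multiplier, analogous to Hand Movement Scale.
    public static Vector3 ScaledHandPosition(Vector3 anchor, Vector3 localOffset, Vector3 movementScale)
    {
        // In System.Numerics, Vector3 * Vector3 multiplies component by component.
        return anchor + localOffset * movementScale;
    }
}
```

With the HandController at (0,-3,3), as in the demo scene described next, a hand offset of (0.1,0.2,0) and a scale of (2,2,2) lands at roughly (0.2,-2.6,3). The hand travels twice as far, while the hand model's size is untouched because only its position is scaled.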

The placement of the HandController object in the scene is important. As stated, it is the anchor point, so your camera should be in the same area as the HandController. In our demo scene, place the HandController at position (0,-3,3) for the (x,y,z) coordinates, and make sure the camera is positioned at (0,0,-3). Take a look at the coordinates and visualize how the components are laid out in 3D space. Here is a diagram for you to consider:

Figure 5-Visual representation of HandController and Camera positions

In other words, you want the HandController to be in front of the Camera GameObject and somewhat below it. There are no magic numbers here; you will need to experiment to find what works best for you.
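The placement rule can be checked with a little vector arithmetic. This plain C# sketch assumes an unrotated camera looking down +Z with +Y up, which is Unity's default orientation. RigPlacement is a hypothetical helper, not part of Unity or the Core Assets. With the positions given above, the offset from the camera to the HandController is (0,-3,6): 6 units in front of the camera and 3 below it.

```csharp
using System.Numerics;

// Hypothetical check, assuming an unrotated camera that looks down +Z
// (Unity's default forward direction) with +Y up.
public static class RigPlacement
{
    // Vector from the camera to the HandController.
    public static Vector3 OffsetFromCamera(Vector3 camera, Vector3 handController)
        => handController - camera;

    // True when the HandController is in front of (larger Z) and below (smaller Y) the camera.
    public static bool IsInFrontAndBelow(Vector3 camera, Vector3 handController)
    {
        Vector3 offset = OffsetFromCamera(camera, handController);
        return offset.Z > 0f && offset.Y < 0f;
    }
}
```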

At this point, you have connected all of the fundamental pieces needed to run your scene and try out Leap Motion. Go ahead and give it a try: if you have installed all of the software components properly, you should see your hands in the scene.

The Next Step

Being able to visualize your hand movements in the scene is, by itself, a significant achievement of the provided assets. However, to make things more interesting, you will need to interact with the environment and change and manipulate objects within the scene.

To illustrate this point, we will create a few more GameObjects and look at how to implement some basic interactions with them. The idea is to have a cube suspended in the air whose color we can change by selecting from another set of cubes representing our color palette.

To keep the scene simple, we will place three cubes representing our color palette: the first will be red, the second blue, and the third orange. You may choose any colors you wish. We need to place the cubes so that interacting with them is easy and unambiguous for the user.

We can place the cubes in the following order:

- The Cube: (0,1,3)

- Red Cube: (3,-1,3)

- Blue Cube: (0,-1,3)

- Orange Cube: (-3,-1,3)

Notice that the cubes are above the HandController GameObject on the Y axis, but share its coordinate on the Z axis. You can adjust these numbers to your liking; as long as the cubes are above the HandController and within its detection range, you should be fine.
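If you tweak the layout, a quick programmatic check of the two properties just described can save some trial and error. The dictionary below simply restates the listed positions. PaletteLayout is a hypothetical name, and this is plain C# rather than Unity code.

```csharp
using System.Collections.Generic;
using System.Numerics;

// Hypothetical layout data restating the positions listed above.
public static class PaletteLayout
{
    public static readonly Vector3 HandController = new Vector3(0f, -3f, 3f);

    public static readonly Dictionary<string, Vector3> Cubes = new Dictionary<string, Vector3>
    {
        ["The Cube"] = new Vector3(0f, 1f, 3f),
        ["Red Cube"] = new Vector3(3f, -1f, 3f),
        ["Blue Cube"] = new Vector3(0f, -1f, 3f),
        ["Orange Cube"] = new Vector3(-3f, -1f, 3f),
    };

    // Every cube must be above the HandController on Y and share its Z coordinate.
    public static bool AllAboveAndAligned()
    {
        foreach (Vector3 p in Cubes.Values)
        {
            if (p.Y <= HandController.Y || p.Z != HandController.Z)
                return false;
        }
        return true;
    }
}
```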

The next step is to create the script that will help us interact with the Cube GameObjects. I call the script CubeInteraction.cs:

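using UnityEngine;
using System.Collections;

public class CubeInteraction : MonoBehaviour {

    // The color this cube represents (used when the cube is a palette cube).
    public Color c;

    // The color most recently picked from the palette, shared by all instances.
    public static Color selectedColor;

    // True for palette cubes; false for the cube that gets painted.
    public bool selectable = false;

    void OnTriggerEnter(Collider other)
    {
        // Only react to the index finger: its bone colliders are children of
        // the "index" GameObject in the hand model. Colliders without a parent
        // (other scene objects) are ignored instead of throwing an exception.
        Transform parent = other.transform.parent;
        if (parent == null || !parent.name.Equals("index"))
            return;

        if (this.selectable)
        {
            // Palette cube: remember its color and rotate it as visual feedback.
            CubeInteraction.selectedColor = this.c;
            this.transform.Rotate(Vector3.up, 33);
            return;
        }

        // Paintable cube: apply the currently selected color.
        GetComponent<Renderer>().material.color = CubeInteraction.selectedColor;
    }
}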

As you can see, the code is not very complex, but it does help us achieve what we want. Three public variables store whether the object is selectable, the color that the object represents, and the selected color.

The core of the logic happens in the OnTriggerEnter() function. We check whether the object that collided with the cube is the index finger, and then whether the cube is selectable. If it is, we set the selected color to the cube's predefined color and exit the function. Keep in mind that Unity only calls OnTriggerEnter when at least one of the two colliders has Is Trigger enabled and at least one of the objects has a Rigidbody attached.

Another good idea when you are designing such interactions between virtual objects is to give the user visual feedback. In this case, I made the selected color cube rotate 33° each time the index finger collides with the GameObject. This is a good visual cue that we have selected the color.

The same function is also used to apply the selected color to the cube that can be painted. In that case (the cube is not selectable), we get the cube's Renderer component and set its material color to the selected color.
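To see the two roles of the script side by side, here is a Unity-free model of the same logic. It is only an illustration: CubeModel, OnTouched, and the use of strings instead of UnityEngine.Color are hypothetical stand-ins, and the finger check is reduced to comparing a name with "index", much as the script compares the collider's parent name. The accumulated yaw mirrors the 33° feedback rotation; wrapping it at 360° is our own addition.

```csharp
// Unity-free sketch of the CubeInteraction logic, for illustration only.
// Class and member names are hypothetical; colors are plain strings here.
public class CubeModel
{
    // Shared across all cubes, like the static selectedColor field.
    // "none" is an arbitrary starting value for this sketch.
    public static string SelectedColor = "none";

    public string Color;      // color this cube represents (palette) or shows (paintable)
    public bool Selectable;   // true for palette cubes
    public float YawDegrees;  // accumulated feedback rotation

    public CubeModel(string color, bool selectable)
    {
        Color = color;
        Selectable = selectable;
    }

    // Mirrors OnTriggerEnter: ignore anything that is not the index finger.
    public void OnTouched(string touchingPartName)
    {
        if (touchingPartName != "index")
            return;

        if (Selectable)
        {
            // Palette cube: pick its color and rotate 33 degrees as feedback.
            SelectedColor = Color;
            YawDegrees = (YawDegrees + 33f) % 360f;
            return;
        }

        // Paintable cube: take on the currently selected color.
        Color = SelectedColor;
    }
}
```

Touching the blue palette cube and then the paintable cube leaves both showing blue, while touches from anything other than the index finger are ignored.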

Running the Scene

The following is a sample screen capture of the scene you just set up:

Figure 6-Running Demo Scene with Left Hand

You can see my left hand in the screen capture above; my right hand is on the mouse taking the screenshot! The next screen capture shows the blue cube being selected:

Figure 7-Selecting Blue Color Cube

And finally applying the selected color to the colorable cube in the scene:

Figure 8-Blue Color Applies to Paintable Cube

How About Moving Objects?

At this point, you may be saying to yourself: all this is cool, but what if I want more interaction with my 3D environment? Say, for instance, that you want to pick up objects and move them around in the scene.

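This is very much possible, but to make it happen we need to write more code! Extending our example, we will implement code that lets you create the cube with a hand gesture, remove it with another gesture, and rotate it with your hand. The script also computes the world position of your right index fingertip, which is the starting point for picking up the cube and moving it around in the environment.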

The listing for grabbing a given object in the scene is provided in GrabMyCube.cs:

using UnityEngine;
using UnityEngine.UI;
using System.Collections;
using Leap;

public class GrabMyCube : MonoBehaviour {

    public GameObject cubePrefab;   // prefab instantiated on a CIRCLE gesture
    public HandController hc;       // reference to the HandController in the scene
    private HandModel hm;

    // UI labels used to display hand information.
    public Text lblNoDeviceDetected;
    public Text lblLeftHandPosition;
    public Text lblLeftHandRotation;
    public Text lblRightHandPosition;
    public Text lblRightHandRotation;

    void Start()
    {
        // Gestures must be enabled on the Leap controller before they are reported.
        hc.GetLeapController().EnableGesture(Gesture.GestureType.TYPE_CIRCLE);
        hc.GetLeapController().EnableGesture(Gesture.GestureType.TYPE_SWIPE);
        hc.GetLeapController().EnableGesture(Gesture.GestureType.TYPE_SCREEN_TAP);
    }

    private GameObject cube = null;   // the instantiated cube, or null if none exists
    Frame currentFrame;
    Frame lastFrame = null;
    Frame thisFrame = null;
    long difference = 0;

    void Update()
    {
        // Grab the latest tracking frame once and reuse it below.
        this.currentFrame = hc.GetFrame();
        GestureList gestures = this.currentFrame.Gestures();

        foreach (Gesture g in gestures)
        {
            Debug.Log(g.Type);

            // CIRCLE: create the cube if it does not exist yet.
            if (g.Type == Gesture.GestureType.TYPE_CIRCLE)
            {
                if (this.cube == null)
                {
                    this.cube = GameObject.Instantiate(this.cubePrefab,
                        this.cubePrefab.transform.position,
                        this.cubePrefab.transform.rotation) as GameObject;
                }
            }

            // SWIPE: destroy the cube if it exists.
            if (g.Type == Gesture.GestureType.TYPE_SWIPE)
            {
                if (this.cube != null)
                {
                    Destroy(this.cube);
                    this.cube = null;
                }
            }
        }

        foreach (var h in this.currentFrame.Hands)
        {
            if (h.IsRight)
            {
                this.lblRightHandPosition.text =
                    string.Format("Right Hand Position: {0}", h.PalmPosition.ToUnity());
                this.lblRightHandRotation.text =
                    string.Format("Right Hand Rotation: <{0},{1},{2}>",
                        h.Direction.Pitch, h.Direction.Yaw, h.Direction.Roll);

                // Leap angles are in radians; Quaternion.Euler expects degrees.
                if (this.cube != null)
                    this.cube.transform.rotation = Quaternion.Euler(
                        h.Direction.Pitch * Mathf.Rad2Deg,
                        h.Direction.Yaw * Mathf.Rad2Deg,
                        h.Direction.Roll * Mathf.Rad2Deg);

                foreach (var f in h.Fingers)
                {
                    if (f.Type() == Finger.FingerType.TYPE_INDEX)
                    {
                        // Index fingertip: Leap coordinates -> scaled Unity units -> world space.
                        Leap.Vector position = f.TipPosition;
                        Vector3 unityPosition = position.ToUnityScaled(false);
                        Vector3 worldPosition = hc.transform.TransformPoint(unityPosition);
                        // worldPosition is not used yet; assigning it to the cube's
                        // position would make the cube follow your index finger.
                    }
                }
            }

            if (h.IsLeft)
            {
                this.lblLeftHandPosition.text = string.Format
                    ("Left Hand Position: {0}", h.PalmPosition.ToUnity());
                this.lblLeftHandRotation.text = string.Format
                    ("Left Hand Rotation: <{0},{1},{2}>", h.Direction.Pitch,
                        h.Direction.Yaw, h.Direction.Roll);

                // Same radians-to-degrees conversion as for the right hand.
                if (this.cube != null)
                    this.cube.transform.rotation = Quaternion.Euler(
                        h.Direction.Pitch * Mathf.Rad2Deg,
                        h.Direction.Yaw * Mathf.Rad2Deg,
                        h.Direction.Roll * Mathf.Rad2Deg);
            }
        }
    }
}

Several things are happening in this code. I will point out the most important sections and leave the rest for you to work through. For instance, I am not going to cover the UI code; see the article series for a detailed explanation of how to set up and work with the new UI framework.

We have a public HandController object defined as hc. This is a reference to the HandController in the scene, so we can access its functions and properties as needed. The first thing we need to do is enable the hand gestures we want the device to report, which is done in the Start() function through the controller returned by hc.GetLeapController(). The SDK provides several predefined gestures, and we use some of them here: we have enabled the CIRCLE, SWIPE, and SCREEN_TAP gesture types (SCREEN_TAP is enabled but not handled in Update()).

We have also defined two variables of type GameObject named cubePrefab and cube. The variable cubePrefab is a reference to a prefab that was created to represent our cube with the appropriate materials and components, and cube holds the instance created from it, or null when no cube exists.

Let's take a look at the Update() function. This is where everything happens; it might be a little confusing at first, but you will get the hang of it in no time. The first thing the function does is grab the current frame from the HandController object. A Frame object contains all of the tracking information for that instant, including the hands, fingers, and detected gestures. The next step is to get the list of all the gestures that the sensor detected in that frame. We are looking for a CIRCLE gesture, which instantiates the prefab referenced by the cubePrefab variable.

Next, we loop through each gesture and check its type. If we detect a CIRCLE gesture, we check whether we have already instantiated our cube prefab, and if not, we instantiate it. A SWIPE gesture destroys the instantiated cube.
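The create/destroy rules can be modeled without the SDK as a tiny state machine. GestureKind and GestureCubeState are hypothetical stand-ins for the Leap gesture types and the cube reference; they are not part of the Leap API. The existence checks matter because a circle gesture is a continuous gesture that is typically reported across several consecutive frames; without the guard, each of those reports would spawn another cube.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for the SDK gesture types used in Update().
public enum GestureKind { Circle, Swipe, ScreenTap }

// Unity-free sketch of the create/destroy logic in GrabMyCube.Update().
public class GestureCubeState
{
    // Stands in for the "cube != null" check in the Unity script.
    public bool CubeExists { get; private set; }
    public int CreatedCount { get; private set; }

    public void Process(IEnumerable<GestureKind> gesturesThisFrame)
    {
        foreach (GestureKind g in gesturesThisFrame)
        {
            if (g == GestureKind.Circle && !CubeExists)
            {
                // Instantiate only when there is no cube yet.
                CubeExists = true;
                CreatedCount++;
            }
            else if (g == GestureKind.Swipe && CubeExists)
            {
                // Destroy the existing cube.
                CubeExists = false;
            }
            // ScreenTap is enabled in Start() but not handled.
        }
    }
}
```

Feeding it two circle reports in a row creates exactly one cube, a swipe removes it, and a later circle creates a new one.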

The next loop goes through the hands detected in the current frame, determines whether each is the left or the right hand, and performs hand-specific operations. In this case, we simply display the position and rotation of each hand and rotate the instantiated cube to match the rotation of the right or left hand; for the right hand, the code also computes the world position of the index fingertip. Nothing fancy!
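One detail worth knowing when you map hand rotation onto the cube: Leap Motion's Pitch, Yaw, and Roll values are in radians, while Unity's Quaternion.Euler expects degrees (the older Quaternion.EulerRotation takes radians but is marked obsolete in recent Unity versions). Inside Unity you would multiply by Mathf.Rad2Deg. The plain C# sketch below performs the same conversion with a hypothetical helper, mapping pitch, yaw, and roll to the X, Y, and Z Euler angles in the same way as the listing.

```csharp
using System;
using System.Numerics;

// Sketch: converting Leap Motion angles (radians) to the degrees that
// Unity's Quaternion.Euler expects. The helper name is hypothetical.
public static class AngleConversion
{
    // Same value as Unity's Mathf.Rad2Deg (180 / pi).
    public const float RadiansToDegrees = (float)(180.0 / Math.PI);

    // Returns (X, Y, Z) Euler angles in degrees: pitch -> X, yaw -> Y, roll -> Z.
    public static Vector3 PitchYawRollToEulerDegrees(float pitch, float yaw, float roll)
        => new Vector3(pitch * RadiansToDegrees, yaw * RadiansToDegrees, roll * RadiansToDegrees);
}
```

For instance, a pitch of pi/2 radians becomes 90 degrees, which is the value Quaternion.Euler needs for a quarter turn about X.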

You can see the result in the following video: demo.

Points of Interest

Virtual reality has always been one of those topics in the industry that gets a lot of exposure for a while and then cools down. This time around, things are a bit different. The hardware and software ecosystem that makes virtual reality possible is becoming more and more democratized. The hardware, while not cheap, is now at a price point that most developers who are eager to get their hands dirty can afford.

This enables the development community to provide better VR experiences and entertainment. Integrating hand motion and gestures into VR applications is a must. I decided to look into Leap Motion because it is a promising input sensor for touch-free interaction with the computer. The next step will be to integrate Leap Motion with the Oculus Rift.
