By adding the “real sense” of human depth perception to digital imaging, Intel® RealSense™ technology enables 3D photography on mainstream tablets, 2-in-1s, and other RealSense technology-enabled devices. These capabilities are based on extrapolating depth information from images captured by an array of three cameras, producing data for a 3D model that can be embedded into a JPEG photo file.

Software development kits and other developer tools from Intel will abstract depth perception processing so that developers can create applications without low-level expertise in depth processing. Devices that support this end-user functionality are available on the market now.

This article introduces software developers to the key mechanisms used by Intel® RealSense™ technology to implement depth perception in enhanced digital photography.

Encoding Data into a Depth Map

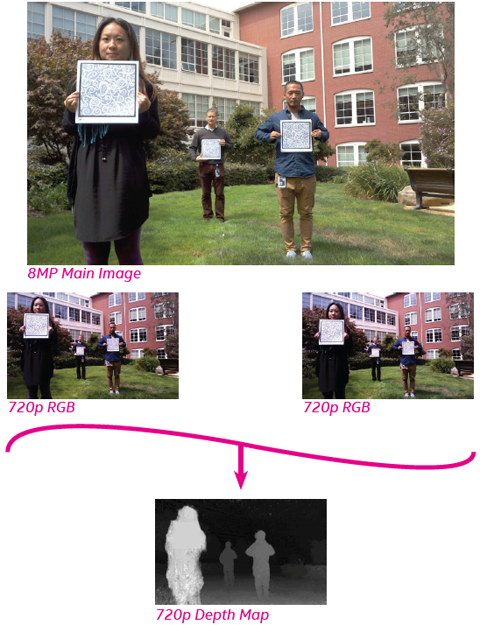

The third dimension in digital photography, as enabled by Intel RealSense technology, is the relative distance between the camera and the various elements within the scene. This information is stored in a depth map, which is conceptually similar to a topographic map: a depth value (z-dimension) is stored for each pixel (x-y coordinate) in the image. Image capture to support depth mapping is accomplished using three camera sensors, as illustrated in Figure 1. Here, the 8-megapixel (MP) main image is augmented with information captured by two 720p red, green, and blue (RGB) sensors.

Figure 1. RGB camera sensor array produces image with depth data.

The actual depth map is produced by computing the disparities between the positions of individual points in the images captured by the three cameras. These disparities arise from parallax due to the physical separation of the cameras on the device. The disparity associated with each point in the scene is mapped onto a grayscale image. Points with smaller disparities are further away from the device and are represented by darker pixels. Points with larger disparities are closer to the device and are represented by lighter pixels. The main image has a higher resolution and can be used independently, or, when an application needs it, the depth information can be used to model 3D space in the scene.
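The disparity-to-grayscale mapping described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the processing used by Intel RealSense technology: it uses the standard pinhole stereo relation Z = f · B / d for a single camera pair, and the focal length (1000 pixels), baseline (8 cm), and disparity values are hypothetical.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Return the distance in meters for one point, using Z = f * B / d."""
    if disparity_px <= 0:
        return float("inf")  # no measurable parallax: treat as infinitely far
    return focal_length_px * baseline_m / disparity_px


def disparity_to_gray(disparities):
    """Scale a 2D list of disparities to 0..255 (larger disparity -> lighter)."""
    flat = [d for row in disparities for d in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid dividing by zero when the scene is flat
    return [[round(255 * (d - lo) / span) for d in row] for row in disparities]


# Hypothetical 2x2 disparity grid, in pixels.
disparities = [[4.0, 8.0], [16.0, 40.0]]

gray = disparity_to_gray(disparities)
depths = [
    [depth_from_disparity(d, 1000.0, 0.08) for d in row]
    for row in disparities
]
```

With these assumed values, the point with the smallest disparity (4 pixels) maps to the darkest gray level and the largest distance, and the point with the largest disparity (40 pixels) maps to the lightest gray level and the smallest distance.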

The resolution of the depth map is limited by the size of the image captured by the lowest-resolution sensor (720p). The depth map may be saved as an 8-bit or 16-bit PNG file. Typically, the depth map roughly doubles the overall size of the finished JPEG file. The depth information itself is stored along with the main image in a single JPEG file. The JPEG is compatible with standard image viewers. However, when it is viewed on an Intel RealSense 3D Camera-enabled system, the depth information is also retrieved for use by various RealSense apps.
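To get a feel for the difference between 8-bit and 16-bit depth maps, the sketch below computes the smallest depth difference each bit depth could represent. It assumes, purely for illustration, a linear encoding of depth over a 1 to 10 meter range; the article does not specify how values are actually encoded, and the function name is hypothetical.

```python
def quantization_step_m(near_m, far_m, bits):
    """Smallest depth difference a linear encoding with `bits` bits can hold."""
    levels = 2 ** bits - 1  # number of steps between the nearest and farthest value
    return (far_m - near_m) / levels


# Assumed 1 m to 10 m working range, linear encoding.
step_8bit = quantization_step_m(1.0, 10.0, 8)    # roughly 3.5 cm per gray level
step_16bit = quantization_step_m(1.0, 10.0, 16)  # roughly 0.14 mm per gray level
```

Under these assumptions, the 16-bit map resolves depth far more finely than the 8-bit map, at the cost of a larger file.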

Quality of the depth map is dependent on a number of factors, including the following:

- Distance from camera to subject. Distances between 1 and 10 meters provide the optimal depth experience, with 1 to 5 meters providing the best measurement experience.

- Lighting. Dimly lit scenes require higher ISO equivalents, which can produce sensor noise and interfere with distance calculations; glare and reflective surfaces can also adversely affect depth images.

- Texture and contrast. Clear visual distinctions between elements in a scene, as opposed to solid masses of color or busy geometric patterns, help depth algorithms produce dependable results.
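One way to see why distance matters so much for measurement is the standard stereo error relation ΔZ ≈ Z² / (f · B) · Δd: for a fixed disparity error, the depth error grows with the square of the distance. The sketch below is an illustration only. The relation is a general property of stereo triangulation and is not taken from Intel documentation, and the focal length, baseline, and quarter-pixel disparity error are hypothetical values.

```python
def depth_error_m(distance_m, focal_length_px, baseline_m, disparity_error_px):
    """Approximate depth uncertainty at a given distance for a stereo pair."""
    return distance_m ** 2 / (focal_length_px * baseline_m) * disparity_error_px


# Hypothetical camera: 1000 px focal length, 8 cm baseline, 0.25 px matching error.
errors = {
    z: depth_error_m(z, 1000.0, 0.08, 0.25)
    for z in (1.0, 5.0, 10.0)
}
```

With these assumed numbers, the uncertainty is a few millimeters at 1 meter, several centimeters at 5 meters, and about 30 centimeters at 10 meters, which is consistent with shorter distances being better suited to measurement.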

Hardware and Use Cases

Depth photography is currently available using the Intel RealSense R100 rear-facing three-camera array as featured in the Dell Venue 8 7840 Android tablet. At only 6 millimeters (less than 1/4”) thick and approximately 300 grams (0.7 pounds), this Venue tablet is powered by the 2.3 GHz Intel® Atom™ processor Z3580 and provides an 8.4-inch OLED display with 2560 x 1600 resolution.

One common use case for depth mapping in real-world applications is to produce accurate measurements of objects in a photographed scene after the image has been captured. This is accomplished using the 3D data within the depth map. To illustrate this concept in a light-hearted way, Intel created the “Fish Demo,” as shown in Figure 2, where two friends display the fish they have caught.

Figure 2. Intel® RealSense™ technology dispels a false fish story using actual measurements.

While one of the two men has caught the smaller fish (11 inches, compared to his friend’s fish that is 3 feet and 1 inch long), he crowds in closer to the camera, making his catch appear larger in a conventional photograph. In this demonstration, the Measurement application allows for actual measurements of each fish to be taken with a simple tap of the screen on the head and tail of each, and the actual measurements are superimposed over the image.
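A tap-to-measure feature of this kind can be approximated by back-projecting the two tapped pixels into 3D using their depth values and taking the straight-line distance between them. The sketch below is a minimal illustration assuming a simple pinhole camera model. It is not the Measurement application's actual algorithm, and the function names, the tiny depth map, and the camera parameters are all hypothetical.

```python
import math


def pixel_to_point(u, v, depth_m, focal_length_px, cx, cy):
    """Back-project pixel (u, v) with known depth into camera-space meters."""
    x = (u - cx) * depth_m / focal_length_px
    y = (v - cy) * depth_m / focal_length_px
    return (x, y, depth_m)


def measure_m(tap_a, tap_b, depth_map, focal_length_px, cx, cy):
    """Return the 3D distance in meters between two tapped pixels."""
    points = []
    for u, v in (tap_a, tap_b):
        depth_m = depth_map[v][u]  # depth map is indexed as [row][column]
        points.append(pixel_to_point(u, v, depth_m, focal_length_px, cx, cy))
    return math.dist(points[0], points[1])


# Hypothetical scene: row 0 is 1 m from the camera, row 1 is 3 m away.
depth_map = [
    [1.0] * 6,
    [3.0] * 6,
]

# Both objects span the same four pixels on screen.
near_length = measure_m((1, 0), (5, 0), depth_map, 10.0, 3.0, 0.5)
far_length = measure_m((1, 1), (5, 1), depth_map, 10.0, 3.0, 0.5)
```

In this toy scene, both objects cover the same number of pixels, yet the one three times further away comes out three times longer. That is the effect the Fish Demo exploits: apparent size in the photo is not actual size.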

A broad range of similar use cases is possible. Parents could document the growth of their children in a digital photo album as opposed to marking up their door frames. Shopping for furniture could be simplified by identifying how pieces in the showroom would fit into the living room back at home. For further illustration, consider the series of television commercials featuring Jim Parsons, including the scene in Figure 3, where he explains to a stunt-bike rider how taking measurements ahead of time with Intel RealSense technology could have made a bike jump successful.

About the Author

Kyle Mabin has been with Intel for 22 years and is a Technical Marketing Engineer with SSG’s Developer Relations Division. He is based in Chandler, AZ.