Introduction

Maybe your app works with cooking recipes, people’s profiles, vehicle records, teammates, or students, to name a few. You may want to give users a way to attach a photo to them. To support this feature, you will want to provide a minimal suite of image manipulation operations. One basic operation is cropping an image.

I recently had such a need. Like many programmers, I ran a Google search to see what I could learn from others’ experiences. I found that many had struggled with this problem, and I was never quite satisfied with their solutions. This article shares what I learned and details the implementation of a class called MMSCropImageView, which you can use to support basic image cropping.

Background

When you work with still images, you use UIImage to display them. UIImage is the glue between UIImageView and the underlying bitmap. It renders the bitmap to the current graphics context and configures rendering attributes such as transformation, orientation, and scaling. However, none of these operations change the underlying representation. They control how the bitmap is rendered to the graphics context; to change the bitmap itself, you need to work with the Core Graphics framework.

Working with UIImage’s bitmap can be disorienting because it’s natural to think that the UIImage maps one-to-one to it. It doesn’t. Often what you see on the screen is a transformation. For example, the UIImage may report a width of 4288 and a height of 2848 while the bitmap is stored rotated 90 degrees, with a width of 2848 and a height of 4288.
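The relationship between the two sizes can be sketched in plain C, which is also valid Objective-C. `Size`, `Orientation`, and `displayed_size` are hypothetical stand-ins for CGSize, UIImageOrientation, and a simplified model of how UIImage’s size relates to its bitmap; they are defined locally so the sketch compiles without Apple frameworks, and the mirrored orientations are omitted for brevity.

```c
#include <assert.h>

/* Hypothetical stand-ins for CGSize and the non-mirrored UIImageOrientation values. */
typedef struct { double width, height; } Size;
typedef enum { OrientUp, OrientDown, OrientLeft, OrientRight } Orientation;

/* Returns the size an image appears to have on screen given the size of its
   stored bitmap: a quarter turn (left or right) swaps width and height,
   while up and down leave them unchanged. */
Size displayed_size(Size bitmap, Orientation orientation) {
    assert(bitmap.width >= 0 && bitmap.height >= 0);
    if (orientation == OrientLeft || orientation == OrientRight) {
        Size swapped = { bitmap.height, bitmap.width };
        return swapped;
    }
    return bitmap;
}
```

With the numbers above, a bitmap stored as 2848 by 4288 with a left orientation is displayed as 4288 by 2848.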

If you work with the bitmap without accounting for these properties, you may scratch your head wondering why, after a transformation, the resulting bitmap doesn’t match your expectations. Cropping is one such use case.

When a user drags a rectangle over an image, the rectangle’s orientation is relative to the UIImage and the transformation rendered to the screen. So, if imageOrientation is set to any value other than UIImageOrientationUp and you crop the bitmap without factoring in this property, the resulting image will be wrong in different ways: rotated, mirrored, or cut from the wrong region.

The other attribute to factor in is the image’s dimensions relative to the view’s. Most often they differ. Since the user identifies the crop region through the view, the dimensions of the bitmap, the view, and the crop rectangle must be normalized so that what gets extracted from the bitmap matches what you see on screen.

CropDemo App

The remainder of this article describes a simple program called CropDemo. I will use it to demonstrate how to use MMSCropImageView and the helper classes to crop an image.

The app displays a large image at the top of the screen. The user drags a finger over the image to identify the crop region. As the finger drags across the image, a translucent white rectangle appears. To reposition the rectangle, press a finger on it and move it around the image. Tap outside the rectangle to clear it. To crop the image, tap the crop button at the bottom of the screen; the area covered by the rectangle is displayed below the original image.

Figure 1 - Application Window

The application includes a class and a category that you can use unchanged in your own application: MMSCropImageView and UIImage+cropping.

MMSCropImageView is a subclass of UIImageView. It provides the features for dragging a rectangle over the view, moving it, clearing it, and returning a UIImage containing the region of the original image identified by the crop rectangle.

The category named UIImage+cropping adds methods to the UIImage class to crop the bitmap. It can be used independently of the MMSCropImageView class.

Cropping a UIImage

The UIImage+cropping category implements a public method that crops the bitmap and returns the result as a UIImage.

-(UIImage*)cropRectangle:(CGRect)cropRect inFrame:(CGSize)frameSize

The parameter cropRect is the rectangular region to cut from the image. Its dimensions are relative to the second parameter, frameSize.

The parameter frameSize holds the dimensions of the view where the image is rendered.

Since all the user’s inputs are relative to the view, it’s necessary to normalize the dimensions between the view and the image. The approach taken is to resize the bitmap to the view’s dimensions.

The other variable to factor in is the image’s orientation. It determines how to position the crop rectangle over the bitmap and how to scale the bitmap relative to the view, because the rendered image may be oriented differently than the bitmap.

Steps for Scaling a Bitmap

These are the steps for scaling the underlying bitmap of a UIImage.

One, check the imageOrientation and swap the scale size’s height and width if the image is oriented left or right.

if (self.imageOrientation == UIImageOrientationLeft || self.imageOrientation == UIImageOrientationRight) {
    scaleSize = CGSizeMake(round(scaleSize.height), round(scaleSize.width));
}

Two, create a bitmap context in the scale dimensions.

// NULL lets Core Graphics allocate the pixel buffer.
// The bytes-per-row argument scales the source's row stride by the width ratio.
CGContextRef context = CGBitmapContextCreate(NULL, scaleSize.width, scaleSize.height,
    CGImageGetBitsPerComponent(self.CGImage),
    CGImageGetBytesPerRow(self.CGImage) / CGImageGetWidth(self.CGImage) * scaleSize.width,
    CGImageGetColorSpace(self.CGImage), CGImageGetBitmapInfo(self.CGImage));

Three, draw the bitmap to the new context.

CGContextDrawImage(context, CGRectMake(0, 0, scaleSize.width, scaleSize.height), self.CGImage);

Four, get a CGImageRef from the bitmap context.

CGImageRef imgRef = CGBitmapContextCreateImage(context);

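Five, instantiate a UIImage from the CGImageRef returned in step four, then release the CGImageRef and the context, which ARC does not manage.

UIImage* returnImg = [UIImage imageWithCGImage:imgRef];
CGImageRelease(imgRef);     // the UIImage keeps its own reference
CGContextRelease(context);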

The variable returnImg has a width and height equal to the scale dimensions. Download the attached code to view the complete implementation of scaleBitmapToSize: in the file UIImage+cropping.m.
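Step two sizes each row of the new context by scaling the source’s bytes per row by the ratio of the widths. The same quantity can also be computed from the pixel format alone, which does not depend on how the source rows are padded. The following is a plain-C sketch; `bytes_per_row_for_width` is a hypothetical helper, not part of the attached code, and in Objective-C its first argument could come from CGImageGetBitsPerPixel(self.CGImage). Core Graphics also documents that when the data argument of CGBitmapContextCreate is NULL, passing 0 for bytesPerRow lets it calculate the value itself.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: the minimum number of bytes needed for one row of
   `width` pixels when each pixel occupies `bits_per_pixel` bits, rounded up
   to a whole byte. */
size_t bytes_per_row_for_width(size_t bits_per_pixel, size_t width) {
    assert(bits_per_pixel > 0);
    return (bits_per_pixel * width + 7) / 8;
}
```

For a source with 32 bits per pixel scaled to a width of 400, the result is 1600 bytes per row.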

Transposing the Crop Rectangle

The crop rectangle’s origin and size are relative to the view. Consequently, to crop the bitmap properly, the rectangle must be transposed according to the imageOrientation property. The method transposeCropRect:inDimension:forOrientation: transposes the crop rectangle into the bitmap’s orientation. The inDimension parameter is the width and height of the frame enclosing the rectangle.

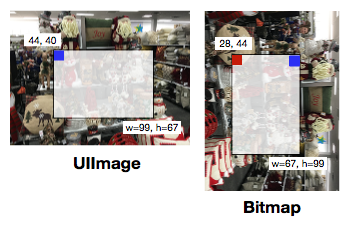

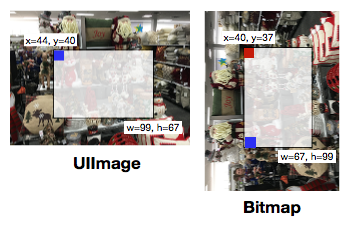

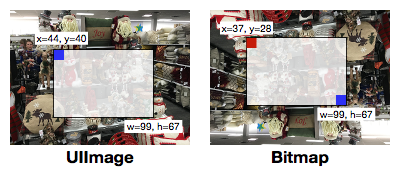

It’s best to show this with pictures. The following images depict the origin and size translations that must occur when imageOrientation is left, right, and bottom. The blue square represents the rectangle’s original origin, and the red rectangle represents where the new one falls after transpose.

Figure 2 - Crop rectangle translation for UIImageOrientationLeft

Figure 3 - Crop rectangle for UIImageOrientationRight

Figure 4 - Crop rectangle for UIImageOrientationDown

UIImageOrientationUp does not require a transposition because the UIImage and the bitmap are identically oriented.

The following code shows the algorithm to transform the origin and size when the orientation is UIImageOrientationLeft.

case UIImageOrientationLeft:
    transposedRect.origin.x = dimension.height - (cropRect.size.height + cropRect.origin.y);
    transposedRect.origin.y = cropRect.origin.x;
    transposedRect.size = CGSizeMake(cropRect.size.height, cropRect.size.width);
    break;

See the attached code in UIImage+cropping.m for the calculations for all possible orientations.
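For reference, here is a plain-C sketch covering the four non-mirrored orientations. The Left case reproduces the formula above; the Down and Right cases are derived by the same reasoning (a 180-degree rotation and a 90-degree clockwise rotation) and may not match the attached UIImage+cropping.m line for line. `Rect`, `Size`, `Orientation`, and `transpose_crop_rect` are hypothetical names, and mirrored orientations are not handled.

```c
#include <assert.h>

/* Hypothetical stand-ins for CGRect, CGSize and the non-mirrored
   UIImageOrientation values, so the sketch compiles without Core Graphics. */
typedef struct { double x, y, width, height; } Rect;
typedef struct { double width, height; } Size;
typedef enum { OrientUp, OrientDown, OrientLeft, OrientRight } Orientation;

/* Maps a crop rectangle given in view coordinates (a view of size `view`)
   into the coordinates of a bitmap that was scaled to the view and is stored
   with the given orientation. For left and right, the bitmap's width is
   view.height and its height is view.width. */
Rect transpose_crop_rect(Rect crop, Size view, Orientation orientation) {
    assert(view.width >= 0 && view.height >= 0);
    Rect out = crop;
    switch (orientation) {
    case OrientUp:                      /* bitmap and display agree */
        break;
    case OrientDown:                    /* rotated 180 degrees */
        out.x = view.width - (crop.x + crop.width);
        out.y = view.height - (crop.y + crop.height);
        break;
    case OrientLeft:                    /* same formula as the Left case above */
        out.x = view.height - (crop.height + crop.y);
        out.y = crop.x;
        out.width = crop.height;
        out.height = crop.width;
        break;
    case OrientRight:                   /* rotated 90 degrees clockwise */
        out.x = crop.y;
        out.y = view.width - (crop.width + crop.x);
        out.width = crop.height;
        out.height = crop.width;
        break;
    }
    return out;
}
```

For a 300 by 200 view and a crop rectangle at (10, 20) of size 50 by 40, the Left case yields a rectangle at (140, 10) of size 40 by 50 in the 200 by 300 bitmap.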

Cropping Steps

Now that the helper methods are explained, cropping a UIImage is quite simple. The first step is to scale the bitmap to the frame size. Since the crop rectangle’s coordinates are expressed in the view’s coordinate space, the bitmap must be in the same space before the rectangle can be applied to it. An alternative would be to scale the crop rectangle to the bitmap’s coordinate space. I chose the former because it matches the user’s perspective.

UIImage* img = [self scaleBitmapToSize:frameSize];

Next, extract the crop region from the bitmap by calling the Core Graphics function CGImageCreateWithImageInRect with the transposed crop rectangle:

CGImageRef cropRef = CGImageCreateWithImageInRect(img.CGImage, [self transposeCropRect:cropRect inDimension:frameSize forOrientation:self.imageOrientation]);

Finally, create a UIImage from the cropped bitmap with the class factory method imageWithCGImage:scale:orientation:, passing a scale factor of 1.0 and the source’s orientation. The orientation is key because it makes the returned image display the same way as its source. If you always use a constant like UIImageOrientationUp, the image will display rotated, mirrored, or both, depending on the original value.

UIImage* croppedImg = [UIImage imageWithCGImage:cropRef scale:1.0 orientation:self.imageOrientation];

The complete implementation of cropRectangle:inFrame: follows:

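-(UIImage*)cropRectangle:(CGRect)cropRect inFrame:(CGSize)frameSize {
    // Use whole points so the scaled bitmap has integral dimensions.
    frameSize = CGSizeMake(round(frameSize.width), round(frameSize.height));
    UIImage* img = [self scaleBitmapToSize:frameSize];
    CGImageRef cropRef = CGImageCreateWithImageInRect(img.CGImage, [self transposeCropRect:cropRect inDimension:frameSize forOrientation:self.imageOrientation]);
    if (cropRef == NULL) {
        return nil;    // the rectangle does not intersect the image
    }
    // Keep the source's orientation so the result displays like the original.
    UIImage* croppedImg = [UIImage imageWithCGImage:cropRef scale:1.0 orientation:self.imageOrientation];
    CGImageRelease(cropRef);    // the UIImage keeps its own reference
    return croppedImg;
}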

Drawing the Crop Rectangle

To identify the crop rectangle, the user drags out a rectangular region over the image. Once drawn, the rectangle can be moved to adjust its origin. The class MMSCropImageView, a subclass of UIImageView, supports drawing and positioning the rectangle and returning the cropped image.

To draw and clear the crop rectangle, a UIPanGestureRecognizer and a UITapGestureRecognizer are added to the view. Because these two gestures must be recognized simultaneously, simultaneous recognition has to be enabled by implementing the UIGestureRecognizerDelegate method gestureRecognizer:shouldRecognizeSimultaneouslyWithGestureRecognizer:. Return YES for one ordering of the pair but not both.

-(BOOL)gestureRecognizer:(UIGestureRecognizer *)gestureRecognizer
shouldRecognizeSimultaneouslyWithGestureRecognizer:(UIGestureRecognizer *)otherGestureRecognizer {
    if ([gestureRecognizer isKindOfClass:[UIPanGestureRecognizer class]]
        && [otherGestureRecognizer isKindOfClass:[UITapGestureRecognizer class]]) {
        return YES;
    }
    return NO;
}

The tap gesture target hides the crop view:

- (IBAction)hideCropRectangle:(UITapGestureRecognizer *)gesture {
    if (!cropView.hidden) {
        cropView.hidden = YES;
        cropView.frame = CGRectMake(-1.0, -1.0, 0.0, 0.0);
    }
}

To identify the gesture’s first touch point more accurately, MMSCropImageView uses the class DragCropRectRecognizer, a subclass of UIPanGestureRecognizer that pinpoints the crop rectangle’s origin. It overrides the method touchesBegan:withEvent: to save the gesture’s first touch point.

I chose this approach over setting the origin when handling the UIGestureRecognizerStateBegan state because I found that the point returned from locationInView: at that stage was shifted from the point where the finger first touched the screen, likely because a pan gesture begins only after the finger has moved a short distance.

Here’s the code for identifying the origin:

// A UIGestureRecognizer subclass must #import <UIKit/UIGestureRecognizerSubclass.h> to override touch handling.
-(void)touchesBegan:(NSSet<UITouch *> *)touches withEvent:(UIEvent *)event {
    // Record where the first new touch landed, in the recognizer's view.
    for (UITouch* touch in touches) {
        if (touch.phase == UITouchPhaseBegan) {
            self.origin = [touch locationInView:self.view];
            break;
        }
    }
    [super touchesBegan:touches withEvent:event];
}

As the user continues to move their finger across the image, the pan gesture repeatedly calls its target, drawRectangle:, which draws the crop rectangle based on the new position of the touch. The crop rectangle is a UIView, added as a subview of the image view, with a translucent white background and a solid white border. The most important factor is to calculate the crop rectangle’s origin and size from the point that began the pan gesture. This point is referred to as the drag origin.

The first step in calculating the crop rectangle is to determine in which quadrant the new point falls relative to the drag origin: upper left (Quadrant I), upper right (Quadrant II), lower right (Quadrant III), or lower left (Quadrant IV). Once that is determined, calculate the new origin and size.

Here’s the code for calculating the crop rectangle’s dimensions when the current point falls in Quadrant III. See the method drawRectangle: in the source file MMSCropImageView.m in the attached source files for all the calculations.

cropRect = cropView.frame;
CGPoint currentPoint = [gesture locationInView:self];
// Quadrant III: the touch is below and to the right of the drag origin.
if (currentPoint.x >= dragOrigin.x && currentPoint.y >= dragOrigin.y) {
    cropRect.origin = dragOrigin;
    cropRect.size = CGSizeMake(currentPoint.x - cropRect.origin.x, currentPoint.y - cropRect.origin.y);
}
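The four quadrant cases can be collapsed into one rule, assuming each case spans the rectangle between the drag origin and the current point: the origin takes the smaller x and the smaller y of the two points, and the size is the distance between them along each axis. The following plain-C sketch is offered as an equivalent formulation rather than the attached code; `Point`, `Rect`, and `rect_from_drag` are hypothetical names.

```c
#include <assert.h>

typedef struct { double x, y; } Point;
typedef struct { double x, y, width, height; } Rect;

/* Builds the crop rectangle spanned by the drag origin and the current touch,
   whichever quadrant the touch is in: the origin is the top-left corner
   (smaller x and y) and the size is the distance between the points. */
Rect rect_from_drag(Point drag_origin, Point current) {
    Rect r;
    r.x = current.x < drag_origin.x ? current.x : drag_origin.x;
    r.y = current.y < drag_origin.y ? current.y : drag_origin.y;
    r.width  = current.x < drag_origin.x ? drag_origin.x - current.x : current.x - drag_origin.x;
    r.height = current.y < drag_origin.y ? drag_origin.y - current.y : current.y - drag_origin.y;
    assert(r.width >= 0 && r.height >= 0);   /* the size is never negative */
    return r;
}
```

For a drag origin of (100, 100) and a touch at (60, 130), in the lower-left quadrant, the result is a rectangle at (60, 100) of size 40 by 30.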

Moving the Crop Rectangle

Once the crop rectangle is drawn, the MMSCropImageView class lets the user reposition it over the image. It adds a UIPanGestureRecognizer to the crop rectangle’s UIView to respond to move gestures. When the gesture begins, two points are recorded: the touch point, referred to as the touch origin, and the crop rectangle’s origin, called the drag origin. Both remain fixed throughout the drag operation.

if (gesture.state == UIGestureRecognizerStateBegan) {
    touchOrigin = [gesture locationInView:self];
    dragOrigin = cropView.frame.origin;
}

As the user’s finger moves across the image, it computes the change in x and y from the touch origin. To reposition the crop rectangle, it updates the frame origin by adding the change in x and y to the corresponding variables in the drag origin. All computations are relative to those starting points.

CGPoint currentPt = [gesture locationInView:self];
CGFloat dx = currentPt.x - touchOrigin.x;
CGFloat dy = currentPt.y - touchOrigin.y;
cropView.frame = CGRectMake(dragOrigin.x + dx, dragOrigin.y + dy, cropView.frame.size.width, cropView.frame.size.height);
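The move computation can be sketched in plain C as a pure function of the two recorded origins and the current touch. The clamping to `bounds` is a hypothetical refinement, not something the article describes, that keeps the rectangle over the image; `Point`, `Rect`, and `moved_frame` are likewise hypothetical names.

```c
#include <assert.h>

typedef struct { double x, y; } Point;
typedef struct { double x, y, width, height; } Rect;

/* Returns the crop rectangle's new frame during a move gesture: the offset of
   the current touch from the touch origin is added to the drag origin (the
   frame's origin when the gesture began). The result is then clamped so the
   rectangle stays inside `bounds` (hypothetical addition). */
Rect moved_frame(Rect frame, Point drag_origin, Point touch_origin,
                 Point current, Rect bounds) {
    assert(frame.width <= bounds.width && frame.height <= bounds.height);
    Rect out = frame;
    out.x = drag_origin.x + (current.x - touch_origin.x);
    out.y = drag_origin.y + (current.y - touch_origin.y);
    if (out.x < bounds.x) out.x = bounds.x;
    if (out.y < bounds.y) out.y = bounds.y;
    if (out.x + out.width > bounds.x + bounds.width)
        out.x = bounds.x + bounds.width - out.width;
    if (out.y + out.height > bounds.y + bounds.height)
        out.y = bounds.y + bounds.height - out.height;
    return out;
}
```

Because each new frame is derived from the fixed starting points rather than from the previous frame, errors from individual touch events do not accumulate.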

Special Handling for Tap Gesture

Touches in a subview are also delivered to the gesture recognizers attached to its superviews. If the subview does not handle a gesture and its parent does, the parent’s handler executes. This can have adverse consequences if the operation is not valid for the subview.

In this example, a tap on the image outside the crop rectangle removes it. If the crop view does not handle the tap gesture, a tap inside the rectangle also reaches the parent’s handler, which removes the rectangle. To prevent the parent’s handler from executing, a tap gesture recognizer with no target or action is added to the crop view to swallow the tap:

swallowGesture = [[UITapGestureRecognizer alloc] init];

[cropView addGestureRecognizer:swallowGesture];

Points of Interest

During the research and development of this class, I spent considerable time resolving differences in sharpness between the cropped image and the original when cropping from a magnified rendering. Although the cropped image had the same pixel dimensions as the magnified crop region, it appeared pixelated on screen.

I can’t tell you how many hours I spent trying to understand and resolve this small difference in sharpness. When I finally convinced myself that I was extracting the image from the correct origin, height, and width, and that the UIImageView dimensions were identical, I began to look elsewhere. That’s when I decided to see how it appeared on an actual device rather than in the simulator.

Voilà! On the device, the cropped image was as sharp as the magnified original, without any changes to the algorithm. I concluded that the differences were an artifact of the simulator.

Since I had an explanation and had already devoted too much time to it, I did not try to understand what it was about the simulator that caused this behavior. If any reader has insights, please share them in the comments or contact me by email. As you can tell, it continues to gnaw at me, but not enough to research further.

Using the Code

The code for this example is attached to the article in a zip file. You can also find it on GitHub at https://github.com/miller-ms/MMSCropImageView.

To use the code in your own app, set the custom class of the image view in your storyboard to MMSCropImageView. If you do not use storyboards, create an instance in the view controller where you plan to show the image:

MMSCropImageView *cv = [[MMSCropImageView alloc] initWithFrame:CGRectMake(10, 10, 200, 100)];
[self.view addSubview:cv];

Import the header in the files where you will be interacting with the object.

#import "MMSCropImageView.h"

Add an event handler to the view controller of the view where the object is displayed. The event handler is likely connected to a button with which your user initiates the crop action.

- (IBAction)crop:(UIButton *)sender {
    UIImage* croppedImage = [self.imageView crop];
    self.croppedView.image = croppedImage;
}

This example simply displays the returned image in another UIImageView.

Summary

Hopefully, this article demystifies the complexities of cropping an image.

This approach resizes the bitmap to the view’s dimensions to normalize the coordinates and extract the crop region. When the view displays a camera photo, whose bitmap usually has far more pixels than the view, this likely produces a cropped image with fewer pixels than resizing the crop rectangle to the bitmap’s dimensions would. Depending on the app’s purpose, it may be more desirable to crop at the higher resolution. I leave that as an exercise for the reader.
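As a possible starting point for that exercise, here is a plain-C sketch that scales the crop rectangle from view points up to the image’s pixel dimensions instead of shrinking the bitmap. It assumes the image fills the view exactly, the same assumption that scaling the bitmap to the view’s size makes, and the result would still need the orientation transposition, with the displayed image size as the dimension. `Rect`, `Size`, and `scale_rect_to_image` are hypothetical names.

```c
#include <assert.h>

typedef struct { double x, y, width, height; } Rect;
typedef struct { double width, height; } Size;

/* Scales a crop rectangle from view points to image pixels. `image` is the
   image size as displayed (for left or right orientations, the bitmap's
   width and height swapped). Each axis is scaled independently, matching a
   mapping in which the image fills the view. */
Rect scale_rect_to_image(Rect crop, Size view, Size image) {
    assert(view.width > 0 && view.height > 0);
    double sx = image.width / view.width;
    double sy = image.height / view.height;
    Rect out = { crop.x * sx, crop.y * sy, crop.width * sx, crop.height * sy };
    return out;
}
```

For a 400 by 300 view showing a 4000 by 3000 image, a 100 by 50 point selection at (40, 30) becomes a 1000 by 500 pixel region at (400, 300), where resizing the bitmap to the view would extract only 100 by 50 pixels.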

The key factors the solution must account for are as follows:

- The crop rectangle is relative to the image view, not the image.

- The view has different dimensions than the image it renders.

- The image orientation may be different than the bitmap’s.

There are alternative ways to handle these factors. This solution addresses them as follows:

- It normalizes the dimensions by resizing the bitmap to the view's.

- It factors in orientation when scaling the bitmap, applying the crop rectangle, and returning the UIImage.

The class MMSCropImageView should be a good starting point for supporting your app’s cropping requirements. If you use and enhance it, please submit your changes to the projects on GitHub: https://github.com/miller-ms/MMSCropView for the Swift project and https://github.com/miller-ms/MMSCropImageView for the Objective-C project.

Good luck with your projects. I welcome your questions and comments.

History

- June 25, 2016 - Uploaded the Swift version of the solution and pointed to the Swift version of the project on GitHub.

- February 6, 2016 - Corrected article images that show orientation; clarified some of the writing; added updated project files; the new project files correct a bug in creating the bitmap context.

- January 17, 2016 - Updated variable highlighting and updated the summary

- January 13, 2016 - Corrected class name and link to github

- January 12, 2016 - Initial release