Introduction

In this article I would like to open a discussion about transcoding video and streaming it to a web browser without add-ons, straight into the native HTML video element, and to show my experiments on the topic. I would appreciate any advice and comments on the article. The idea grew out of an attempt to build something similar to Plex (https://plex.tv/).

The problem is to transcode a media file on demand into a format that the browser's video tag can play (a file for now, but the same approach could be applied to a stream from a webcam, for example) and, if possible, to do it on the fly without any temporary files. For modern browsers the problem became much easier once Google introduced the WebM container and most browsers, with the probable exception of IE, added support for it. So the task becomes transcoding the media file into this container and streaming it. It sounds simpler than it is, because that alone does not give the user the experience they have with pre-transcoded files: seeking within the file, seeing the remaining and total time, and smooth playback. To get that functionality you need a custom player that provides these basic features. Of course, it could be done with a Flash- or Silverlight-based player that implements RTSP or RTMP. But as I said above, that is not our solution, because the main requirement is to do everything without browser extensions. Probably the closest existing solution is Dynamic Adaptive Streaming over HTTP (MPEG-DASH). Client implementations can be found on GitHub, for example https://github.com/Dash-Industry-Forum/dash.js or https://github.com/google/shaka-player.

Such players can switch streams when necessary to ensure continuous playback or to improve the experience, by monitoring CPU utilization and/or buffer status. Usually the server side already has the content prepared for that and simply directs the client to the appropriate stream. I am going to create a simple JavaScript player based on the video tag with the native controls overridden, implement a few player features, design a few calls to the server, and design the transcoding and streaming of content on the server side. For now it is just transcoding and streaming without any analysis of playback, so all transcoding examples are based on a static configuration.

As I said, it is fairly straightforward to implement this for a modern environment, but what about old browsers and Internet Explorer, which do not support WebM? For Internet Explorer on Windows 8 and above it can be solved by using the MP4 container and starting a new fragment at each video keyframe. I will talk about old browsers later. Right now I want to say what to use for transcoding and how. Probably the best-known and most widely used tool, available on all major platforms, is FFmpeg, so in this article I will talk about FFmpeg and base the examples on it.

Contents

Now let's move from the overview to the concepts.

Here is a list of the formats and the browsers that support them.

| Browser | MP4 | WebM | Ogg |
| --- | --- | --- | --- |
| Internet Explorer | YES | NO | NO |
| Chrome | YES | YES | YES |
| Firefox | YES | YES | YES |
| Safari | YES | NO | NO |
| Opera | YES (from Opera 25) | YES | YES |

Source: http://www.w3schools.com/HTML/html5_video.asp

As we can see, the MP4 container is supported by all major browsers. There is almost nothing to add here, except that for playback to start immediately after the user selects the source, the file has to be prepared so that the moov atom is at the beginning of the file. The operation is fast and can be done right before the file is played, using ffmpeg's -movflags faststart option (provided, of course, that the container already holds suitable streams, i.e. H.264 video and AAC audio, so that no transcoding is needed).
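To make the "moov atom at the beginning" condition concrete, here is a minimal sketch of how one might check it. It is not part of the article's source code: the function names listTopLevelBoxes and isFastStart are hypothetical, and the sketch only assumes the standard MP4 box layout (a 4-byte big-endian size followed by a 4-character type).

```javascript
// Walks the top-level boxes ("atoms") of an MP4 file held in a Uint8Array
// and returns their four-character types in file order.
// Hypothetical helper, not taken from the article's download.
function listTopLevelBoxes(bytes) {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const types = [];
  let pos = 0;
  while (pos + 8 <= bytes.byteLength) {
    let size = view.getUint32(pos); // 32-bit big-endian box size
    const type = String.fromCharCode(
      bytes[pos + 4], bytes[pos + 5], bytes[pos + 6], bytes[pos + 7]);
    if (size === 1) {
      // A size of 1 means a 64-bit "largesize" follows the type field.
      if (pos + 16 > bytes.byteLength) break;
      size = Number(view.getBigUint64(pos + 8));
    } else if (size === 0) {
      // A size of 0 means the box extends to the end of the data.
      size = bytes.byteLength - pos;
    }
    types.push(type);
    if (size < 8) break; // malformed box: stop instead of looping forever
    pos += size;
  }
  return types;
}

// True when a moov box is found and it precedes any mdat box.
function isFastStart(bytes) {
  const types = listTopLevelBoxes(bytes);
  const moov = types.indexOf("moov");
  const mdat = types.indexOf("mdat");
  return moov !== -1 && (mdat === -1 || moov < mdat);
}
```

Because the walk stops as soon as a box points past the end of the data, the check can also be run on just the first part of a file: if mdat shows up before moov has been seen, the function returns false.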

A more interesting case is when the file has to be transcoded before it can be played.

First I will talk about the modern browsers other than IE, which all support the WebM container. Chrome, Firefox and Opera can play a stream transcoded to WebM, and so far this is what I have found useful for working with these browsers. Here is a basic configuration for transcoding to WebM: -vcodec libvpx -acodec libopus -deadline realtime -speed 4 -tile-columns 6 -frame-parallel 1 -f webm. The meaning of the options can be found at http://www.ffmpeg.org/. Chrome can also play a stream transcoded with a Matroska profile: -vcodec libx264 -preset ultrafast -acodec aac -strict -2 -b:a 96k -threads 0 -f matroska. The WebM container is itself based on a profile of Matroska, but according to my tests the Matroska variant is more processor-efficient.

For Internet Explorer on Windows 8 and above, the media should be transcoded into the MP4 container with a new fragment starting at each video keyframe. Here is how to do that: -vcodec libx264 -preset ultrafast -acodec aac -strict -2 -b:a 96k -threads 0 -f mp4 -movflags frag_keyframe.
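The three option sets above can be collected into one small lookup. The following is only a sketch: the names FFMPEG_PROFILES, profileForBrowser and buildFfmpegArgs, as well as the browser-name strings, are assumptions made for illustration; only the ffmpeg options themselves come from the text.

```javascript
// ffmpeg option sets quoted in the text, keyed by target container.
const FFMPEG_PROFILES = {
  webm: ["-vcodec", "libvpx", "-acodec", "libopus", "-deadline", "realtime",
         "-speed", "4", "-tile-columns", "6", "-frame-parallel", "1",
         "-f", "webm"],
  matroska: ["-vcodec", "libx264", "-preset", "ultrafast", "-acodec", "aac",
             "-strict", "-2", "-b:a", "96k", "-threads", "0",
             "-f", "matroska"],
  fragmentedMp4: ["-vcodec", "libx264", "-preset", "ultrafast", "-acodec", "aac",
                  "-strict", "-2", "-b:a", "96k", "-threads", "0",
                  "-f", "mp4", "-movflags", "frag_keyframe"],
};

// Hypothetical mapping from a browser family name to a profile key.
function profileForBrowser(browserName) {
  switch (browserName.toLowerCase()) {
    case "chrome":
    case "firefox":
    case "opera":
      return "webm";
    case "ie":
      return "fragmentedMp4"; // IE on Windows 8 and above
    default:
      return null;
  }
}

// Builds the full argument list: input, profile options, output target.
function buildFfmpegArgs(inputPath, browserName, outputTarget) {
  const profile = profileForBrowser(browserName);
  if (profile === null) {
    throw new Error("No streaming profile for " + browserName);
  }
  return ["-i", inputPath, ...FFMPEG_PROFILES[profile], outputTarget];
}
```

Keeping the arguments as an array rather than one string avoids quoting problems when the input path contains spaces.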

I do not think I am reinventing the wheel; everything I have said is a well-known technique, I have just gathered it in one article.

Let's talk about old browsers that cannot play such formats, for example Internet Explorer on Windows 7 and below.

The basic problem is that, for IE on Windows 7 just to start playing a file, the file has to be in MP4 format with the moov atom at the beginning. If the atom is at the end of the file, the whole file has to be downloaded to the client before playback starts. (The atom contains information about the video file: time scale, duration, display characteristics and so on.) This is not a big problem for small files, but if you want to play a DVD-sized video in the browser (why in the browser, you can work out for yourselves :-) ) it becomes uncomfortable. The video file has to be transcoded to MP4, ideally with the moov atom at the beginning, the transcoding itself takes a while, and it is tedious to wait about half an hour to start watching an interesting movie. So a solution can be to transcode the file into small chunks and play them consecutively, similar to HLS or MPEG-DASH. How to play such chunks smoothly, how to seek within the video and so on, I will discuss in the next chapter.

In this chapter I will describe my vision of the concepts in detail and provide code samples.

The source code is in the downloads for the article. The solution was developed in Visual Studio 2008, but it can easily be converted to the latest version. The concepts were tested on Windows 7 and 8 with IE 10-11, Chrome v48, Firefox v44 and Opera v35, and only on .MOV, .mp4 and .mkv files.

First I will start with customizing the HTML5 player to run on modern browsers.

The players for modern and old browsers differ a little, but they share common concepts and are designed in an MVC manner.

Since this is only a concept, it contains just the basic functionality needed to show how it works; it does not have everything a real player needs, and it still needs a lot of work. So the public interfaces are pretty simple and have only a few public methods.

Interface of PlayerCntr class (\sources\JsSource\js\modern\playercntr.js):

The class provides basic functionality to initialize the media source for the player and methods to control playback. It also encapsulates the objects that represent the player UI and manage the playback data.

var PlayerCntr = (function()
{
    return function(renderTo)
    {
        var _data = null;   // playback data object
        var _view = null;   // player UI object

        this.start = function()
        {
        };

        this.stop = function()
        {
        };

        this.initSource = function(source)
        {
        };

        this.volume = function(value)
        {
        };
    };
})();

- start/stop - starts/stops playing of media.

- initSource - initializes media source for player.

- volume - adjusts the volume.

Interface of PlayerView class (\sources\JsSource\js\modern\playerview.js):

The class represents UI of player.

var PlayerView = (function()
{
    return function()
    {
        this.control = function()
        {
        };

        this.updateDuration = function(duration_sec)
        {
        };

        this.updateTimeLine = function(time_ratio)
        {
        };

        this.updateVolumeBar = function(volume_ratio)
        {
        };

        this.isPlayed = function()
        {
        };

        this.render = function(element)
        {
        };
    };
})();

- render - renders this Component into the passed HTML element and initializes handlers for the events.

- isPlayed - returns state of playing.

- updateVolumeBar - updates the runner position of the volume control.

- updateTimeLine - updates progress of playing.

- updateDuration - updates overall time of playing.

- control - returns reference of current played video control.

Interface of PlayerData class (\sources\JsSource\js\modern\playerdata.js):

The class encapsulates the data about the currently playing media and the methods to manage that data.

var PlayerData = (function()
{
    return function()
    {
        this.getVideoInfo = function(video_src, callback, scope)
        {
        };

        this.getSeekOffset = function()
        {
        };

        this.setSeekOffset = function(offset)
        {
        };

        this.getSource = function()
        {
        };

        this.reset = function()
        {
        };

        this.parseInfo = function(info, path)
        {
        };

        this.durationToReadableString = function(duration_sec)
        {
        };

        this.getVideoDuration = function()
        {
        };
    };
})();

- getVideoInfo - returns info in json format of current playing media.

- getSeekOffset - returns value in seconds of last seeking operation.

- setSeekOffset - updates value in seconds of last seeking operation.

- getSource - returns the relative path to the media source.

- reset - resets data.

- parseInfo - parses incoming data and returns the value of the specified node in the JSON.

- durationToReadableString - returns human readable string of time.

- getVideoDuration - returns media duration in seconds.
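As an illustration of the kind of helper listed above, here is one possible body for durationToReadableString. The actual implementation in the download may differ; the hh:mm:ss output format is an assumption.

```javascript
// Formats a duration in seconds as "hh:mm:ss".
// A sketch only; the article's real helper may use another format.
function durationToReadableString(durationSec) {
  // Treat missing or invalid input as zero and drop fractional seconds.
  const total = Number.isFinite(durationSec)
    ? Math.max(0, Math.floor(durationSec))
    : 0;
  const hours = Math.floor(total / 3600);
  const minutes = Math.floor((total % 3600) / 60);
  const seconds = total % 60;
  const pad = (n) => String(n).padStart(2, "0");
  return pad(hours) + ":" + pad(minutes) + ":" + pad(seconds);
}
```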

Interface of Player class (\sources\JsSource\js\player.js):

The Player class is a class factory. It decides which kind of player has to be downloaded and created, based on the user-agent string and the file format. Of course, a more correct way is to decide according to the capabilities of the video tag; the information for that can be requested from the server, as I do for the duration below. So the class decides at runtime and downloads the appropriate script for the media source and browser.

var Player = (function()
{
    return function(parentEl)
    {
        this.initSource = function(source)
        {
        };
    };
})();

The class has a public constructor that takes the HTML element in which to render the player, and one method that decides which player to use.
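The capability-based decision mentioned above could start from the video element's canPlayType method, which returns "", "maybe" or "probably" for a given MIME type. The sketch below is an assumption about how that might look and is not what the attached source does (it uses the user-agent string); the function names and the codec strings are illustrative. Note that canPlayType does not by itself reveal whether a fragmented or endless stream will play smoothly, so some browser-specific knowledge may still be needed.

```javascript
// Returns true when the video element reports any level of support.
function canPlay(video, mimeType) {
  return video.canPlayType(mimeType) !== "";
}

// `video` is an HTMLVideoElement, e.g. document.createElement("video"),
// or any object that exposes a compatible canPlayType method.
// The codec parameters below are illustrative assumptions.
function detectFormats(video) {
  return {
    webm: canPlay(video, 'video/webm; codecs="vp8, vorbis"'),
    mp4: canPlay(video, 'video/mp4; codecs="avc1.42E01E, mp4a.40.2"'),
  };
}
```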

Player loader:

var loadPlayer = function(type, cb, scope)
{
    // Base URL of the scripts for the requested player type
    var base = document.location.protocol + '//' + document.location.host + '/js/' + type;
    var scriptLoader = new ScriptLoader();

    // Load view, data and controller in order, then invoke the callback
    scriptLoader.load(base + '/playerview.js')
                .load(base + '/playerdata.js')
                .load(base + '/playercntr.js')
                .then(cb, scope);
};

And the player factory is:

if (getFileExtension(source).toLowerCase() === 'mp4')
{
    // Plain mp4 files are played by the modern player in any browser
    loadPlayer('modern', function()
    {
        var player = new PlayerCntr(_parentEl);
        player.initSource(source);
    }, this);
}
else
{
    var parser = new UAParser(navigator.userAgent);
    if (isModern(parser))
    {
        loadPlayer('modern', function()
        {
            var player = new PlayerCntr(_parentEl);
            player.initSource(source);
        }, this);
    }
    else
    {
        // Old browsers get the chunk-based player
        loadPlayer('fallback', function()
        {
            var player = new PlayerCntr(_parentEl);
            player.initSource(source);
        }, this);
    }
}

To parse the user-agent string I use the parser from the ua-parser project. It does a pretty accurate job.

The custom player wraps all of its methods around the native HTML video control. To give the user the experience of playing a normal file rather than an endless stream, it needs information about the media source. (The stream is endless in the sense that the player knows only the duration in seconds and not the resulting size, which is what the native control needs in order to seek by byte range.) So the first thing the client does is request information about the file that is going to be played. The initSource method of PlayerCntr sends a request to the server to get this information. For now only the duration, video codecs and format are used, but in a production version more information could be useful, such as frame rate, resolution and audio/video codecs (how the streams inside the container are encoded). Based on the duration, the player builds the timeline bar so that the user can seek within the file using timestamps instead of byte ranges. As soon as the player is rendered and initSource is called, the source of the video tag is updated with the time offset in seconds appended to the URL. Initially it is 0, but if the user moves the runner on the timeline, the source of the video tag is updated with an offset proportional to the total duration of the media. So calls to the server look like:

http://localhost:8081/movies/movie7.mov?offset=0 - from the beginning

http://localhost:8081/movies/movie7.mov?offset=2167.990451497396 - from specific position on timeline
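The mapping from a timeline position to such a URL can be sketched as follows. The function name buildSeekUrl is hypothetical; timeRatio is assumed to be the runner position between 0 and 1, as suggested by the updateTimeLine(time_ratio) method of the view.

```javascript
// Builds the video source URL for a position on the timeline.
// timeRatio: runner position in [0, 1]; durationSec: total media duration.
function buildSeekUrl(source, timeRatio, durationSec) {
  // Clamp the ratio so a stray drag outside the bar cannot overshoot.
  const ratio = Math.min(1, Math.max(0, timeRatio));
  const offset = ratio * durationSec;
  return source + "?offset=" + offset;
}

// In the player this would be assigned to the video tag, for example:
//   video.src = buildSeekUrl("/movies/movie7.mov", 0.6, 3600);
```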

Accordingly, on the server side, when a new request arrives for a resource that is already being played, the current transcoding and streaming is stopped and a new one is started with the time offset (I will discuss the server side later). The details of the implementation can be found in the provided source code.

Now about the player for outdated browsers.

The interfaces of both types of player are similar. The main difference is that, to play the transcoded chunks of media smoothly, I use two video tags: one for the chunk currently being played and another as a buffer. As soon as the current chunk reaches its end, the current player and the buffer swap roles (the current player becomes the buffer and vice versa), and the buffer sends a request to the server for a new chunk of video at a specific time frame. Each time frame is 2 minutes. The time frame is hard-coded and not related to the content of the video. A better way would probably be to analyze the video and end each chunk on a scene change; that would give smoother transitions between chunks, but it would increase the transcoding time of each chunk. So now calls to the server look like:

http://localhost:8081/movies/movie7.mov?offset=0&duration=120 - from the beginning

http://localhost:8081/movies/movie7.mov?offset=2760&duration=120 - from specific position on timeline
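A request URL of this shape can be derived from a position on the timeline roughly as follows. This is a sketch under assumptions: the names chunkStart and buildChunkUrl are hypothetical, the position is assumed to be aligned down to a multiple of the two-minute time frame, and duration is taken to be the chunk length as in the two URLs above.

```javascript
const CHUNK_SECONDS = 120; // the hard-coded two-minute time frame

// Aligns an arbitrary position to the start of the chunk containing it.
function chunkStart(positionSec) {
  return Math.floor(positionSec / CHUNK_SECONDS) * CHUNK_SECONDS;
}

// Builds the chunk request; the last chunk may be shorter than the frame.
function buildChunkUrl(source, positionSec, totalSec) {
  const offset = chunkStart(positionSec);
  const duration = Math.min(CHUNK_SECONDS, Math.max(0, totalSec - offset));
  return source + "?offset=" + offset + "&duration=" + duration;
}
```

For example, a position of 2800 seconds in a two-hour file falls into the chunk that starts at 2760 seconds, which matches the second URL above.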

The HTML markup for the player looks basically like this:

<div class="video-player">
    <!-- ... -->
    <video width="100%" height="100%" class="visible" src="/movies/movie7.mov?offset=120&duration=240"></video> <!-- currently playing player -->
    <video width="100%" height="100%" class="hidden" src="/movies/movie7.mov?offset=240&duration=360"></video> <!-- buffer -->
    <div class="video-controls">
        <!-- ... -->
    </div>
</div>

<!-- after switching players -->
<div class="video-player">
    <!-- ... -->
    <video width="100%" height="100%" class="hidden" src="/movies/movie7.mov?offset=360&duration=480"></video> <!-- buffer -->
    <video width="100%" height="100%" class="visible" src="/movies/movie7.mov?offset=240&duration=360"></video> <!-- currently playing player -->
    <div class="video-controls">
        <!-- ... -->
    </div>
</div>

Interface of PlayerCntr class (\sources\JsSource\js\outdated\playercntr.js):

The interface has not changed; the differences are hidden in the private methods and event handlers. The video tags are switched on the onMediaEnd event: the currently played video tag becomes hidden and the buffer becomes visible. Then, as soon as the ratio of the current playback time to the total time of the chunk reaches 10%, the player starts downloading data for the buffer. The operation repeats until the current playback time reaches the overall duration of the media. The 10% ratio should not really be a constant; it should be calculated from the available bandwidth. The bitrate of the transcoded media should probably be variable as well. All of these values affect how smoothly the video plays. Again, this is just a concept.
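The switching logic described above can be sketched like this. It is a simplified illustration, not the code from the download: the ChunkSwitcher class, its method signatures and the use of plain objects with src and className fields are assumptions; only the 10% threshold and the visible/hidden swap come from the text.

```javascript
const PREFETCH_RATIO = 0.1; // start filling the buffer at 10% of the chunk

// True when the buffer should be pointed at the next chunk.
function readyToUpdateBuffer(currentTime, chunkDuration, bufferRequested) {
  return !bufferRequested && chunkDuration > 0 &&
    currentTime / chunkDuration >= PREFETCH_RATIO;
}

class ChunkSwitcher {
  // `current` and `buffer` are the two video tags (or compatible objects).
  constructor(current, buffer) {
    this.current = current;
    this.buffer = buffer;
    this.bufferRequested = false;
  }

  // Call from the time-update handler of the playing video tag.
  onTimeUpdate(currentTime, chunkDuration, nextChunkUrl) {
    if (readyToUpdateBuffer(currentTime, chunkDuration, this.bufferRequested)) {
      this.buffer.src = nextChunkUrl; // starts downloading the next chunk
      this.bufferRequested = true;
    }
  }

  // Call when the playing chunk ends: swap the roles of the two tags.
  onMediaEnd() {
    [this.current, this.buffer] = [this.buffer, this.current];
    this.current.className = "visible";
    this.buffer.className = "hidden";
    this.bufferRequested = false;
    return this.current; // the caller would invoke play() on it
  }
}
```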

Interface of PlayerView class (\sources\JsSource\js\outdated\playerview.js):

Two new methods were added to the interface:

- buffer - returns reference of buffer video control.

- switchPlayed - switches current played video and buffer.

Interface of PlayerData class (\sources\JsSource\js\outdated\playerdata.js):

Five new methods were added to the interface:

- calculateOffset - returns offset in seconds according to the specific value selected by user on timeline.

- getChankDuration - returns duration in seconds for next chunk based on overall time of played chunks.

- readyToUpdateBuffer - checks if it is time to update buffer according to current played time.

- getTimeChanksPlayed - returns overall time of played chunks.

- setTimeChanksPlayed - sets overall time of played chunks.

The server-side implementation is divided into modules. Each module is responsible for a specific type of stream, and the modules are loaded at runtime when the server starts. So it is possible not to load a module, in which case the corresponding streams are disabled. Currently the server implements three types of streaming: streaming of MP4 files; transcoding and streaming to WebM, Matroska or MP4 with a new fragment at each video keyframe (streaming to modern browsers); and streaming of transcoded chunks.

The server itself also implements two handlers: one lists the working directory, and the second handles the client's request for information about the file that is going to be played.

Every handler has to implement IHandler interface:

public interface IHandler : IDisposable
{
    string Name { get; }
    void SetEnvironment(IHandlerEnvironment env);
    bool IsSupported(IHttpContext context);
    void Process(IHttpContext context);
}

- Name - returns unique name of handler.

- SetEnvironment(IHandlerEnvironment env) - sets running environment of the server.

- IsSupported(IHttpContext context) - returns whether the current HTTP request is supported.

- Process(IHttpContext context) - handles the request.

The IHandlerEnvironment interface encapsulates only the server's working directory.

public interface IHandlerEnvironment : IDisposable
{
    string Directory { get; }
}

The most important interface is IHttpContext. An object implementing it encapsulates all the data of an incoming request and is passed to all handlers; it is created when a new HTTP request arrives.

public interface IHttpContext
{
    event EventHandler<HandlerEventArgs> Closed;

    Uri URL { get; }
    int GetUniqueKeyPerRequest { get; }
    int GetUniqueKeyPerPath { get; }

    Stream InStream { get; }
    Stream OutStream { get; set; }

    NameValueCollection RequestHeaders { get; }
    CookieCollection RequestCookies { get; }
    ClientInfo BrowserCapabilities { get; }

    WebHeaderCollection ResponseHeaders { get; }
    CookieCollection ResponseCookies { get; }

    ISession Session { get; }
    Boundary Range { get; }
    int StatusCode { get; set; }

    void SetHandlerContext(object context);
    void OnProcessed();
    void OnLogging(EventLogEntryType logType, string message);
}

- Closed - event of closing incoming request, subscriber has to release all data associated with the request.

- URL - returns the original URL of the incoming request.

- GetUniqueKeyPerRequest - convenience property that returns a unique hash based on the session id and the original URL.

- GetUniqueKeyPerPath - convenience property that returns a unique hash based on the session id and the request path.

- InStream - returns incoming stream.

- OutStream - sets/returns outgoing stream.

- RequestHeaders - returns original request header.

- RequestCookies - returns original request cookies.

- BrowserCapabilities - returns information about client based on incoming useragent string.

- ResponseHeaders - returns response header.

- ResponseCookies - returns response cookies.

- Session - returns session associated with the request.

- Range - returns Boundary of outgoing stream in case of file or chunks and maximum of integer value in case of endless stream.

- StatusCode - sets/returns http code of response.

- SetHandlerContext(object context) - sets handler context, which can be gotten on Closed event.

- OnProcessed() - handler has to call the method as soon as request was handled.

- OnLogging(EventLogEntryType logType, string message) - handler can call the method to print out debugging info.

The interface hides the original HTTP context that arrives with the request and exposes only the methods needed to process the request. The server implementation itself is not interesting enough to discuss in detail. It is based on asynchronous (non-blocking) calls for receiving incoming client requests and for reading from and writing to the incoming and outgoing streams. All file operations are asynchronous as well.

Details of implementation are in:

Server class (\sources\HttpServer\httpServer\Server.cs).

The Media type handles the request for information about the media file that is going to be played. To get the video info it uses ffprobe (ffprobe is part of the FFmpeg project; it gathers information from multimedia streams and prints it in human- and machine-readable fashion).

Media Class (\sources\HttpService\Handlers\Media.cs).

Here is the implementation of getting the info:

private void StartFFProbe(IHttpContext context)
{
    // Run ffprobe on a thread-pool thread so the caller is not blocked.
    ThreadPool.QueueUserWorkItem(delegate(object ctx)
    {
        IHttpContext httpContext = (IHttpContext)ctx;
        try
        {
            NameValueCollection urlArgs = HttpUtility.ParseQueryString(httpContext.URL.Query);
            string instance = HttpUtility.UrlDecode(Helpers.GetQueryParameter(urlArgs, "item"));
            string path = string.Format(@"{0}\{1}", _env.Directory, instance.Replace("/", @"\")).Replace(@"\\", @"\");

            // The path is quoted so that file names with spaces are passed as one argument.
            ProcessStartInfo pr = new ProcessStartInfo(
                Path.Combine(new FileInfo(Assembly.GetEntryAssembly().Location).Directory.FullName, _ffprobe),
                string.Format(@"-i ""{0}"" -show_format -print_format json", path));
            pr.UseShellExecute = false;
            pr.RedirectStandardOutput = true;

            Process proc = new Process();
            proc.StartInfo = pr;
            proc.Start();

            // Read the whole output first, then wait for the process to exit.
            string output = "{ \"seeking_type\": \"" + Helpers.GetSeekingType((new FileInfo(path)).Extension) + "\", \"media_info\": " + proc.StandardOutput.ReadToEnd() + " }";
            proc.WaitForExit();

            SetHeader(httpContext, 200, Helpers.GetMimeType(""));
            httpContext.OutStream = new MemoryStream(System.Text.Encoding.UTF8.GetBytes(output));
            SetRange(httpContext);
        }
        catch (Exception e)
        {
            SetHeader(httpContext, 500, Helpers.GetMimeType(""));
            if (httpContext != null)
            {
                httpContext.OutStream = new MemoryStream(System.Text.Encoding.UTF8.GetBytes(""));
            }
            httpContext.OnLogging(EventLogEntryType.Error, string.Format("Media.StartFFProbe, Exception : {0}", e.Message));
        }
        finally
        {
            // Always signal the server that the request has been handled.
            httpContext.OnProcessed();
        }
    }, context);
}

The result of the process is JSON. In addition to the information about the video, the output indicates how the client can seek in the stream. The endless stream and the chunked stream share one type of seeking; the file stream has a different one and can be seeked on the client side.
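On the client, a response of this shape could be read as sketched below. The seeking_type and media_info keys come from the server code above, and ffprobe's -show_format JSON output places the duration (as a string) under format.duration. The function name parseMediaInfo and the returned field names are hypothetical, and the actual values of seeking_type are not shown in the article, so none are assumed here.

```javascript
// Extracts the fields the player needs from the server's JSON response.
function parseMediaInfo(jsonText) {
  const info = JSON.parse(jsonText);
  const format = (info.media_info && info.media_info.format) || {};
  // ffprobe reports the duration as a string of seconds.
  const duration = parseFloat(format.duration);
  return {
    seekingType: info.seeking_type,
    durationSec: Number.isFinite(duration) ? duration : null,
    formatName: format.format_name || null,
  };
}
```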

The requests to play mp4 files are handled by Mp4StreamHandler.

Mp4StreamHandler Class (\sources\HandlerExample1\Mp4StreamHandler.cs).

Here is the implementation of mp4 file streaming:

public void Process(IHttpContext httpContext)
{
    string urlpath = httpContext.URL.LocalPath.Replace("/", @"\");
    string ext = Path.GetExtension(urlpath).ToLower();
    try
    {
        string path = string.Format(@"{0}\{1}", _env.Directory, urlpath).Replace(@"\\", @"\");
        switch (ext)
        {
            case ".mp4":
                if (File.Exists(path))
                {
                    // Answer with 206 (Partial Content) and open the file for asynchronous reading.
                    SetHeader(httpContext, 206, Helpers.GetMimeType(ext));
                    httpContext.OutStream = new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.Read, BufferSize.Value, true);
                    SetRange(httpContext);
                    // Position the stream at the first requested byte.
                    httpContext.OutStream.Seek(httpContext.Range.Left, SeekOrigin.Begin);
                    SetHeaderRange(httpContext);
                    httpContext.OnProcessed();
                }
                return;
            default:
                throw new NotSupportedException();
        }
    }
    catch (NotSupportedException e)
    {
        // Unsupported extension: reply with 404 and an empty body.
        SetHeader(httpContext, 404, Helpers.GetMimeType(ext));
        httpContext.OutStream = new MemoryStream(System.Text.Encoding.UTF8.GetBytes(""));
        httpContext.OnLogging(EventLogEntryType.Error, e.Message);
        httpContext.OnProcessed();
    }
    catch (Exception e)
    {
        // Any other failure: reply with 500 and an empty body.
        SetHeader(httpContext, 500, Helpers.GetMimeType(ext));
        httpContext.OutStream = new MemoryStream(System.Text.Encoding.UTF8.GetBytes(""));
        httpContext.OnLogging(EventLogEntryType.Error, e.Message);
        httpContext.OnProcessed();
    }
}
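The handler above answers with status 206 and relies on SetRange and SetHeaderRange, whose bodies are not shown in the article. As an illustration of what such helpers typically have to do, here is a sketch of parsing a single-range Range request header and producing the matching Content-Range value. The function names are hypothetical and the real helpers may behave differently.

```javascript
// Parses "bytes=100-199", "bytes=100-" or "bytes=-50" against a file size.
// Returns { start, end } (inclusive) or null if the range is not usable.
function parseRange(header, fileSize) {
  const m = /^bytes=(\d*)-(\d*)$/.exec(header || "");
  if (!m || (m[1] === "" && m[2] === "")) return null;
  let start;
  let end;
  if (m[1] === "") {
    // Suffix form: the last N bytes of the file.
    const n = parseInt(m[2], 10);
    if (n === 0) return null;
    start = Math.max(0, fileSize - n);
    end = fileSize - 1;
  } else {
    start = parseInt(m[1], 10);
    end = m[2] === "" ? fileSize - 1
                      : Math.min(parseInt(m[2], 10), fileSize - 1);
  }
  if (start > end || start >= fileSize) return null;
  return { start, end };
}

// Value of the Content-Range response header for a 206 reply.
function contentRange(range, fileSize) {
  return "bytes " + range.start + "-" + range.end + "/" + fileSize;
}
```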

These handler implementations do not keep a reference to the HTTP context; all responsibility for managing the lifetime of the outgoing stream and the HTTP context is taken by the server that called the handler. So these objects are released when the response is closed.

But in some cases the responsibility for the lifetime of the outgoing stream and the HTTP context has to be delegated to the handler. In that case the handler has to subscribe to the Closed event and, in its event handler, set the state indicating that the handler is responsible for the outgoing stream and the HTTP context.

A more complicated case is transcoding and streaming on the fly to the modern and outdated players. I will start by describing my implementation of transcoding and streaming in WebM or Matroska format.

The main difference with on-the-fly transcoding and streaming is that the stream has no length in bytes, so the player can only use the duration in seconds. That is why seeking in the stream happens on the server side rather than on the client side as with file streams. The general workflow is as follows: when a new request arrives to play a file that the browser does not support, it goes to WbemStreamHandler (if it is a modern browser, of course). WbemStreamHandler starts an HTTP server if one is not started yet, then starts ffmpeg and points its output at that server, so the HTTP server is an I/O server that just relays the stream from ffmpeg to the client. If the client wants to seek in the stream, the current stream is stopped and a new one is started at the new position.

WbemStreamHandler Class (\sources\HandlerExample2\WbemStreamHandler.cs).

Here is the implementation of starting ffmpeg:

The function blocks until the HTTP server has started, so that the server's address is known.

private void StartFFMpeg(IHttpContext context)
{
    ThreadPool.QueueUserWorkItem(delegate(object ctx)
    {
        // Wait until the relay HTTP server has a port assigned.
        _waitPort.WaitOne();
        try
        {
            IHttpContext httpContext = (IHttpContext)ctx;

            // Stop the transcoding already running for this request, if any.
            RemoveFFMpegProcess(httpContext);

            string urlpath = httpContext.URL.LocalPath.Replace("/", @"\");
            string path = string.Format(@"{0}\{1}", _env.Directory, urlpath).Replace(@"\\", @"\");
            NameValueCollection urlArgs = HttpUtility.ParseQueryString(httpContext.URL.Query);

            // Convert the offset in seconds into the time format passed to ffmpeg.
            string offset = Helpers.GetQueryParameter(urlArgs, "offset");
            if (string.IsNullOrEmpty(offset))
            {
                offset = "00:00:00";
            }
            else
            {
                offset = Helpers.GetFormatedOffset(Convert.ToDouble(offset));
            }

            // The input path is quoted so that file names with spaces are passed as one argument.
            ProcessStartInfo pr = new ProcessStartInfo(
                Path.Combine(new FileInfo(Assembly.GetEntryAssembly().Location).Directory.FullName, _ffmpeg),
                string.Format(@"-ss {1} -i ""{0}"" {2} http://localhost:{3}/{4}", path, offset, GetBrowserSupportedFFMpegFormat(httpContext.BrowserCapabilities), _httpPort.ToString(), AddContext(httpContext)));
            pr.UseShellExecute = false;
            pr.RedirectStandardOutput = true;

            Process proc = new Process();
            proc.StartInfo = pr;
            proc.Start();

            // Remember the process so that it can be stopped on seek or close.
            AddFFMpegProcess(httpContext, proc);
            httpContext.OnLogging(EventLogEntryType.SuccessAudit, "*************** WbemStreamHandler.StartFFMpeg ***************");
        }
        catch (Exception e)
        {
            context.OnLogging(EventLogEntryType.Error, string.Format("WbemStreamHandler.StartFFMpeg, Exception: {0}", e.Message));
        }
    }, context);
}

Here I faced the problem of managing the bandwidth of the output stream and the transcoding performance. Transcoding is a very heavy operation; ffmpeg usually takes more than half of the processor time. Transcoding some formats can take longer than playing them. In such cases the server has to adjust the transcoding quality dynamically in order to reduce the transcoding time (I am still working on how to do this correctly).

To manage the bandwidth of the output stream the server has the SpeedTester class, which accumulates information about how many bytes were handled during a specific time and provides these statistics to the server. The statistics are accumulated in the ClientStatistics class, per request and session id; the overall statistics for all clients are kept in the OverallStatistics class. Based on the statistics, the server decides which stream should be postponed and for how long. The ClientStatistics class keeps the bitrate of the transcoded data and the median bitrate of two operations, output streaming and transcoding. From these two bitrates we can calculate a ratio and a waiting time, i.e. how long the stream can wait.

The next step should be managing the video quality according to the playback experience and the server workload, in order to get smooth playback on the client side. This step requires more information on the server side as well as playback feedback from the client.

Source code of the statistics: SpeedTester class (\sources\HttpService\httpServer\Utils\SpeedTester.cs), OverallStatistics class (\sources\HttpService\httpServer\Utils\OverallStatistics.cs), ClientStatistics class (\sources\HttpService\httpServer\Utils\ClientStatistics.cs), and three classes that accumulate and calculate the median bitrate: BinaryHeapMax class (\sources\HttpService\httpServer\Utils\BinaryHeapMax.cs), BinaryHeapMin class (\sources\HttpService\httpServer\Utils\BinaryHeapMin.cs) and Median class (\sources\HttpService\httpServer\Utils\Median.cs).
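The class names BinaryHeapMax, BinaryHeapMin and Median suggest the classic two-heap running median, although the article does not show their code. The sketch below illustrates that general technique under this assumption; the Heap and RunningMedian classes are hypothetical and are not a port of the C# sources.

```javascript
// Minimal binary heap; `higher(a, b)` is true when a must sit above b.
class Heap {
  constructor(higher) {
    this.items = [];
    this.higher = higher;
  }
  get size() { return this.items.length; }
  peek() { return this.items[0]; }
  push(value) {
    const a = this.items;
    a.push(value);
    let i = a.length - 1;
    while (i > 0) {                       // sift the new value up
      const parent = (i - 1) >> 1;
      if (!this.higher(a[i], a[parent])) break;
      [a[i], a[parent]] = [a[parent], a[i]];
      i = parent;
    }
  }
  pop() {
    const a = this.items;
    const top = a[0];
    const last = a.pop();
    if (a.length > 0) {
      a[0] = last;
      let i = 0;
      for (;;) {                          // sift the moved value down
        const l = 2 * i + 1;
        const r = l + 1;
        let best = i;
        if (l < a.length && this.higher(a[l], a[best])) best = l;
        if (r < a.length && this.higher(a[r], a[best])) best = r;
        if (best === i) break;
        [a[i], a[best]] = [a[best], a[i]];
        i = best;
      }
    }
    return top;
  }
}

// Keeps the lower half in a max-heap and the upper half in a min-heap.
class RunningMedian {
  constructor() {
    this.low = new Heap((a, b) => a > b);
    this.high = new Heap((a, b) => a < b);
  }
  add(value) {
    if (this.low.size === 0 || value <= this.low.peek()) this.low.push(value);
    else this.high.push(value);
    // Rebalance: low holds as many values as high, or exactly one more.
    if (this.low.size > this.high.size + 1) this.high.push(this.low.pop());
    else if (this.high.size > this.low.size) this.low.push(this.high.pop());
  }
  median() {
    if (this.low.size === 0) return null;
    if (this.low.size > this.high.size) return this.low.peek();
    return (this.low.peek() + this.high.peek()) / 2;
  }
}
```

With this structure each new bitrate sample costs O(log n) to add, and the current median is available in constant time.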

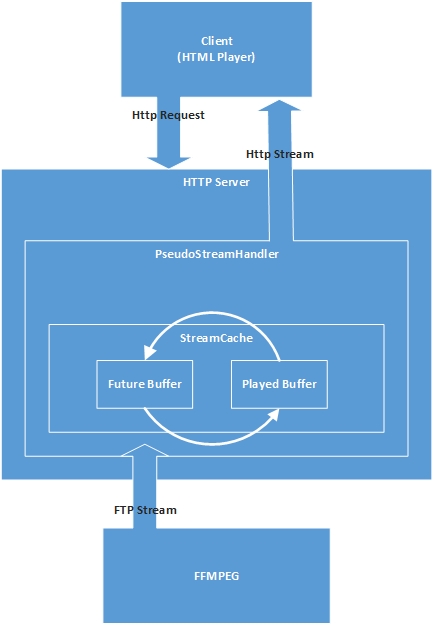

Now let's discuss the server-side part of transcoding and streaming by chunks. I have implemented two different versions of the handler. One is file-based: the chunks are transcoded by ffmpeg and stored in the file system, and the handler then just streams them as MP4 files with the moov atom at the beginning of the file so that playback starts immediately. It is implemented in PseudoStreamHandler2. The other is memory-based: ffmpeg transcodes a specific chunk and stores it on an FTP server. In this case ffmpeg's output target is the FTP server implemented in PseudoStreamHandler, and the handler keeps every transcoded chunk in its runtime memory.

The file-based handler implements two functions: one transcodes a new chunk and stores it in a file, and the other processes an already transcoded chunk. The references to the files are kept in the StreamCache object. It is a simple FIFO cache that can keep two instances per file, i.e. for a specific source the cache holds at most two file references (one for the currently played chunk and one for the buffer that will play next). When a third arrives, the first is released, the second takes its place, and the new one takes the second place.
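The FIFO behaviour described above can be illustrated with a small sketch. It mirrors only the idea, not the C# StreamCache class from the download: the constructor parameters, the method names and the onRelease callback are assumptions.

```javascript
// FIFO cache that keeps at most `maxPerSource` chunk references per source.
class StreamCache {
  constructor(maxPerSource = 2, onRelease = () => {}) {
    this.maxPerSource = maxPerSource;
    this.onRelease = onRelease;   // called with each evicted reference
    this.entries = new Map();     // source -> [{ key, ref }], oldest first
  }

  // Adds a chunk reference; the oldest one is released when the limit is hit.
  add(source, key, ref) {
    const list = this.entries.get(source) || [];
    list.push({ key, ref });
    while (list.length > this.maxPerSource) {
      this.onRelease(list.shift().ref);
    }
    this.entries.set(source, list);
  }

  // Returns the cached reference for a chunk, or null if it is not cached.
  get(source, key) {
    const list = this.entries.get(source) || [];
    const hit = list.find((e) => e.key === key);
    return hit ? hit.ref : null;
  }
}
```

A usage example: after adding chunks with keys 0, 120 and 240 for the same source, the chunk with key 0 has been released and only 120 and 240 remain available.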

Details of implementation are in:

PseudoStreamHandler2 Class (\sources\PseudoStreamHandler2\PseudoStreamHandler2.cs), StreamCache Class (\sources\PseudoStreamHandler2\StreamCache.cs).

The handler based on memory-cached chunks is very similar to the handler above, except that StreamCache keeps references to memory and the handler implements a simple FTP server. I would just note that, to improve memory management, StreamCache does not create a MemoryStream every time a new chunk arrives; it reuses a MemoryStream that has already been played and is not currently in use.

Details of implementation are in:

PseudoStreamHandler Class (\sources\PseudoStreamHandler\PseudoStreamHandler.cs), StreamCache Class (\sources\PseudoStreamHandler\StreamCache.cs), (Implementation of ftp client connection handling) FtpClient class (\sources\PseudoStreamHandler\FtpClient.cs), PassiveListener class (\sources\PseudoStreamHandler\PassiveListener.cs).

There are a lot of open questions around StreamCache and the transcoded data. Because transcoding itself is too heavy an operation, it is probably good to keep all transcoded chunks in some storage, so that media can be streamed without transcoding and transcoded on demand only when the cache has no reference to the requested time frame. It is also necessary to lock the currently played buffer to prevent it from being overwritten when its data has not been streamed in full, and to let the cache grow instead of limiting it to two buffers. But basically, as a home-network solution for streaming from a media box to a few recipients, it can work.

Making it work properly will require big efforts on both the client and the server side, especially because the transcoding itself requires a lot of resources on the server, and because it needs feedback from the client about the playback experience. And even if it can be done for a few clients, it seems to me very difficult to do for a large number of clients without caching the transcoded data and re-streaming it.

As I said, it is just an experiment, and I am interested in feedback and new ideas. Maybe the solution is overkill, or it will never work in a real environment.

In the attached source code you will find the solution .\_build\MediaStreamer.sln for Visual Studio 2008. It builds a console application that runs an http/https server.

Before compiling you have to install ffmpeg: download the package from https://ffmpeg.org/download.html#build-windows and unpack the archive into the \workenv\ffmpeg\ folder. The build process will automatically copy it to the appropriate place.

For your convenience, before building the solution you can copy video content into the .\data folder, and it will be copied to the working directory of the server.

When the solution has been built successfully, you will find the folder .\_debug or .\_release, depending on the configuration you selected. In that folder, simply run start_http_server.bat to start the http server. As soon as the server has started successfully, you will find a PLAYER.lnk file in the folder; it will help you launch the default web browser.