Introduction

.NET 2.0 introduces many new features in Windows Forms and deployment technologies that take Smart Client development to the next level. Previously, developing a Smart Client meant handling many issues ourselves, including rich (owner-drawn) controls, multithreading and auto update. The 2.0 Framework takes care of most of these. However, many existing web-based systems, including Web Services and Web Sites, were developed in .NET 1.1. So, in this article, we will look at a real-life scenario where a .NET 2.0 based Smart Client uses a well-engineered collection of web services built on a Service Oriented Architecture (SOA) with .NET 1.1. The sample application takes you from early design issues to deployment complexities, and shows how a Smart Client can talk smartly to a collection of SOA-based web services. The server side makes full use of Enterprise Library June 2005, using all of its application blocks. The resulting product is an ideal example of best practices in cutting-edge technologies and current architecture design trends.

If you would like a pure .NET 1.1 Smart Client, please see my other article: RSS Feed Aggregator and Blogging Smart Client.


Feature Walkthrough

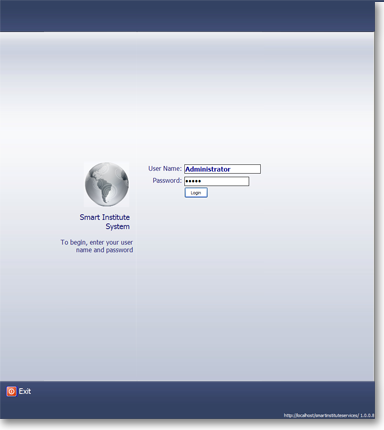

Login

Now this is something that you don't see every day. This looks like the login screen of a preview release of Longhorn, which has since been renamed Windows Vista. The newer login screen is quite different from this one, but I really liked this design and decided to use it in my own apps.

When you click the Login button, a background thread is spawned which connects to the web service and passes the credentials. As the login happens in the background, the UI remains active and you can cancel the login and exit. We will see how to make a truly responsive UI by calling Web Services asynchronously using the new BackgroundWorker component.

Fig: Login in progress


Loading Required Data Asynchronously

When the main form loads, it needs to load the course list and the student list. As this takes time and we cannot leave the user stuck on a frozen UI, both lists load in the background using two separate asynchronous web service calls. You can develop small controls in such a way that each control manages its own asynchronous loading using the convenient BackgroundWorker component.

The UI remains fully responsive while the application is loading. As this is a long task, the user may want to explore the menus or do some other chores while the application gets ready to work.


Tabbed Interface: Document-centric View

The application follows an enhanced version of the Model-View-Controller architecture, which we will discuss here. The architecture I have implemented is very similar to what you see in Microsoft Office, Visual Studio and other desktop applications that provide Automation Support. You will see how you can leverage this design idea to create a truly decoupled, extensible and scriptable application that significantly reduces development and maintenance time.

Each tab represents a Document, which can be a Student Profile, an Options module, the Send/Receive module or any other module that inherits from the Document class.

Modules can load their data asynchronously. For example, here the course list of a student loads asynchronously. Although the basic profile information of all students is loaded during application startup, the details are loaded on demand, which saves initial bandwidth and reduces the overall memory used by the application.


Owner-drawn Tree View

We will explore the new WinForms features of .NET 2.0 that let you take over the drawing of many controls. You can now create owner-drawn TreeView, ListView, ListBox and other controls. These controls offer a drawing event (for example DrawItem or DrawNode) with a matching overridable On... method, which you can use to provide custom rendering. For some controls, such as ListBox, you can also choose whether the item height is fixed or variable. When you draw the items yourself, you can do almost anything inside a node: show pictures along with text, draw text in different fonts and colors inside the same node, show hierarchical information, etc.

The Course TreeView on the left is owner drawn. You can see that the nodes show multiline content with a gradient background. Each node also renders multiple properties of an object. We will see how to do this later on.


Offline Capability

Offline capability is the best feature of Smart Clients. Smart Clients can download the data you need to work with later on and then disconnect from the information source. You can then work on the data while you are mobile or out of the office. Later on, you can connect to the information store again and send/receive the changes that you have made during the offline period.

For example, you can open the students you want to work with and then disconnect from the web service (go offline). You can then modify these students by editing their profiles, adding or dropping courses, modifying accounts, etc. Later on, when you are connected again, click the "Send/Receive" button and the changes are synchronized back to the server.

Both you and the server get the latest and up-to-date information.

You can enhance the offline experience further by providing local storage of information so that users can shut down the application after going offline and can later on start the application to work with the local data. This can easily be done by serializing the course and student collection to some local XML file.
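A minimal sketch of that idea, assuming a simplified, hypothetical OfflineStudent class in place of the application's real Student type (which is not shown here), and using the standard XmlSerializer from System.Xml.Serialization. It writes the working set to a file and reads it back; choosing the file location and handling I/O errors are left out.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Xml.Serialization;

// Hypothetical, simplified stand-in for the application's student data.
// XmlSerializer needs a public type with a public parameterless constructor.
public class OfflineStudent
{
    public int ID;
    public string FirstName;
    public bool IsDirty;   // true if changed while offline and not yet sent
}

public static class OfflineStore
{
    private static readonly XmlSerializer Serializer =
        new XmlSerializer(typeof(List<OfflineStudent>));

    // Persist the working set so the user can close the app while offline.
    public static void Save(List<OfflineStudent> students, string path)
    {
        using (StreamWriter writer = new StreamWriter(path))
        {
            Serializer.Serialize(writer, students);
        }
    }

    // Reload the working set on the next start.
    public static List<OfflineStudent> Load(string path)
    {
        using (StreamReader reader = new StreamReader(path))
        {
            return (List<OfflineStudent>)Serializer.Deserialize(reader);
        }
    }
}
```

Save would be called when the user goes offline or closes the application, and Load on the next start; the IsDirty field records which students still have to go out with the next Send/Receive.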


Going Online

Here you see the Outlook 2003 style Send/Receive module. This module collects the pending saves and sends them to the server. It also fetches the latest information for the students you have modified.

Any errors received from the server are shown on the second tab. This tab uses an owner-drawn ListBox control to show both an icon and the text of each error message.

For such offline synchronization, you need to handle concurrency on the server side. We will discuss the server architecture and how we have implemented concurrency later on.


Being a Smart Client

Requirements

To qualify as a Smart Client, an application needs to fulfill the following requirements, according to the MSDN definition of a Smart Client:

Local Resources and User Experience

What all Smart Client applications share is an ability to exploit local resources such as hardware for storage, processing or data capture, for example compact flash memory, CPUs and scanners. Smart Client solutions offer hi-fidelity end-user experiences by taking full advantage of all that the Microsoft® Windows® platform has to offer. Examples of well known Smart Client applications are Word, Excel, MS Money, and even PC games such as Half-Life 2. Unlike "browser-based" applications such as Amazon.com or eBay.com, Smart Client applications live on your PC, laptop, Tablet PC, or smart device.

Connected

Smart Client applications are able to readily connect to and exchange data with systems across the enterprise or the internet. Web services allow Smart Client solutions to utilize industry standard protocols such as XML, HTTP and SOAP to exchange information with any type of remote system.

Offline Capable

Smart Client applications work whether connected to the Internet or not. Microsoft® Money and Microsoft® Outlook are two great examples. Smart Clients can take advantage of local caching and processing to enable operations during periods of no network connectivity or intermittent network connectivity. Offline capabilities are not only of use in mobile scenarios, however; desktop solutions can take advantage of offline architecture to update backend systems on background threads, thus keeping the user interface responsive and improving the overall end-user experience. This architecture can also provide cost and performance benefits, since the user interface need not be shuttled to the Smart Client from a server. Since Smart Clients can exchange just the data needed with other systems in the background, the volume of data exchanged with other systems is reduced (even on hard-wired client systems, this bandwidth reduction can bring huge benefits). This in turn increases the responsiveness of the user interface (UI), since the UI is not rendered by a remote system.

Intelligent Deployment and Update

In the past, traditional client applications have been difficult to deploy and update. It was not uncommon to install one application only to have it break another. Issues such as "DLL Hell" made installing and maintaining client applications difficult and frustrating. The Updater Application Block for .NET from the Patterns and Practices team provides prescriptive guidance to those that wish to create self-updating .NET Framework-based applications that are to be deployed across multiple desktops. The release of Visual Studio 2005 and the .NET Framework 2.0 will beckon a new era of simplified Smart Client deployment and updating with the release of a new deploy and update technology known as ClickOnce.

(The above text is copied and shortened from the MSDN site.)

How is This a Smart Client

- Local Resources and User Experience: The application is downloaded from a website using the ClickOnce deployment feature of .NET 2.0 and runs locally. It fully utilizes local resources such as multithreading and the power of the graphics card, giving you the best user experience .NET 2.0 has to offer.

- Connected: The application works by calling several web services which act as the backend of the client.

- Offline Capable: You can load the data of the students you want to work with and go offline. You can make changes to student profiles, add/drop courses, modify accounts, etc. and then synchronize the changes when you go back online.

- Intelligent Deployment and Update: The application uses Updater Application Block 2.0 to provide an auto update feature. Whenever I release a new version or deploy bug fixes to a central server, all users of this application automatically get the update behind the scenes. This saves every user from going to the website and downloading the new version every time I release something new. It also lets me deliver bug fixes to everyone within a very short time.

- Multithreaded: Another favorite requirement of mine for making a client really smart is to make the application fully multithreaded. Smart Clients should always be responsive. They must not freeze whenever they are downloading or uploading data. Users keep on working without noticing that something big is going on in the background. For example, while one student's course list is loading, you can move around the course list on the left or work on another student's data that is already loaded.

- Crash Proof: A Smart Client becomes a Dumb Client when it crashes in front of a user showing the dreaded "Continue" or "Quit" dialog box. In order to make your app truly Smart, you need to catch any unhandled error and publish the error safely. In this app, I will show you how this has been done using .NET 2.0's Unhandled Exception trap feature.

The Client

The greatest feature of this application is that it provides an automation model similar to what you see in Microsoft Office applications. As a result, you can write scripts to automate the application and develop custom plug-ins. The idea and implementation of this architecture are quite big and are explained in this article:

The basic idea is to make an observable Object Model that all the UI modules or Views subscribe to in order to provide services based on changes made to the object model. For example, as soon as you add a new Document object to the Documents collection, the Tab module catches the Add event and creates a new tab. The Views also reflect changes made on the UI back to the object model. For example, when the user switches tabs, the view sets the Selected property of the corresponding Document object to true.
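To illustrate the idea, here is a stripped-down sketch; these are not the author's actual classes. A hypothetical DocumentList raises a DocumentAdded event, and a hypothetical TabStripView subscribes to it and creates a "tab" (here just a title string). When the user selects a tab, the view only writes to the model's Selected field, so the model never references the view.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical event data carrying the added document.
public class DocEventArgs : EventArgs
{
    public readonly Doc Document;
    public DocEventArgs(Doc document) { Document = document; }
}

// Hypothetical model object: knows nothing about any view.
public class Doc
{
    public readonly string Title;
    public bool Selected;
    public Doc(string title) { Title = title; }
}

// Hypothetical observable collection that announces additions.
public class DocumentList
{
    private readonly List<Doc> _items = new List<Doc>();

    public event EventHandler<DocEventArgs> DocumentAdded;

    public int Count { get { return _items.Count; } }

    public void Add(Doc doc)
    {
        _items.Add(doc);
        EventHandler<DocEventArgs> handler = DocumentAdded;
        if (handler != null)
            handler(this, new DocEventArgs(doc));
    }
}

// Hypothetical view: creates a "tab" when the model reports a new document,
// and writes the user's tab selection back into the model.
public class TabStripView
{
    public readonly List<string> Tabs = new List<string>();
    private readonly List<Doc> _docs = new List<Doc>();

    public TabStripView(DocumentList model)
    {
        model.DocumentAdded += delegate(object sender, DocEventArgs e)
        {
            _docs.Add(e.Document);
            Tabs.Add(e.Document.Title);   // stands in for creating a real tab page
        };
    }

    // Called when the user clicks a tab: only the model is updated.
    public void UserSelectsTab(int index)
    {
        for (int i = 0; i < _docs.Count; i++)
            _docs[i].Selected = (i == index);
    }
}
```

Whoever adds a document (a menu item, a command or a script), the tab appears, because the view listens only to the model.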

This is the object model of the Client application. _Application is the starting point. This is not the WinForms Application class; it is my own Application class, with a "_" prefix. It contains collections of StudentModel, CourseModel and Document objects. These are all observable classes that provide notification of a variety of events. They all inherit from a class named ItemBase, which provides the foundation for an observable object model. ItemBase exposes many events that you can subscribe to in order to get notified whenever something happens to the object.

```csharp
public abstract class ItemBase : IDisposable, IXml
{
    ...
    ...
    public virtual event ItemSelectHandler OnSelect;
    public virtual event ItemQueryClose OnQueryClose;
    public virtual event ItemChangeHandler OnChange;
    public virtual event ItemDetachHandler OnDetach;
    public virtual event ItemShowHandler OnShow;
}
```

When you derive your class from ItemBase, you get the ability to observe changes made to your objects. Your derived classes can call ItemBase's NotifyChange method to let others know when the object changes. For example, let's look at the StudentModel, which listens for changes made to its student profile. Whenever there is a change, it calls the NotifyChange method to let others know that it has changed.

So, this gives you the following features:

- Whenever you make changes to the Student object, e.g. set its FirstName property to "Misho" (my name), the "First Name" text box on the UI gets updated. This happens because the StudentProfile UserControl, which contains the text box, has already subscribed to events from the StudentModel and exists solely to reflect changes made in the object model to the UI.

- Similarly, whenever the user types anything in the first name text box, the UserControl immediately updates the FirstName property of the StudentModel. As a result, the OnChange event is fired, and another module which is Far Far Away (Shrek 2) receives the notification and updates the title bar of the application to reflect the current name of the student.
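The mechanism behind these two features can be sketched in a few lines. ObservableItem below is a hypothetical, much smaller stand-in for ItemBase (it has only an OnChange event and a protected NotifyChange method), and SimpleStudent is a hypothetical model class that calls NotifyChange from its property setter. The real ItemBase also handles the parent, dirty state and many more events.

```csharp
using System;

// Hypothetical event data naming the property that changed.
public class ItemChangeEventArgs : EventArgs
{
    public readonly string Property;
    public ItemChangeEventArgs(string property) { Property = property; }
}

// Hypothetical, minimal analogue of ItemBase.
public abstract class ObservableItem
{
    public event EventHandler<ItemChangeEventArgs> OnChange;

    // Derived classes call this whenever their state changes.
    protected void NotifyChange(string property)
    {
        EventHandler<ItemChangeEventArgs> handler = OnChange;
        if (handler != null)
            handler(this, new ItemChangeEventArgs(property));
    }
}

// Hypothetical model class with one observable property.
public class SimpleStudent : ObservableItem
{
    private string _firstName = "";

    public string FirstName
    {
        get { return _firstName; }
        set
        {
            if (_firstName == value)
                return;                 // no notification for no-op writes
            _firstName = value;
            NotifyChange("FirstName");
        }
    }
}
```

A title bar module would subscribe to OnChange and rebuild its caption from the sender; the StudentProfile text box would subscribe the same way, and neither needs a reference to the other.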

So, you can now have objects that broadcast events. Any module can subscribe to or unsubscribe from a student object at any time and provide some service without ever knowing about the existence of other modules. This makes your program's architecture extremely simple and loosely coupled, as you only have to think about how to listen to changes in the global object model and how to update that object model. You never have to think: hmm... there's a title bar which needs to be changed when the user types something in the "First Name" text box, which is inside a StudentProfile UserControl. Hmm... the user control is in another DLL. How can I capture the change notification on the text box and carry it all the way to the top Form? Hmm... looks like we need to expose a public event on the user control and subscribe to it somehow from the Form. Now, from the Form, how do I get a reference to the user control, which is hosted inside another control, which is inside another control, which is also inside another control? Hmm... So, we have to add events to all these nested user controls in order to bubble up the event raised deep inside the StudentProfile control. Life is so enjoyable, isn't it?

Instead, you can make the Form subscribe to the OnItemChange event of the StudentCollection. The StudentProfile control reflects changes from the UI to the Student object, which raises the event. The Form instantly captures it and updates the title bar. But this requires you to have a global object model, just like MS Word's object model.

Let's see the difference between how we normally design desktop applications and how an automation model can dramatically change the way we develop them:

Making such an object model is quite easy when you have the ItemBase class, which I have perfected over the years, project after project. All you need to do is extend it, pass the parent to the base constructor, and call the base methods whenever you like. For example, the StudentModel class is as simple as this:

```csharp
public class StudentModel : ItemBase
{
    public StudentModel( Student profile, object parent ) : base( parent )
    {
        this.Profile = profile;
        // Listen to changes made on the underlying Student object.
        profile.AfterChanged += new StudentEventHandler(profile_AfterChanged);
    }

    void profile_AfterChanged(object sender, StudentEventArgs e)
    {
        // Mark the model as modified and broadcast the change to all observers.
        base.IsDirty = true;
        base.NotifyChange("Dirty");
    }
}
```

Similarly, there is a CollectionBase class which is the base of all observable collections. This class provides you with two major features:

- Notification when items are added, removed or cleared.

- Notification from any of the contained items.

The second feature is the most useful one. If you have a StudentCollection class that contains 1000 Student objects, you don't want to subscribe to 1000 objects' OnChange events. Instead, you subscribe to the OnItemChange event of the collection, which is automatically fired whenever a child object raises its OnChange event. The CollectionBase class handles all the details of subscribing to and propagating events from its child objects for your convenience.

```csharp
public abstract class CollectionBase : System.Collections.CollectionBase
{
    public event ItemChangeHandler OnItemChange;
    public event ItemDetachHandler OnItemDetach;
    public event ItemShowHandler OnItemShow;
    public event ItemSelectHandler OnItemSelect;
    public event ItemUndoStateHandler OnItemUndo;
    public event ItemRedoStateHandler OnItemRedo;
    public event ItemBeginUpdateHandler OnItemBeginUpdate;
    public event ItemEndUpdateHandler OnItemEndUpdate;
    public event CollectionAddHandler OnItemCollectionAdd;
    public event CollectionRemoveHandler OnItemCollectionRemove;
    public event CollectionClearHandler OnItemCollectionClear;
    public event SelectionChangeHandler OnItemCollectionSelectionChanged;
    public event CollectionAddRangeHandler OnAddRange;
    public event CollectionRefreshHandler OnRefresh;
}
```
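The relaying of child events can be sketched as follows. This is a hypothetical, much reduced analogue of CollectionBase, not its real implementation: TrackedStudentCollection subscribes to each child's Changed event when the child is added, unsubscribes when it is removed, and re-raises every child notification as a single ItemChanged event.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical child item that reports its own changes.
public class TrackedStudent
{
    private string _name;
    public event EventHandler Changed;

    public TrackedStudent(string name) { _name = name; }

    public string Name
    {
        get { return _name; }
        set
        {
            _name = value;
            EventHandler handler = Changed;
            if (handler != null) handler(this, EventArgs.Empty);
        }
    }
}

// Hypothetical collection that relays child events: subscribes on Add,
// unsubscribes on Remove, and re-raises each child's Changed as ItemChanged.
public class TrackedStudentCollection
{
    private readonly List<TrackedStudent> _items = new List<TrackedStudent>();

    // The sender passed to handlers is the child that changed.
    public event EventHandler ItemChanged;

    public void Add(TrackedStudent student)
    {
        _items.Add(student);
        student.Changed += Child_Changed;
    }

    public bool Remove(TrackedStudent student)
    {
        if (!_items.Remove(student))
            return false;
        student.Changed -= Child_Changed;   // stop relaying for removed items
        return true;
    }

    private void Child_Changed(object sender, EventArgs e)
    {
        EventHandler handler = ItemChanged;
        if (handler != null) handler(sender, e);
    }
}
```

A Form that keeps its title bar in sync subscribes once to ItemChanged instead of once per student. Unsubscribing in Remove matters: without it, a removed student would keep reporting its changes through the collection.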

ItemBase provides another feature: listening to a child collection. For example, a Course object has a collection of Section objects, each of which in turn has a collection of SectionRoutine objects. The Course object, being an ItemBase, can receive events raised from the SectionCollection, which inherits from CollectionBase. As a result, the Course object can receive events from the Section objects contained in the SectionCollection.

Command Pattern

For desktop applications, this is probably the best pattern of all. Command Pattern has many variants, but the simplest form looks like this:

I have used the Command Pattern for all activities that involve a web service call and a UI update. Here is a list of the commands I have used, which will give you an idea of how to break your code into small commands:

- LoadAllCourseCommand - Load all courses and sections by calling Course web service and populate the _Application.Courses collection.

- AuthenticateCommand - Call Security web service to authenticate the specified credentials.

- LoadStudentDetailCommand - Load details of a particular student. Called when you load a student for editing.

- SaveStudentCommand - Called by the "Send/Receive" module to save a student's information to the server.

- CloseAllDocumentsCommand - Called by the Window->Close All menu item to close all open documents.

- CloseCurrentDocumentCommand - Called by the Window->Close menu item and the Tab->Close menu item in order to close the current document.

- ExitCommand - Exits application.

So, you see, all user activities are translated into some form of command. Commands are like small packets of instructions. Normally each command is self-sufficient: it does everything from calling the web service and fetching data to updating the object model and, optionally, the UI. This makes the commands truly reusable and very easy to call from anywhere you like. It also reduces duplicate code in different modules that provide similar functionality.
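Here is a minimal sketch of the simplest form of the pattern. It assumes a one-method ICommand interface, which matches how commands are called in BackgroundThreadWork later in this article (new SaveStudentCommand(...) followed by Execute()), although the article does not show the interface itself. StudentRecord, RenameStudentCommand and CommandRunner are hypothetical; a real command such as SaveStudentCommand would call a web service inside Execute.

```csharp
using System;
using System.Collections.Generic;

// Assumed shape of the article's ICommand: one parameterless Execute().
public interface ICommand
{
    void Execute();
}

// Hypothetical data object the command operates on.
public class StudentRecord
{
    public string FirstName;
    public bool IsDirty;
}

// A self-contained command: everything it needs comes in through the
// constructor, so a menu item, a button or a script can run it the same way.
public class RenameStudentCommand : ICommand
{
    private readonly StudentRecord _student;
    private readonly string _newName;

    public RenameStudentCommand(StudentRecord student, string newName)
    {
        if (student == null) throw new ArgumentNullException("student");
        _student = student;
        _newName = newName;
    }

    public void Execute()
    {
        _student.FirstName = _newName;
        _student.IsDirty = true;   // to be picked up by a later Send/Receive
    }
}

// Hypothetical invoker: runs commands in order without knowing what they do.
public static class CommandRunner
{
    public static int RunAll(IEnumerable<ICommand> commands)
    {
        int count = 0;
        foreach (ICommand command in commands)
        {
            command.Execute();
            count++;
        }
        return count;
    }
}
```

Because the invoker only sees ICommand, the same list of commands could be executed from the UI thread, a background worker or a script host.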

For details about the Command pattern, see my famous article:

Background Send Receive of Tasks

We will now take a look at a complete implementation of everything we have seen so far. Let's look at the complicated module "Send Receive" which performs the synchronization.

There are two classes that host this view:

- SendReceiveDocument - a class which inherits from DocumentBase and works as a gateway between the object model and the User Control which provides the UI.

- SendReceiveUI - a UserControl which provides the interactivity.

The SendReceiveDocument is quite simple:

```csharp
public class SendReceiveDocument : DocumentBase
{
    private IList<StudentModel> _Students;

    public IList<StudentModel> Students
    {
        get { return _Students; }
        set { _Students = value; }
    }

    public SendReceiveDocument(IList<StudentModel> students, object parent)
        : base("SendReceive", "Send/Receive", new SendReceiveUI(), parent)
    {
        this._Students = students;
    }

    public override bool Accept(object data)
    {
        if (data is IList<StudentModel>)
            return base.UI.AcceptData(data);
        else
            return false;
    }

    public override bool CanAccept(object data)
    {
        return false;
    }

    public override bool IsFeatureSupported(object feature)
    {
        return false;
    }
}
```

It contains a list of Students that need to be synchronized. SendReceiveUI inherits from DocumentUIBase, the base user control for all Document-type user controls. DocumentUIBase provides a reference to the Document it needs to show.

```csharp
public partial class SendReceiveUI : DocumentUIBase
{
    private IList<StudentModel> _Students
    {
        get { return (base.Document as SendReceiveDocument).Students; }
        set { (base.Document as SendReceiveDocument).Students = value; }
    }

    public SendReceiveUI()
    {
        InitializeComponent();
    }

    internal override bool AcceptData(object data)
    {
        this._Students = data as IList<StudentModel>;
        this.StartSendReceive();
        return true;
    }
}
```

So, the architecture looks like this:

When synchronization starts, SendReceiveUI calls its StartSendReceive method:

```csharp
private void StartSendReceive()
{
    this.tasksListView.Items.Clear();
    foreach (StudentModel student in this._Students)
    {
        ListViewItem item = new StudentListViewItem(student);
        this.tasksListView.Items.Add(item);
    }
    ...
    ...
    worker.RunWorkerAsync();
}
```

Here, worker is a BackgroundWorker component, one of .NET 2.0's marvels. This component provides a very convenient way to add multithreading to your UI. When you put this component on your Form or UserControl, you get three events:

- DoWork - where you do the actual work. This handler is executed on a different thread, and you cannot access any UI element from this event or from any function that is called, directly or indirectly, from it.

- ProgressChanged - fired when you call the ReportProgress method on the worker component (which requires WorkerReportsProgress to be set to true). This event is raised on the UI thread (more precisely, through the SynchronizationContext of the thread that called RunWorkerAsync), so you are free to use the UI components. However, whatever you do here blocks the main UI thread, so do only a very small amount of work here. Also remember not to call ReportProgress too frequently.

- RunWorkerCompleted - fired when the DoWork handler has finished; if DoWork threw an exception, it is available in the event's Error property. This handler is also called on the UI thread, so you are free to use UI controls.
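The three events can be seen outside WinForms too. The sketch below is a hypothetical helper, not code from the application: DoWork runs on a thread-pool thread and hands its result to RunWorkerCompleted through e.Result. Without a WinForms message loop there is no UI thread to marshal to, so here the progress and completion events also arrive on pool threads, and the method blocks on a ManualResetEvent only so that the result can be returned and checked.

```csharp
using System;
using System.ComponentModel;
using System.Threading;

// Hypothetical demo of the BackgroundWorker event flow.
public static class WorkerDemo
{
    // Sums 1..n on a background thread and returns the total.
    public static long SumInBackground(int n)
    {
        long result = 0;
        Exception error = null;

        using (ManualResetEvent done = new ManualResetEvent(false))
        using (BackgroundWorker worker = new BackgroundWorker())
        {
            worker.WorkerReportsProgress = true;   // ReportProgress throws otherwise

            worker.DoWork += delegate(object sender, DoWorkEventArgs e)
            {
                // Worker thread: compute only, no UI access.
                int count = (int)e.Argument;
                long sum = 0;
                for (int i = 1; i <= count; i++)
                {
                    sum += i;
                    if (i % 100 == 0)   // report rarely, not on every step
                        worker.ReportProgress((int)((long)i * 100 / count));
                }
                e.Result = sum;         // handed to RunWorkerCompleted
            };

            worker.ProgressChanged += delegate(object sender, ProgressChangedEventArgs e)
            {
                // In a WinForms app this runs on the UI thread, e.g. to update
                // a progress bar from e.ProgressPercentage. Nothing to do here.
            };

            worker.RunWorkerCompleted += delegate(object sender, RunWorkerCompletedEventArgs e)
            {
                // An exception thrown in DoWork arrives here as e.Error.
                if (e.Error != null)
                    error = e.Error;
                else
                    result = (long)e.Result;
                done.Set();
            };

            worker.RunWorkerAsync(n);   // n becomes e.Argument in DoWork
            done.WaitOne();             // demo only: never block a UI thread like this
        }

        if (error != null)
            throw new InvalidOperationException("Background work failed", error);
        return result;
    }
}
```

In the Send/Receive module the same roles are played by worker_DoWork, the ReportProgress calls that update the list view, and the completion handler; the blocking wait exists only in this console-style sketch.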

Here's the DoWork event handler:

```csharp
private void worker_DoWork(object sender, DoWorkEventArgs e)
{
    this.BackgroundThreadWork();
}
```

I generally follow the practice of including the word "Background" in the names of methods that are called from here. This gives me a visual reminder that I am not on the UI thread. Any function called from such a function is also marked with the word "Background".

The actual work is performed in this method. Let's see what the Send/Receive module does:

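```csharp
private void BackgroundThreadWork()
{
    // Runs on the worker thread: status goes to the UI through ReportProgress
    // (requires worker.WorkerReportsProgress = true), never by touching controls.
    foreach (StudentModel model in this._Students)
    {
        try
        {
            worker.ReportProgress((int)StudentListViewItem.StatusEnum.Sending, model);
            ICommand saveCommand = new SaveStudentCommand(model.Profile);
            saveCommand.Execute();

            worker.ReportProgress((int)StudentListViewItem.StatusEnum.Receiving, model);
            ICommand loadCommand = new LoadStudentDetailCommand(model.Profile.ID);
            loadCommand.Execute();

            // NotifyChange raises its events on this background thread, so any
            // subscriber that updates a control must marshal to the UI thread
            // (e.g. with Control.Invoke) unless ItemBase already does so.
            model.IsDirty = false;
            model.NotifyChange("Reloaded");
            model.LastErrorInSave = string.Empty;
            worker.ReportProgress((int)StudentListViewItem.StatusEnum.Completed, model);
        }
        catch (Exception x)
        {
            // Record the error and continue with the next student.
            model.LastErrorInSave = x.Message;
            worker.ReportProgress((int)StudentListViewItem.StatusEnum.Error, model);
        }
    }
}
```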

In this method, we call the web services for doing the actual work. As this method is running in a background thread, the UI does not get blocked while the web service calls are in progress.

You see, the tasks are actually encapsulated in small commands. These commands make the web service calls and update the object model. I need not worry about refreshing the UI or repopulating the student list drop-down from here, because whenever the object model is updated, they update themselves. I need not think about any other part of the application at all and can focus on the duties relevant to this context only.

So you see, whenever you add a new module to your application, you need not change existing modules' code in order to use the new module. The new module just subscribes to the object model and does its job whenever certain events are raised. You can also tear a module out of any part of the application without worrying about its dependencies on other modules. There are no such dependencies: every module depends only on the object model, and every module listens to the object model for its own purpose. Nobody cares who comes in and who goes away.

This is the true power of an automation supported object model.

Owner-drawn ListBox

You have seen in the "Send Receive" module's screenshot that a simple ListBox is showing both an icon and text. This is possible if you tell the ListBox not to do any rendering itself and let you take care of the rendering. This is called owner drawing. .NET 2.0's ListBox control has the DrawMode property, which can have the following enumerated values:

- Normal - The framework does the default rendering of list box items, which is plain text.

- OwnerDrawFixed - You draw the items, but every item has the same fixed height.

- OwnerDrawVariable - You decide the height of each item (in the MeasureItem event) and draw each item as you like.

After setting the drawing mode, subscribe to the DrawItem event and do your drawing.

```csharp
private void errorsListBox_DrawItem(object sender, DrawItemEventArgs e)
{
    // Index is -1 when the list box has no items.
    if (e.Index < 0)
        return;

    Rectangle bounds = e.Bounds;
    bounds.Inflate(-4, -2);
    float left = bounds.Left;

    // Clear the item background.
    e.Graphics.FillRectangle(Brushes.White, e.Bounds);

    // DrawItemState is a flags enum, so test the individual bits.
    if ((e.State & DrawItemState.Focus) == DrawItemState.Focus
        || (e.State & DrawItemState.Selected) == DrawItemState.Selected)
        ControlPaint.DrawFocusRectangle(e.Graphics, e.Bounds);

    // Icon first, then the text to its right.
    Image icon = this.imageList1.Images[3];
    e.Graphics.DrawImage(icon, new PointF(left, bounds.Top));
    left += icon.Width;

    RectangleF textBounds = new RectangleF(left, bounds.Top,
        bounds.Right - left, bounds.Height);
    e.Graphics.DrawString(this.errorsListBox.Items[e.Index] as string,
        this.errorsListBox.Font, Brushes.Black, textBounds);

    // Separator along the last pixel row of the item.
    e.Graphics.DrawLine(Pens.LightGray, e.Bounds.Left, e.Bounds.Bottom - 1,
        e.Bounds.Right, e.Bounds.Bottom - 1);
}
```

The parameter e gives you everything you need to know about the drawing context, including the Graphics object and the Bounds of the current item. You can see in the code that, within the bounds, we can use the Graphics object to draw whatever we like. First, we fill the area with white, which clears it. Then we check whether this is the selected or focused item and draw the focus rectangle accordingly. After that, we draw an image followed by the text of the item.
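One detail worth knowing about e.State: DrawItemState is a flags enumeration, so a selected item that also has the focus carries both bits at once, and a test such as e.State == DrawItemState.Selected is then false. The sketch below uses a small hypothetical ItemStateFlags enum (it mirrors the idea, not the real member values) to show the difference between an equality test and a bitwise test.

```csharp
using System;

// Hypothetical flags enum standing in for DrawItemState.
[Flags]
public enum ItemStateFlags
{
    None = 0,
    Selected = 1,
    Focus = 2,
}

public static class StateCheck
{
    // Wrong for flags: true only when Selected is the ONLY bit set.
    public static bool IsSelectedByEquality(ItemStateFlags state)
    {
        return state == ItemStateFlags.Selected;
    }

    // Right for flags: true whenever the Selected bit is present.
    public static bool IsSelectedByMask(ItemStateFlags state)
    {
        return (state & ItemStateFlags.Selected) == ItemStateFlags.Selected;
    }
}
```

Enum.HasFlag does the same job from .NET 4 onwards; the bitwise form also works on .NET 2.0.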

Owner-drawn TreeView

Just like the owner-drawn ListBox, we can make the TreeView control owner drawn and do whatever we like with the nodes. For example, the screenshot shows that we are rendering a gradient background and multiline text on a node. This was not possible with .NET 1.1 unless you went under the hood and worked with the Win32 API. .NET 2.0 lets you do it the managed way, which is the easy way.

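First, set the DrawMode property to TreeViewDrawMode.OwnerDrawAll (and ItemHeight high enough for two lines of text); then override the OnDrawNode method, which does the actual drawing.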


```csharp
protected override void OnDrawNode(DrawTreeNodeEventArgs e)
{
    Brush brushBack = SystemBrushes.Window;
    Brush brushText = SystemBrushes.WindowText;
    Brush brushDim = Brushes.Gray;

    if ((e.State & TreeNodeStates.Selected) != 0)
    {
        if ((e.State & TreeNodeStates.Focused) != 0)
        {
            brushBack = SystemBrushes.Highlight;
            brushText = SystemBrushes.HighlightText;
            brushDim = SystemBrushes.HighlightText;
        }
        else
        {
            brushBack = SystemBrushes.Control;
            brushDim = SystemBrushes.WindowText;
        }
    }

    RectangleF rc = new RectangleF(e.Bounds.X, e.Bounds.Y,
        this.ClientRectangle.Width, e.Bounds.Height);
    e.Graphics.FillRectangle(brushBack, rc);

    if (rc.Width > 0 && rc.Height > 0)
    {
        if (e.Node is CourseNode)
            this.DrawCourseNode(e, rc, brushBack, brushText, brushDim);
        else if (e.Node is SectionNode)
            this.DrawSectionNode(e, rc, brushBack, brushText, brushDim);
        else if (e.Node is RoutineNode)
            this.DrawRoutineNode(e, rc, brushBack, brushText, brushDim);
    }
}
```

Most of the code here deals with the current node state: it picks the highlight, control or window colors and fills the node background accordingly. At the end, depending on the type of the current node, we call the actual render function.

```csharp
private void DrawCourseNode(DrawTreeNodeEventArgs e, RectangleF rc,
    Brush brushBack, Brush brushText, Brush brushDim)
{
    CourseNode node = e.Node as CourseNode;

    // Gradient background only for unselected nodes; selected nodes keep
    // the highlight color already filled in OnDrawNode.
    if ((e.State & TreeNodeStates.Selected) == 0)
    {
        this.GradientFill(e.Graphics, rc, Color.White,
            Color.Gainsboro, rc.Height / 2);
    }

    // First line: the course title.
    float textX = rc.Left;
    SizeF titleSize = this.DrawString(e.Graphics, node.Course.Title,
        base.Font, brushText, textX, rc.Top);

    // Second line: status, capacity and section count, drawn piece by piece.
    float secondLineY = rc.Top + titleSize.Height;
    if (!node.IsAllowed)
        textX += this.DrawString(e.Graphics, "All Closed ",
            base.Font, Brushes.DarkRed, textX, secondLineY).Width;

    int capacity = node.Capacity;
    string count = "(" + capacity + ") ";
    textX += this.DrawString(e.Graphics, count, base.Font,
        brushText, textX, secondLineY).Width;

    int sectioncount = node.Course.CourseSectionCollection.Count;
    string sections = sectioncount.ToString() + " section"
        + (sectioncount != 1 ? "s" : "");   // "0 sections", "1 section", "2 sections"
    textX += this.DrawString(e.Graphics, sections,
        base.Font, brushText, textX, secondLineY).Width;
}
```

In this method, we have the full power to draw text and icons using any style or font. This gives us complete control over the rendering of the TreeView control while still reusing all of its existing functionality.

Web Service Connection Configuration

When you add a reference to a web service, the proxy code is generated with the web service location embedded in the code. So, if you add the web service reference from the local computer during development, the reference points to localhost. Before going to production, you need to change all the web service proxies so that they point to the production server.

Another problem is, what if your web service locations are not static? What if you need to make the URL configurable from your own configuration file, instead of fixing it from Visual Studio?

I solve it the following way:

- I store the server name in a configuration variable.

- I use a ServiceProxyFactory class, which gives me the web service proxy reference I need, instead of accessing a web service proxy directly.

So, basically I am following the Factory pattern, where a factory decides which proxy to return and also prepares the reference before returning it. For example:

```csharp
internal class ServiceProxyFactory
{
    public static StudentService GetStudentService()
    {
        StudentService service = new StudentService();
        service.Url = _Application.Settings.WebServiceUrl + "StudentService.asmx";

        SmartInstitute.Automation.SmartInstituteServices.StudentService.CredentialSoapHeader header =
            new SmartInstitute.Automation.SmartInstituteServices.StudentService.CredentialSoapHeader();
        header.Username = _Application.Settings.UserInfo.UserName;
        header.PasswordHash = _Application.Settings.UserInfo.UserPassword;
        header.VersionNo = _Application.Settings.VersionNo;

        SetProxy(service);
        service.CredentialSoapHeaderValue = header;
        return service;
    }
}
```

The following issues are handled here:

- Sets the URL of the proxy according to the configuration.

- Sets a SOAP Header which contains the credential. More on this later on.

- Sets the HTTP proxy. Configuration decides whether to use a proxy or not. I needed this to be configurable instead of always using the default proxy, because I wanted requests to my web server to bypass the default proxy.
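SetProxy itself is not shown. Here is a minimal sketch of the decision it describes, assuming hypothetical ProxySettings fields (UseProxy, ProxyAddress, BypassList) that are not part of the sample's actual settings. It relies only on System.Net.WebProxy: bypass-list entries are regular expressions that WebProxy matches against the "scheme://host[:port]" part of the request URL.

```csharp
using System;
using System.Net;

// Hypothetical settings holder; the sample's real proxy settings are not shown.
public sealed class ProxySettings
{
    public bool UseProxy { get; set; }
    public string ProxyAddress { get; set; } = "";
    // Regular expressions for hosts that should be reached directly,
    // e.g. the server hosting the web services.
    public string[] BypassList { get; set; } = new string[0];
}

public static class ProxyConfigurator
{
    // Builds the proxy a web service client should use.
    public static IWebProxy CreateProxy(ProxySettings settings)
    {
        if (!settings.UseProxy || string.IsNullOrEmpty(settings.ProxyAddress))
        {
            // A WebProxy without an address bypasses every host, i.e. connects directly.
            return new WebProxy();
        }
        // bypassOnLocal = true: loopback and dot-less intranet names also go direct.
        return new WebProxy(settings.ProxyAddress, true, settings.BypassList);
    }
}
```

Inside SetProxy the result could then be assigned with service.Proxy = ProxyConfigurator.CreateProxy(settings). I return an empty WebProxy rather than null for the "no proxy" case so that the code does not depend on how a null Proxy value is interpreted.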

The Server - Service Oriented Architecture

Requirements

The word "Service" has the following definition as per Wikipedia:

A self-contained, stateless function which accepts one or more requests and returns one or more responses through a well-defined interface. Services can also perform discrete units of work such as editing and processing a transaction. Services do not depend on the state of other functions or processes. The technology used to provide the service does not form part of this definition.

Moreover, a service needs to be stateless:

Not depending on any pre-existing condition. In an SOA, services do not depend on the condition of any other service. They receive all information needed to provide a response from the request. Given the statelessness of services, consumers can sequence (orchestrate) them into numerous sequences (sometimes referred to as pipelines) to perform application logic.

Furthermore, on how SOAs compare with traditional architectures:

Unlike traditional object-oriented architectures, SOAs comprise of loosely joined, highly interoperable application services. Because these services interoperate over different development technologies (such as Java and .NET), the software components become very reusable.

SOA provides a methodology and framework for documenting enterprise capabilities and can support integration and consolidation activities.

The Sample SOA

The web project in the source code exposes four services:

- Student Service: Provides Student related services like loading all students, saving a student etc.

- Course Service: Provides Course related services like loading all courses.

- Account Service: Loading/saving accounts, calculating assessment etc.

- Security Service: Provides authentication and authorization using Enterprise Library's Authentication Provider.

Enterprise Library

Enterprise Library is a major new release of the Microsoft Patterns & Practices application blocks. Application blocks are reusable software components designed to assist developers with common enterprise development challenges. Enterprise Library brings together new releases of the most widely used application blocks into a single integrated download.

The overall goals of the Enterprise Library are the following:

- Consistency. All Enterprise Library application blocks feature consistent design patterns and implementation approaches.

- Extensibility. All application blocks include defined extensibility points that allow developers to customize the behavior of the application blocks by adding in their own code.

- Ease of use. Enterprise Library offers numerous usability improvements, including a graphical configuration tool, a simpler installation procedure, and clearer and more complete documentation and samples.

- Integration. Enterprise Library application blocks are designed to work well together and are tested to make sure that they do. It is also possible to use the application blocks individually (except in cases where the blocks depend on each other, such as on the Configuration Application Block).

Application blocks help address the common problems that developers face from one project to the next. They have been designed to encapsulate the Microsoft recommended best practices for .NET applications. They can be added into .NET applications quickly and easily. For example, the Data Access Application Block provides access to the most frequently used features of ADO.NET in simple-to-use classes, boosting developer productivity. It also addresses scenarios not directly supported by the underlying class libraries. (Different applications have different requirements and you will not find that every application block is useful in every application that you build. Before using an application block, you should have a good understanding of your application requirements and of the scenarios that the application block is designed to address.)

(The above text is from Enterprise Library Documentation.)

The sample web solution uses the following application blocks:

- Caching Application Block. Caching frequently accessed data like the Student and Course list.

- Configuration Application Block. Configuration of all other application blocks.

- Cryptography Application Block. Encrypt/Decrypt password.

- Exception Handling Application Block. Publishing exceptions.

- Logging and Instrumentation Application Block. Log activities from the web service.

- Security Application Block. Used to provide authentication and authorization.

Security

Authentication and authorization are handled by Enterprise Library's Security Application Block. It's a feature-rich block which supports the following:

- Database-based authentication and authorization. The table structure is the same as that of .NET 2.0's membership provider, which makes upgrading easy.

- Active Directory based authentication.

- Authorization using Windows Authorization Manager.

It's a great block to use in your application. However, if you already have a custom database-based authentication and authorization implementation, you will have to do the following in order to replace your home-grown A&A code with the standard practices provided by Enterprise Library:

- If you have a user class, extract the password field from it. Keep the user name field.

- Map the user name field with the "Users" table created by the database script provided with the Security Application Block. Create a foreign key from your user table to EL's Users table on the User Name column.

- Change your authentication code to use the AuthenticationProvider available in EL.

- Replace your home-grown role-based authorization with EL's role-based authorization.

Here's the code for authentication:

public static string Authenticate( string userName, string password )
{
    IAuthenticationProvider authenticationProvider =
        AuthenticationFactory.GetAuthenticationProvider(
            AUTHENTICATION_PROVIDER);
    IIdentity identity;
    byte [] passwordBytes =
        System.Text.Encoding.Unicode.GetBytes( password );
    NamePasswordCredential credentials =
        new NamePasswordCredential(userName, passwordBytes);
    bool authenticated =
        authenticationProvider.Authenticate(credentials, out identity);

    if( authenticated )
    {
        ISecurityCacheProvider cache =
            SecurityCacheFactory.GetSecurityCacheProvider(
                CACHING_STORE_PROVIDER);
        IToken token = cache.SaveIdentity(identity);
        return token.Value;
    }
    else
    {
        return null;
    }
}

For authorization, you can check whether the user is a member of a particular role:

public static bool IsInRole(string userName, string roleName)
{
    IRolesProvider rolesProvider =
        RolesFactory.GetRolesProvider(ROLE_PROVIDER);
    IPrincipal principal =
        rolesProvider.GetRoles(new GenericIdentity(userName));

    if (principal != null)
    {
        bool isInRole = principal.IsInRole(roleName);
        return isInRole;
    }
    else
    {
        return false;
    }
}

But checking only a user's role membership is not sufficient. In complex applications, we need task-based authorization, which means checking whether the user has permission to perform a particular task. This can be done as follows:

public static bool IsAuthorized( string userName, string task )
{
    IRolesProvider roleProvider =
        RolesFactory.GetRolesProvider(ROLE_PROVIDER);
    IIdentity identity = new GenericIdentity(userName);
    IPrincipal principal = roleProvider.GetRoles(identity);

    IAuthorizationProvider ruleProvider =
        AuthorizationFactory.GetAuthorizationProvider(RULE_PROVIDER);
    bool authorized = ruleProvider.Authorize(principal, task);
    return authorized;
}
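To show what "authorized for a task" boils down to, here is a simplified, self-contained stand-in for a rule provider. The TaskRule and TaskAuthorizer classes and the task names are hypothetical; EL evaluates rule expressions defined in configuration, whereas this sketch only checks "listed identity OR member of a listed role". It uses the standard GenericPrincipal/GenericIdentity types from System.Security.Principal.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Principal;

// Hypothetical rule: a task is allowed for listed user names or listed roles.
public sealed class TaskRule
{
    public TaskRule(string[] identities, string[] roles)
    {
        Identities = new HashSet<string>(identities, StringComparer.OrdinalIgnoreCase);
        Roles = roles;
    }
    public HashSet<string> Identities { get; }
    public string[] Roles { get; }
}

public static class TaskAuthorizer
{
    // Task name -> rule. In the sample, rules live in the configuration file instead.
    private static readonly Dictionary<string, TaskRule> Rules =
        new Dictionary<string, TaskRule>(StringComparer.OrdinalIgnoreCase)
        {
            ["SyncStudent"] = new TaskRule(new[] { "admin" }, new[] { "Registrar" }),
            ["CalculateAssessment"] = new TaskRule(new string[0], new[] { "Accounts" }),
        };

    public static bool IsAuthorized(IPrincipal principal, string task)
    {
        // Deny by default: anonymous callers and unknown tasks are never authorized.
        if (principal == null || principal.Identity == null ||
            !Rules.TryGetValue(task, out TaskRule rule))
            return false;
        if (rule.Identities.Contains(principal.Identity.Name))
            return true;
        return rule.Roles.Any(role => principal.IsInRole(role));
    }
}
```

The deny-by-default branch matters: a misspelled task name should fail closed rather than open.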

The Security Console application provided with the source code allows you to create users and roles, and then assign users to roles. More on this later.

However, defining a rule is a bit different. I expected rules to be stored in the database so that they could be changed from code. Instead, they turned out to be static, defined in the application configuration file using the Enterprise Library Configuration application.

Sending Password Over the Wire

If you are not using HTTPS/SSL or Integrated Windows Authentication, you face the problem of sending the password over the wire. You cannot send the password as plain text, because anyone can eavesdrop and read it. So, you send the MD5/SHA hash of the password to the web service instead. This keeps the plain-text password off the wire, although a captured hash can still be replayed, just like the security token discussed below. The simplest way to send the credentials is in a SOAP header. Here's a class called CredentialSoapHeader which contains the user credentials:

public class CredentialSoapHeader : SoapHeader
{
    public string Username;
    public string PasswordHash;
    public string VersionNo;
    public string SecurityKey;
}

There are two ways you can pass authentication information to the server:

- Always send user name and password hash on every web service call.

- After the first successful authentication, the server generates a security token that you use for later calls. However, this is not secure, as anyone can capture the user name and security token using a network sniffer and then impersonate the user.

On the client side, as we have seen, a factory generates the web service proxy and prepares it with the credentials:

public static StudentService GetStudentService()
{
    StudentService service = new StudentService();
    service.Url = _Application.Settings.WebServiceUrl +
        "StudentService.asmx";

    SmartInstitute.Automation.SmartInstituteServices.StudentService.CredentialSoapHeader header =
        new SmartInstitute.Automation.SmartInstituteServices.StudentService.CredentialSoapHeader();
    header.Username = _Application.Settings.UserInfo.UserName;
    header.PasswordHash = _Application.Settings.UserInfo.UserPassword;
    header.VersionNo = _Application.Settings.VersionNo;

    SetProxy(service);
    // Every call made through this proxy carries the credential header.
    service.CredentialSoapHeaderValue = header;
    return service;
}

We do not send the password collected from the login box as plain text; instead, we send its MD5 hash:

UserInfo user = _Application.Settings.UserInfo;
user.UserName = this._UserName;
string passwordHash = HashStringMD5(this._Password);
user.UserPassword = passwordHash;

using (SecurityService service = ServiceProxyFactory.GetSecurityService())
{
    user.SecurityKey = service.AuthenticateUser();
    if (null != user.SecurityKey && user.SecurityKey.Length > 0)
    {
        this._IsSuccess = true;
    }
}
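HashStringMD5 is called above but not shown. A minimal sketch of such a helper follows. The text encoding (UTF-8) and the output format (lowercase hex) are my assumptions. Whatever you pick, the client and the Security Console must use exactly the same function, because the server only ever sees the resulting string.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class PasswordHashing
{
    // Hypothetical reconstruction: MD5 over the UTF-8 bytes, rendered as lowercase hex.
    public static string HashStringMD5(string input)
    {
        using (MD5 md5 = MD5.Create())
        {
            byte[] hash = md5.ComputeHash(Encoding.UTF8.GetBytes(input));
            var sb = new StringBuilder(hash.Length * 2);
            foreach (byte b in hash)
                sb.Append(b.ToString("x2")); // two lowercase hex digits per byte
            return sb.ToString();
        }
    }
}
```

For example, HashStringMD5("abc") yields the standard MD5 test vector 900150983cd24fb0d6963f7d28e17f72.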

Authentication & Authorization from Web Service

On the server side, mark every web method that should only be called by authenticated clients so that it requires the credential SOAP header:

[WebService(Namespace="http://oazabir.com/webservices/smartinstitute")]
public class StudentService : SecureWebService
{
    [WebMethod]
    [SoapHeader("Credentials")]
    public Student GetStudentDetails( int userID )
    {
        base.VerifyCredentials();
        return base.Facade.GetStudentDetail( userID );
    }

    [WebMethod]
    [SoapHeader("Credentials")]
    public StudentCollection GetAllStudents( )
    {
        base.VerifyCredentials();
        return base.Facade.GetAllStudents();
    }

    [WebMethod]
    [SoapHeader("Credentials")]
    public bool SyncStudent( Student student )
    {
        base.VerifyCredentials();
        return base.Facade.SyncStudent( student );
    }
}

First, the SoapHeader attribute specifies the header that must be present when the method is called. Then each method calls the VerifyCredentials method of the base class, which ensures that the credentials are authentic.

The base class called SecureWebService is a simple class which inherits from the .NET framework's WebService class. It provides common services required in all web services.

public class SecureWebService : WebService
{
    // This public field is populated from the SOAP header's values.
    public CredentialSoapHeader Credentials;

    protected Facade Facade = new Facade();

    protected string VerifyCredentials()
    {
        // Artificial two-second delay, presumably left in to demonstrate
        // the responsive client UI; remove it in production.
        System.Threading.Thread.Sleep(2000);

        if (this.Credentials == null
            || this.Credentials.Username == null
            || this.Credentials.PasswordHash == null)
        {
            throw new SoapException("No credential supplied",
                SoapException.ClientFaultCode, "Security");
        }

        if( this.Credentials.VersionNo != Configuration.Instance.VersionNo )
            throw new SoapException("The version you are using" +
                " is not compatible with the server.",
                SoapException.VersionMismatchFaultCode, "Security");

        return CheckCredential(this.Credentials);
    }

    private string CheckCredential( CredentialSoapHeader header )
    {
        // A previously issued security key takes precedence over name/hash.
        if( null != header.SecurityKey && header.SecurityKey.Length > 0 )
        {
            if( Facade.Login( header.SecurityKey ) )
                return header.SecurityKey;
            else
                throw new SoapException("Security key is not valid",
                    SoapException.ClientFaultCode, "Security" );
        }
        else
        {
            string key = Facade.Login( header.Username, header.PasswordHash );
            if( null == key )
                throw new SoapException("Invalid credential supplied",
                    SoapException.ClientFaultCode, "Security" );
            else
                return key;
        }
    }
}

Enforcing Update from the Server

One interesting trick you may have noticed in the above code is that we pass VersionNo inside the SOAP header. When you deploy Smart Clients without an auto-update feature that forces users to update the application before using it, you will find that people don't update even when you tell them to. As a result, they may run old code that corrupts data on the server and creates problems for others and themselves. Imagine you have fixed a delicate account calculation and asked everyone in Accounts to get the latest version. But someone forgets and continues to use the old version, which miscalculates the account statement. This becomes a maintenance nightmare for you.

The solution is to forcibly reject any client that is not up to date. On the client, store the version number in a configuration variable. On the server, store the version number you will allow in a configuration file. Every request carries the SOAP header containing VersionNo; the server compares the two numbers and rejects the request if they don't match. This prevents users from logging in with an unsupported version of the client, and even from performing any operation on the server if they are already logged in.

if( this.Credentials.VersionNo != Configuration.Instance.VersionNo )
    throw new SoapException("The version you are using" +
        " is not compatible with the server.",
        SoapException.VersionMismatchFaultCode, "Security");
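The check above is an exact string comparison. The sketch below contrasts that with a variation that is not in the sample: parsing both values with System.Version and accepting any client at or above a configured minimum, so the server setting only needs to change when a breaking client release ships. The VersionGate class is hypothetical.

```csharp
using System;

public static class VersionGate
{
    // Exact match: any difference from the server's configured version is rejected.
    public static bool IsExactMatch(string clientVersion, string serverVersion) =>
        string.Equals(clientVersion, serverVersion, StringComparison.Ordinal);

    // Variation: accept any client whose version is >= the configured minimum.
    public static bool IsAtLeast(string clientVersion, string minimumVersion)
    {
        if (!Version.TryParse(minimumVersion, out Version minimum))
            throw new ArgumentException("Server minimum version is malformed.", nameof(minimumVersion));
        // A missing or malformed client version is treated as too old.
        return Version.TryParse(clientVersion, out Version client) && client >= minimum;
    }
}
```

Either way, the server would throw the same SoapException when the check fails. Keep in mind that System.Version treats "1.2" and "1.2.0" as different values, so use the same number of components on both sides.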

Configuring Security Application Block

Before getting started, make sure you have added the Data Access Application Block to the configuration and mapped it to your database. This database will be used by all the providers below.

Step 1: Create an Authentication Provider. I am using the Database Provider. You can use other options, like the Active Directory Authentication Provider; see the EL documentation for details. After adding the Database Provider, select the database where the authentication information is stored. I am using the same SmartInstitute database for this.

Step 2: Find the SecurityDatabase.sql file in EL's source code. This is the SQL script which prepares the database. If you want to use the default "Security" database, you can run it as is. But if you want to use your own database, open the file in an editor and perform the following search and replace:

- Replace N'Security' with N'YourDatabaseName'

- Replace [Security] with [YourDatabaseName]

Don't run the file yet! As it stands, it will drop your database. First remove the DROP DATABASE command from the beginning of the file. The beginning should then look like this:

IF NOT EXISTS (SELECT name FROM master.dbo.sysdatabases
    WHERE name = N'SmartInstitute')
    CREATE DATABASE [SmartInstitute]
    COLLATE SQL_Latin1_General_CP1_CI_AS
GO

Step 3: Add a Database Roles Provider. Map it to the same database.

Step 4: Add a Database Profile Provider. Map it to the same database.

Step 5: Add a Rules Provider. This is a bit different. Right-click the provider name and create a new rule. Here's what the rule screen looks like:

Here you define the static expression of roles and identities that match a rule. If a user and the user's roles satisfy a rule's expression, the user is authorized for that rule. We have already seen how to authorize against a rule.

Step 6: Add a Security Cache provider. We cache credentials to avoid a database call for authentication every time a web service method is called.
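Conceptually, a security cache maps a random token to an authenticated identity, much like cache.SaveIdentity(identity) returning an IToken in the Authenticate method shown earlier. The following in-memory sketch only illustrates that idea. InMemorySecurityCache is hypothetical and not EL's API, and it stores just the user name plus an expiry time. The clock is injectable so that expiry can be tested.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical stand-in for a security cache: token -> (user name, expiry).
public sealed class InMemorySecurityCache
{
    private readonly ConcurrentDictionary<string, (string UserName, DateTime ExpiresUtc)> _entries =
        new ConcurrentDictionary<string, (string UserName, DateTime ExpiresUtc)>();
    private readonly TimeSpan _lifetime;
    private readonly Func<DateTime> _clock;

    public InMemorySecurityCache(TimeSpan lifetime, Func<DateTime> clock = null)
    {
        _lifetime = lifetime;
        _clock = clock ?? (() => DateTime.UtcNow);
    }

    // Call only after the user has been authenticated; returns a fresh random token.
    public string SaveIdentity(string userName)
    {
        string token = Guid.NewGuid().ToString("N");
        _entries[token] = (userName, _clock() + _lifetime);
        return token;
    }

    // Returns the user name for a live token, or null if unknown or expired.
    public string GetIdentity(string token)
    {
        if (token == null || !_entries.TryGetValue(token, out var entry))
            return null;
        if (_clock() >= entry.ExpiresUtc)
        {
            _entries.TryRemove(token, out _); // expired: force a fresh authentication
            return null;
        }
        return entry.UserName;
    }
}
```

A lookup here costs a dictionary access instead of a database round trip, which is the point of Step 6. The expiry bounds how long a sniffed token stays useful.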

Preparing the Security Console Application

The Security Console application is provided with EL's source code. You will have to modify its dataConfiguration.config to specify your own database name and then build it.

Authenticating using EL: The Surprise

Here's the code you need to use in order to authenticate using EL's AuthenticationProvider class:

IAuthenticationProvider authenticationProvider =
    AuthenticationFactory.GetAuthenticationProvider(AUTHENTICATION_PROVIDER);
IIdentity identity;
byte [] passwordBytes = System.Text.Encoding.Unicode.GetBytes( password );
NamePasswordCredential credentials =
    new NamePasswordCredential(userName, passwordBytes);
bool authenticated =
    authenticationProvider.Authenticate(credentials, out identity);

Here's the catch: The password must be in plain text.

But you don't have the password in plain text, because the client sends the MD5 hash of the password. Nor can you retrieve the stored password from the database through any AuthenticationProvider function, which would let you bypass this process and compare directly. So it seems you have no choice but to change EL's code and face a maintenance nightmare.

The easy solution is to pass the MD5 hash of the password to AuthenticationProvider as is, and to store the hash of the password hash in the database. In other words, the password is hashed twice before it is stored. Since the Security Console application creates the user accounts, you can easily change its code to hash the password hash and store the double-hashed bytes in the database. When you pass the MD5 hash to AuthenticationProvider, it hashes it again and compares the result with the bytes in the database. Those bytes are also the hash of the hash, so they match.

So, we modify the SecurityConsole code slightly, like this:

string passwordHash = HashStringMD5( tbxPassword1.Text );
byte [] unicodeBytes = Encoding.Unicode.GetBytes(passwordHash);
byte[] password = hashProvider.CreateHash(unicodeBytes);

if (editMode)
{
    userRoleMgr.ChangeUserPassword(tbxUser.Text, password);
}

This adds no extra load on the server, because the first hash is computed by the client and only the second by the server.
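The whole double-hash round trip can be simulated in a few lines. In this sketch SHA-256 stands in for whichever algorithm the EL hash provider is configured with, and the client-side encoding (UTF-8, lowercase hex) is an assumption. The Encoding.Unicode step mirrors the Security Console code above. The DoubleHash class is hypothetical.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class DoubleHash
{
    // Client: MD5 of the typed password as lowercase hex (format is an assumption).
    public static string ClientHash(string password)
    {
        using (MD5 md5 = MD5.Create())
        {
            byte[] hash = md5.ComputeHash(Encoding.UTF8.GetBytes(password));
            return BitConverter.ToString(hash).Replace("-", "").ToLowerInvariant();
        }
    }

    // Security Console: hash the client hash again; these bytes go into the database.
    public static byte[] StoredHash(string clientHash)
    {
        using (SHA256 sha = SHA256.Create())
        {
            return sha.ComputeHash(Encoding.Unicode.GetBytes(clientHash));
        }
    }

    // Server at login: re-hash what the client sent and compare with the stored bytes.
    public static bool Verify(string clientHash, byte[] stored)
    {
        byte[] candidate = StoredHash(clientHash);
        if (candidate.Length != stored.Length)
            return false;
        int diff = 0;
        for (int i = 0; i < candidate.Length; i++)
            diff |= candidate[i] ^ stored[i]; // constant-time comparison
        return diff == 0;
    }
}
```

Note that the plain password itself no longer verifies. Only the client hash does, which is exactly why every client must compute it the same way.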

Data Access

Data Access Application Block

Enterprise Library provides a convenient Data Access Application Block for all your database needs. Its Database class provides all the methods needed for database access. However, those who were already using the previous Patterns & Practices Data Access Application Block will run into a major problem, as there is no SqlHelper class anymore. This forces you to go through all your data access code and change it to use Database instead of SqlHelper. Worse, there is no SqlHelperParameterCache either.

This is a real example of why you should never use third-party code directly, but always through your own wrapper. No matter how standard it appears or how reliable its source, once you depend on it directly and it changes, you are doomed.

Because of this problem, I have created a SqlHelper class with the same method signatures as the one in the previous DAB:

// Keeps the old DAB method signatures but delegates to EL's Database class.
// Note: the connectionString arguments are ignored; EL always uses the
// default database configured in dataConfiguration.config.
public class SqlHelper
{
    public static int ExecuteNonQuery( IDbTransaction transaction,
        string spName, params object [] parameterValues )
    {
        Database db = DatabaseFactory.CreateDatabase();
        return db.ExecuteNonQuery( transaction, spName, parameterValues );
    }

    public static int ExecuteNonQuery( string connectionString,
        string spName, params object [] parameterValues )
    {
        Database db = DatabaseFactory.CreateDatabase();
        return db.ExecuteNonQuery( spName, parameterValues );
    }

    // The caller is responsible for closing the returned reader.
    public static SqlDataReader ExecuteReader( string connectionString,
        string spName, params object [] parameterValues )
    {
        Database db = DatabaseFactory.CreateDatabase();
        return db.ExecuteReader( spName, parameterValues ) as SqlDataReader;
    }

    public static SqlDataReader ExecuteReader(IDbTransaction transaction,
        string spName, params object [] parameterValues )
    {
        Database db = DatabaseFactory.CreateDatabase();
        return db.ExecuteReader( transaction, spName,
            parameterValues ) as SqlDataReader;
    }
}

With a class like this, your existing code works with EL unchanged. However, if you were also using SqlHelperParameterCache, you will have to write your own version of that too:

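public class SqlHelperParameterCache
{
    private static readonly Hashtable _ParamCache = new Hashtable();

    // connectionString is kept for signature compatibility only; the default
    // EL database is used. The returned collection is shared by all callers.
    public static IDataParameterCollection GetSpParameterSet( string
        connectionString, string storedProcName )
    {
        // Hashtable allows only one writer at a time, and web service requests
        // run concurrently, so cache population is serialized.
        lock( _ParamCache.SyncRoot )
        {
            if( !_ParamCache.ContainsKey( storedProcName ) )
            {
                Database db = DatabaseFactory.CreateDatabase();
                SqlConnection connection = db.GetConnection() as SqlConnection;
                try
                {
                    if( connection.State != ConnectionState.Open )
                        connection.Open();
                    SqlCommand cmd = new SqlCommand( storedProcName, connection );
                    cmd.CommandType = CommandType.StoredProcedure;
                    SqlCommandBuilder.DeriveParameters( cmd );
                    _ParamCache.Add( storedProcName, cmd.Parameters );
                }
                finally
                {
                    connection.Close(); // close even if DeriveParameters throws
                }
            }
            return _ParamCache[ storedProcName ] as IDataParameterCollection;
        }
    }
}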
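One caveat with caching cmd.Parameters directly: an ADO.NET SqlParameter can belong to only one SqlParameterCollection at a time, so the cached objects cannot simply be attached to a new command. The following generic sketch shows the "derive once, hand out copies" pattern. ParameterCache is hypothetical; the derive delegate stands in for the SqlCommandBuilder.DeriveParameters call, and SqlParameter implements ICloneable, so it satisfies the constraint.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical cache: derive a stored procedure's parameters once, return copies.
public sealed class ParameterCache<TParam> where TParam : ICloneable
{
    private readonly ConcurrentDictionary<string, Lazy<TParam[]>> _cache =
        new ConcurrentDictionary<string, Lazy<TParam[]>>(StringComparer.OrdinalIgnoreCase);
    private readonly Func<string, TParam[]> _derive;

    public ParameterCache(Func<string, TParam[]> derive) => _derive = derive;

    public TParam[] GetParameters(string storedProcName)
    {
        // Lazy<T> makes the expensive derivation run once, even under concurrent callers.
        TParam[] template = _cache.GetOrAdd(storedProcName,
            name => new Lazy<TParam[]>(() => _derive(name))).Value;

        // Each caller gets its own copies, safe to attach to a new command.
        var copy = new TParam[template.Length];
        for (int i = 0; i < template.Length; i++)
            copy[i] = (TParam)template[i].Clone();
        return copy;
    }
}
```

With SQL Server, the derive delegate would open a connection, call SqlCommandBuilder.DeriveParameters, and return the command's parameters as an array.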

Concurrency

Offline work inevitably leads to concurrency problems. So, you need to make all the tables concurrency-aware and handle concurrency either in code or in the stored procedures.

This is how I handle concurrency:

- Add a Date field called "ChangeStamp" to all the tables.

- When objects are retrieved, the ChangeStamp is carried with the object.

- When an object is sent to the database for update, first compare the ChangeStamp of the object with the ChangeStamp of the row. If they match, there is no problem.

- If the Date values do not match, someone has modified the row in the meantime, so abort the operation.

In the stored procedure, the update looks like this:

UPDATE dbo.[Assessment]
SET
    [ActivityFee] = @ActivityFee,
    [AdmissionFee] = @AdmissionFee,
    ...
WHERE
    [ID] = @ID
    AND [ChangeStamp] = @ChangeStamp

Here's how the code handles concurrency:

reader = SqlHelper.ExecuteReader(connectionString, "prc_Assessment_Update",
    entity.ID, entity.ActivityFee, ... );

if (reader.RecordsAffected > 0)
{
    RefreshEntity(reader, entity);
    result = reader.RecordsAffected;
}
else
{
    reader.Close();
    DBConcurrencyException conflict =
        new DBConcurrencyException("Concurrency exception");
    conflict.ModifiedRecord = entity;

    AssessmentCollection dsrecord;
    if (transactionManager != null)
        dsrecord = AssessmentRepository.Current.GetByID(
            this.transactionManager, entity.ID);
    else
        dsrecord = AssessmentRepository.Current.GetByID(connectionString,
            entity.ID);

    if (dsrecord.Count > 0)
        conflict.DatasourceRecord = dsrecord[0];
    throw conflict;
}
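The same check-and-set logic can be simulated in memory, which makes it easy to see why the second of two offline edits fails. In this sketch, AssessmentRow and InMemoryAssessmentTable are hypothetical and model only one fee column. I also assume the stored procedure writes a new ChangeStamp on success, since that part is elided in the listing above.

```csharp
using System;
using System.Collections.Generic;
using System.Data;

// Hypothetical row with a single fee column plus the concurrency stamp.
public sealed class AssessmentRow
{
    public int ID { get; set; }
    public decimal ActivityFee { get; set; }
    public DateTime ChangeStamp { get; set; }

    public AssessmentRow Copy() =>
        new AssessmentRow { ID = ID, ActivityFee = ActivityFee, ChangeStamp = ChangeStamp };
}

// Hypothetical stand-in for the Assessment table plus prc_Assessment_Update.
public sealed class InMemoryAssessmentTable
{
    private readonly Dictionary<int, AssessmentRow> _rows = new Dictionary<int, AssessmentRow>();
    private readonly Func<DateTime> _clock;

    public InMemoryAssessmentTable(Func<DateTime> clock) => _clock = clock;

    public void Insert(AssessmentRow row) => _rows[row.ID] = row.Copy();

    // Callers get a detached copy, like an object held by an offline client.
    public AssessmentRow GetByID(int id) => _rows[id].Copy();

    // Same idea as "WHERE [ID] = @ID AND [ChangeStamp] = @ChangeStamp": returns rows affected.
    private int UpdateIfUnchanged(AssessmentRow entity)
    {
        if (!_rows.TryGetValue(entity.ID, out AssessmentRow current) ||
            current.ChangeStamp != entity.ChangeStamp)
            return 0;
        current.ActivityFee = entity.ActivityFee;
        current.ChangeStamp = _clock(); // assumption: a successful update sets a new stamp
        return 1;
    }

    public void Update(AssessmentRow entity)
    {
        if (UpdateIfUnchanged(entity) == 0)
            throw new DBConcurrencyException("Concurrency exception");
        entity.ChangeStamp = _rows[entity.ID].ChangeStamp; // refresh, like RefreshEntity
    }
}
```

The scheme only works if every successful update produces a stamp different from the old one. A low-resolution date can make two quick updates look identical, which is one reason SQL Server's rowversion type is often preferred for this.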

Caching

The Caching Application Block is the only block with which I haven't run into any issues. However, a cache expiration callback feature seems to be missing.

Caching in a Server Cluster

When you have a cluster of servers, you will run into caching problems. Consider this scenario:

- You are caching a list of objects in a web method. For example, I cache all the students once GetAllStudents is called.

- Now, the client modifies one student, and I have to clear the Student cache so that the list is loaded from the database and cached again.

- The web server in the cluster which receives the "SaveStudent" command clears up its own cache. But it does not clear the cache of other web servers.

- The next call from the client goes to another web server which is still holding an old copy of the cached objects. So, the client sees old information of the students even after making changes and saving them.

The most straightforward solution to this problem is a centralized cache store: one dedicated computer handles all the cached objects that are costly to load from the database. But this single server might become your single point of failure and a performance bottleneck under heavy load.
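The scenario above can be reproduced with a small simulation. Two WebServer objects share one "database". When each has its own cache store, clearing the cache on one server leaves the other serving stale data. When both use the same store, standing in for the central cache machine, the invalidation is seen by everyone. ICacheStore, DictionaryCacheStore and WebServer are all hypothetical names for this sketch.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical abstraction over "where cached objects live".
public interface ICacheStore
{
    bool TryGet(string key, out object value);
    void Set(string key, object value);
    void Remove(string key);
}

// One instance per server models a local cache; one shared instance models a central cache.
public sealed class DictionaryCacheStore : ICacheStore
{
    private readonly ConcurrentDictionary<string, object> _items = new ConcurrentDictionary<string, object>();
    public bool TryGet(string key, out object value) => _items.TryGetValue(key, out value);
    public void Set(string key, object value) => _items[key] = value;
    public void Remove(string key) => _items.TryRemove(key, out _);
}

// Simulates one web server in the cluster.
public sealed class WebServer
{
    private readonly ICacheStore _cache;
    private readonly Dictionary<int, string> _database; // shared by all servers

    public WebServer(ICacheStore cache, Dictionary<int, string> database)
    {
        _cache = cache;
        _database = database;
    }

    public List<string> GetAllStudents()
    {
        if (_cache.TryGet("Students", out object cached))
            return (List<string>)cached;
        var students = new List<string>(_database.Values); // "expensive" load
        _cache.Set("Students", students);
        return students;
    }

    public void SaveStudent(int id, string name)
    {
        _database[id] = name;
        _cache.Remove("Students"); // clears only the store this server uses
    }
}
```

In the per-server configuration, a save handled by server A does nothing to server B's cached list. In a real cluster the shared store would of course live out of process. The sketch does not address the availability concern.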

Performance Monitoring

EL has rich instrumentation features. It uses both WMI Events and Performance Counters throughout the application blocks giving you the opportunity to monitor performance of database calls, authentication & authorization success/failure, measure cache hit rates etc. You can utilize these performance counters in order to detect bottlenecks in your server application. You can use the Windows Performance Monitor to see how EL App Blocks are doing. Here's a screenshot which shows different performance counters from EL App Blocks:

Code Generation using Code Smith Template

Code Smith is a tool that can dramatically reduce your development time. Every day we write the same kind of code for database calls, object population, and populating the UI from objects and vice versa. All of this can be generated automatically with a single mouse click using Code Smith.

The entire server-side code, except the Facade, is generated using the wonderful .NET data tiers generator. However, it generates code that uses the old Patterns & Practices Application Blocks. It was pretty difficult to modify these templates to generate EL-specific code. In the source code provided with this article, you will find the modified templates, which generate EL-specific code.

ClickOnce Deployment

Let's take a look at my favorite feature of .NET 2.0: ClickOnce deployment. This is truly a revolutionary step for deploying Desktop/Smart Client applications. Deployment and versioning have never been this easy. I remember the days when we wrote our own hand-coded custom installers and service releases in C++. Things did not change much in the VB era either, which brought us DLL hell. With .NET there's no DLL hell, but auto update is still not available in the .NET Framework, and you have to use custom libraries like the Updater Application Block to provide automatic updates. With ClickOnce, all of this ends. This is the ultimate deployment you can have.

While configuring ClickOnce, you need to make changes in the following section:

This dialog box defines the text you see on the published web page. After publishing your application by right-clicking the EXE project and selecting "Publish", you get a page like this:

After deploying, you can freely make changes to the application, add new features and fix bugs. When you are done, right-click the EXE project and click Publish again. All users get the updated version as soon as they launch the application; ClickOnce takes care of downloading and installing the latest version.

And of course, for those reluctant users who never close the application and do not get the updated code, you can stop them whenever you want by implementing the VersionNo concept I have introduced before.

Installing & Running the Sample Application

Configuring the Server

The server is developed using .NET 1.1, so you will need Visual Studio 2003.

The "Server" folder contains only the code; the database is in the "Database" folder. Follow these steps:

- Download Enterprise Library June 2005 and install it. After installing, go to Start->Programs->Enterprise Library June 2005->Install Services.

- Restore the database from the "Database" folder. Use the database name "SmartInstitute". If you use a different name, you will have to change dataconfiguration.config and enter the connection's user name, password and database name.

- Create a virtual directory using IIS Manager named "SmartInstituteServices" which maps to "Server\SmartInstituteServices".

- Open "Server\SmartInstitute.sln" and build it.

- Modify "Server\SmartInstituteServices\dataconfiguration.config" and add proper database credentials. The default configuration assumes a trusted connection to the SmartInstitute database. Add the "ASPNET" (Windows XP) or "Network Service" (Windows Server 2003) account as a database owner of the SmartInstitute database.

You can run the website to see whether everything is working. However, none of the web service methods will run from the browser, because no CredentialSoapHeader is supplied.

The client is developed using .NET 2.0, so you will need Visual Studio 2005.

- Open the "client\SmartInstituteClient.sln"

- Go to SmartInstitute.App -> Properties

- Go to Signing tab

- Click "Create Test Certificate"

The default configuration assumes the web service is at "localhost". To change it, go to the SmartInstitute.Automation project, open "Properties\Settings.settings" and modify the "WebServiceURL" property.

Whenever you add or update a web service reference, Visual Studio generates all the related classes inside the web proxy class (Reference.cs). If you open the proxy code, you will see that all the related classes, like Student, Course and CourseSection, are generated from what VS discovers in the WSDL. This is a big problem, because all the domain entity objects are already defined in SmartInstitute.dll, which is shared between the server and the client. I could not find any way to tell VS to use the SmartInstitute namespace instead of generating duplicate classes in the web proxy class. As a result, whenever you update the reference, the project no longer builds.

How to fix this problem:

- Open the Reference.cs file inside the Web Reference folder. You will not normally see it in VS; turn on "Show All Files" by clicking the button at the top of the Solution Explorer.

- Scroll down until you find the CredentialSoapHeader class.

- After this class, you will see all the generated entity classes. Remove all these classes and the enumeration definition.

- Remember not to delete the delegates and events you see in the proxy. Those are required.

I haven't found a way to do this automatically yet. Either I have missed a VS feature that already does this, or I need to write a macro that does it automatically.

Conclusion

We have covered a .NET 2.0 Smart Client from design and development through deployment and maintenance. You have also seen how you can implement an object model similar to that of Microsoft Office applications in your own projects. Such an object model naturally enforces the loosely coupled architecture as designed, which provides ultimate extensibility. We have also seen how to develop a Service Oriented Architecture using XML Web Services, and how Enterprise Library dramatically reduces development cost and time by providing a rich collection of reusable components. The sample code gives you ample examples for dealing with real-life Smart Client development hurdles, and the reusable components will greatly reduce the time needed for similar projects.