Introduction

In Part I of this tutorial we built a Web API that handles image labeling and translation into different languages. Now we will build an Android app that consumes that API. The app will have the following features:

- select destination language

- select image from gallery or take new photo using camera

- option to toggle speech output on/off

Using the code

The complete code base is available in the code section of this article. For simplicity, I will highlight only the important parts here.

build.gradle

dependencies {

compile fileTree(dir: 'libs', include: ['*.jar'])

compile 'com.google.code.gson:gson:2.6.1'

compile 'com.android.support:support-v13:23.4.0'

compile 'com.android.support:appcompat-v7:23.4.0'

compile 'com.android.support:design:23.4.0'

compile 'com.squareup.retrofit2:retrofit:2.0.0'

compile 'com.squareup.retrofit2:converter-gson:2.0.0'

compile 'com.squareup.okhttp3:okhttp:3.4.1'

compile 'com.android.support.constraint:constraint-layout:1.0.0-alpha4'

testCompile 'junit:junit:4.12'

compile 'com.pixplicity.easyprefs:library:1.8.1@aar'

compile 'com.jakewharton:butterknife:8.4.0'

annotationProcessor 'com.jakewharton:butterknife-compiler:8.4.0'

}

We will use a few Java libraries that make Android development a lot easier:

- easyprefs - easy access to SharedPreferences

- butterknife - makes declaring and binding Views a lot easier

- retrofit - lets us work with REST APIs quickly and easily

activity_main.xml - the Spinner view uses a custom item layout defined in spinner_item.xml. The Spinner declaration comes first, followed by the contents of spinner_item.xml.

<Spinner

android:id="@+id/spinner"

android:layout_width="100dp"

android:layout_height="match_parent"

android:layout_marginBottom="16dp"

android:layout_weight="0.91"

android:gravity="bottom|right" />

<?xml version="1.0" encoding="utf-8"?>

<TextView

xmlns:android="http://schemas.android.com/apk/res/android"

android:layout_width="wrap_content"

android:layout_height="30dp"

android:textColor="@android:color/darker_gray"

android:textSize="24dp"

/>

ApiInterface.java - this is the interface for our REST API calls. Put the URL of the API we built in the previous tutorial into the ENDPOINT variable. As you can see, only two parameters are sent with the POST request:

- Image file

- Language Code

package thingtranslator2.jalle.com.thingtranslator2.Rest;

public interface ApiInterface {

String ENDPOINT = "YOUR-API-URL/api/";

@Multipart

@POST("upload")

Call<Translation> upload(@Part("image\"; filename=\"pic.jpg\" ") RequestBody file, @Part("FirstName") RequestBody langCode1);

final OkHttpClient okHttpClient = new OkHttpClient.Builder()

.readTimeout(60, TimeUnit.SECONDS)

.connectTimeout(60, TimeUnit.SECONDS)

.build();

Retrofit retrofit = new Retrofit.Builder()

.baseUrl(ENDPOINT)

.client(okHttpClient)

.addConverterFactory(GsonConverterFactory.create())

.build();

}

Translation class

package thingtranslator2.jalle.com.thingtranslator2.Rest;

public class Translation {

public String Original;

public String Translation;

public String Error;

}
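Gson's default behavior is to match JSON property names against field names, so this class assumes the Web API returns properties named exactly Original, Translation and Error. The sketch below shows one way the app could decide what to display from such a result. The displayText helper and the idea that the service fills Error on failure are assumptions made for illustration; they are not part of the original code.

```java
class TranslationResultSketch {

    /** Copy of the article's Translation class so the sketch compiles on its own. */
    static class Translation {
        public String Original;
        public String Translation;
        public String Error;
    }

    /**
     * Hypothetical helper: picks the text to show to the user.
     * Assumes the service sets Error (and leaves Translation empty) when labeling fails.
     */
    static String displayText(Translation result) {
        if (result == null) {
            return "No response from server";
        }
        if (result.Error != null && !result.Error.isEmpty()) {
            return "Error: " + result.Error;
        }
        return result.Translation;
    }
}
```

A helper like this keeps the null and error checks out of the Retrofit callback, which then only needs to call setText with the returned string.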

Tools - ImagePicker: this class is used for getting an image from the gallery or taking a new photo with the camera. Tools - MarshMallowPermission: this class is used for requesting camera and storage permissions at runtime on Android 6.

AndroidManifest

<?xml version="1.0" encoding="utf-8"?>

<manifest xmlns:android="http://schemas.android.com/apk/res/android"

package="thingtranslator2.jalle.com.thingtranslator2">

<uses-feature android:name="android.hardware.camera" />

<uses-permission android:name="android.permission.CAMERA" />

<uses-permission android:name="android.permission.INTERNET" />

<uses-feature android:name="android.hardware.camera.autofocus" />

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />

<application

android:allowBackup="true"

android:icon="@mipmap/ic_launcher"

android:label="@string/app_name"

android:supportsRtl="true"

android:theme="@style/AppTheme">

<activity android:name=".MainActivity" >

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

</intent-filter>

</activity>

</application>

</manifest>

MainActivity.java

public class MainActivity extends AppCompatActivity implements AdapterView.OnItemSelectedListener {

public

@BindView(R.id.txtTranslation)

TextView txtTranslation;

public

@BindView(R.id.spinner)

Spinner spinner;

public

@BindView(R.id.imgPhoto)

ImageButton imgPhoto;

public

@BindView(R.id.imgSpeaker)

ImageButton btnSpeaker;

public Boolean speakerOn, firstRun;

public TextToSpeech tts;

public List<String> languages = new ArrayList<String>();

public ArrayList<Language> languageList = new ArrayList<Language>();

public ArrayAdapter<Language> spinnerArrayAdapter;

public Language selectedLanguage;

public static final int PICK_IMAGE_ID = 234;

thingtranslator2.jalle.com.thingtranslator2.MarshMallowPermission marshMallowPermission = new thingtranslator2.jalle.com.thingtranslator2.MarshMallowPermission(this);

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

getWindow().setFlags(WindowManager.LayoutParams.FLAG_FULLSCREEN, WindowManager.LayoutParams.FLAG_FULLSCREEN);

ButterKnife.bind(this);

new Prefs.Builder()

.setContext(this)

.setMode(ContextWrapper.MODE_PRIVATE)

.setPrefsName(getPackageName())

.setUseDefaultSharedPreference(true)

.build();

firstRun = Prefs.getBoolean("firstRun", true);

spinner.setOnItemSelectedListener(this);

tts = new TextToSpeech(getApplicationContext(), new TextToSpeech.OnInitListener() {

@Override

public void onInit(int status) {

if (status != TextToSpeech.ERROR) {

fillSpinner();

}

}

});

if (firstRun) {

Prefs.putBoolean("firstRun", false);

Prefs.putBoolean("speakerOn", true);

Prefs.putString("langCode", "bs");

}

setSpeaker();

}

private void setSpeaker() {

int id = Prefs.getBoolean("speakerOn", false) ? R.drawable.ic_volume : R.drawable.ic_volume_off;

btnSpeaker.setImageBitmap(BitmapFactory.decodeResource(getResources(), id));

}

public void ToogleSpeaker(View v) {

Prefs.putBoolean("speakerOn", !Prefs.getBoolean("speakerOn", false));

setSpeaker();

Toast.makeText(getApplicationContext(), "Speech " + (Prefs.getBoolean("speakerOn", true) ? "On" : "Off"), Toast.LENGTH_SHORT).show();

}

private void fillSpinner() {

Iterator itr = tts.getAvailableLanguages().iterator();

while (itr.hasNext()) {

Locale item = (Locale) itr.next();

languageList.add(new Language(item.getDisplayName(), item.getLanguage()));

}

Collections.sort(languageList, new Comparator<Language>() {

@Override

public int compare(Language o1, Language o2) {

return o1.getlangCode().compareTo(o2.getlangCode());

}

});

spinnerArrayAdapter = new ArrayAdapter<Language>(this, R.layout.spinner_item, languageList);

spinnerArrayAdapter.setDropDownViewResource(android.R.layout.simple_spinner_dropdown_item);

spinner.setAdapter(spinnerArrayAdapter);

String def = Prefs.getString("langCode", "bs");

for (Language lang : languageList) {

if (lang.getlangCode().equals(def)) {

spinner.setSelection(languageList.indexOf(lang));

}

}

}


public void getImage(View v) {

if (!marshMallowPermission.checkPermissionForCamera()) {

marshMallowPermission.requestPermissionForCamera();

} else {

if (!marshMallowPermission.checkPermissionForExternalStorage()) {

marshMallowPermission.requestPermissionForExternalStorage();

} else {

Intent chooseImageIntent = thingtranslator2.jalle.com.thingtranslator2.ImagePicker.getPickImageIntent(getApplicationContext());

startActivityForResult(chooseImageIntent, PICK_IMAGE_ID);

}

}

}

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

if (data == null) return;

switch (requestCode) {

case PICK_IMAGE_ID:

Bitmap bitmap = thingtranslator2.jalle.com.thingtranslator2.ImagePicker.getImageFromResult(this, resultCode, data);

imgPhoto.setImageBitmap(null);

imgPhoto.setBackground(null);

imgPhoto.setImageBitmap(bitmap);

imgPhoto.invalidate();

imgPhoto.postInvalidate();

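try {

// Upload only when the bitmap was written successfully; otherwise there is no file to send.

File file = savebitmap(bitmap, "pic.jpeg");

uploadImage(file);

} catch (IOException e) {

e.printStackTrace();

Toast.makeText(getApplicationContext(), "Error: " + e.getMessage(), Toast.LENGTH_LONG).show();

}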

break;

default:

super.onActivityResult(requestCode, resultCode, data);

break;

}

}

private void uploadImage(File file) {

RequestBody body = RequestBody.create(MediaType.parse("image/*"), file);

RequestBody langCode1 = RequestBody.create(MediaType.parse("text/plain"), selectedLanguage.langCode);

final ProgressDialog progress = new ProgressDialog(this);

progress.setMessage("Processing image...");

progress.setProgressStyle(ProgressDialog.STYLE_SPINNER);

progress.setIndeterminate(true);

progress.show();

ApiInterface mApiService = ApiInterface.retrofit.create(ApiInterface.class);

Call<Translation> mService = mApiService.upload(body, langCode1);

mService.enqueue(new Callback<Translation>() {

@Override

public void onResponse(Call<Translation> call, Response<Translation> response) {

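progress.dismiss();

Translation result = response.body();

if (result == null) { // body() is null when the server answers with a non-2xx status

Toast.makeText(getApplicationContext(), "Error: HTTP " + response.code(), Toast.LENGTH_LONG).show();

return;

}

txtTranslation.setText(result.Translation);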

txtTranslation.invalidate();

if (Prefs.getBoolean("speakerOn", true)) {

tts.speak(result.Translation, TextToSpeech.QUEUE_FLUSH, null);

}

}

@Override

public void onFailure(Call<Translation> call, Throwable t) {

call.cancel();

progress.dismiss();

Toast.makeText(getApplicationContext(), "Error: " + t.getMessage(), Toast.LENGTH_LONG).show();

}

});

}

public static File savebitmap(Bitmap bmp, String fName) throws IOException {

ByteArrayOutputStream bytes = new ByteArrayOutputStream();

bmp.compress(Bitmap.CompressFormat.JPEG, 60, bytes);

File f = new File(Environment.getExternalStorageDirectory()

+ File.separator + fName);

f.createNewFile();

FileOutputStream fo = new FileOutputStream(f);

fo.write(bytes.toByteArray());

fo.close();

return f;

}

@Override

public void onItemSelected(AdapterView<?> parent, View view, int position, long id) {

selectedLanguage = (Language) spinner.getSelectedItem();

tts.setLanguage(Locale.forLanguageTag(selectedLanguage.langCode));

Prefs.putString("langCode", selectedLanguage.langCode);

}

@Override

public void onNothingSelected(AdapterView<?> parent) {

}

}
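MainActivity relies on a Language class that is not listed above. The following is a minimal sketch of what it might look like, together with the sorting and default-selection logic from fillSpinner() pulled out into plain Java so it can be tried without a device. The field names, the toString() override and the two helper methods are assumptions inferred from how MainActivity uses the class, not the original source.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;
import java.util.Locale;

class LanguageListSketch {

    /** Hypothetical stand-in for the article's Language class. */
    static class Language {
        public final String displayName;
        public final String langCode;

        Language(String displayName, String langCode) {
            this.displayName = displayName;
            this.langCode = langCode;
        }

        public String getlangCode() {
            return langCode;
        }

        // ArrayAdapter renders each item via toString(), so return the label to show.
        @Override
        public String toString() {
            return displayName;
        }
    }

    /** Converts locales to Language items sorted by language code, mirroring fillSpinner(). */
    static List<Language> toSortedLanguages(Iterable<Locale> locales) {
        List<Language> result = new ArrayList<>();
        for (Locale locale : locales) {
            // English display names keep the output independent of the device locale.
            result.add(new Language(locale.getDisplayName(Locale.ENGLISH), locale.getLanguage()));
        }
        Collections.sort(result, new Comparator<Language>() {
            @Override
            public int compare(Language o1, Language o2) {
                return o1.getlangCode().compareTo(o2.getlangCode());
            }
        });
        return result;
    }

    /** Returns the list position of the given language code, or 0 when it is not present. */
    static int indexOfCode(List<Language> languages, String code) {
        for (int i = 0; i < languages.size(); i++) {
            if (languages.get(i).getlangCode().equals(code)) {
                return i;
            }
        }
        return 0;
    }
}
```

In the activity, the result of indexOfCode could be passed straight to spinner.setSelection, with the saved "langCode" preference as the code to look up.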

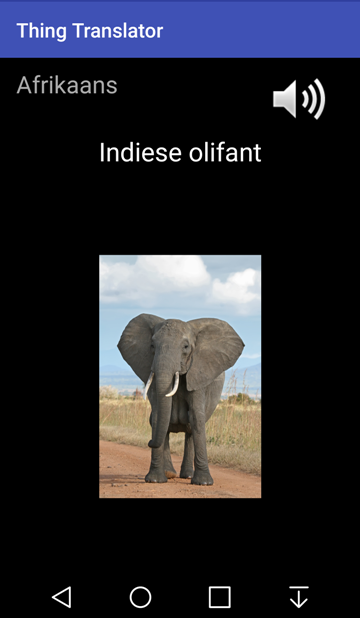

The end result of this tutorial is an application that looks like the one shown above. Remember that your Web API service needs to be up and running for all of this to work.

Points of Interest

There are many ways to improve and extend this application. One idea is to do the image labeling during the live camera preview: with the preview window open, we could invoke the API every 5-10 seconds and get live labels for whatever the camera sees.
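The periodic invocation described here could be sketched with a ScheduledExecutorService from the standard library. This is only an outline under assumptions: the LabelPollerSketch class and the labelCurrentFrame callback are hypothetical names, and grabbing a preview frame and uploading it are left to the caller.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

class LabelPollerSketch {

    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();
    private ScheduledFuture<?> task;

    /**
     * Runs labelCurrentFrame repeatedly. The delay is measured from the end of one
     * run to the start of the next, so slow uploads never overlap each other.
     */
    synchronized void start(final Runnable labelCurrentFrame, long delay, TimeUnit unit) {
        if (task != null) {
            return; // already polling
        }
        Runnable safe = new Runnable() {
            @Override
            public void run() {
                try {
                    labelCurrentFrame.run();
                } catch (RuntimeException e) {
                    // An uncaught exception would cancel all future runs, so swallow it here.
                    e.printStackTrace();
                }
            }
        };
        task = scheduler.scheduleWithFixedDelay(safe, 0, delay, unit);
    }

    /** Stops polling for good; create a new instance to poll again. */
    synchronized void stop() {
        if (task != null) {
            task.cancel(false);
            task = null;
        }
        scheduler.shutdown();
    }
}
```

With the interval from the text, a call such as poller.start(uploadFrame, 5, TimeUnit.SECONDS) would start labeling, and poller.stop() would belong in the activity's onPause so that no requests are made while the preview is hidden.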

One drawback is that this would consume a lot of bandwidth. Also remember that the Google Cloud Vision API and most other such APIs are not free: you pay for usage once your requests exceed the allowed quota.
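One way to avoid running past a quota by accident is a small client-side counter that refuses further requests once a budget is used up. The sketch below is an assumption-laden illustration: the RequestBudgetSketch class is hypothetical, and the limit passed to it is whatever number you choose, not a real quota of any provider.

```java
class RequestBudgetSketch {

    private final int maxRequests;
    private int used;

    /** maxRequests is an illustrative, self-imposed limit. */
    RequestBudgetSketch(int maxRequests) {
        if (maxRequests < 0) {
            throw new IllegalArgumentException("maxRequests must not be negative");
        }
        this.maxRequests = maxRequests;
    }

    /** Counts one request and returns true if budget remains; returns false otherwise. */
    synchronized boolean tryAcquire() {
        if (used >= maxRequests) {
            return false;
        }
        used++;
        return true;
    }

    /** Number of requests still allowed before the budget is exhausted. */
    synchronized int remaining() {
        return maxRequests - used;
    }

    /** Starts a new budget period, for example at the beginning of a new month. */
    synchronized void reset() {
        used = 0;
    }
}
```

The app would call tryAcquire() before each upload and skip the request, or show a message, when it returns false.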

Happy coding!