Introduction

One of the projects I am involved in provides facility to query the Google search engine and uses the links returned by it. We used Google's API and it was fine until some August day when it ceased working with non-English queries. It merely returned irrelevant links. Hence I was forced to write my own Google parser.

Notes about the search engine parser

The Google (and other) search engine parsers are based on the HTML parser that was written by me long ago. I am not providing the source of it but I am going to explain the building blocks of the parser.

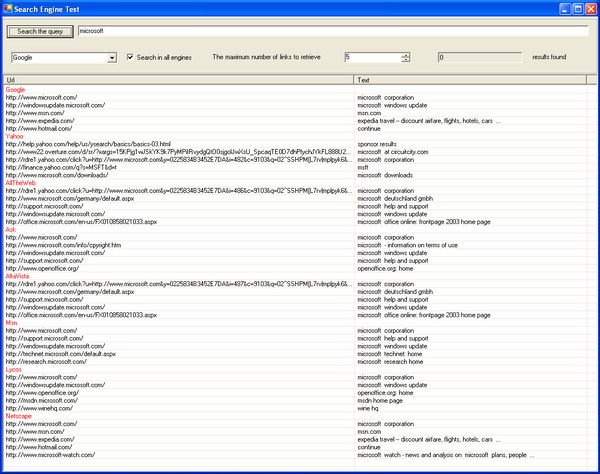

In the demo project you can find seven additional search engine parsers (MSN, Netscape, AllTheWeb, AltaVista, Yahoo!, Ask, Lycos).

HTML parser basics

My HTML parser is a regular parser which scans for HTML tokens - tags. It's all I need when I want to parse a regular HTML page.

But it is not enough when it comes to the parsing of a web page with results from the Google search engine, because the page contains many things that I won't need except for the actual links of the search.

I need somehow to tell the parser to find the important structures in the page. For this I define an XML file with definitions of the tags that I want to extract and all that lies in these tags.

Here is an example of the XML file for the Google web page:

<structures>

<structure name="TABLE" startTag="table" endTag="/table"/>

<structure name="PARAGRAPH" startTag="p" endTag="/p"/>

</structures>

Why do I need <table> and <p> tags for the Google web page? Prior to specializing the HTML Parser for the Google search engine I just looked at the source of a random page from Google. I found out that the links that match the query are placed between <p> tags and the number of total results found is somewhere in the <table> tag.

I could do more than that. The structure of the web page returned by Google (as well as other templatized web pages) is (almost?) the same. So I would know the exact position of the <table> tag where the number of the results matched is situated and directly retrieve it but it ties me to the specific template structure and I was not sure that Google returns the same template web page every time.

Search Engine Parser

There is the SearchEngineParser class which inherits the HtmlParser class and is the base class for all flavours of search engine parsers.

It defines some abstract methods which must be implemented by a specific search engine parser.

Google Search Engine Parser

One of the most important methods that is overridden is GetLinks(HtmlStructure,AddressLinkCollection).

HtmlStructure is defined in a class HtmlParser. It holds part of the web page structure defined by a specific tag. The structure can hold nested structures, the text found in that structure, and address links if any as well.

The idea is to iterate through structures and extract the data. All that we need is to get the address links out of the structure.

The HtmlAnchor class holds the address link:

protected override void GetLinks(HtmlStructure structure,

AddressLinkCollection linkCollection)

{

if (structure == null) return;

if (structure.TagName == "PARAGRAPH")

{

if (structure.Anchors != null && structure.Anchors.Count != 0)

{

IList anchors = structure.Anchors;

foreach(HtmlAnchor anchor in anchors)

{

if (anchor.Text.IndexOf("cached") >= 0) continue;

if (anchor.Text.IndexOf("similar") >= 0) continue;

if (anchor.Text.IndexOf("view as") >= 0) continue;

if (anchor.Href.ToString().IndexOf("google") >= 0 ) continue;

AddressLink link = new AddressLink(anchor.Href.ToString(),

anchor.Text);

linkCollection.Add(link);

}

}

}

IList structList = structure.Structure;

foreach(HtmlStructure struct_ in structList)

{

GetLinks(struct_,linkCollection);

}

}

Explanation of the code above

We iterate through structures found in the web page and those defined in the XML file. There will be only two structures for Google - <table> and <p>.

Each time we get the PARAGRAPH structure which is an alias for <p>, we know that this is a structure where Google holds its link results.

But not everything is so simple because there are some links that we don't need like cached links or similar pages link and we must filter them out.

if (anchor.Text.IndexOf("cached") >= 0) continue;

if (anchor.Text.IndexOf("similar") >= 0) continue;

if (anchor.Text.IndexOf("view as") >= 0) continue;

if (anchor.Href.ToString().IndexOf("google") >= 0 ) continue;

The next two methods that must be implemented are used for retrieving the number of the total results found. In Google it is of the form: Results 1 - 10 of about 124,000 for omg data mining specification. (0.20 seconds).

We need that 124,000.

GetTotalSearcheResults(HtmlStructure) iterates through structures. If it finds a TABLE structure then it calls FindTotalSearchResults(string text,out int total).

protected override int GetTotalSearchResults(HtmlStructure structure)

{

int totalSearchResults = -1;

if (structure == null) return -1;

if (structure.TagName == "TABLE")

{

if (FindTotalSearchResults(structure.TextArray,out totalSearchResults))

{

m_totalSearchResults = totalSearchResults;

m_isTotaSearchResultsFound = true;

return totalSearchResults;

}

}

IList structList = structure.Structure;

foreach(HtmlStructure struct_ in structList)

{

totalSearchResults = GetTotalSearchResults(struct_);

if (totalSearchResults >= 0) break;

}

return totalSearchResults;

}

FindTotalSearchResults(string text,out int total) uses regular expressions to find the number of the results.

protected override bool FindTotalSearchResults(string text,out int total)

{

total = -1;

if (text.IndexOf(SearchResultTermPattern) < 0) return false;

Match m = Regex.Match(text,TotalSearchResultPattern,

RegexOptions.IgnoreCase | RegexOptions.Multiline);

try

{

string totalString = m.Groups["total"].Value;

totalString = totalString.Replace(",","");

total = int.Parse(totalString);

}

catch(Exception)

{

return false;

}

return true;

}

The most important method to be overridden is Search() and its variant Search(int nextIndex).

public override bool Search()

{

m_fileName = m_queryPathString = m_startQuerySearchPattern +

m_query + m_startSearchPattern +

m_totalLinksRetrieved.ToString();

m_baseUri = new Uri(m_fileName);

bool isParsed = this.ParseMe();

if (isParsed)

{

m_addressLinkCollection = new AddressLinkCollection();

this.GetLinks(this.RootStructure,m_addressLinkCollection);

m_numberOfLinksRetrieved = m_addressLinkCollection.Count;

m_totalLinksRetrieved += m_numberOfLinksRetrieved;

}

return isParsed;

}

After-notes

- I don't explicitly use the XML file with structure configuration. Instead, I embed it into the assembly. One drawback of that is that the structure of the web page can be changed in the future.

- I tested all search engine parsers on English, Arabic, Hebrew and Russian. It works just fine with those languages. There are some inconsistencies like in Yahoo!. Yahoo! returns its web page with UTF-8 charset but the actual encoding is language specific. Because my HTML parser checks the charset of the web page, it won't recognize the actual encoding of it. So you will not see any text related to the link (check the demo).