This article demonstrates the development of code in C# implementing famous k-means clustering algorithm to perform graphical raster image segmentation.

Introduction

In this article, we’ll particularly discuss about the implementation of k-means clustering algorithm to perform raster image segmentation. We’ll demonstrate a raster image segmentation process by developing a code in C# that implements k-means clustering algorithm adaptation to perform an image segmentation.

What’s Image Segmentation

This article demonstrates the development of code in C# that implements one of the most basic variants of the classical k-means clustering algorithm that can be easily used to perform a simple graphical raster image segmentation. The image segmentation basically refers to the process of an image vectorized color quantization in which the color palette of an image is reduced to a certain finite quantity of colors. During the following process, we actually perform the partitioning of the entire image into multiple segments (i.e., super-pixels), making it easier to analyze the following image by using various image processing algorithms. While performing the image segmentation, we’re actually aiming to locate objects within the original image as well as to find their boundaries that define the areas of objects being located. The main idea of performing the following process is to find those areas of pixels that share the same color hue parameter value. Thus, those sets of pixels are grouped into multiple labeled segments. In this case, those multiple segments are constituents of the entire image.

Normally, the image segmentation can be efficiently used for many applications such as either the raster image compression or vectorization:

-

Image Data Compression: The image segmentation is one of the essential phases of many existing raster image lossy compression algorithms such as BPG, JPEG-2000, S3TC, PDF, DjVu, etc. In this case, the segmentation allows us to significantly increase the compress ratio as the result of performing the partitioning of the entire image into the number of clusters, containing pixels having the same color hue. By performing the segmentation, we actually replace the color hue of each pixel with the color of a centroid pixel selected within a single cluster, and, thus, increase the number of pixels of the same color. This normally results in obtaining an image consisting of much fewer colors compared to the original image being compressed. The lossy compression algorithm basically performs a lookup for the sets of pixels having the same color and finally encodes the less data, which, in turn, results in a better image data compression ratio, and, obviously reduced image file length.

-

Vectorization: From another respect, the image segmentation can also be successfully applied to the process of raster image vectorization. While performing an image vectorization, the segmentation can be successfully used to find the boundaries of particular fragments that constitute the entire image. That, in turn, makes it possible to easily locate the outlines of the entire image right from the very beginning of vectorization process. Further, those outlines are subsequently vectorized and represented by the number of vector linear equations. At the same time, the segmentation allows to eliminate those redundant and unnecessary image fragments, the data on which might not be assigned to specific data structures, that allows to either slipstream the vectorization process, or to reduce the potential size of the output vectorized image file, making it more lightweight.

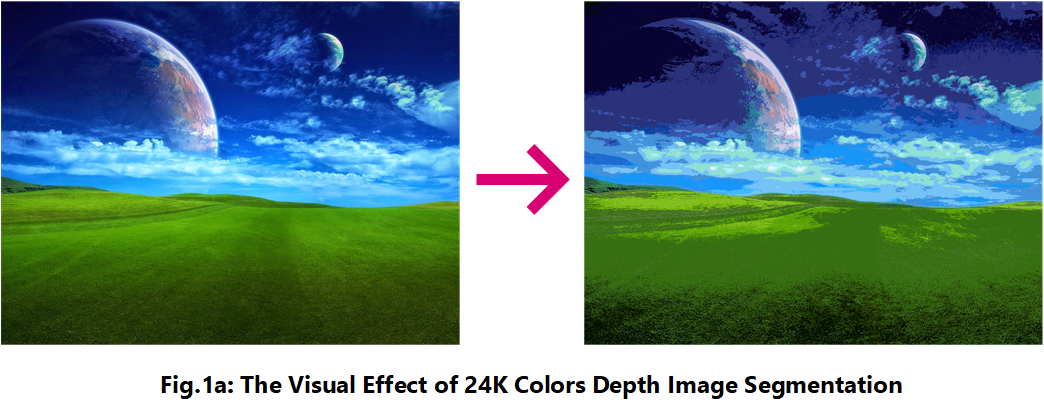

Fig.1a and Fig.1b illustrate the results of image segmentation performed as a part of either the image data compression or vectorization respectively:

Specifically, the raster image segmentation can be performed by using a variety of algorithms such as either thresholding, gradient-based, histogram-based, or compression-based, etc. Among those algorithms, k-means clustering actually turns out to be the most obvious and reliable algorithm. Actually, k-means clustering algorithm is one of the most fundamental algorithms of AI machine learning and data mining as well as the regression analysis. In most cases, it allows us to achieve a high-quality result of image segmentation avoiding the actual distortion during the image processing.

As we’ve already discussed, this is typically done by partitioning the number of observations learned from the image data into the corresponding number of clusters with the nearest mean exhibited as a super-pixel, that serves as a color prototype of the entire cluster. In addition, let’s recall that k-means clustering belongs to the class of NP-hard algorithms.

However, there’s the number of existing metaheuristic search algorithms that are very quick to converge as the result of an optimum cluster image decomposition, that, in turn, allows us to efficiently achieve an entire image segmentation, eliminating the case in which the image is segmented partially.

Background

In this section of the following article, we’ll make a large focus on the variant of the k-means clustering algorithm that allows us to perform a raster image segmentation, rather than the other data partitioning tasks, such as either producing recommendations or sum of squared error (SSE) computation. The conventional k-means clustering algorithm was already thoroughly discussed in one of my previous articles published. That’s actually why, in this article, we’ll discuss particularly about the k-means clustering algorithm variation that basically dealt solely with raster image segmentation.

Image Segmentation Algorithm

Basically, the image segmentation algorithm being discussed is very simple and can be formulated as follows:

- Create an initial cluster containing an original image and a set of centroid pixels randomly selected from the image. Append the initial cluster built to the array of clusters.

- Retrieve the current cluster from the array and iterate through the set of those super-pixels (i.e., centroids).

- For each super-pixel, compute the actual distance to each pixel in the current image. To do this, we’ll normally use the variant of Euclidian distance formula that allows us to find the distance between two 3D-vectors of colors (R; G; B) of the either super-pixel or the current pixel in the given image respectively.

- Perform a linear search to find those pixels which value of distance to the current super-pixel (i.e., centroid) does not exceed a specific boundary.

- Build a new cluster based on the new image containing all those pixels selected at the previous step and the current value of super-pixel. In this case, the super-pixel will serve as a centroid of a newly built cluster. Also, we need to substitute the color of each pixel with the centroid’s color.

- Compute the value of the nearest mean of all super-pixels in the current cluster by using the center of mass formula to find the coordinates of a particular central point for the set of super-pixels of the current cluster.

- Perform a check if the coordinates of that central point obtained in the previous step are not equal to the coordinates of the super-pixel in the newly built cluster (the central point has not been moved). If not, append the new cluster to the array of clusters.

- Perform a check if the current cluster is the final cluster in the array of clusters. If so, go to step 9, otherwise return and proceed with step 2.

- Iterate through the array of clusters and merge each particular cluster image into the entire image being segmented.

- Save the segmented image to a file.

Initialization

The very first essential step of the k-means image segmentation algorithm is the initialization phase. During this phase, we basically create an initial cluster from the source image and the array of randomly selected pixels. In this case, the process of generating an initial source array of random pixels having particular coordinates is a special interest that we’re about to thoroughly discuss in this section.

Under normal conditions, we might generate a set of distinct pixels which random coordinates (X; Y) are randomly selected during each of W * H loop iterations, where W – the width and H – is the height of the source image respectively. For that purpose, we need to implement a loop and perform the number of iterations. During each iteration, we normally generate the values of X <- RAND[0; W] and Y <- [0;H] separately, and create a point with specific coordinates. Further, we’re performing a check if the following point being generated already exists in the array of pixels.

However, the using of method mentioned above normally leads to a so-called “poor” clustering and causes the segmentation process to be merely unpredicted. That’s actually why, to provide the desired quality of image segmentation, we need to modify the following initial values generation method by applying the specific condition to the process of random pixels selection.

Normally, we have at least two main factors that have a large impact on the process of generating of the initial dataset for the image segmentation algorithm. Apparently, the first factor is the actual distance between points randomly selected. The typically small value of distance causes the increase of probability to select two pixels having the same color. That’s actually why the value of distance parameter must be optimal.

Let’s recall beforehand that in this particular case the actual distance and the color offset are the parameters which values are experimentally taken. For example, the value of actual distance can vary from 100 to approximately the half of the image width or height (e.g. W / 2 or H / 2). As well, to achieve a high-quality image segmentation, we’re might set the value of color offset parameter, suppose, to 50, to make sure that we select pixels of absolutely different colors.

For that purpose, we generate a new random pixel coordinates and perform a check if none of the previously generated points have the distance to the current point less than the value of the specific parameter mentioned above. To do this, we iterate through the array of pixels coordinates previously generate and for each existing pixel, we’re performing a check if its distance to the newly generated point is not greater or equal to the value of specified distance parameters. For that purpose, we normally use a Euclidian distance formula. Similarly, at this point, we’re performing a check if the value of color of the randomly selected pixel exactly meets the specified criteria. If both conditions are met, we append the newly generated point to the initial array of pixels.

During the following phase, since we’ve obtained the initial set of pixels that will serve as centroids of a newly built initial cluster, we also need to associate an original source image with the initial array being generated. As we already might have noticed, in this case, the image is represented as the matrix of pixels, in which each element of this matrix is an actual vector of pixel’s color (R; G; B). The indices of either matrix row X and column Y are actually the coordinates of the following pixel.

K-Means Clustering Phase

To perform the actual image segmentation, we normally create an array containing all the clusters being generated during the initialization phase. Also, we implement a loop to iterate through the following array, and as you might already know from the algorithm’s step-by-step explanation, during each iteration of the following loop, we normally step through the array of super-pixels (i.e., centroids being previously selected for the current cluster). For each super-pixel, we’re performing a linear search to find the set of those image pixels having a particular color distance that corresponds to the boundary condition being applied. Further, we create a new image from the selected pixels for the newly built cluster. The color of each pixel is replaced with the color of centroid super-pixel. The new image in this case will contain the pixels of the same colors that outline a specific area of the original image. Finally, the newly built cluster is added to the array of clusters.

After that, we compute the coordinates of the central point by finding the center of mass for the array of super-pixels in the parent cluster and perform a check if the centroid super-pixel of the newly built cluster has been moved relative to the central point of the parent cluster. If so, we’re adding the newly built cluster to the array of clusters, otherwise just omit it based on the hypothesis that the newly built and the parent clusters are identical.

Normally, we repeat with the process discussed above for each particular cluster in the target array of clusters until there’s no more clusters to be processed.

Finally, to obtain the ready image being segmented, we need to merge images from all those clusters built into an entire image. Obviously, that, an image associated with each one of those clusters will contain an area of the original image having a particular color. The guidelines on how to accumulatively merge all those images are discussed later on in this article.

Computing Euclidian Distance

As we’ve already discussed, the main aspect of using k-mean clustering algorithm to perform an image segmentation, is the Euclidian distance formula. In this case, we basically dealt with two main variants of the following formula for either computing the actual distance between two pixels, or to determine the similarity of two 3D-vectors colors.

The very general representation of the Euclidian distance formula is as follows:

, where L - is the value of Euclidian distance, x, y - the two vectors between which we're about to compute the value of actual distance, n - the number of dimensions (i.e., components of these vectors) (n = 2).

Since, in this case, we basically deal with the either two or three dimensional vectors only, the simplified variant of the following formula is used.

To compute the actual distance between two pixels with specific coordinates, we'll use the following variation of the Euclidian distance formula:

, where L - is the value of Euclidian distance (x1;x2) and (y1;y2) - the values of (X;Y) coordinates in the Cartesian plane of two pixels x and y respectively.

Also, to obtain the magnitude of similarity value between two 3D-vectors of colors (R; G; B), we'll use the same 3D-variant of this formula:

, where S - the value of similarity magnitude between two vectors of colors which components are (r1;g1;b1) and (r2;g2;b2) respectively.

Optionally, to achieve more sophisticated results of image segmentation, to measure the distance between two pixels or similarity of colors, we can also use the Pearson correlation formula or the formula that allows to compute the value of angle between two vectors being selected.

Using Ready Clusters to Build a Segmented Image

The merge procedure is the final essential step in the segmented image creation process. As we've already discussed, as the result of performing k-means clustering, we normally obtain the array of clusters, in which each cluster contains a specific image of a particular segmented region. At this point, our goal is to assemble all those segmented regions into an entire output image.

To do this, we'll use a trivial linear algorithm that deals with the matrices of pixels, copying each valid pixel from the current matrix into the target matrix of pixel, previously allocated. In particular, this algorithm has the following formulation.

According to the following algorithm, we normally need to iterate through the array of clusters and for an image within each cluster, we must retrieve the coordinates of those pixels that belong to the current segmented region. For that purpose, we iterate through the matrix of pixels and for each pixel performing a check if the following pixel has the color that is not equal to (0xFFFFFF) - the "white" color. If so, we're assigning the color data of the current pixel to a corresponding pixel in the target matrix of pixels having the same coordinates location. We normally proceed with the following process until we've stepped through all clusters produced during the k-mean clustering phase. As the result of merging all those images for each particular cluster, we're obtaining a ready segmented image.

Using the Code

In this section, we'll discuss about on how to develop a code in C# that implements the algorithm briefly discussed above. Before we begin, let's focus on specifically the data structures used to store the data on multiple different objects such as either pixels, images or clusters. Obviously that, the KMCPoint<T> template class is the simplest data structure used to store the data on pixel coordinates and its color. Here's an implementation of the class in C#:

class KMCPoint<t>

{

public KMCPoint(T X, T Y, Color Clr) { this.X = X; this.Y = Y; this.Clr = Clr; }

public T X { get { return m_X; } set { m_X = value; } }

public T Y { get { return m_Y; } set { m_Y = value; } }

public Color Clr { get { return m_Color; } set { m_Color = value; } }

private T m_X;

private T m_Y;

private Color m_Color;

}

As you can see from the code above, the following class contains two field private variables of either m_X and m_Y that are used to hold the data on the either X- or Y - coordinates of a certain pixel. Also, the following class has another private variable m_Color that is actually an object of Color generic class used to store the data on the actual color of pixel in (R;G;B) format. Since all these variables are private, we also additionally implement three corresponding properties X, Y and Clr to access and modify the values of those private variables. Normally, the following class also contains a constructor that accepts three parameters as passed as an argument. The values of these parameters are assigned to the interval private variables via the corresponding public properties when the object of KMCPoint<T> class is constructed.

Before we begin the discussion of code, let's remind that in the following code being developed to instantiate and process bitmaps, we'll use a modified variant of generic Bitmap class that allows to manipulate pixels stored in the previously allocated array rather than using conventional Bitmap.GetPixel(...) or Bitmap.SetPixel(...) methods.

This normally allows to hopefully :) increase the performance of image segmentation process when dealt with images of typically large dimensions.

class LockedBitmap

{

public LockedBitmap(string filename)

{

if (m_Bitmap == null)

{

m_Bitmap = new Bitmap(filename);

m_rect = new Rectangle(new Point(0, 0), m_Bitmap.Size);

}

}

public LockedBitmap(Int32 Width, Int32 Height)

{

if (m_Bitmap == null)

{

m_Bitmap = new Bitmap(Width, Height);

m_rect = new Rectangle(new Point(0, 0), m_Bitmap.Size);

}

}

public LockedBitmap(Bitmap bitmap)

{

if (m_Bitmap == null)

{

m_Bitmap = new Bitmap(bitmap);

m_rect = new Rectangle(new Point(0, 0), m_Bitmap.Size);

}

}

public static implicit operator LockedBitmap(Bitmap bitmap)

{

return new LockedBitmap(bitmap);

}

public void LockBits()

{

m_BitmapInfo = m_Bitmap.LockBits(m_rect, System.Drawing.Imaging.

ImageLockMode.ReadWrite, m_Bitmap.PixelFormat);

m_BitmapPtr = m_BitmapInfo.Scan0;

m_Pixels = new byte[Math.Abs(m_BitmapInfo.Stride) * m_Bitmap.Height];

System.Runtime.InteropServices.Marshal.Copy(m_BitmapPtr, m_Pixels,

0, Math.Abs(m_BitmapInfo.Stride) * m_Bitmap.Height);

}

public void UnlockBits()

{

m_BitmapPtr = m_BitmapInfo.Scan0;

System.Runtime.InteropServices.Marshal.Copy(m_Pixels, 0,

m_BitmapPtr, Math.Abs(m_BitmapInfo.Stride) * m_Bitmap.Height);

m_Bitmap.UnlockBits(m_BitmapInfo);

}

public Color GetPixel(Int32 Row, Int32 Col)

{

Int32 Channel = System.Drawing.Bitmap.GetPixelFormatSize

(m_BitmapInfo.PixelFormat);

Int32 Pixel = (Row + Col * m_Bitmap.Width) * (Channel / 8);

Int32 Red = 0, Green = 0, Blue = 0, Alpha = 0;

if (Channel == 32)

{

Blue = m_Pixels[Pixel];

Green = m_Pixels[Pixel + 1];

Red = m_Pixels[Pixel + 2];

Alpha = m_Pixels[Pixel + 3];

}

else if (Channel == 24)

{

Blue = m_Pixels[Pixel];

Green = m_Pixels[Pixel + 1];

Red = m_Pixels[Pixel + 2];

}

else if (Channel == 16)

{

Blue = m_Pixels[Pixel];

Green = m_Pixels[Pixel + 1];

}

else if (Channel == 8)

{

Blue = m_Pixels[Pixel];

}

return (Channel != 8) ?

Color.FromArgb(Red, Green, Blue) : Color.FromArgb(Blue, Blue, Blue);

}

public void SetPixel(Int32 Row, Int32 Col, Color Clr)

{

Int32 Channel =

System.Drawing.Bitmap.GetPixelFormatSize(m_BitmapInfo.PixelFormat);

Int32 Pixel = (Row + Col * m_Bitmap.Width) * (Channel / 8);

if (Channel == 32)

{

m_Pixels[Pixel] = Clr.B;

m_Pixels[Pixel + 1] = Clr.G;

m_Pixels[Pixel + 2] = Clr.R;

m_Pixels[Pixel + 3] = Clr.A;

}

else if (Channel == 24)

{

m_Pixels[Pixel] = Clr.B;

m_Pixels[Pixel + 1] = Clr.G;

m_Pixels[Pixel + 2] = Clr.R;

}

else if (Channel == 16)

{

m_Pixels[Pixel] = Clr.B;

m_Pixels[Pixel + 1] = Clr.G;

}

else if (Channel == 8)

{

m_Pixels[Pixel] = Clr.B;

}

}

public Int32 Width { get { return m_Bitmap.Width; } }

public Int32 Height { get { return m_Bitmap.Height; } }

public void Save(string filename)

{

m_Bitmap.Save(filename);

}

public Bitmap m_Bitmap = null;

private Rectangle m_rect;

private IntPtr m_BitmapPtr;

private byte[] m_Pixels = null;

private BitmapData m_BitmapInfo = null;

}

When the LockedBitmap class object is initialized, the appropriate constructor is invoked. In this code, we use multiple constructor declarations to either load bitmap from a file, create an empty bitmap of specified width and height, copy one bitmap object to another one using shallowed copy mechanism. When one of those three constructors are invoked, we're performing a check if the interval generic Bitmap class object is not initialized so far, if so, we're initializing that object using new operator. Also, at this point, we're initializing generic Rectangle class object to manipulate a rectangular area of a bitmap having a specific size. After that, we need to call LockBits()/UnlockBits() methods prior to performing various operations on the bitmap's matrix of pixels by invoking GetPixel(...)/SetPixel(...) methods. By calling these two methods, we actually lock or unlock the internal memory buffer that hold the matrix of bitmap's pixels. To do this, we invoke generic Bitmap.LockBits(...) method that causes the internal buffer to be locked. After that, we're obtaining the pointer to the first line of pixels in the following internal buffer. Finally, we use the generic marshalling copy method to actually move the elements of the internal buffer to the array of pixels previously allocated. Similarly, we're performing an unlock by calling the UnlockBits() method, during the execution of which we're obtaining the pointer to the first line of the bitmap's internal buffer and invoking the same marshalling copy method to move the array of pixels back to the bitmap's internal buffer.

Those methods are also re-used in LockedBitmap class object. Actually, during the following methods execution, we normally compute the absolute value of index of a specific pixel with coordinates (Row; Col) in the interval array of pixels. Since we've obtained the actual value of index, we either retrieve or set the color reference value of a given pixel by calling those methods mentioned above.

Another class we're about to discuss is the KMCFrame which is used to store the data on each cluster created during k-means clustering procedure. Let's remind that a "cluster" is actually an object having such parameters as an image object containing currently segmented area of the original image, the array of centroid super-pixels, central super-pixel computed as a center of mass for all centroids:

class KMCFrame

{

public KMCFrame(LockedBitmap Frame, List<KMCPoint<int>> Centroids,

KMCPoint<int> Center)

{

this.Frame = Frame;

this.m_Centroids = Centroids; this.Center = Center;

}

public LockedBitmap Frame

{

get { return m_Frame; }

set { m_Frame = value; }

}

public List<KMCPoint<int>> Centroids

{

get { return m_Centroids; }

set { m_Centroids = value; }

}

public KMCPoint<int32> Center

{

get { return m_Center; }

set { m_Center = value; }

}

private LockedBitmap m_Frame = null;

private KMCPoint<double><int32> m_Center;

private List<KMCPoint<int>><kmcpoint<int> m_Centroids = null;

}

Obviously that, the following class contains three private member variables such as m_Frame which is an object of class Bitmap that actually is used to store the data on the image associated with the following cluster, m_Centroids - an object of List<KMCPoint<int>> class used to store an array of centroid super-pixels for the current cluster, m_Center - the central super-pixel, computed as a center of mass for all existing in m_Centroids array super-pixels. Also, the three corresponding properties are declared in this class and used to access and modify those private fields. The constructor of this class normally accepts three parameters whose values are assigned to specific private variables accessed by their properties during the KMCFrame class instantiation.

Another data structure we need to implement is a KMCClusters class and derive it from IEnumerable<KMCFrame> template. This class is used as the array of clusters. Besides the encapsulation of the List<KMCFrame> class object, the following class also contains the number of public methods we're about to discuss right now.

In particular, the following class contains void Init(string Filename, Int32 Distance, Int32 Offset) method that will further be used to initialize the empty array of clusters. The following code in C# demonstrates the implementation of the following method:

public void Init(string Filename, Int32 Distance, Int32 Offset)

{

LockedBitmap FrameBuffer = new LockedBitmap(Filename);

List<KMCPoint<int>> Centroids = new List<KMCPoint<int>>();

this.Generate(ref Centroids, FrameBuffer, Distance, Offset);

KMCPoint<int> Mean = this.GetMean(FrameBuffer, Centroids);

m_Clusters.Add(new KMCFrame(FrameBuffer, Centroids, Mean));

}

The following method accepts values of three parameters passed as an argument including the filename of original source image, the values of distance and color offset parameters. When the following method is invoked, first, it constructs a bitmap object FrameBuffer and pass the value of first argument Filename to its constructor. This object, when it's created, loads the original image from file and assigns the data on each pixel of the original image to the specific interval private data structures such as a matrix of pixels. After the original image is loaded, the array of centroid super-pixels is instantiated by constructing an object of List<KMCPoint<int>> template class and the specific method Generate(...) is invoked to randomly generate a set of super-pixels for the initial cluster being created.

After we've initialized the specific data structures to hold the data on the image and array of super-pixel of the initial cluster, now we perform a computation of the central super-pixel coordinates and assign it to one of the parameters of KMCFrame class object being created. After that, we invoke method m_Clusters.Add(new KMCFrame(FrameBuffer, Centroids, Mean)); to append the initial cluster data to the array of clusters.

The method Generate(...) is a special interest in this code since it implements the algorithm that allows to randomly create an array of super-pixels for the initial cluster:

public void Generate(ref List<kmcpoint<int>> Centroids,

LockedBitmap ImageFrame, Int32 Distance, Int32 Offset)

{

Int32 Size = ImageFrame.Width * ImageFrame.Height;

ImageFrame.LockBits();

for (Int32 IterCount = 0; IterCount < Size; IterCount++)

{

Int32 Rand_X = rand.Next(0, ImageFrame.Width);

Int32 Rand_Y = rand.Next(0, ImageFrame.Height);

KMCPoint<int> RandPoint = new KMCPoint<int>(Rand_X,

Rand_Y, ImageFrame.GetPixel(Rand_X, Rand_Y));

if (!this.IsValidColor(Centroids, RandPoint, Offset) &&

!this.IsValidDistance(Centroids, RandPoint, Distance))

{

if (!Centroids.Contains(RandPoint))

{

Centroids.Add(RandPoint);

}

}

}

ImageFrame.UnlockBits();

}

During the following method execution, we initially compute the total number of iterations that exactly correspond to the maximum possible amount of random super-pixels, and perform the series of iterations which number equals the total number iterations previously obtained. During each particular iteration, we generate two random values in the interval from [0; ImageFrame.Width] and [0; ImageFrame.Height] respectively, to obtain the coordinates of the current super-pixel. After that, we construct the KMCPoint class object with previously obtained values passed as the arguments to the following object's constructor. Also, since the coordinates of a new super-pixel were obtained, we additionally obtain the color data from the matrix of image pixels by invoking ImageFrame.GetPixel(Rand_X, Rand_Y) method.

Before appending the super-pixel object being constructed, we're performing a check if the super-pixel coordinates and color being generated exactly meets the conditions discussed above. For that purpose, we use two methods of either this.IsValidDistance(Centroids, RandPoint, Distance) or this.IsValidColor(Centroids, RandPoint, Offset) to perform the super-pixel validity verification. The following fragments of code in C# implement those two methods mentioned above:

private bool IsValidDistance(List<kmcpoint<int>> Points,

KMCPoint<int> Target, Int32 Distance)

{

Int32 Index = -1; bool Exists = false;

while (++Index < Points.Count() && !Exists)

Exists = ((Math.Abs(Target.X - Points.ElementAt(Index).X) <= Distance) ||

(Math.Abs(Target.Y - Points.ElementAt(Index).Y) <= Distance)) ?

true : false;

return Exists;

}

private bool IsValidColor(List<kmcpoint<int>> Points, KMCPoint<int> Target,

Int32 Offset)

{

Int32 Index = -1; bool Exists = false;

while (++Index < Points.Count() && !Exists)

Exists = (Math.Sqrt(Math.Pow(Math.Abs(Points[Index].Clr.R -

Target.Clr.R), 2) +

Math.Pow(Math.Abs(Points[Index].Clr.G -

Target.Clr.G), 2) +

Math.Pow(Math.Abs(Points[Index].Clr.B -

Target.Clr.B), 2))) <= Offset ? true : false;

return Exists;

}

By executing these methods, we normally iterate throught the array of super-pixels being generated and for each particular item, compute either distance or color offset to the new super-pixel which coordinates have been previously generated during Generate method execution. Also, we're performing a check if either distance or color offset to the new super-pixel does not exceed the specified boundary. If so, we're breaking the loop execution and returning the specific result value. Particularly, if the array of super-pixels contains at least one super-pixel which distance or color offset relative to the new super-pixel is less than or equal to the value of specific boundary parameter, this method returns false. It means that the new super-pixel is not a valid and cannot be added to the array of super-pixels. To compute the actual distance and color offset, we typically use the same Euclidian distance formula discussed in the background section of this article.

Additionally, in this class, we're implementing one more important method KMCPoint<int> GetMean(Bitmap FrameBuffer, List<KMCPoint<int>> Centroids) used to compute the central super-pixel coordinates:

public KMCPoint<int> GetMean(LockedBitmap FrameBuffer,

List<KMCPoint<int>> Centroids)

{

double Mean_X = 0, Mean_Y = 0;

for (Int32 Index = 0; Index < Centroids.Count(); Index++)

{

Mean_X += Centroids[Index].X / (double)Centroids.Count();

Mean_Y += Centroids[Index].Y / (double)Centroids.Count();

}

Int32 X = Convert.ToInt32(Mean_X);

Int32 Y = Convert.ToInt32(Mean_Y);

FrameBuffer.LockBits();

Color Clr = FrameBuffer.GetPixel(X, Y);

FrameBuffer.UnlockBits();

return new KMCPoint<int>(X, Y, Clr);

}

During the following method execution, we normally iterate through the array of super-pixels previously generated and for each super-pixel, retrieve the either X- or Y - coordinate and divide it by the overall number of super-pixels in the array. Further, we accumulate each value obtained into the Mean_X and Mean_Y sum variable. As you've might noticed the "mean" value of either X - or Y - coordinate is computed separately. After that, those values of Mean_X and Mean_Y are converted to Int32 datatype. By using these values as well as the value of color of the super-pixel retrieved from the original image, we finally construct the KMCPoint<int> class object and pass those values to its constructors arguments.

The most fundamental class we're about discuss at this point is the ImageSegmentation class implementation. In the following class, we normally declare the method public void Compute(string InputFile, string OutputFile) which is the main of the image segmentation engine that performs the actual k-mean clustering. Also, besides this method, we'll implement two more simple methods that perform the Euclidian distance computation, which we'll discuss more about later.

Here's the implementation of the mentioned above Compute method:

public void Compute(string InputFile, string OutputFile)

{

var watch = System.Diagnostics.Stopwatch.StartNew();

DirectoryInfo dir_info = new DirectoryInfo("Clusters");

if (dir_info.Exists == false) dir_info.Create();

m_Clusters.Init(InputFile, m_Distance, m_OffsetClr);

LockedBitmap ResultBitmap =

new LockedBitmap(m_Clusters[0].Frame.Width, m_Clusters[0].Frame.Height);

Int32 FrameIndex = 0;

for (Int32 Index = 0; Index < m_Clusters.Count(); Index++)

{

List<KMCPoint<int>> Centroids = m_Clusters[Index].Centroids.ToList();

LockedBitmap FrameBuffer =

new LockedBitmap(m_Clusters[Index].Frame.m_Bitmap);

FrameBuffer.Save("Clusters\\Cluster_" + FrameIndex + ".jpg");

FrameBuffer.LockBits();

for (Int32 Cnt = 0; Cnt < Centroids.Count(); Cnt++)

{

Int32 Width = FrameBuffer.Width;

Int32 Height = FrameBuffer.Height;

LockedBitmap TargetFrame = new LockedBitmap

(FrameBuffer.Width, FrameBuffer.Height);

TargetFrame.LockBits();

for (Int32 Row = 0; Row < FrameBuffer.Width; Row++)

{

for (Int32 Col = 0; Col < Height; Col++)

{

double OffsetClr = GetEuclClr(new KMCPoint<int>

(Row, Col, FrameBuffer.GetPixel(Row, Col)),

new KMCPoint<int>(Centroids[Cnt].X,

Centroids[Cnt].Y, Centroids[Cnt].Clr));

if (OffsetClr <= 50)

{

TargetFrame.SetPixel(Row, Col, Centroids[Cnt].Clr);

}

else TargetFrame.SetPixel

(Row, Col, Color.FromArgb(255, 255, 255));

}

}

TargetFrame.UnlockBits();

List<KMCPoint<int>> TargetCnts = new List<KMCPoint<int>>();

TargetCnts.Add(Centroids[0]);

KMCPoint<int> Mean = m_Clusters.GetMean(TargetFrame, TargetCnts);

if (Mean.X != m_Clusters[Index].Center.X &&

Mean.Y != m_Clusters[Index].Center.Y)

m_Clusters.Add(TargetFrame, TargetCnts, Mean);

FrameIndex++;

}

FrameBuffer.UnlockBits();

}

ResultBitmap.LockBits();

for (Int32 Index = 0; Index < m_Clusters.Count(); Index++)

{

LockedBitmap FrameOut = new LockedBitmap

(m_Clusters[Index].Frame.m_Bitmap);

FrameOut.LockBits();

FrameOut.Save("temp_" + Index + ".jpg");

int Width = FrameOut.Width, Height = FrameOut.Height;

for (Int32 Row = 0; Row < Width; Row++)

{

for (Int32 Col = 0; Col < Height; Col++)

{

if (FrameOut.GetPixel(Row, Col) !=

Color.FromArgb(255, 255, 255))

{

ResultBitmap.SetPixel(Row, Col,

FrameOut.GetPixel(Row, Col));

}

}

}

FrameOut.UnlockBits();

}

ResultBitmap.UnlockBits();

ResultBitmap.Save(OutputFile);

watch.Stop();

var elapsedMs = watch.ElapsedMilliseconds;

TimeSpan ts = TimeSpan.FromMilliseconds(elapsedMs);

Console.WriteLine("***Done***\n" + ts.ToString(@"hh\:mm\:ss"));

}

While performing k-means clustering computations, we actually need to initialize the array of clusters by generating and appending an intial cluster to the following array prior to executing the iterations of the main loop. To do this, we invoke the m_Clusters.Init(...) method, thoroughly discussed above. After that, we enter the main process loop. During each iteration of the following main loop, we retrieve the data on the current image and array of centroid super-pixels assigned to the specific data structures for the current cluster object. After we've obtained the array of centroid super-pixels, we step through the elements of this array and for each super-pixel, we're aiming to find the subset of pixels in the current image which value of color offset does not exceed the specific boundary value (e.g., valid pixels). Since, we've obtained such subset, we build a new cluster based on performing a copy of those valid pixels into a new image and assigning the subset of new centroid super-pixels to the specific array for the newly built cluster. After that, we're performing a check if the "mean" super-pixel coordinates are not equal to the coordinates of the current centroid super-pixel in the parent cluster being taken. If not, we're constructing a new cluster object and append it to the array of clusters. At this point, we normally proceed with the following process for each particular centroid super-pixel of the current cluster retrieved from the array. Notice, that only the intial cluster might contain more than one centroid super-pixel, the other clusters are limited to contain just one super-pixel.

After we've completed the k-means clustering procedure, we need to actually "gather" the images for each cluster into a segmented resulting image. For that purpose, we're iterating through the array of clusters and retrieve a matrix of pixels of each particular image. Then, we iterate through the following matrix and perform a check if the color of the current pixel with specific coordinates is not equal to "white" 0xFFFFFF. If not, we're copying the following current pixel to the matrix of pixels for the resulting image.

The final aspect we're about to discuss in this section is the methods that perform a computation of the Euclidian distance between two pixels or two color hues:

public double GetEuclD(KMCPoint<int> Point1, KMCPoint<int> Point2)

{

return Math.Sqrt(Math.Pow(Point1.X - Point2.X, 2) +

Math.Pow(Point1.Y - Point2.Y, 2));

}

public double GetEuclClr(KMCPoint<int> Point1, KMCPoint<int> Point2)

{

return Math.Sqrt(Math.Pow(Math.Abs(Point1.Clr.R - Point2.Clr.R), 2) +

Math.Pow(Math.Abs(Point1.Clr.G - Point2.Clr.G), 2) +

Math.Pow(Math.Abs(Point1.Clr.B - Point2.Clr.B), 2));

}

The following methods are very simple and normally return the evaluated value of the Euclidian distance formula expression discussed in the background theory section of this article.

Finally, the code in C# listed below illustrates the programs main function along with the ImageSegmentation class object creation and initialization:

class Program

{

static void Main(string[] args)

{

Console.WriteLine("Image Segmentation Utility v.1.0a

by Arthur V. Ratz, CPOL @ 2017\n");

Console.Write("Input file name: ");

string InpFile = Console.ReadLine();

Console.Write("Output file name: ");

string OutFile = Console.ReadLine();

ImageSegmentation ImageSeg = new ImageSegmentation();

ImageSeg.Compute(InpFile, OutFile);

Console.ReadKey();

}

}

Points of Interest

The algorithm discussed in this article has many interesting variations based on using different techniques and approaches of machine learning and data mining including the using of Pearson correlation instead of Euclidian distance formula, implementing k-means++ initialization algorithm to improve the quality of clustering, using min-max selection approach to find all those pixels that have the closest distance to the specific super-pixel.

History

- 27th August, 2017 - First revision of article published

- 29th August, 2017 - Final revision of article published