Introduction

Today, almost everyone has at least heard of Docker and the term containerization. It doesn't matter whether they are experts in building container-oriented services or know how containers function; they have stumbled upon a document, ebook, magazine article, or video that talks about containerized applications, orchestration tools, Docker, or Kubernetes. The point is that people are aware of the technology, and of the familiar diagram of the evolution from the virtual machine stack that everybody shows. That is pretty much old school now. What I want to do in this Docker 101 class is establish the core fundamentals of a Docker setup: how it works, how you can quickly get Docker, and how to try it out.

This article is one of a series published under the competition, and I will try my best to cover all the aspects of Docker and the containerization of applications in this collection of tutorials. Besides a few topics about Docker itself, we will explore:

- Data persistence in a container

- Services in Docker

- Container orchestration

- Kubernetes and Docker

These are a few of the topics that I can discuss about Docker without making beginners lose track, or experts call me an exaggerating geek.

What is Docker?

A short definition for those who still don't know what Docker is and why they should care about it. When people talk about Docker, they think of a containerization tool, and some even think of it as a process orchestrator. They are all correct. Docker is, and can be thought of as, a:

- Package manager

- Orchestrator

- Load balancer

And I didn't even mention all the other buzz that you can find on the internet, such as "Docker is a product developed by the company of the same name", "Docker is an open source container management tool", or "Docker is the gateway to the cloud". They are all right from their own perspective, but that still does not cover everything that Docker can do. That is why I am writing this article: to showcase the functionality and features of Docker, instead of just talking about it. Let's start with the requirements and prerequisites for Docker.

Platform and Requirements

Docker can be installed on Linux, Windows, or macOS, but I will be using the Linux platform here. The commands and Docker's behavior will be similar on Windows and macOS. But I assure you, it will be an amazing ride if you join using a Linux distro, especially if you choose to set up Ubuntu 16.04 or later. I have used Ubuntu 18.04 for this setup.

The second thing to note is that I am using snaps, from Snapcraft. The reason behind this choice is that snaps are really easy to install, upgrade, and manage. They come shipped with the services, programs, and utilities that let you manage the package quite easily. Do not worry: in the installation and setup section, I will provide guidance on other installation methods and requirements as needed, but the primary method is this one.

Installation and Configuration

As discussed, we will be installing the snaps for Docker and, for those who want to learn some advanced orchestration topics, Kubernetes. Snaps are available on recent Linux distributions, such as those released in 2017 or 2018. The installation process is clearly documented on the Docker website, but I will still try to explain the method on a few of the platforms.

Windows

On Windows, the only things you need to make sure of before executing the installer for Docker are:

- Hardware virtualization

- Hyper-V to be installed and enabled

This also means that you cannot try this out on the Windows Home edition; you need Windows Pro or a higher edition. But this does not prevent you from trying out the Docker installation in a virtualized environment, where you can easily set up a Linux distro and get started with the next section.

But if you already have a Pro edition of Windows, just head over to Docker downloads and install Docker for your machine.

Linux (Ubuntu 18.04)

Although any Linux distribution that supports snaps would work, I am going to use Ubuntu in the rest of this article as well as in the articles that follow. To do that, just make sure snaps are available on your system. The easiest way is to run Ubuntu 16.04 or later, which is why I recommended Ubuntu 18.04. The snap daemon is installed by default on these operating systems. If the following command doesn't give a response:

$ snap version

Then your operating system does not have the snap system installed. To install it, run the following command:

$ sudo apt-get install snapd

This will install the daemon for the snappy environment, and afterwards you can verify that snap is available. You can also follow the documentation on the snap website for Ubuntu, or for whichever operating system and distribution you selected.

Once you are done with that, just go ahead and install Docker from the snap store.

$ sudo snap install docker

This will take some time and will leave you with the Docker engine installed and set up. Now you could go ahead and set up a few more things, such as a hello-world Docker image for trial purposes. But what I would rather do here is hint at a few things about Docker before we move on to the second episode of this series. Provided you have downloaded and installed the Docker engine, move ahead to the next step and let's start exploring Docker.

Non-snappy Method

Docker publishes artifacts for Linux environments too; you can download and install those tarballs, or use a package manager such as apt or yum.

Some of the important links you might want to follow are listed below:

Since Docker is open source, you can always compile the source code yourself. This cookbook snippet from Packt explains the steps you need to perform in order to build the source code for the Docker engine.

Exploring Docker

Before I conclude this chapter, let's take a quick look at the Docker engine from the standpoints of storage, networking, and process information. This will lay some of the foundation for the concepts that we are going to learn later on. To begin with, we will use a Node.js application that I built myself, the reasons being:

- It is my own development, and I can easily explain what I did, and where.

- It is available as an open source project, and needs contributions and collaboration of the community, on GitHub.

- It is lightweight, and demonstrates some of the best practices that one can, and should, employ when developing Dockerized packages.

- The Docker package is merely four lines of code, while the underlying project is a complete web application written in Node.js that demonstrates several design patterns and architectural designs, especially for a serverless approach.

- The project also comes with other configurable and ready-to-execute scripts, such as a Docker Compose file.

You can explore the project on GitHub (link provided in the downloads section above), and see how it was structured for yourself. But now, without any further delay, let us create our first Docker container and visualize what the process is like. Inside our application, we are exposing the web app via this code:

let port = process.env.PORT || process.env.PORT_AZURE || process.env.PORT_AWS || 5000;

app.listen(serverConfigurations.serverPort, () => {
    let serverStatus = `Server listening on localhost:${serverConfigurations.serverPort}.`;
    logger.logEvent("server start", serverStatus);
    console.log(serverStatus);
});

This code is not important from Docker's standpoint, but I want you to see that this is what gets executed to give us a web server running in a container. In a normal environment, it would just execute the function and start the event loop for the Node.js app, which means that your process starts and listens for network traffic on that hostname and on the port we have assigned. In this case, your output for the command:

$ npm start

would be something like this:

> express-nodejs@1.3.0 start /home/afzaal/Projects/Git/Nodejs-Dockerized/nodejs-dockerized

> node ./src/app.js

Cannot start Application Insights; either pass the value to this app,

or use the App Insights default environment variable.

[Event] server start: Server listening on localhost:5000..

Server listening on localhost:5000.
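The fallback chain in the `let port = ...` line can be pulled out into a small helper to make its behavior explicit. What follows is a minimal sketch and not code from the project: `resolvePort` is a hypothetical name, and converting the value to a number is my own assumption, added because environment variables always arrive as strings.

```javascript
// Hypothetical helper (not part of the project): mirrors the fallback chain
// PORT -> PORT_AZURE -> PORT_AWS -> 5000 used by the app.
function resolvePort(env) {
    const raw = env.PORT || env.PORT_AZURE || env.PORT_AWS;
    // Environment variables are strings; convert so callers get a number.
    const parsed = Number(raw);
    // Fall back to 5000 when nothing is set or the value is not a valid port.
    return Number.isInteger(parsed) && parsed > 0 && parsed < 65536 ? parsed : 5000;
}

console.log(resolvePort({}));                   // 5000, no variable set
console.log(resolvePort({ PORT: "8080" }));     // 8080, PORT wins
console.log(resolvePort({ PORT_AWS: "3000" })); // 3000, later fallback
```

In a real Node.js process, you would call it as `resolvePort(process.env)`.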

From a naive standpoint, this output looks fair, but now we stumble upon a problem. If you look at it (ignore the Application Insights lines), you will find that it states, "Server listening on localhost:5000.", which makes it clear that port 5000 is now bound to this process. This will cause problems with the scalability of our application, which has every right to become a service, and will in turn require us to:

- Either run the application on different and configurable ports

  - This is quite possible in the application I wrote, since you can pass the port for the application to listen on, but it is not the recommended approach, because it also requires you to manage the traffic forwarding from outside this machine, which brings us to the second point.

- Or run the application behind a load balancer and have the load balancer forward the traffic to port 5000 of each process that you spawn for this service.
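To make the second option concrete, the sketch below shows the simplest forwarding strategy a load balancer can use, round-robin, over a list of backend addresses. It is an illustration only: `createRoundRobin` and the backend addresses are hypothetical, and it says nothing about how Docker itself distributes traffic.

```javascript
// Hypothetical round-robin picker: each call returns the next backend in turn.
function createRoundRobin(backends) {
    if (backends.length === 0) {
        throw new Error("at least one backend is required");
    }
    let next = 0;
    return function pick() {
        const backend = backends[next];
        next = (next + 1) % backends.length; // wrap around after the last one
        return backend;
    };
}

// Three copies of the service, each on its own address but all on port 5000
// (the addresses are made up for illustration).
const pick = createRoundRobin(["10.0.0.1:5000", "10.0.0.2:5000", "10.0.0.3:5000"]);
console.log(pick(), pick(), pick(), pick()); // the fourth call wraps back to 10.0.0.1:5000
```

Even this tiny version shows why a balancer has to know the full list of backends at all times, which is exactly the bookkeeping we would rather hand over to a tool.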

Another major, and most important, problem with this approach is that if our process fails or terminates, there is no way for our environment to bring the process back. We can overcome the problem by writing our own management program, or by utilizing another web server program, but even then we need to manage and maintain how we determine that our application is up and running.
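A home-grown management program of the kind mentioned above boils down to a restart loop. The sketch below is a deliberately simplified illustration: `supervise` and `runOnce` are hypothetical names, and `runOnce` stands in for whatever actually launches the process and reports its exit code.

```javascript
// Hypothetical supervisor: reruns a task until it exits cleanly (code 0)
// or the restart budget is used up.
function supervise(runOnce, maxRestarts) {
    let restarts = 0;
    let exitCode = runOnce();
    while (exitCode !== 0 && restarts < maxRestarts) {
        restarts += 1;
        exitCode = runOnce();
    }
    return { exitCode, restarts };
}

// Simulated process that crashes twice and then exits normally.
const exitCodes = [1, 1, 0];
const result = supervise(() => exitCodes.shift(), 5);
console.log(result); // { exitCode: 0, restarts: 2 }
```

A real supervisor would also need health checks, back-off between restarts, and logging, which is where the maintenance burden comes from.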

These are the areas where Docker shines. Other orchestrators and process managers, such as Kubernetes and DC/OS Marathon, shine here as well, but since we are exploring Docker, we will talk about these features in proper depth in the later chapters. In this chapter, however, we want to explore how an application can be deployed on a machine this way. To understand how, we are going to hand both of the problems described above over to Docker. We do that by creating a new image in Docker and packaging our application inside it. In Docker, packaging is done using a Dockerfile, which contains the sequence of instructions required to build the image and start the process. A Dockerfile requires the following information in order to start your process:

- Dependencies

- Source files

- Entry point or command

Following this pattern, we know that our application depends on the Node.js runtime, that the files are in the same folder, and that we can start the project using the npm start command. Our Dockerfile would be:

FROM node:10-alpine

COPY . .

RUN [ "npm", "update" ]

CMD [ "npm", "start" ]

That is it!

We do not need to write even one extra word here. I have already talked about these commands in detail in another article of mine, here, and I do not feel I should go over them once more. Let's build the image, and run it to verify how things are taken care of for us.

# docker build -t codeproject/dockerops:gettingstarted .

This command will build our first container image and tag it with that name.

Sending build context to Docker daemon 1.527MB

Step 1/4 : FROM node:10-alpine

---> 7ca2f9cb5536

Step 2/4 : COPY . .

---> 9ac07aa147b7

Step 3/4 : RUN [ "npm", "update" ]

---> Running in ba65eff2e6d9

npm notice created a lockfile as package-lock.json. You should commit this file.

npm WARN express-nodejs@1.3.1 No repository field.

+ uuid@3.3.2

+ body-parser@1.18.3

+ pug@2.0.3

+ express@4.16.4

+ applicationinsights@1.0.6

added 120 packages from 179 contributors and audited 261 packages in 30.756s

found 0 vulnerabilities

Removing intermediate container ba65eff2e6d9

---> 558e5026cb19

Step 4/4 : CMD [ "npm", "start" ]

---> Running in 807b13ed3a29

Removing intermediate container 807b13ed3a29

---> 46dc26469806

Successfully built 46dc26469806

Successfully tagged codeproject/dockerops:gettingstarted

As you can see, during the process Docker puts everything that is needed inside the image. All the required components are downloaded and patched, any executables are run, and finally an image is built. The build process is quite similar to any other build process; think of the .NET Core build process:

$ dotnet restore

# Assuming the .NET Core project is in the same directory, we execute

$ dotnet build

# If everything goes fine, we do

$ dotnet run

Similarly, all Docker did above was build the image. Now our project is ready to be run. We have named the image codeproject/dockerops:gettingstarted. This will be easier to remember, and we will use this image tag during the container creation process. We will also give the container a name, so that we can easily manage other operations such as inspection and removal of containers from the system. Let's go ahead and create the container, and inspect it as well.

# docker run -d --name gettingstarted codeproject/dockerops:gettingstarted

This will take hardly a few seconds and will create the container for you! Notice the -d flag, which runs the container in detached mode and leaves our terminal alone. We can explore the logs to see what happens inside the process; they are shown in a later part of the article, so continue reading. In the previous article, we discussed how we can opt out of exposing a port; in this one, we will see how a container always listens to the network traffic on the IP address that the Docker engine maps to it. Remember how we assigned 5000 as the port for the process? Let us inspect the container and find out its IP address.

# Notice how gettingstarted helps us in passing the container reference.

# docker inspect gettingstarted

This gives out quite a lot of information, and for the time being we are only interested in the container information, such as the status, networking, and the hostname or IP address, which is as follows:

[

{

"Id": "c3f799ed9a74f1cb23c1046bceeee9144aad1122f70f4a39860722b625c5ef5b",

"Created": "2018-10-17T00:44:09.340443736Z",

"Path": "npm",

"Args": [

"start"

],

"State": {

"Status": "running",

"Running": true,

"Paused": false,

"Restarting": false,

"OOMKilled": false,

"Dead": false,

"Pid": 16146,

"ExitCode": 0,

"Error": "",

"StartedAt": "2018-10-17T00:44:10.767427825Z",

"FinishedAt": "0001-01-01T00:00:00Z"

},

"Image": "sha256:46dc2646980611d74bd95d24fbee78f6b09a56d090660adf0bd0bc452228f82d",

...

"Name": "/gettingstarted",

"RestartCount": 0,

"Driver": "aufs",

"Platform": "linux",

...

"CpuShares": 0,

"Memory": 0,

"NanoCpus": 0,

"CgroupParent": "",

"BlkioWeight": 0,

"BlkioWeightDevice": [],

"BlkioDeviceReadBps": null,

"BlkioDeviceWriteBps": null,

"BlkioDeviceReadIOps": null,

"BlkioDeviceWriteIOps": null,

"CpuPeriod": 0,

"CpuQuota": 0,

"CpuRealtimePeriod": 0,

"CpuRealtimeRuntime": 0,

"CpusetCpus": "",

...

"Config": {

"Hostname": "c3f799ed9a74",

"Domainname": "",

...

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "2b6ace2bb890dcd59370798285d3c917f40e3f23330298f8a11dff1fbe27b627",

"EndpointID": "12c5e6c571af574e88c796e9804bd571523fc046aaaaa2d39a842580b391e745",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.2",

...

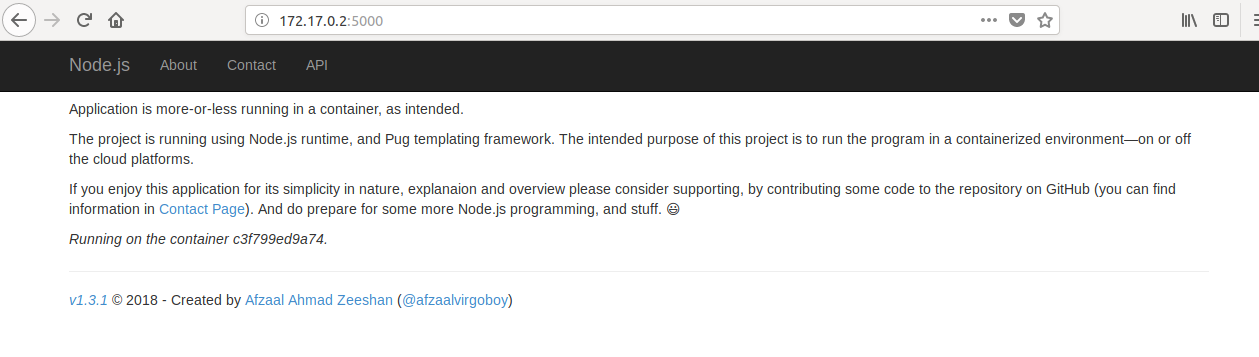

All the lines that are "..." are used to cover up and truncate the information that is extra in this stage. From the text above, you can extract some information about the container, and some information is left over for the different parts of the Docker service. Remember, Docker engine is used in the standalone container mode, as well as in the Swarm mode. Most of the settings available in this value come are for the Swarm mode, in which Docker would be managing machines, services, or stacks. However, we are interested in the area where we are provided with this IPAddress value. That is the IP address for the container that is hosting our Node.js app. Recall that our port was 5000, combining and hitting the URL, we get:

Figure 1: Home page of the container app running in Firefox.
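Reading the address by eye works for one container, but the same lookup can be scripted. Here is a minimal sketch, assuming the text printed by docker inspect has been captured into a string; `containerAddresses` is a hypothetical helper, and the `NetworkSettings` parent key comes from Docker's inspect output format (it sits inside one of the "..." parts of the listing above).

```javascript
// Returns the IP address of each network a container is attached to,
// given the text printed by `docker inspect <container>`.
function containerAddresses(inspectJson) {
    const [container] = JSON.parse(inspectJson); // inspect prints a JSON array
    const networks = (container.NetworkSettings || {}).Networks || {};
    const addresses = {};
    for (const [name, settings] of Object.entries(networks)) {
        addresses[name] = settings.IPAddress;
    }
    return addresses;
}

// Trimmed-down sample using the values shown in the listing above.
const sample = JSON.stringify([
    { Name: "/gettingstarted", NetworkSettings: { Networks: { bridge: { IPAddress: "172.17.0.2" } } } }
]);
const port = 5000; // the port the app listens on
console.log(`http://${containerAddresses(sample).bridge}:${port}`); // http://172.17.0.2:5000
```

The result is keyed by network name because a container can be attached to more than one network; ours only has the default bridge.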

And that is how we can access the services from Docker. It is the same page that you might be tired of looking at, but yes, this is the service being exposed from the Docker engine. And if we explore the logs for the container, the following is what we get:

# docker logs gettingstarted

> express-nodejs@1.3.1 start /

> node ./src/app.js

Cannot start Application Insights; either pass the value to this app,

or use the App Insights default environment variable.

[Event] server start: Server listening on localhost:5000..

Server listening on localhost:5000.

[Request] GET: /.

[Request] GET: /about.

[Request] GET: /.

[Request] GET: /contact.

[Request] GET: /.

[Request] GET: /.

I just surfed the website served from the container for a while, and the above are the logs for the container. There is no difference in how the application executes. Similarly, there is no difference in the runtime, or in how the CPU, RAM, or network resources are utilized by the process. The difference comes when we look at the process from a different standpoint: not as a solo player, but as a worker in a swarm.

What is Different? You Say.

Recall the problems we mentioned earlier: (1) fault tolerance and (2) scalability. Both of these problems are solved here. Docker plays a vital role by acting as a health manager for services, as well as a good, production-grade load balancer. This gives us an opportunity to utilize Docker not just as a package manager for our application, but also as its load balancer. Although I must admit that other load balancers and orchestrators, such as Kubernetes, are much better at handling scalability, Docker is still a good choice for quick deployment of apps and services.

Spoiler alert: although this is the main topic of discussion in the next episodes, Docker Compose is useful when it comes to the scalability and fault tolerance of containers. A very simple, minimal example of a Docker Compose file can be found in the same GitHub repository:

version: '3'
services:
  nodejsapp:
    build: .
    image: afzaalahmadzeeshan/express-nodejs:latest
    deploy:
      replicas: 3
      resources:
        limits:
          cpus: ".1"
          memory: 100M
      restart_policy:
        condition: on-failure
    ports:
      - "12345:80"
    networks:
      - appnetwork
networks:
  appnetwork:

As is visible from the settings of this deployment script, we have the following configuration:

restart_policy:
  condition: on-failure

And secondly, this one:

replicas: 3

These configurations tell the Docker engine to scale the service to 3 instances (created up front, not on demand), and to make sure that the engine recreates a container if it runs into an issue. I won't go into the depth of this script in this article; that is for the next one, which covers services. There we will explore the benefits of using Docker Compose over the ordinary Docker CLI, and then we will also take a look at Docker Stack and Docker Services. There is a very big problem in scalability with Docker, but... the next article is for that. :-)

Now, we will dedicate future episodes to some specific areas of Docker development and management.
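Conceptually, the replicas and restart_policy settings describe a desired state that the engine keeps comparing with what is actually running. The sketch below illustrates that idea only: `planActions` and the container objects are hypothetical, and this is not Docker's actual scheduling logic.

```javascript
// Hypothetical reconciliation step for `replicas: N` combined with
// `restart_policy: condition: on-failure`.
function planActions(desiredReplicas, containers) {
    const running = containers.filter((c) => c.state === "running");
    // on-failure: only containers that exited with a non-zero code are restarted.
    const failed = containers.filter((c) => c.state === "exited" && c.exitCode !== 0);
    const restart = failed.map((c) => c.name);
    // Start new containers if running plus restarted ones still fall short.
    const create = Math.max(0, desiredReplicas - running.length - restart.length);
    return { restart, create };
}

const actions = planActions(3, [
    { name: "nodejsapp.1", state: "running", exitCode: 0 },
    { name: "nodejsapp.2", state: "exited", exitCode: 1 },
]);
console.log(actions); // { restart: [ 'nodejsapp.2' ], create: 1 }
```

Note that a container which exited with code 0 is left alone here, which is the difference between an on-failure condition and an unconditional restart.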

- DockerOps: Services and their scalability

- DockerOps: Storage and Networking

- DockerOps: Orchestration and extensibility

- DockerOps: Best Practices for Containerization

I hope you will join me on this tour, as I explore the Docker engine and showcase some best practices to employ while developing apps that target the Docker engine for containerization.

Moving Ahead...

In this article, we merely explored what Docker allows us to do. We haven't even scratched the surface of Docker and its power and features. We will start digging in from the next article. The main benefit of using Docker is that we can run our own apps and processes in an isolated environment, and control how they grow and how they scale.

You can, now, remove the resources that you had created to free up some hardware resources on your machine for further usage. Or you can leave them as they are, but we will still follow the recreation process in later articles. To remove the resources, run:

# docker stop gettingstarted && docker rm gettingstarted

This will stop the container and then remove it, so that you can create it once again if you wish to follow along with the article. Now, before we move on to the next episode, here is what you need to know: