Introduction

This article explores how to use the GPU for general-purpose processing from .NET.

Important: Please visit the CUDAfy.NET Codeplex site for updates.

Background

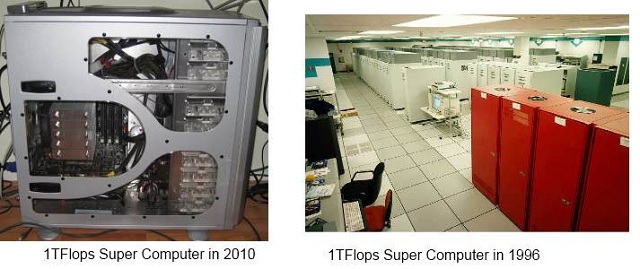

Graphics Processing Units (GPUs) are increasingly being used to perform non-graphics work. The world's fastest supercomputer, the Tianhe-1A, makes use of rather a lot of GPUs. The reason for using GPUs is the massively parallel architecture they provide. Whereas even top-of-the-range Intel and AMD processors offer six or eight cores, a GPU can have hundreds of cores. Furthermore, GPUs have various types of memory that allow efficient addressing schemes. Depending on the algorithm, this can give a massive performance increase, and speed-ups of 100x or more are neither uncommon nor especially complicated to achieve. This is not just something for supercomputers, though. Even normal PCs can take advantage of GPUs. I'm typing on a fairly cheap Acer laptop ($800) with an Intel i5 processor and an NVIDIA GT540M GPU. This little thing hardly runs warm and can give my fairly standard workstation, with its two NVIDIA GTX 460s, a good run for its money. The amazing thing about this workstation is that in ideal conditions it can reach 1 teraflops (of which less than 100 GFLOPS comes from the Intel i7 CPU). If you look through the history of supercomputers, this means I've got something that matches the performance of the best computer in the world in 1996 (ASCI Red). 1996 is not that long ago. In 2026, could we have a Tianhe-1A under our desks?

The key point above is that whether an application can be sped up depends on the algorithm. Not everything will benefit, and sometimes you need to be creative; whether you have the time or budget to do this must be weighed up. Anyway, whatever lowers the hurdle to taking advantage of the supercomputer in your PC or laptop can only be a good thing. In the world of general-purpose GPU (GPGPU) computing, CUDA from NVIDIA is currently the most user-friendly option. CUDA C is a variant of C, and compiling it requires the NVIDIA NVCC compiler, which in turn makes use of the Microsoft Visual C++ compiler. It's not a tough language to learn, but it does raise some interesting issues. Applications tend to become first and foremost CUDA applications. Extending a 'normal' application to offload work to the GPU needs a different approach, and typically the CUDA Driver API is used: you compile modules with the NVCC compiler and load them into your application.

This is all very well, but it leaves a rather clunky approach: you have two separate code bases. This may not be a big deal if you have never enjoyed the comforts of Visual Studio and .NET, but using CUDA from .NET is another story. NVIDIA currently directs .NET developers to CUDA.NET, which is a nice, if rather thin, wrapper around the CUDA API. However, GPU code must still be written and compiled separately with NVCC, and work appears to have stopped on CUDA.NET. Changes that came in with CUDA 3.2 mean that a number of things are broken (e.g. CUDA 3.2 introduced 64-bit pointers and v2 versions of much of the API).

As a die-hard .NET developer, I decided it was time to rectify matters, and the result is Cudafy.NET. Cudafy is the unofficial verb used to describe porting CPU code to CUDA GPU code. Cudafy.NET allows you to program the GPU completely from within your .NET application with a minimum of messy, clunky business. Now on with the show.

The Code

This project will get you set up and running with Cudafy.NET. A number of simple routines will be run on the GPU from a standard .NET application. You'll need to do a few things first if you have not already done so. First, be sure you have a relatively recent NVIDIA graphics card that supports CUDA. If you don't, it's not the end of the world, since Cudafy supports GPGPU emulation. Emulation is good for debugging, but depending on how many threads you are trying to run in parallel, it can be painfully slow. If you have a normal PC, you can pick up an NVIDIA PCI Express CUDA GPU for very little money. Since the applications scale automatically, your app will run on all CUDA GPUs, from the minimal ones in some netbooks up to the dedicated high-end Tesla varieties.

You'll then need to go to the NVIDIA CUDA website and download the CUDA 5.5 Toolkit. Install this in the default locations. Next, ensure that you have the latest NVIDIA drivers; these can be obtained through NVIDIA Update or from the NVIDIA website. The project here was built using Visual Studio 2010 and the language is C#, though VB and other .NET languages should be fine. Visual Studio Express can be used without problem, but bear in mind that only 32-bit apps can be created with it. To get this working with Express, you need to do the following (the Express editions are available from the Visual Studio Express website):

- Download and install Visual C++ 2010 Express (the NVIDIA compiler requires this)

- Download and install Visual C# 2010 Express

- Download and install the CUDA 5.5 Toolkit

- Ensure that the C++ compiler (cl.exe) is on the search path (the PATH environment variable)

- Reboot if necessary

Setting up NVCC is actually the toughest stage of the whole process, so please persevere. Read any errors you get carefully; most likely they are caused by cl.exe not being found or the CUDA Toolkit not being installed.

Finally, to make proper use of Cudafy, a basic understanding of the CUDA architecture is required. There is no real getting around this. It is not the goal of this tutorial to provide this, so I refer you to CUDA by Example by Jason Sanders and Edward Kandrot.

Using the Code

The downloadable code provides a VS2010 C# 4.0 console application including the Cudafy libraries. For more information on the Cudafy.NET SDK, please visit the website. The application performs some basic operations on the GPU. A single reference to Cudafy.NET.dll is added. For translation to CUDA C, Cudafy relies on the excellent ILSpy .NET decompiler from SharpDevelop and on Mono.Cecil from Jb Evain. The libraries ICSharpCode.Decompiler.dll, ICSharpCode.NRefactory.dll, IlSpy.dll and Mono.Cecil.dll contain this functionality. Cudafy.NET currently relies on very slightly modified versions of the ILSpy 1.0.0.822 libraries.

There are various namespaces to contend with. In Program.cs, we use the following:

using Cudafy;
using Cudafy.Host;
using Cudafy.Translator;

To mark the methods you wish to run on the GPU, apply the Cudafy attribute. The simplest possible function you can run on your GPU is the method kernel; add is slightly more complex and more useful:

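[Cudafy]
public static void kernel()
{
    // Does nothing: the simplest possible GPU method
}

[Cudafy]
public static void add(int a, int b, int[] c)
{
    // GPU kernels cannot return a value, so the result is written to an array
    c[0] = a + b;
}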

These methods can be converted into GPU code from within the same application by using CudafyTranslator. This is a wrapper around the ILSpy-derived CUDA language: it converts .NET code into CUDA C and encapsulates this, along with reflection information, in a CudafyModule. The CudafyModule has a Compile method that wraps the NVIDIA NVCC compiler. The output of the NVCC compilation is what NVIDIA calls PTX, a form of intermediate language for the GPU that allows multiple generations of GPU to work with the same applications. This is also stored in the CudafyModule. A CudafyModule can also be serialized to and deserialized from XML; the default extension of such files is *.cdfy. These XML files are useful because they let us speed things up by avoiding unnecessary compilation on future runs. The CudafyModule has methods for verifying checksums: essentially, we test whether the .NET code has changed since the cached XML file was created. If not, we can safely assume that the deserialized CudafyModule instance and the .NET code are in sync.

In this example project, we use the 'smart' Cudafy() method on the CudafyTranslator which does caching and type inference automagically. It does the equivalent of the following steps:

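// Try to load a cached module from Program.cdfy (null if missing or unreadable)
CudafyModule km = CudafyModule.TryDeserialize(typeof(Program).Name);
// Re-translate if there is no cache or the .NET code has changed since it was written
if (km == null || !km.TryVerifyChecksums())
{
    km = CudafyTranslator.Cudafy(typeof(Program));
    km.Serialize(); // cache the module for the next run
}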

In the first line, we attempt to deserialize from an XML file called Program.cdfy; the extension is added automatically if it is not explicitly specified. If the file does not exist or deserialization fails for some reason, null is returned (in contrast, the Deserialize method throws an exception if it fails). If null is not returned, we then verify the checksums using TryVerifyChecksums(), which returns false if the file was created from an assembly with a different checksum. If either check fails, we cudafy again, this time explicitly passing the type we wish to cudafy (multiple types can be specified here). Finally, we serialize the module for future use.
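The checksum idea behind this cache can be illustrated without Cudafy or a GPU. Below is a minimal sketch, assuming a hypothetical cache entry that stores the translated code together with a SHA-256 hash of the assembly bytes it was generated from. CachedModule, ModuleCache and GetOrTranslate are illustrative names only; this is not Cudafy's .cdfy format or its actual checksum algorithm.

```csharp
using System;
using System.Security.Cryptography;

// Hypothetical cache entry: NOT the real CudafyModule or .cdfy layout.
public sealed class CachedModule
{
    public string SourceChecksum { get; }
    public string TranslatedCode { get; }

    public CachedModule(string sourceChecksum, string translatedCode)
    {
        SourceChecksum = sourceChecksum;
        TranslatedCode = translatedCode;
    }
}

public static class ModuleCache
{
    // Hash the bytes of the assembly the GPU methods were taken from.
    public static string Checksum(byte[] assemblyBytes)
    {
        using (var sha = SHA256.Create())
            return Convert.ToBase64String(sha.ComputeHash(assemblyBytes));
    }

    // Same shape as the TryDeserialize/TryVerifyChecksums pattern: reuse the
    // cached entry only if it exists AND was built from identical bytes;
    // otherwise run the expensive translate step again.
    public static CachedModule GetOrTranslate(CachedModule cached, byte[] assemblyBytes,
                                              Func<string> translate)
    {
        string current = Checksum(assemblyBytes);
        if (cached == null || cached.SourceChecksum != current)
            return new CachedModule(current, translate());
        return cached;
    }
}
```

Keying the cache on a hash of the compiled assembly, rather than on file timestamps, means any change to the .NET code invalidates the cache, while an unchanged build always reuses it.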

Now that we have a valid module, we can proceed. To load the module, we first need to get a handle to the desired GPU. GetDevice() is overloaded and can take a device ID to select a GPU in systems with more than one; it returns an instance of the abstract GPGPU class, from which CudaGPU and EmulatedGPU derive. The eGPUType enumeration can be Cuda or Emulator. Getting the device and loading the module are done as follows:

_gpu = CudafyHost.GetDevice(eGPUType.Cuda);
_gpu.LoadModule(km);

To run the method kernel, we need to use the Launch method on the GPU instance (Launch is a fancy GPU way of saying start or, in .NET parlance, Invoke). There are many overloaded versions of this method. The most straightforward and cleanest to use are those that take advantage of the .NET 4.0 Dynamic Language Runtime (DLR).

_gpu.Launch().kernel();

In this case Launch takes zero arguments, which means one thread is started on the GPU, and it runs the kernel method, which also takes zero arguments. An alternative, non-dynamic way of launching is shown below. Its advantage is that it is faster the first time round, since the DLR can add up to 50 ms doing its wizardry. The two arguments of value 1 are the number of blocks in the grid and the number of threads per block, giving 1 * 1 = 1 thread in total; more on this later.

_gpu.Launch(1, 1, "kernel");

There are a number of other examples provided, including the compulsory 'Hello, world' (written in Unicode on a GPU). Of greater interest is the vector addition code, since working on large data sets is the bread and butter of GPGPU. Our method addVector is defined as:

[Cudafy]
public static void addVector(GThread thread, int[] a, int[] b, int[] c)
{
    int tid = thread.blockIdx.x;
    if (tid < a.Length)
        c[tid] = a[tid] + b[tid];
}

Parameters a and b are the input vectors and c is the resultant vector. GThread is the surprise component. Since the GPU will launch many threads of addVector in parallel, we need to be able to identify within the method which thread we're dealing with. This is achieved through CUDA's built-in variables (such as blockIdx and threadIdx), which in Cudafy are accessible via GThread.
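To make the role of GThread concrete, here is a CPU-only sketch that imitates launching addVector as a grid of blocks with one thread each. Dim3, FakeThread, LaunchBlocks and AddOnCpu are hypothetical stand-ins written for illustration; they are not Cudafy's GThread or its emulator, and the blocks run one after another rather than in parallel.

```csharp
using System;

// Hypothetical stand-in for CUDA's 3-component index type; not a Cudafy type.
public struct Dim3
{
    public int x, y, z;
}

// Hypothetical stand-in for GThread: only the block index is modelled.
public sealed class FakeThread
{
    public Dim3 blockIdx; // index of the block this "thread" runs in
}

public static class VectorEmulation
{
    // Same body as the [Cudafy] addVector method, with FakeThread in place of GThread.
    public static void addVector(FakeThread thread, int[] a, int[] b, int[] c)
    {
        int tid = thread.blockIdx.x;
        if (tid < a.Length)
            c[tid] = a[tid] + b[tid];
    }

    // Runs the kernel once per block, sequentially, mimicking a launch of
    // gridSize blocks containing one thread each.
    public static void LaunchBlocks(int gridSize, Action<FakeThread> kernel)
    {
        for (int bx = 0; bx < gridSize; bx++)
            kernel(new FakeThread { blockIdx = new Dim3 { x = bx } });
    }

    // Host-side driver. gridSize may exceed a.Length: the tid guard in
    // addVector makes the surplus blocks do nothing.
    public static int[] AddOnCpu(int[] a, int[] b, int gridSize)
    {
        var c = new int[a.Length];
        LaunchBlocks(gridSize, t => addVector(t, a, b, c));
        return c;
    }
}
```

Calling AddOnCpu with a grid larger than the arrays shows why the `if (tid < a.Length)` check matters: without it the extra blocks would index past the end of the arrays.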

If you have an array a of length N on the host and you want to process it on the GPU, then you need to transfer the data there. We use the CopyToDevice method of the GPU instance.

int[] a = new int[N];
int[] dev_a = _gpu.CopyToDevice(a);

What is interesting here is the return value of CopyToDevice. It looks like an array of integers; however, if you were to hover your mouse over it in the debugger, you'd see it has a length of zero, not N. What has been returned is effectively a pointer to the data on the GPU. It is only meaningful in GPU code (the methods you marked with the Cudafy attribute) and as an argument to the GPU instance's methods. The GPU instance keeps track of these pointers. Transferring data to the GPU is all very well, but we may also need memory on the GPU for results or intermediate data. For this, we use the Allocate method. Below is the code to allocate N integers on the GPU.

int[] dev_c = _gpu.Allocate<int>(N);

Launching the addVector method is more complex and requires arguments to specify how many threads, as well as arguments for the target method itself.

_gpu.Launch(N, 1).addVector(dev_a, dev_b, dev_c);

Threads are grouped into blocks, and blocks are grouped into a grid. Here we launch N blocks, each containing 1 thread. Note that addVector has a GThread parameter, but there is no need to pass it as an argument. As stated earlier, GThread is the Cudafy equivalent of the built-in CUDA variables, and we use it to identify the thread ID. The diagram below shows an example with a grid containing a 2D array of blocks where each block contains a 2D array of threads.
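Launching N blocks of one thread is fine for a demo, but kernels usually run many threads per block and combine the built-in variables into a global index. A common CUDA idiom is threadIdx.x + blockIdx.x * blockDim.x, often paired with a "grid-stride" loop so that a fixed-size grid can cover an array of any length. The sketch below checks that arithmetic on the CPU by enumerating every (block, thread) pair; IndexMath and its methods are hypothetical helper names, and nothing here calls Cudafy.

```csharp
using System;

public static class IndexMath
{
    // Global 1D index of a thread, as typically computed inside a CUDA kernel:
    // threadIdx.x + blockIdx.x * blockDim.x
    public static int GlobalIndex(int blockIdx, int threadIdx, int blockDim)
        => threadIdx + blockIdx * blockDim;

    // Counts how often each element 0..n-1 is touched when a grid of
    // gridDim blocks x blockDim threads walks the array with a grid-stride loop.
    public static int[] VisitCounts(int n, int gridDim, int blockDim)
    {
        var visits = new int[n];
        int stride = gridDim * blockDim; // total number of threads in the grid
        for (int b = 0; b < gridDim; b++)
            for (int t = 0; t < blockDim; t++)
                for (int i = GlobalIndex(b, t, blockDim); i < n; i += stride)
                    visits[i]++;
        return visits;
    }
}
```

For example, with 4 blocks of 32 threads (128 threads in total) and n = 1000, each thread handles seven or eight elements and every element is visited exactly once.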

Another point of note is the FreeAll method on the GPU instance. Memory on a GPU is typically more limited than that of the host, so use it wisely. You should free memory explicitly; otherwise the GPU instance's destructor (finalizer) will clear up GPU memory, but only once the instance has gone out of scope and been garbage collected.
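One way to make explicit freeing hard to forget is a try/finally block around the GPU work. The sketch below uses a hypothetical FakeDevice class that merely tracks allocations in place of a real GPU instance, so it runs without Cudafy or a GPU; in a Cudafy application the finally block would call FreeAll on the GPU instance instead.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a GPU instance: it only tracks live allocations.
public sealed class FakeDevice
{
    private readonly List<int[]> _live = new List<int[]>();

    public int LiveAllocations => _live.Count;

    public int[] Allocate(int n)
    {
        var buffer = new int[n];
        _live.Add(buffer);
        return buffer;
    }

    public void FreeAll() => _live.Clear();
}

public static class CleanupDemo
{
    // Device memory is released even if the work throws, instead of
    // waiting for the garbage collector to run a finalizer.
    public static void RunWork(FakeDevice gpu, int n, bool fail)
    {
        try
        {
            int[] dev_c = gpu.Allocate(n);
            if (fail) throw new InvalidOperationException("simulated kernel failure");
            dev_c[0] = 42;
        }
        finally
        {
            gpu.FreeAll();
        }
    }
}
```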

The final example is somewhat more complex and illustrates the use of structures and multi-dimensional arrays. In the file Struct.cs, ComplexFloat is defined:

[Cudafy]
public struct ComplexFloat
{
    public ComplexFloat(float r, float i)
    {
        Real = r;
        Imag = i;
    }

    public float Real;
    public float Imag;

    public ComplexFloat Add(ComplexFloat c)
    {
        return new ComplexFloat(Real + c.Real, Imag + c.Imag);
    }
}

The complete structure will be translated; it is not necessary to put attributes on its members. We can freely make use of this structure in both host and GPU code. In this case, we initialize a 3D array of these structures on the host and then transfer it to the GPU. On the GPU, we do the following:

[Cudafy]
public static void struct3D(GThread thread, ComplexFloat[,,] result)
{
    int x = thread.blockIdx.x;
    int y = thread.blockIdx.y;
    int z = 0;
    while (z < result.GetLength(2))
    {
        result[x, y, z] = result[x, y, z].Add(result[x, y, z]);
        z++;
    }
}

This time the threads are launched in a 2D grid, so each thread is identified by an x and a y component (the grid's dimensions match the x and y dimensions of the array). Each thread then handles all the elements in the z dimension, whose length is obtained with the GetLength method, just as for an ordinary .NET array. The calculation adds each element to itself.
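As a rough mental model (not how the GPU actually schedules work), struct3D computes the same result as the sequential CPU code below. The two outer loops stand in for the 2D grid of blocks and the inner loop is each thread's walk along z. The sketch copies ComplexFloat without the [Cudafy] attribute so it compiles without a Cudafy reference; Struct3DEmulation and Struct3DOnCpu are hypothetical names.

```csharp
using System;

// Host-only copy of the article's ComplexFloat ([Cudafy] attribute omitted).
public struct ComplexFloat
{
    public ComplexFloat(float r, float i)
    {
        Real = r;
        Imag = i;
    }

    public float Real;
    public float Imag;

    public ComplexFloat Add(ComplexFloat c) => new ComplexFloat(Real + c.Real, Imag + c.Imag);
}

public static class Struct3DEmulation
{
    // x and y loops play the role of blockIdx.x and blockIdx.y;
    // the z loop is the per-thread while loop in struct3D.
    public static void Struct3DOnCpu(ComplexFloat[,,] result)
    {
        for (int x = 0; x < result.GetLength(0); x++)
            for (int y = 0; y < result.GetLength(1); y++)
                for (int z = 0; z < result.GetLength(2); z++)
                    result[x, y, z] = result[x, y, z].Add(result[x, y, z]);
    }
}
```

A CPU reference like this is handy for checking the array copied back from the GPU: adding each element to itself should simply double both its Real and Imag parts.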

License

The Cudafy.NET SDK is available as a dual-licensed software library. The LGPL version is suitable for the development of proprietary or open source applications if you can comply with the terms and conditions contained in the GNU LGPL version 2.1. Thanks.

Final Words

I hope you've been encouraged to look further into the world of GPGPU programming. Most PCs already contain a massively powerful co-processor that can complement the CPU and provide potentially huge performance gains. In fact, the gains are sometimes so large that they no longer make any sense to the uninitiated; I know of developers who deliberately understate the improvement... The trouble has been in making use of that power. Through NVIDIA's efforts with CUDA, this is getting easier, and Cudafy.NET takes this further by allowing .NET developers to access this world too.

There is much more to all this than what is covered in this short article. Please obtain a copy of a good book on the subject or look up the tutorials on NVIDIA's website. The background knowledge will let you understand Cudafy better and allow you to build more complex algorithms. Also do visit the Cudafy website to download the SDK. The SDK includes two large example projects featuring, amongst others, ray tracing, ripple effects and fractals.

History

- 27th May, 2011
- 16th June, 2011
  - Updated to use Cudafy.NET V1.2
  - Added some photos
- 12th July, 2011
  - Updated to use Cudafy.NET V1.4
- 16th September, 2013
  - Updated to use Cudafy.NET V1.26