Introduction

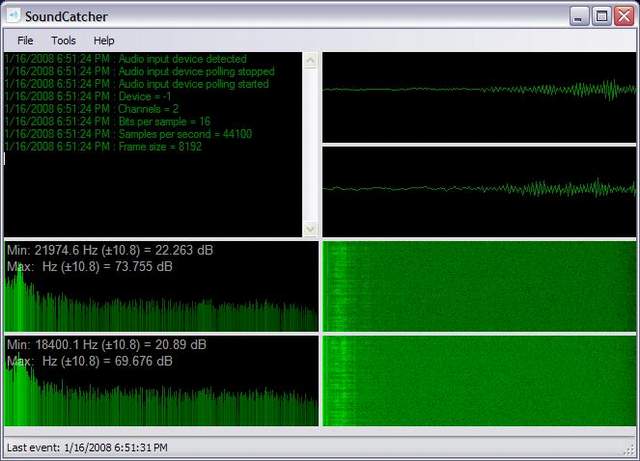

This project demonstrates a waterfall spectrogram and the use of statistical data to trigger events in near real time. The code builds on my previous submission (SoundViewer) and uses the Wave classes developed by Ianier Munoz.

Using the Code

Audio is supplied by the default input device, which is typically the microphone. Events are triggered when the audio amplitude exceeds a threshold value, which can be set under Options on the menu bar. To make this more useful, I've added the ability to save the stream to disk, which results in a nice sound-activated recorder.
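The threshold test described above can be sketched roughly as follows. This is a minimal illustration rather than the project's actual code: it assumes the input arrives as 16-bit little-endian PCM, and the names `AmplitudeTrigger`, `PeakAmplitude`, and `ExceedsThreshold` are hypothetical.

```csharp
using System;

public static class AmplitudeTrigger
{
    // Returns the peak absolute sample value in a buffer of 16-bit
    // little-endian PCM audio (an assumed format), normalized to 0.0 .. 1.0.
    public static double PeakAmplitude(byte[] buffer, int byteCount)
    {
        int peak = 0;
        for (int i = 0; i + 1 < byteCount; i += 2)
        {
            short sample = (short)(buffer[i] | (buffer[i + 1] << 8));
            // Widen to int first: Math.Abs on short.MinValue would throw.
            int magnitude = Math.Abs((int)sample);
            if (magnitude > peak) peak = magnitude;
        }
        return peak / 32768.0;
    }

    // True when the buffer is loud enough to trigger an event.
    // threshold is a hypothetical setting in the same 0.0 .. 1.0 range.
    public static bool ExceedsThreshold(byte[] buffer, int byteCount, double threshold)
    {
        return PeakAmplitude(buffer, byteCount) > threshold;
    }
}
```

A recorder built on this idea would call `ExceedsThreshold` on each captured buffer and start writing to disk on the first buffer that returns true.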

Points of Interest

To draw the spectrogram fast enough for near real-time operation, I needed to write directly to the bitmap's memory using unsafe code:

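    // Assumes a 32-bit pixel format (4 bytes per pixel, stored as B, G, R, A).
    PixelFormat format = canvas.PixelFormat;
    // ReadWrite: pixels are written, and rows that are not drawn keep their contents.
    BitmapData data =
        canvas.LockBits(new Rectangle(0, 0, width, height), ImageLockMode.ReadWrite, format);
    int stride = data.Stride;
    int offset = stride - width * 4;    // padding bytes at the end of each row
    try
    {
        unsafe
        {
            byte* pixel = (byte*)data.Scan0.ToPointer();
            // y must stay below height; a row at y == height lies outside the locked buffer.
            for (int y = 0; y < height; y++)
            {
                if (y < _fftLeftSpect.Count)
                {
                    // The most recent spectrum is drawn on the top row.
                    double[] spectrum = (double[])_fftLeftSpect[_fftLeftSpect.Count - y - 1];
                    for (int x = 0; x < width; x++, pixel += 4)
                    {
                        // Scale the x coordinate to an FFT bin index.
                        double amplitude =
                            spectrum[(int)(((double)(_fftLeft.Length) / (double)(width)) * x)];
                        double color = GetColor(min, max, range, amplitude);
                        pixel[0] = (byte)0;        // blue
                        pixel[1] = (byte)color;    // green
                        pixel[2] = (byte)0;        // red
                        pixel[3] = (byte)255;      // alpha (opaque)
                    }
                    pixel += offset;
                }
            }
        }
    }
    catch (Exception ex)
    {
        Console.WriteLine(ex.ToString());
    }
    finally
    {
        // Always release the lock, even if drawing fails.
        canvas.UnlockBits(data);
    }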
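The `GetColor` helper called in the loop is not shown here. As a rough idea of what such a mapping might look like, the sketch below linearly scales an amplitude to a 0 to 255 intensity. It is an assumption, not the original implementation: the name `MapToIntensity` is hypothetical, and it computes the range as `max - min` rather than taking it as a parameter.

```csharp
using System;

public static class ColorScale
{
    // Hypothetical stand-in for GetColor: linearly maps an amplitude in
    // [min, max] to an intensity in [0, 255], clamping out-of-range values.
    public static double MapToIntensity(double min, double max, double amplitude)
    {
        double range = max - min;
        if (range <= 0.0) return 0.0;                 // flat or invalid range: draw black
        double normalized = (amplitude - min) / range;
        if (double.IsNaN(normalized)) return 0.0;     // guard against NaN input
        if (normalized < 0.0) normalized = 0.0;
        if (normalized > 1.0) normalized = 1.0;
        return normalized * 255.0;
    }
}
```

Clamping matters because the result is cast to `byte` in the drawing loop, and converting an out-of-range `double` to an integer type in an unchecked context yields an unspecified value.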

I noticed that the results vary wildly depending on the hardware and associated drivers being used.

Some things I'd like to experiment with further when I get the time:

- Using the frequency domain to produce the equivalent of a "motion" detector

- Using the spectrogram for sound identification

- Improving performance and robustness

History