Introduction

When we sit down to solve a computational problem, the most obvious solution is often the worst. Let's look at Bubble Sort, for example. It is likely the first algorithm to appear to someone who is just beginning to think about the problem of sorting, given a computer to do the work: start with the first two items, compare them, swap them if the order in the list is reversed, and move to the next item. This algorithm performs equally "well" regardless of the initial condition of the list it is used to sort. Given n items in a list, there will always need to be n2-n comparisons. Usually, we'd move on from this algorithm quickly, noting that this O(n2) performance can get out of hand very, very quickly, and this makes Bubble Sort virtually useless in the face of much better algorithms which are not too much more difficult to implement.

There is something we can use it for, however - testing how quickly we can run a bad algorithm. Since performance degrades geometrically (specifically: quadratically), we see performance differences between implementations more clearly, since doubling the number of items will much more than double the time that the algorithm takes. Plus, Bubble Sort is very easy to implement, and when you are hand-coding SSE2 assembly instructions for the first time, this is a big help.

Background

I'm working on a set of computational geometry algorithms for NetTopologySuite, and some of them have O(n2) performance. Since the algorithms are mostly numerical and perform sorting, and alternative algorithms appear to be hard to implement, I wanted to find a way to take advantage of modern hardware to speed up the operations. Since SSE2 is present on most peoples' computers these days (both Intel and AMD), it seemed like a good choice to work with.

I also found, while investigating various data structures to hold numbers, and to my dismay, that Java does a better job of sorting arrays of numbers, since apparently (after browsing the process' code pages during run-time) Sun's HotSpot Java VM takes advantage of SSE2 instructions, and MS's CLR JIT compiler doesn't. It was a shocker. I wanted to set things right again, and out came the C++/CLI guns to regain some ownership on the platform. Et Voilá: a managed assembly which smokes all comers. Too bad it isn't verifiable, though. I think MS has some work to do on the JIT compiler in this area to get the JIT to create more specialized code, especially for vector arrays.

Preparing to use SSE2

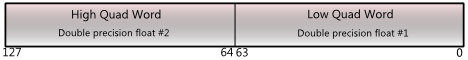

The first thing to know when writing to SSE2 instructions is that we will be using registers and memory locations which are 16 bytes (128 bits) wide. The names of the registers are XMM0 through XMM7 (64-bit systems also have XMM8 through XMM15). They are usually divided into two 64-bit regions called "quadwords" (for double precision floating-point values). They can also be divided into four 32-bit regions called "doublewords" (for single precision floating-point values). Similar divisions exist for integers, words, and bytes. The whole length is called a "double quadword". The registers look like this:

If you are used to the general-purpose registers on the x86 platform (EAX, EBX, etc.), these registers are four times larger.

In order to move data in and out of these registers, you need1 to work with a block of memory which is aligned to a 16-byte (128-bit) boundary. The MS CRT has a function to do this for us: _aligned_malloc. This gives us back a void*, which we can cast to a double* to get our number list.

Plodding along with the code

The next thing I found out is that there is very little guidance in writing the SSE2 assembly. Sure, there are some simple examples of basic operations usage or data movement, as well as examples about fairly advanced numerical methods on the 'Net (and here at CodeProject), but I couldn't find any which shows how to construct a basic general-purpose algorithm based on the instructions.

The Intel processor manuals were essential, although they were much more about reference, and any usage had to be inferred after much trial and error. Further, having done regular 32-bit x86 assembly programming, working with SSE2 was like a separate world in the processor altogether. There are a few ways to communicate from the XMM registers to the general registers (like EAX, EBX, etc.). Eventually, I found the MOVMSKPD command, and realized that compares (like the CMPLTPD I used) set all the bits in a quadword, and the mask of MOVMSKPD extracts one of them into a given register. I was tripping up over the use of the sign bit as being significant somehow - sure it is the sign bit in an IEEE 754 double, but it is also just a bit, and I can use it for control flow. After struggling with this, I found that this is exactly what is described in the Intel Basic Architecture manual (Sec. 11.6.12).

I also had to go through three or four approaches before I figured out how to move data around from register to register. I mostly struggled with SHUFPD, which shuffles values among registers and high and low quadwords, UNPCKHPD, which can copy the high quadword from two registers into a destination, and UNPCKLPD, which works on the low quadwords. Then, I hit upon using MINPD and MAXPD, which perform compares and moves the values. Combining this with a SHUFPD operation to get two registers with the quadwords reversed allowed me to compare and move data in just a few operations and completely with registers. I suspect this approach won't work with smaller values, like single precision floating point values or integers, however, since the number of permutations of values exceed the number of registers available.

The code

The code provided consists of a method which contains the implementation of the Bubble Sort algorithm in an "ASM" code block and a managed C++/CLI framework around it to run it and output some interesting stats to the console. This approach also highlights that it is possible to use C++/CLI to hand code assembly blocks and easily use them from any managed language like C#, VB.NET, or IronPython.

After setting up an aligned buffer (see above), we can get the address of the list and set up our loops:

_asm

{

pusha

begin:

mov esi, values

mov ecx, 0

outer_loop:

cmp ecx, byteCount

je end_outer

mov edx, byteCount

sub edx, ecx

mov ebx, 0

inner_loop:

cmp edx, 16

je end_inner

Then comes the body, where the SSE2 registers and instructions are used. There are generally two variants of each SSE2 instruction: a packed variant for working on multiple data values, and a scalar variant for working on single values. We want the packed variants so we can compare two numbers at once. The packed variants end in "pd" for "packed double".

First, we move data in from the buffer:

movapd xmm0, [esi+ebx]

movapd xmm1, xmm0

movapd xmm2, [esi+ebx+16]

movapd xmm3, xmm2

Now, we have two copies of each of the first four entries in the list: the first two in the XMM0 and XMM1 registers, and the second two in the XMM2 and XMM3 registers. The XMM0 and XMM1 registers look like this:

Since we are not sure that these two values are ordered within the register, we need to compare them. To do this, we will swap the doubles within one of the registers for each pair (this is why we loaded each pair into two registers):

shufpd xmm1, xmm1, 1

shufpd xmm3, xmm3, 1

The operation SHUFPD stands for "Shuffle Packed Double". It takes an "immediate operand" which gives it different behavior depending on the value. In this case, with an immediate operand of 1, SHUFPD picks the low quadword of the destination to go into the high quadword of the destination and the low quadword of the source to go into the high quadword of the destination, like this:

When called with the same register, SHUFPD swaps the doubles within the register.

The registers XMM0, XMM1, XMM2, and XMM3 now exist in a condition such that some choice of XMM0 or XMM1 and some choice of XMM2 or XMM3 result in four sorted elements. The trick was to find SSE2 instructions which would make the decision on which pair of registers to keep. After rummaging through the Intel processor manuals for a few hours over a month or so, I found some that work: MINPD and MAXPD. They will keep the minimum or the maximum of the values in a given pair of XMM registers. However, in doing this, they overwrite the source register. The only way I could figure out how to arrange the min and max comparisons in order to get the minimum values into the [0-1] registers and the maximum values into the [2-3] registers was to save off a temporary copy of the XMM0 register. I put this into XMM4. I am not happy about this, since I had secretly hoped to interleave two sets of the algorithm and use the XMM4-XMM7 registers for this. With this saving off of the XMM0 register to XMM4, this won't work. Sure, I could use a memory location to store it, but I'm trying to avoid that (register to register is the fastest a processor can get). On the other hand, doing twice the work in the same pass might be worth it, but I haven't tested it yet. So, here is the code to save it off:

movapd xmm4, xmm0

Now comes a series of comparisons to push the smallest values into XMM0 and XMM1 and the largest into XMM2 and XMM3:

minpd xmm0, xmm2

minpd xmm1, xmm2

maxpd xmm2, xmm4

maxpd xmm3, xmm4

The code isn't quite obvious, so let's look at it a bit. The instruction MINPD compares each double in two XMM registers in order - meaning the low 64 bits of the source register with the low 64 bits of the destination, and the high 64 bits of the source with the high 64 bits of the destination. It puts the minimum value of each of these comparisons in the source register. Since XMM0 and XMM1 are reflections of each other, using MINPD on each of these with XMM2 will result in the smallest values in both the high and low quadwords of the XMM2 register and the XMM0 register to be stored in XMM0 and XMM1. The same thing happens with MAXPD, only it, rather more obviously, chooses the maximum values. Since XMM0 and XMM1 were possibly completely overwritten by the MINPD compare with XMM2, we use the XMM4 register that we saved off at the beginning.

Finally, we need to compare intra-register to make sure the two double values within a register are ordered:

order_min0:

movapd xmm4, xmm0

shufpd xmm4, xmm4, 1

cmpltpd xmm4, xmm0

movmskpd eax, xmm4

cmp eax, 2

je order_min1

shufpd xmm0, xmm0, 1

order_min1:

movapd xmm4, xmm1

shufpd xmm4, xmm4, 1

cmpltpd xmm4, xmm1

movmskpd eax, xmm4

cmp eax, 2

je finish_min

shufpd xmm1, xmm1, 1

finish_min:

minpd xmm0, xmm1

order_max0:

movapd xmm4, xmm2

shufpd xmm4, xmm4, 1

cmpltpd xmm4, xmm2

movmskpd eax, xmm4

cmp eax, 2

je order_max1

shufpd xmm2, xmm2, 1

order_max1:

movapd xmm4, xmm3

shufpd xmm4, xmm4, 1

cmpltpd xmm4, xmm3

movmskpd eax, xmm4

cmp eax, 2

je finish_max

shufpd xmm3, xmm3, 1

finish_max:

maxpd xmm2, xmm3

The trick here is to use CMPLTPD (Compare Less Than Packed Double) to set the high and/or low 64 bits of an XMM register to all 1 or all 0, depending on if the compare result is true or false, respectively. One of these bits in each quadword (the sign bit in the double floating point value) is then extracted via one of the few operations that allow using both general and XMM registers: MOVMSKPD to EAX, where the resulting value will be two bits: 0, 1, 2, or 3. The high bit corresponds to the high quadword of the XMM register operand, and the low bit corresponds to the low quadword, like this:

The value of 2 in EAX (bits: 10) means that the high quadword is less than the low quadword, which is what we want. If it is any other value, we swap them via a call to SHUFPD with an immediate operand of 1. When the values are swapped, we can compare them with the original register to determine which is lower, and therefore which ordering to keep.

At this point, the registers XMM0 and XMM2 are ordered correctly, and we save them back to the data buffer, moving on to the next pair in the Bubble Sort.

Results

The sort appears to be almost, but not quite, twice as fast as a scalar pointer implementation. This is about what we should expect, since the SSE2 version is making twice the compares in the same number of executions - which is the whole benefit of a vector processor, after all. This isn't true when dealing with a small number of items, say under 64. In this case, it appears that the overhead of SSE2 makes the pointer implementation faster. Perhaps, there are ways to eliminate this overhead by getting the processor to fetch pages from the RAM into the cache before processing them. The following shows a typical run:

If we compare a scalar implementation using pointers, we find the following timings:

Further investigation

I've made this code part of a project on CodePlex: Code Rule. The idea here is to implement algorithms across a number of languages / frameworks to help visualize the relative performance of algorithms and the machines they are run on.

Some of the more interesting things to try out with this example would be to use pre-fetching to control the caching of upcoming data, if the buffer is too big to fit in the processor's data cache. Exploiting the extra registers on a 64-bit system or the other cores in a multi-core machine would also be useful. Of course, this would ultimately be practical only if a better algorithm were implemented, like QuickSort.

Reference

I mostly used the Intel processor manuals, Volumes 1, 2A, and 2B to construct this example. These, and more, can also be found here.

Footnotes

- OK, you don't need to, however, you probably really, really want to, since it is a big performance hit otherwise.

History

- 2008-02-12 - Style edits.

- 2008-02-11 - Initial submission.