Introduction

This article is the first of a multi-part series that will cover the architecture and implementation of components needed to create and maintain a robust, scalable, high performance massive multiplayer online game server and game engine.

As the first article in a series about architecting and implementing a massive multiplayer online game server and engine, this article focuses on the design and implementation of a necessary component, responsible for transforming static virtual bits into a dynamic, living, breathing, interactive virtual world.

The Work Item and Event Scheduler

The component addressed in this article is the Scheduling Engine, also known as the Work Item and Event Scheduler. Although its name isn't very sexy, the functionality of this component serves as the foundation of what makes massive multiplayer online games so compelling: constantly evolving virtual worlds, and dynamic, real-time actor-world interaction.

As its name implies, the Work Item and Event Scheduler schedules, coordinates, and then executes actions that occur within the virtual world. However, the Scheduling Engine handles more than player-initiated actions; it schedules weather changes, drives night and day, and executes bits of code that define the behavior of non-player characters and objects. (For this reason, I will refer to any code scheduled for execution by the event scheduler as a work item.)

Virtual World Basics, 101

Massive multiplayer games consist of set and setting (a dynamic virtual world) and actors that can interact with and alter their set and setting. To provide players with an immersive and realistic experience, interaction with (and changes to) the virtual world need to take place in a way that provides the illusion of continuous time; in other words, everything that occurs in the virtual world must fit into some kind of believable flow of "virtual real-time".

There can be thousands of players concurrently interacting with a single virtual world. In addition to player-driven interactions, other automated changes to the virtual world need to occur. These automated changes range from non-player-character movements and behaviors to weather and time changes.

The Scheduling Engine

The role the scheduling engine plays in driving real-time change and creating the feel of an interactive, dynamic, virtual world is illustrated below:

The scheduling engine component itself includes only the SchedulingEngine and ScheduledWorkItemQueue boxes in the image above (highlighted in yellow), and is only responsible for two things:

- Scheduling work items for future execution, and

- Executing work items at their scheduled times.

Making 'Virtual Real Time' Believable

Below are the basic requirements for providing players with a believable real-time virtual world experience:

- Fantasy-based games often introduce a calculated delay between when a player begins casting a spell and when the spell effects occur. Or, we may want the time it takes for a character to draw his/her weapon to be dependent on character skill. Therefore, we need the capability to schedule an event for execution at a specific time. Since we may be dealing with short time intervals (such as the time it takes a player to issue a command and the execution of the resulting action), the actual execution of an action / work item needs to occur as closely as possible to its scheduled time. Furthermore, we need fairly fine-grained control over scheduled execution times - the time it takes a player's character to attack another character may vary depending on the type of attack being performed, the weapon used, and the character's speed, and may be further modified by environmental variables, character states, character effects, etc. These small modifications to attack time delays must be detectable and meaningful; the difference between a delay of 500ms between attacks and 600ms between attacks should be both noticeable and possibly meaningful in terms of fight outcome.

- The capability to cancel execution of a scheduled work item is a requirement that emerges when delays are introduced between initiation of an action and its execution. As an example, a player may begin casting a spell at a target. During the time it takes to cast the spell, several events might lead to cancellation: the character may change his/her mind about casting the spell, the target may move out of range, or (as in several popular online games), casting may be cancelled if another character successfully attacks the spell-caster.

- To maintain a steady illusion of real-time in the virtual world, execution of an event must not delay the execution of other concurrently scheduled events. If execution of one event delays execution of other scheduled events, the effect of virtual real time is lost, leading to a global slowdown ("lag").

- As player numbers increase and virtual worlds grow in size, the number of updates needed to keep the world running on 'virtual real time' skyrockets. For this reason, many existing massive multiplayer online games use multiple, loosely-connected game servers. By assigning each server a "part" of the virtual world to maintain, and by limiting the number of players on a single server, the number of updates required to keep the virtual world running in real-time is kept in check. However, when using multiple servers, inter-player cooperation and communication may be slowed or hindered (i.e., talking with group members playing on another server), and tricks or cumbersome methods (i.e., portals) may be required to transfer players across servers. Because of the limitations associated with inter-server communication, the experience of a cohesive, virtual world can be negatively impacted. This brings us to our last requirement: in order to support thousands of concurrent players without significantly limiting our world size (or requiring the use of multiple servers), the scheduling engine must be efficient enough to handle hundreds of thousands of events per second.

20,000 characters were scheduled to 'walk' every 90-120ms. 'Walking' involved checking character leg strength, updating the character's movement energy, changing the location of the character in the virtual world, and rescheduling the walk event to occur 90-120ms in the future. The scheduling engine presented in this article had no problem executing 172,730 of these 'walk' events per second (without maxing out the CPU on my laptop):

What Exactly Does the Scheduler Schedule?

The scheduler schedules and executes work items. A work item is best defined by three functional requirements:

- The work item needs to be able to do something.

- It would be convenient if a work item could calculate, set (and have reflective knowledge of) its own execution time.

- We also need the option to cancel work items that have not yet executed.

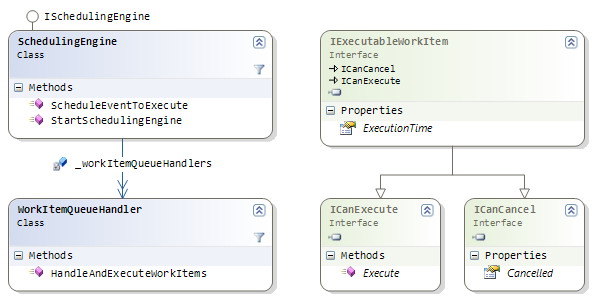

The interface IExecutableWorkItem was designed using these functional requirements, and defines the most basic nature of something that can be scheduled to execute at a specific time (or be cancelled prior to execution):

public interface IExecutableWorkItem

{

void Execute();

DateTime ExecutionTime { get; set; }

bool Cancelled { get; set; }

}

Scheduling Engine Requirements

Now that we have defined a work item, we can define the functional requirements for the scheduling engine itself. First, we need a way of initializing the scheduling engine and instructing it to begin handling scheduled work items (void StartSchedulingEngine()). Conversely, we also need a way to instruct the scheduling engine to stop processing scheduled events and to shut down. It would also be nice to know if the scheduling engine is currently running or if it is attempting to shut down (property: bool WantExit { get; set; }). Because the scheduling engine executes work items based on what it believes to be the current time, it would be helpful to have some way to access the engine's notion of the current date and time (property: DateTime CurrentDateTime { get; set; }). For informational purposes, it would be nice to keep track of how many work items have been executed and how many work items are currently queued (scheduled for execution some time in the future). It will also be helpful to have the ability to examine any exceptions that may be thrown during execution of a work item, and, last but not least, we need a way to schedule a work item / event for execution (void ScheduleEventToExecute(IExecutableWorkItem eventToExecute)). The ISchedulingEngine interface is presented below:

public interface ISchedulingEngine

{

void StartSchedulingEngine();

void ScheduleEventToExecute(IExecutableWorkItem eventToExecute);

long WorkItemsExecuted { get; set; }

DateTime CurrentDateTime { get; set; }

bool WantExit { get; set; }

ISupportsCount[] WorkItemQueueCounts { get; }

List<Exception> Exceptions { get; }

}

Scheduling Engine: Threading and Synchronization

The implementation of the scheduling engine also needs to support functional requirements #3 (execution of one work item must not delay the execution of other work items) and #4 (the scheduling engine must be able to execute hundreds of thousands of work items each second).

To prevent long-running work items delaying the execution of other scheduled work items, the scheduling engine will use multiple threads, each capable of retrieving and executing any current or past-due work item. Because:

- we want to be able to schedule new work items from any running thread, and

- multiple worker threads simultaneously execute scheduled work items, and efficiently synchronizing access to shared work item queues is critical.

Since we will be using a large number of worker threads, our synchronization method needs to be robust. Because we are also interested in maintaining high performance and high work-item-throughput, our method of synchronization must not become a performance bottleneck as the number of worker threads increase: every running thread needs a quick and streamlined way of scheduling new work items and accessing currently scheduled work items.

Without synchronized access to work item queues, several disaster scenarios are possible: multiple threads concurrently trying to schedule work items, and WorkItemQueueHandler threads attempting to dequeue the same work item that another thread is in the process of scheduling.

Simple Problem, Simple Solution

Our synchronization requirement is simple: prevent multiple threads from simultaneously executing critical sections of code that enqueue or dequeue work items from the scheduling queues. Our synchronization solution can be equally simple: create a lock that can only be owned by a single thread at any given time. After acquiring a lock, a single thread can begin executing the critical section of code and alter the shared work item queue. When the critical section of code is finished executing, the thread owning the lock releases the lock for acquisition by another lucky thread. In turn, another thread acquires the lock and can begin execution of the critical section of code. The Enqueue and Dequeue methods below demonstrate the use of a lock to synchronize access to the small, critical sections of code responsible for modifying a shared queue of items:

public void Enqueue(TListType item)

{

AquireLock();

{

_queue.Enqueue(item);

_count++;

}

ReleaseLock();

}

public bool Dequeue(out TListType item)

{

item = null;

AquireLock();

{

if (_count > 0)

{

item = _queue.Dequeue();

_count--;

}

}

ReleaseLock();

return (item != null);

}

High Performance Locking

There are many synchronization solutions available in .NET (Monitor.Enter, ReaderWriterLock, lock(), etc.) that regulate access to shared resources and prevent concurrent execution of critical code blocks. However, as you might have noticed in the above Enqueue and Dequeue methods, I forged my own path and created a Lockable class to enforce single-thread execution of critical code sections. The Lockable class provides a faster alternative to synchronization mechanisms such as lock() under load conditions (see below for performance metrics). The Lockable class uses the atomic Interlocked.CompareExchange operation in conjunction with a spin/sleep/retry mechanism to ensure that only one thread has ownership of a lock at any given time.

Priorities and considerations for designing this locking mechanism included:

- Minimize the number of calls to

Interlocked.CompareExchange, since it's a fairly expensive operation. - The sections of code that require single-thread access should not perform a large amount of work while owning the lock. Therefore, make the assumption that the lock will usually be free and can be successfully acquired. When this assumption is true, the code should follow its optimal execution path (i.e., the least amount of work should be performed when the lock is free and can be immediately obtained).

- It is the programmer's responsibility to know how to use this lock and to be familiar with its many shortcomings (see shortcomings below).

The AquireLock() method is defined below:

public void AquireLock()

{

if (Interlocked.CompareExchange(ref _lock, 1, 0) == 1)

{

int n = 0;

while (_lock == 1)

{

if (n++ > SpinCycles)

{

Interlocked.Increment(ref _conflicts);

n = 0;

Thread.Sleep(0);

}

}

while (Interlocked.CompareExchange(ref _lock, 1, 0) == 1)

{

n = 0;

while (_lock == 1)

{

if (n++ > SpinCycles)

{

Interlocked.Increment(ref _conflicts);

n = 0;

Thread.Sleep(0);

}

}

}

}

}

Many safety features found in other locking mechanisms were intentionally omitted to improve performance. Due to lack of safety features, this locking mechanism has several functional shortcomings:

- A lock cannot be acquired twice, even if the lock is requested again by the same thread that already owns the lock.

- There is no

try/catch/finally logic to ensure ReleaseLock(); is called. This means that any exception raised between the AquireLock(); and ReleaseLock(); statements will render the lock unobtainable (unless measures are implemented to manually release the lock). - The lock is not optimized for efficient acquisition of multiple (nested) locks. Inconsistent nested locking can lead to deadlocks and other serious problems.

Performance Results - Lockable vs. lock()

Included in the solution is a simple program I wrote to compare the relative performance of the Lockable class with the standard .NET lock() method, under conditions similar to what we expect the Scheduling Engine to encounter. These conditions are:

- Multiple worker threads will be continuously attempting to retrieve and execute work items, and

- Work items will tend to be scheduled at a moderate but consistent pace.

To test the relative performance under these conditions, multiple worker threads are employed to execute locked enqueue and dequeue operations against a shared (static) queue. Specifically, half of the worker threads are assigned to enqueue items to the shared (static) queue in short bursts of activity, while the remaining workers continuously dequeue items from the same shared queue. The source code used to obtain the performance metrics below is included in the solution source.

In both the Lockable and lock() tests, half of the worker threads executed the Enqueue method (below), while the other half of the worker threads executed the Dequeue method. The test code using lock() differs from the test code using Lockable only by the synchronization method used.

static class Lockable = new Lockable();

static object _lockObject = new object();

public static void Enqueue()

{

for (int n = 0; n < 1000; n++)

{

for (int i = 0; i < 20; i++)

DoEnqueue(i + n);

Thread.Sleep(0);

}

}

public static void Dequeue()

{

for (int n = 0; n < 1000; n++)

{

while (_testQueue1.Count > 0)

DoDequeue();

}

}

For the Lockable test, the following DoEnqueue and DoDequeue methods were used:

private static void DoEnqueue(int n)

{

_lock.AquireLock();

{

_testQueue1.Enqueue(n);

}

_lock.ReleaseLock();

}

private static void DoDequeue()

{

object o;

_lock.AquireLock();

{

if (_testQueue1.Count > 0) o = _testQueue1.Dequeue();

}

_lock.ReleaseLock();

}

For the lock() test, the following DoEnqueue and DoDequeue methods were used:

private static void DoEnqueue(int n)

{

lock (_lockObject)

{

_testQueue1.Enqueue(n);

}

}

private static void DoDequeue()

{

object o;

lock (_lockObject)

{

if (_testQueue1.Count > 0) o = _testQueue1.Dequeue();

}

}

The results comparing execution times to perform similar amounts of work using Lockable vs. lock() are shown below:

The total number of worker threads used is displayed on the X axis. The total time taken for all worker threads to complete a set amount of work with a shared queue is shown on the Y axis (in milliseconds). The Lockable class outperformed lock() with varying number of worker threads ranging from 20 to 80. The performance advantage of using the lighter-weight Lockable class increased as the number of worker threads (and therefore contention for access to the shared queue) increased.

Getting Back on Track: Implementing the Scheduling Engine

Now that we have an efficient way to manage multi-threaded access to queues of work items, it's time to implement the scheduling engine. A basic description of the scheduling engine implementation follows:

- Work items scheduled to be executed may be stored in one of many queues.

- Each work item queue is serviced by an instance of

WorkItemQueueHandler, and each instance of WorkItemQueueHandler uses a separate, dedicated thread to handle its scheduled work item queue. - Most queues ('fast-track' queues) are dedicated to handling work items that need to be executed within the next 500ms.

- Two additional work item queues ('slow-track' queues) track work items that are scheduled for execution at a later time (i.e., not scheduled to execute within the next 500ms).

- When a work item is scheduled, it is assigned to a fast-track or slow-track queue based on its scheduled execution time and round-robin assignment.

- The job of a fast-track

WorkItemQueueHandler worker thread is to execute all current or past-due work items in its associated work item queue. - The job of a slow-track

WorkItemQueueHandler worker thread is to make sure work items with approaching execution times in its slow-track work item queue are moved to an appropriate fast-track queue.

Below is an illustration of the relationship between work items, work item queue handlers, and the scheduling engine:

Each fast-track work item queue handler uses the following code to efficiently handle work items in its associated work item queue:

private int ExecuteEventsInQueue(int queueNumber)

{

IExecutableWorkItem item;

DateTime currentTime = CurrentDateTime;

List<IExecutableWorkItem> reschedule = new List<IExecutableWorkItem>();

List<IExecutableWorkItem> itemsToExecute = new List<IExecutableWorkItem>(16);

int executedThisPass = 0;

while (_workItemsToExecute[queueNumber].DequeueMultiple(itemsToExecute, 10) > 0)

{

for (int n = 0; n < itemsToExecute.Count && !WantExit; n++)

{

item = itemsToExecute[n];

if (item.ExecutionTime > currentTime)

{

reschedule.Add(item);

}

else

{

executedThisPass++;

item.Execute();

}

}

itemsToExecute.Clear();

}

_workItemsToExecute[queueNumber].EnqueueMultiple(reschedule);

if (executedThisPass > 0)

{ Interlocked.Add(ref _executed, Convert.ToInt64(executedThisPass)); }

return executedThisPass;

}

Slow-track work item queue handlers use the following code to assign work items that may need to be executed soon to a fast-track queue:

private int UpdateWorkItemSchedule(int queueNumber)

{

int workItemsMoved = 0;

IExecutableWorkItem item;

List<IExecutableWorkItem> itemsBackIntoOriginalQueue = new List<IExecutableWorkItem>();

List<IExecutableWorkItem> workItems = new List<IExecutableWorkItem>(16);

while (_allWorkItemQueues[queueNumber].DequeueMultiple(workItems, 10) > 0 && !WantExit)

{

for (int n = 0; n < workItems.Count; n++)

{

item = workItems[n];

int appropriateQueue = FindAppropriateQueue(item);

if (queueNumber != appropriateQueue)

{

_allWorkItemQueues[appropriateQueue].Enqueue(item);

workItemsMoved++;

}

else

{

itemsBackIntoOriginalQueue.Add(item);

}

}

workItems.Clear();

}

_allWorkItemQueues[queueNumber].EnqueueMultiple(itemsBackIntoOriginalQueue);

return workItemsMoved;

}

Using the Scheduling Engine

If you're still reading, you'll be happy to hear that setting up and using the scheduling engine is significantly easier than the work you just did, reading the article up to this point. To use the scheduling engine, we need to create a test work item class. For our test work item class, we'll stick to the simplest possible implementation that fully implements IExecutableWorkItem. We will even make the creator of the test work item class instance responsible for passing in the work item's scheduled execution time.

public class TestWorkitem : IExecutableWorkItem

{

public static int _executed = 0;

public TestWorkitem(DateTime timeToExecute)

{ ExecutionTime = timeToExecute; }

public bool Cancelled { get; set; }

public virtual DateTime ExecutionTime { get; set; }

public virtual void Execute() { Interlocked.Increment(ref _executed);

Console.WriteLine("Work item executed"); }

}

Now, we can set up the scheduling engine. Let's set up a scheduling engine with 32 worker threads and start it running:

SchedulingEngine engine = new SchedulingEngine(32);

engine.StartSchedulingEngine();

Now that the scheduling engine is up and running, we can schedule a few test work items and ask the scheduling engine to let us know when it's done.

for (int n = 0; n < 50000; n++)

{

TestWorkitem workItem =

new TestWorkitem(engine.CurrentDateTime.AddMilliseconds(n / 10));

engine.ScheduleEventToExecute(workItem);

}

engine.WorkItemQueuesEmpty += new EventHandler(engine_WorkItemQueuesEmpty);

Since your work items will be executed at their scheduled times using threads provided by the scheduling engine, you can go about your business on the current thread; the work items will be executed at their scheduled times. As illustrated above, you can subscribe to the WorkItemQueuesEmpty event, which is raised by the SchedulingEngine class instance when all work items have been executed and the work item queues become empty.

To gracefully shut down the scheduling engine, set engine.WantExit = true;.

Source Code and Sample Projects

I hope you've enjoyed reading the article and/or picked up a few useful programming tidbits. Suggestions are welcome, and any useful pieces of code you come across in the attached source is most certainly fair game for use in your own personal projects. The attached solution and source code includes the following projects:

- The Interfaces and GameIndependentInterfaces projects contain interface definitions only. These interfaces are used by a decent portion of the entire game server, and are not limited to the scope of this article.

- The ThreadSafeObjects project contains the implementation of

Lockable and a generic thread-safe queue. In forthcoming articles, this project will grow significantly with the addition of many more thread-safe class implementations. - The Extensions project contains several convenient extension methods. This project will grow significantly in size over the course of this series of articles.

- The SchedulingEngine project implements the scheduling engine itself.

- The TestSchedulingEngine project contains a unit test written to confirm that the scheduling engine executes all scheduled work items within an appropriate amount of time.

- The TestLockableSpeedConsole project contains a console application used to measure the relative performance of

Lockable vs. lock(). - Finally, the aptly-named (?) project, Article1, consists of a light-weight Windows application that lets you:

- play around with scheduling engine thread parameters, and

- submit and monitor the execution of thousands (or hundreds of thousands) of randomly-scheduled test work items.

Points of Interest

I started writing telnet/text-based online multiplayer games in C back in the early 1990's, well before the birth of graphical MMPORGs. The game engine I will be presenting here on CodeProject shares the name - EmlenMud - with the MUD code base I worked on almost 15 years ago. The original code base is mentioned on Cassiopedia.org.

Upcoming article topics are listed below:

- Design and implementation of a high-performance TCP/IP communications library

- Entities, Abilities, Intentions, Actions, and States

- The Virtual World: Interaction, Data Structures, and Synchronization

- Control Commands and Change Notification

- Data Storage and Virtual World Persistence

- Plug-ins and Extensibility

All comments/suggestions are welcome.

owen@binarynorthwest.com. Member BrainTechLLC.