An Introduction to Sql 11 (Code Name Denali) –Part VIII (Data Quality Services(DQS) in CTP 3)

Table of Content

- Introduction

- Background

- What is Data Quality Services(DQS)

- What are the DQS Components

- What we need to do for installing DQS in our system?

- How to Connect to the DQS Client?

- How to create a Knowledge base from scratch?

- How to add more domains to an existing KB?

- How to see the contents of our knowledge base?

- Now that our KB is done, we want to perform the cleansing operation with some source data.How can we do so using the Data Quality project?

- How can we perform a data matching with the same KB?

- DQS activity monitoring

- References

- Conclusion

One of the hottest and awesome developments by Microsoft in the technology field was come into picture on 8th November, 2010 when they released the Community Technology Preview 1 (CTP 1) version of Sql Server 2011(Code name Denali). As expected, Denali has brought some new features for Sql lovers may that be developers, Administrators or Business Intelligence (BI) professionals. Part I describes about the features and enhancements in SSMS. Part II focuses on the new development and enhanced features from T-Sql perspective. Part III looks into the enhancements made from SSIS angle while Part 4 talks about Contained Database.

But that's not the end of Denali.It is an ongoing development and after the big success of CTP1 , it is now CTP 3 which was launched on 12th July 2011.As expected, Denali is spreading itself in various wings may it be enhancements from TSql perspective, a new look and feel-Juneau, a new report tool using the Project Cresent or enhancing the retrival of datawarehousing process by ColumnStore indexes etc. Denali has given a new dimension to the SQL Server database and this series will explore the new Data Quality Services(DQS).

In the last few years, Microsoft has brought many technologies under the developers’ hood. A drastic change has been made in the Sql Server jargon with the advent of Sql Server 2005(code name Yukon) and in the subsequent releases like Sql Server 2008(code name Katmai) and Sql 11(code name Denali), the same pace has been kept with introduction to new features and enhancements as well as improvements. In this article we will explore the new Data Quality Services(DQS) feature of Denali CTP 3. The subsequent articles will focus on the enhancements made in the other areas.

You can read my other articles on Denali as given under

- An Introduction to Sql 11 (Code Name Denali) –Part I (SSMS Features in CTP 1)

- An Introduction to Sql 11 (Code Name Denali) –Part II (T-Sql Features in CTP 1)

- An Introduction to Sql 11 (Code Name Denali) –Part III (SSIS Features in CTP 1)

- An Introduction to Sql 11 (Code Name Denali) -Part IV (Contained Database in CTP 1)

- An Introduction to Sql 11 (Code Name Denali) –Part V (SSIS Features in CTP 3)

- An Introduction to Sql 11 (Code Name Denali) –Part VI (T-Sql Features in CTP 3)

- An Introduction to Sql 11 (Code Name Denali) –Part VII (Column Store Index in CTP 3)

DQS is a new feature in SQL Server "Denali" that provides us with a knowledge-driven data cleansing solution.

We deal with data and there is a high possibility that the data will be incorrect;may be the user has enter wrong data, the data transmission is corrupted etc. Also integrating those inconsistent data from various sources is also a problem and time consuming that will affect the business and the worst victims in such situations are the customers which on the other hand will affect the company from loss of credibility and revenue, customer dissatisfaction etc. Manual processing is not again a good choice as it is always inclined to have erroneous report however meticulously it has been measure. Automation will not give 100% result. Ultimately it will hamper the historical data and as a consequence will affect data analysis and reporting.

In order to rescue the business from such situations, DQS is the need of the day. It ensures high quality data,improves accuracy,data consistency and resolve problems cause by bad data entry in BI or data warehouse or OLTP systems.

It helps business user or a non database professional to create, maintain and execute their organization’s data quality operations with minimal setup or preparation time and with excellent quality.

It improves the data quality by creating a Knowledge Base(KB) about the data and then clean the data based on the knowledge in the knowledge base.

A Knowledge Base(KB) is a repository of three types of knowledge:

- Out of the box knowledge

- Knowledge generated by the DQS server

- Knowledge generated by the user

This knowledge base once build , can server as a reusable stuff which can be continuously improve and then apply in multiple data-quality improvement processes.

KB identifies the incorrect data,proposes changes to the data,find data matches, mismatches and enables to perform data deduplication.It compares source data with the reference data(cloud-based) provided by data quality providers.

The data steward or IT professional verifies both the knowledge in the knowledge base and the changes to be made to the data, and executes the cleansing, deduplication, and reference data services.

The knowledge in the database stores all kind of knowledges applicable to a specific data source.They are stored in data domains which can even span and each of which is a semantic representation of a type of data in a data field.

A domain contains all kind of values like valid values, invalid values, and erroneous data.

Domain Knowledge(DK) consists of synonym associations, term relationships, validation and business rules,and matching policies. Eqipped with this KB, the IT professional/data steward/non-database user can makes a concrete decision about the data analysis and provides support as to whether to correct those data or not.

The DQS knowledge-driven solution uses two fundamental steps to cleanse data:

- Builds a Knowledge base through the knowledge management process

- Changes to be done (if needed for the Knowledge symantics to satisfy) in teh source data based on the knowledge in the KB.This is done through a data quality project.

DOS consists of the

- DQS Server.

- DQS Client.

In addition to these, there is a transformation component call as DQS Cleansing Component in SSIS transformation toolbox that helps us in cleansing the data based on the knowledge base created.

The client and the server component are install as part of SQL Server setup program.(Denali CTP3)

DQS Server

The DQS server is implemented as two SQL Server catalogs that we can manage and monitor in the SQL Server Management Studio.They are

- DQS_MAIN

- DQS_PROJECT

DQS_MAIN includes DQS stored procedures, the DQS engine, and published knowledge bases.

DQS_PROJECT includes data that is required for knowledge base management and DQS project activities.

The source database that contains the data to be analyzed must also be in the same SQL Server instance as the DQS server.

DQS Client

It is a standalone application, designed for data stewards and DQS administrators that helps to perform knowledge management,domain management, matching policy creation, data cleansing, matching, monitoring, data quality projects, and administration in one user interface.

The client application can installed and run on the same computer as the DQS Server or remotely on a separate computer.

For installing DQS in our system, we need to follow the below steps.

First Sql Server Denali CTP3 should be installed.

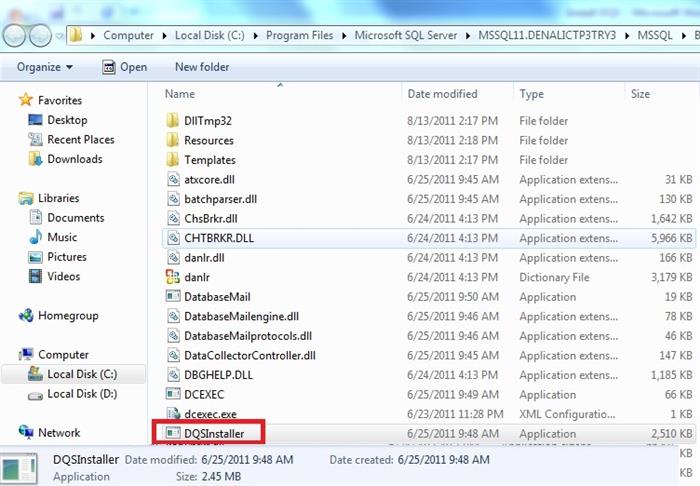

Then we need to find the DQSInstaller which will generally be located at C:\Program Files\Microsoft SQL Server\MSSQL11.DENALICTP3TRY3\MSSQL\Binn\DQSInstaller.exe

Double click on the DQSInstaller.exe and give administrator priveledge and it will be executed. We need to provide the database Master key.Once done, it will be installed and if everything goes fine we will receive the success message

Open SSMS and we will find two database DQS_MAIN, DQS_PROJECTS which also indicates that our DQS server is being installed properly

Once we install from the DQSInstaller.exe properly, next for connecting to the client do the below steps

Go to Start Menu->All Programs-> Microsoft Sql Server Denali CTP3->Data Quality Client(32 bit). Click on that

We will get the DQS client window as under

Click on the Connect button and we will be presented with

We can figure out that the client has 3 areas of activity:

- Knowledge Base Management

- Data Quality Projects

- Administration

1.Knowledge Base Management

It is the central part of DQS client application. We can specify the rules that DQS will apply when validating data and the action taken when those rules are violated. As they capture the organizational knowledge, so they are term as knowledge base.Basically they are the rules define for the domains.

The KB contains Domains which is a component of Data Quality. For example a Country Domain can be such that it should not contain any abbreviated stuff and the length of the country must be greater than 3 letters. Now we can have this domain in our knowledge base say CountryKB. Typically, a single KB can have multiple domain values and for every domain we can have rules and validations apply for them. Domains can be either single valued or compound (consists of multiple fields).

2.Data Quality Projects

It uses KB for improving the quality of the source data by performing data cleansing and data matching activities and finally exports the resultant data to a SQL Server database or a .csv file

A Data quality project can be created either as a cleansing project or a matching project to perform respective activities and can perform the operation on the same KB.

Cleansing is that KB application that refines the source data in the KB.

Matching is that KB application that perform matching activity based on matching policy in a knowledge base to prevent data duplication by identifying exact and approximate matches, and thereby helps to remove duplicate data.

Some of the benefits of data quality project are as under

- Helps us to perform data cleansing operation on the KB

- Helps us to perform data matching operation on the source data by using the matching policy in a knowledge base.

- Provides an intercative GUI for doning the above operations

- Helps to export the resultant cleaned / matched data to the SQL Server database or to a .csv file.

- Helpful for a data steward/non-data base user/IT professional

3.Administration

It covers Activity Monitoring and Configuration. Activity monitoring covers usage and activities against Knowledge Bases. Configuration manages some matching default parameters and connections to 3rd party Reference databases.

In this part we will look into how to create a knowledge base from the scratch.Our knowledge base will have Country domain values and the rules will be such that the country length should be more than or equal to 5 and should not be abbreviated. Also if the user record has "England" as the value,it will be corrected to "United Kingdom".

For this let us click on the New Knowledge base button that comes under the Knowledge Base Management category.Once done, we can now create the new knowledge base.Let us enter a KB name, give some description and click on the create button

Once the above step is completed we will get the Domain Management Sction and here we need to create a domain.

After clicking on the Create a domain button, we will get the Create Domain popup window.We need to enter a domain name and leave the reset as it is. Click on OK button.

To the right hand side we can see our options for setting the Data Quality rules that apply to the domain in question

Next we should create some rules that will be applied. For that, let us click on the Domain Rules tab

Next we need to click on the Add a new domain rule button for creating a new domain rule.Once clicked,we will get the below screen

Enter Name,Description and from the Build a rule drop down choose "Length greater than or equal to" and set the value to 5 as shown under

Along with this, we also want to apply that no country name should be abbreviated.For that, let us click on the Adds a new condition to the selected clause button

From the dropdown , let us choose "Value does not contain" and set the value to "."(dot)

After that click on Apply All Rules button.

Click YES and we will receive the success message stating that the domain rule has been applied successfully

Once we have set up the domain rule, the next step will be to add the domain values.So let us visit the Domain Values tab and click on the Add new domain value button

Now let us add some domain values.Observe that, if anyone gives the value of England, the output will be corrected to United Kingdom.

Once done, click Finish button.

As a last step , we need to click no the Publish button and the KB(Knowledge base) will be available publicly

And we can make out that "OurTestDomain" has been created

Now that our KB is ready to use with one domain, we may want to enhance it by adding some more domains

For this, let us choose Domain Management from the activity list

The Domain Management window appears from where we can add more domains to our KB as stated above

We will add two domains here

a) PersonName the rule being that the name should not contain any abbreviations

b) PersonAge the rule being that age has to numeric

Click on Finish button and publish the KB as we have seen earlier.

Click on the Open Knowledge Base button and select the appropriate KB where we can find the information about the KB.

We can even rename the existing KB. In our case will will rename it from "Our Test Domain" to "Our Test KB". For this right click on the KB name and choose Rename.

As discussed earlier, we need to have source data which need to be cleansed against the KB that we have prepared.Presently, the DQS client supports two kind of sources for data

- SQL Database

- Excel file

For this experiment, let us choose an excel file as our data source

First, we need to click on the New Data Quality Project button.

Once the Data Quality Services window opens up, give a suitable name (1) and from the Use Knowledge base drop down (2), choose Our Test KB. Finally click on the Create button (3).

Now let us create an excel file(source.xlsx) whose content will be as under

Now in the Cleanse project of DQP, change the Data Source to Excel File (1), specify the file path(2) and do the source column mapping with the domain values present in our KB.

Once done, click on the Next button.Next click on the Start Button

As can be figure out that, the statistics is showing that 3 out of 5 records are invalid

Click Next button and we will be in Manage and view result result screen where we can get the detail analysis as how the cleaning process has happened

If we open the respective tabs, we can find out as what are the invalid and corrected values etc.

Click on the Next button and we will reach in the View and Export Data Cleansing Results screen. On the left hand side we can figure out as how the cleansing process has been done based on the rules specified in the domain values.

From the above figure we can make out that while creating the PersonName domain, we specify that name cannot be abbreviated and henceforth in the Name_Reason collumn, we get the status as "Failed Rule".

Now let us analyze for the Country values

For India and England, in Country_Reason column we have the values as "Domain Values". It is because of the fact that, when we created our Country Doomain, we have specified these values in the Domain Values section.It is obvious to get a status message as "Failed Rule" for USA and U.K. because we specified the Domain Rule constraints as Country cannot be abbreviated and the length has to be more than 5. For Vatican city, it is showing as "New Value" because, it satisfies the Domain Rules but this value is not listed in the Domain Values.

I donot think that we need any further explanation for the Age values

Now it's time to export the resultant record. From the "Export Cleansing Results" present on the right hand side, choose the destination type(here .csv),give the file path and file name (note that the file should not be present. It will be created at runtime) and click on the export button

If we open the ResultantOutput.csv, we will get the below

For more information on Data Cleansing, please visit this site

First of all we need to create a matching policy in the knowledge base.For doing so, let us choose the Matching Policy option from the list of activities

Then specify the Excel source and perform the mapping as what we have already done in the previous section for Data Cleansing Process

Kindly note that, we have changed our excel file content as it is now having some duplication records

Once done, click on the Next button and we will be in Matching Policy screen where we need to create the policy.A matching policy tells about one or more matching rules,which domains to use, how well one record matches to another and specify the weight that each domain value carries in the matching assessment. We can mention about teh knid of match(exact or similar)in the rule and also to the degree of similarity.Also the total domain element weight has to be 100

Click on the "Create a matching rule" button and specify a Rule Name (1), the Domain Names(2),the similarity category(3) and teh domain weight(4).

Once done, either we can click on the Next button and then Run the matching policy rules or directly click on the "Run matching policy rule" button (5)

We can figure out the number of matched record

As well as unmatched records

Even we can have a Pie Chart representation of the matched and unmatched records in the matching result section

For more information on data matching, please refer this article

It covers usage and activities against Knowledge Bases, tells the status of the KB or Data Quality projects,the type of activities performed, the start and end time of the activities etc. There is also some filtering options by which we can filter the records.

a)Introducing Data Quality Services

b)Data Quality Services (DQS) FAQ

c)Data Quality Services "How Do I?" Videos

d)Data Quality Services

In this article we have discussed about the new DQS features, what it is, how it helps organization to maintain historical data,it's application.We also talked about Knowledge base, it's creation and uses in the Data quality projects for cleansing.We have also seen how matching policy works.

In a previous article we have seen the uses of KB in a Data Cleansing SSIS component

Hope the users will find it useful and I am looking forward for your feedback