Introduction

I'm afraid to say that I am just one of those people who, unless they are doing something, get bored. So now that I finally feel I have learnt the basics of WPF, it is time to turn my attention to other matters.

I have a long list of things that demand my attention (such as WCF, WF and the CLR Via C# version 2 book), but I recently went for a new job (and got it, but turned it down in the end) which required me to know a lot about threading. Whilst I consider myself to be pretty good with threading, I thought: yeah, I'm OK at threading, but I could always be better. So I have decided to dedicate myself to writing a series of articles on threading in .NET.

This series will undoubtedly owe much to the excellent Visual Basic .NET Threading Handbook that I bought, which is nicely filling the MSDN gaps for me, and now for you.

I suspect this series will range from simple to medium to advanced, and it will cover a lot of material that is already in MSDN, but I hope to give it my own spin too. So please forgive me if it does come across as a bit MSDN-like.

I don't know the exact schedule, but it may end up being something like:

I guess the best way is to just crack on. One note before we start, though: I will be using C# and Visual Studio 2008.

What I'm going to attempt to cover in this article will be:

- Wait Handles
- Semaphores
- Mutex
- Critical sections A.K.A. Locks
- Miscellaneous objects

This article will be all about how to control the synchronization of different threads.

Wait Handles

One of the first things you must understand when trying to get multiple threads to work together well is how to sequence operations. For example, let's say we have the following problem:

- We need to create an order

- We need to save the order, but can't do this until we get an order number

- We need to print the order, but only once it's saved in the database

Now, these may look like very simple tasks that don't need to be threaded at all, but for the sake of this demo, let's assume that each of these steps is a rather long operation involving many calls to a fictional database.

We can see from the brief above that we can't do step 2 until step 1 is done, and we can't do step 3 until step 2 is done. This is a dilemma. We could, of course, have all this in one monolithic code chunk, but this defeats the idea of concurrency that we are trying to use to make our application more responsive (remember, each of these fictional steps takes a long time).

So what can we do? We know we want to thread these three steps, but there is a problem: as we saw in Part 2, we cannot guarantee which thread will be started first. Mmm, this isn't good. Luckily, help is at hand.

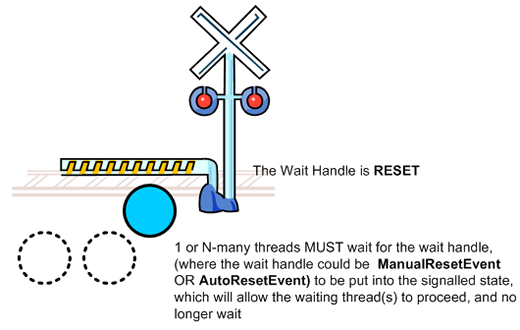

There is an idea within .NET of a WaitHandle, which allows threads to wait on a particular WaitHandle and only proceed when the WaitHandle tells the waiting thread that it is OK to proceed. This mechanism is known as signaling and waiting. When a thread is waiting on a WaitHandle, it is blocked until the WaitHandle is signaled, which unblocks the waiting thread so it can proceed with its work.

The way I like to think about WaitHandles is as the lights and barrier at a train crossing. While the barrier (the WaitHandle) is not signaled, we (the waiting threads) must wait for the barrier to be raised (signaled).

That's how I think about it, anyway. So what does .NET offer in the way of WaitHandles for us to use?

The following classes are provided for us:

- System.Threading.WaitHandle
  - System.Threading.EventWaitHandle
  - System.Threading.Mutex
  - System.Threading.Semaphore

As can be seen in this hierarchy, System.Threading.WaitHandle is the base class for a bunch of other System.Threading.WaitHandle derived classes. There are a few specific things that should first be explained before we dive on into looking at the specifics of these System.Threading.WaitHandle derived classes.

Some of the more important methods (this is not an exhaustive list) are as follows:

SignalAndWait (Static method on WaitHandle)

There are several overloads for this method, but the basic idea is that one System.Threading.WaitHandle is signaled and another System.Threading.WaitHandle is then waited on to receive a signal, all in a single call.

WaitAll (Static method on WaitHandle)

There are several overloads for this method, but the basic idea is that an array of System.Threading.WaitHandles is passed into the WaitAll method, and the call blocks until all of these System.Threading.WaitHandles have been signaled.

WaitAny (Static method on WaitHandle)

There are several overloads for this method, but the basic idea is that an array of System.Threading.WaitHandles is passed into the WaitAny method, and the call blocks until any one of these System.Threading.WaitHandles is signaled. It returns the array index of the handle that satisfied the wait.

WaitOne

There are several overloads for this method, but the basic idea is that the calling thread blocks until this System.Threading.WaitHandle instance receives a signal.
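To make WaitAny and WaitAll a little more concrete, here is a minimal sketch. It assumes a made-up set of workers that each sleep for a given number of milliseconds and then signal their own ManualResetEvent; the WaitAnyAllDemo class and its methods are hypothetical, for illustration only. Note that WaitAll with more than one handle is not supported on a single-threaded apartment (STA) thread.

```csharp
using System;
using System.Threading;

public static class WaitAnyAllDemo
{
    // Hypothetical helper: starts one worker per delay; each worker sleeps
    // for its delay and then signals its own ManualResetEvent.
    static WaitHandle[] StartWorkers(int[] delaysMs)
    {
        var done = new WaitHandle[delaysMs.Length];
        for (int i = 0; i < delaysMs.Length; i++)
        {
            var finished = new ManualResetEvent(false);
            int delay = delaysMs[i]; // copy for the closure
            done[i] = finished;
            new Thread(() => { Thread.Sleep(delay); finished.Set(); }).Start();
        }
        return done;
    }

    // Blocks until any worker finishes; returns the index of that worker.
    public static int FirstToFinish(int[] delaysMs)
    {
        return WaitHandle.WaitAny(StartWorkers(delaysMs));
    }

    // Blocks until every worker finishes, or until the timeout expires.
    public static bool AllFinishWithin(int[] delaysMs, TimeSpan timeout)
    {
        return WaitHandle.WaitAll(StartWorkers(delaysMs), timeout);
    }

    static void Main()
    {
        Console.WriteLine("First to finish: worker {0}",
            FirstToFinish(new[] { 500, 50, 300 }));
        Console.WriteLine("All finished within 2 secs: {0}",
            AllFinishWithin(new[] { 100, 200, 300 }, TimeSpan.FromSeconds(2)));
    }
}
```

WaitAny hands back an index into the array you passed in, so you can tell which piece of work completed first. WaitAll just tells you whether everything completed before the timeout.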

Let us now concentrate on System.Threading.EventWaitHandle.

EventWaitHandle

The EventWaitHandle is a WaitHandle, and it has two more specific subclasses that are used more commonly: ManualResetEvent and AutoResetEvent. As such, it is these two subclasses that I will spend time discussing. All you need to note is that an EventWaitHandle object can act just like either of its subclasses, depending on which of the two EventResetMode enumeration values (AutoReset or ManualReset) you pass when constructing it.
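As a quick sketch of that last point (EventWaitHandleModes is just an illustrative name), the code below signals an EventWaitHandle once. It then makes two non-blocking waits (WaitOne(0)) on it, once for each EventResetMode.

```csharp
using System;
using System.Threading;

public static class EventWaitHandleModes
{
    // Signals a fresh EventWaitHandle once, then checks whether two
    // successive zero-timeout waits succeed.
    public static bool[] TwoWaitsAfterOneSet(EventResetMode mode)
    {
        using (var handle = new EventWaitHandle(false, mode))
        {
            handle.Set();
            bool first = handle.WaitOne(0);  // succeeds: the handle is signaled
            bool second = handle.WaitOne(0); // depends on the reset mode
            return new[] { first, second };
        }
    }

    static void Main()
    {
        foreach (EventResetMode mode in new[] { EventResetMode.AutoReset, EventResetMode.ManualReset })
        {
            bool[] r = TwoWaitsAfterOneSet(mode);
            Console.WriteLine("{0}: first wait {1}, second wait {2}", mode, r[0], r[1]);
        }
    }
}
```

With AutoReset, the second wait fails because the first one consumed the signal and put the handle back into the non-signaled state. With ManualReset, both waits succeed, because the handle stays signaled until someone calls Reset.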

So let's now look a little bit further at the ManualResetEvent and AutoResetEvent objects, as these are more commonly used.

AutoResetEvent

From MSDN:

"A thread waits for a signal by calling WaitOne on the AutoResetEvent. If the AutoResetEvent is in the non-signaled state, the thread blocks, waiting for the thread that currently controls the resource to signal that the resource is available by calling Set.

Calling Set signals AutoResetEvent to release a waiting thread. AutoResetEvent remains signaled until a single waiting thread is released, and then automatically returns to the non-signaled state. If no threads are waiting, the state remains signaled indefinitely."

In layman's terms, when an AutoResetEvent is signaled, the first waiting thread that is released (stops blocking) causes the AutoResetEvent to be put back into the non-signaled state. Any other thread that is waiting on the AutoResetEvent must wait for it to be signaled again.

Let's consider a small example where two threads are started. The first thread runs for a period of time and then signals (by calling Set) an AutoResetEvent that started out in the non-signaled state, while the second thread waits for that AutoResetEvent to be signaled. The second thread then also waits for a second AutoResetEvent. The only difference is that the second AutoResetEvent starts out in the signaled state, so it does not need to be waited for.

Here is some code that illustrates this:

```csharp
using System;
using System.Threading;

namespace AutoResetEventTest
{
    class Program
    {
        public static Thread T1;
        public static Thread T2;
        public static AutoResetEvent ar1 = new AutoResetEvent(false);
        public static AutoResetEvent ar2 = new AutoResetEvent(true);

        static void Main(string[] args)
        {
            T1 = new Thread((ThreadStart)delegate
            {
                Console.WriteLine(
                    "T1 is simulating some work by sleeping for 5 secs");
                Thread.Sleep(5000);
                Console.WriteLine(
                    "T1 is just about to set AutoResetEvent ar1");
                ar1.Set();
            });

            T2 = new Thread((ThreadStart)delegate
            {
                Console.WriteLine(
                    "T2 starting to wait for AutoResetEvent ar1, at time {0}",
                    DateTime.Now.ToLongTimeString());
                ar1.WaitOne();
                Console.WriteLine(
                    "T2 finished waiting for AutoResetEvent ar1, at time {0}",
                    DateTime.Now.ToLongTimeString());

                Console.WriteLine(
                    "T2 starting to wait for AutoResetEvent ar2, at time {0}",
                    DateTime.Now.ToLongTimeString());
                ar2.WaitOne();
                Console.WriteLine(
                    "T2 finished waiting for AutoResetEvent ar2, at time {0}",
                    DateTime.Now.ToLongTimeString());
            });

            T1.Name = "T1";
            T2.Name = "T2";
            T1.Start();
            T2.Start();
            Console.ReadLine();
        }
    }
}
```

This results in the following output, where it can be seen that T1 waits for 5 secs (simulated work) and T2 waits for AutoResetEvent "ar1" to be put into the signaled state. T2 doesn't have to wait for AutoResetEvent "ar2", as that event is already in the signaled state when it is constructed.
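The example above only has one waiter per event. To see the "release a waiting thread" part of the MSDN description in action, here is a minimal sketch in which three threads wait on the same AutoResetEvent and it is Set just once. The AutoResetOneAtATime name and the two second timeout are arbitrary choices for illustration.

```csharp
using System;
using System.Threading;

public static class AutoResetOneAtATime
{
    // Starts 'waiters' threads that all wait on one AutoResetEvent, calls Set
    // once, and returns how many threads were released.
    public static int ReleasedBySingleSet(int waiters)
    {
        var gate = new AutoResetEvent(false);
        int released = 0;
        var threads = new Thread[waiters];
        for (int i = 0; i < waiters; i++)
        {
            threads[i] = new Thread(() =>
            {
                // A thread that is not released gives up after 2 seconds.
                if (gate.WaitOne(TimeSpan.FromSeconds(2)))
                    Interlocked.Increment(ref released);
            });
            threads[i].Start();
        }

        Thread.Sleep(200); // give the threads a chance to start waiting
        gate.Set();        // releases a single waiter, then resets itself

        foreach (Thread t in threads)
            t.Join();
        return released;
    }

    static void Main()
    {
        Console.WriteLine("Threads released by one Set(): {0}", ReleasedBySingleSet(3));
    }
}
```

Suppose Set() happened to run before any of the threads had started waiting. The event would then stay signaled until exactly one waiter consumed it, so the result should still be 1, and the other two threads time out.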

ManualResetEvent

From MSDN:

"When a thread begins an activity that must complete before other threads proceed, it calls Reset to put ManualResetEvent in the non-signaled state. This thread can be thought of as controlling the ManualResetEvent. Threads that call WaitOne on the ManualResetEvent will block, awaiting the signal. When the controlling thread completes the activity, it calls Set to signal that the waiting threads can proceed. All waiting threads are released.

Once it has been signaled, ManualResetEvent remains signaled until it is manually reset. That is, calls to WaitOne return immediately."

In layman's terms, when a ManualResetEvent is set to signaled, all threads that were blocking (waiting) on it are allowed to proceed. Any thread that calls WaitOne afterwards also proceeds immediately, until the ManualResetEvent is put back into the non-signaled state by calling Reset.

Consider the following code snippet:

```csharp
using System;
using System.Threading;

namespace ManualResetEventTest
{
    class Program
    {
        public static Thread T1;
        public static Thread T2;
        public static Thread T3;
        public static ManualResetEvent mr1 = new ManualResetEvent(false);

        static void Main(string[] args)
        {
            T1 = new Thread((ThreadStart)delegate
            {
                Console.WriteLine(
                    "T1 is simulating some work by sleeping for 5 secs");
                Thread.Sleep(5000);
                Console.WriteLine(
                    "T1 is just about to set ManualResetEvent mr1");
                mr1.Set(); // releases every thread waiting on mr1
            });

            T2 = new Thread((ThreadStart)delegate
            {
                Console.WriteLine(
                    "T2 starting to wait for ManualResetEvent mr1, at time {0}",
                    DateTime.Now.ToLongTimeString());
                mr1.WaitOne();
                Console.WriteLine(
                    "T2 finished waiting for ManualResetEvent mr1, at time {0}",
                    DateTime.Now.ToLongTimeString());
            });

            T3 = new Thread((ThreadStart)delegate
            {
                Console.WriteLine(
                    "T3 starting to wait for ManualResetEvent mr1, at time {0}",
                    DateTime.Now.ToLongTimeString());
                mr1.WaitOne();
                Console.WriteLine(
                    "T3 finished waiting for ManualResetEvent mr1, at time {0}",
                    DateTime.Now.ToLongTimeString());
            });

            T1.Name = "T1";
            T2.Name = "T2";
            T3.Name = "T3";
            T1.Start();
            T2.Start();
            T3.Start();
            Console.ReadLine();
        }
    }
}
```

Which results in the following screenshot:

It can be seen that three threads (T1-T3) are started, T2 and T3 are both waiting on the ManualResetEvent "mr1", which is only put into the signaled state within thread T1's code block. When T1 puts the ManualResetEvent "mr1" into the signaled state (by calling the Set() method), this allows waiting threads to proceed. As T2 and T3 are both waiting on the ManualResetEvent "mr1", and it is in the signaled state, both T2 and T3 proceed. The ManualResetEvent "mr1" is never reset, so both threads T2 and T3 are free to proceed to run their respective code blocks.
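For contrast with the AutoResetEvent, here is a similar minimal sketch, again with made-up names. Several threads wait on one ManualResetEvent and are all released by a single Set, and a second method shows that the event stays signaled until Reset is called.

```csharp
using System;
using System.Threading;

public static class ManualResetGate
{
    // Starts 'waiters' threads on one ManualResetEvent, calls Set once,
    // and returns how many threads were released.
    public static int ReleasedBySingleSet(int waiters)
    {
        var gate = new ManualResetEvent(false);
        int released = 0;
        var threads = new Thread[waiters];
        for (int i = 0; i < waiters; i++)
        {
            threads[i] = new Thread(() =>
            {
                if (gate.WaitOne(TimeSpan.FromSeconds(2)))
                    Interlocked.Increment(ref released);
            });
            threads[i].Start();
        }

        gate.Set(); // opens the gate for every waiter, present or future
        foreach (Thread t in threads)
            t.Join();
        return released;
    }

    // Shows that a late caller passes straight through until Reset is called.
    public static bool StaysSignaledUntilReset()
    {
        using (var gate = new ManualResetEvent(false))
        {
            gate.Set();
            bool beforeReset = gate.WaitOne(0); // true: still signaled
            gate.Reset();
            bool afterReset = gate.WaitOne(0);  // false: closed again
            return beforeReset && !afterReset;
        }
    }

    static void Main()
    {
        Console.WriteLine("Threads released by one Set(): {0}", ReleasedBySingleSet(3));
        Console.WriteLine("Stays signaled until Reset: {0}", StaysSignaledUntilReset());
    }
}
```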

Back to the Original Problem

Recall the original problem:

- We need to create an order

- We need to save the order, but can't do this until we get an order number

- We need to print the order, but only once it's saved in the database

This should now be pretty easy to solve. All we need are some WaitHandles to control the execution order, whereby step 2 waits on a WaitHandle that step 1 signals, and step 3 waits on a WaitHandle that step 2 signals. Simple, huh? Shall we see some example code? Here is some; I simply chose to use AutoResetEvents:

```csharp
using System;
using System.Threading;

namespace OrderSystem
{
    public class Program
    {
        public static Thread CreateOrderThread;
        public static Thread SaveOrderThread;
        public static Thread PrintOrderThread;
        // ar1 is signaled once the order is created; ar2 once it is saved.
        public static AutoResetEvent ar1 = new AutoResetEvent(false);
        public static AutoResetEvent ar2 = new AutoResetEvent(false);

        static void Main(string[] args)
        {
            CreateOrderThread = new Thread((ThreadStart)delegate
            {
                Console.WriteLine(
                    "CreateOrderThread is creating the Order");
                Thread.Sleep(5000);
                ar1.Set();
            });

            SaveOrderThread = new Thread((ThreadStart)delegate
            {
                ar1.WaitOne(); // wait until the order has been created
                Console.WriteLine(
                    "SaveOrderThread is saving the Order");
                Thread.Sleep(5000);
                ar2.Set();
            });

            PrintOrderThread = new Thread((ThreadStart)delegate
            {
                ar2.WaitOne(); // wait until the order has been saved
                Console.WriteLine(
                    "PrintOrderThread is printing the Order");
                Thread.Sleep(5000);
            });

            CreateOrderThread.Name = "CreateOrderThread";
            SaveOrderThread.Name = "SaveOrderThread";
            PrintOrderThread.Name = "PrintOrderThread";
            CreateOrderThread.Start();
            SaveOrderThread.Start();
            PrintOrderThread.Start();
            Console.ReadLine();
        }
    }
}
```

Which produces the following screenshot:
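One way to convince yourself that the order no longer depends on which thread starts first is the sketch below. It is a trimmed-down variant of the OrderSystem code (the OrderPipeline class and the step names are made up for illustration). It deliberately starts the threads in reverse order and records the order in which the steps actually ran.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

public static class OrderPipeline
{
    public static List<string> Run()
    {
        var steps = new List<string>();
        var created = new AutoResetEvent(false);
        var saved = new AutoResetEvent(false);

        // List<T> is not thread safe, so guard it with a lock.
        Action<string> record = step => { lock (steps) steps.Add(step); };

        var print = new Thread(() => { saved.WaitOne(); record("print"); });
        var save = new Thread(() =>
        {
            created.WaitOne();
            record("save");
            saved.Set();
        });
        var create = new Thread(() => { record("create"); created.Set(); });

        // Start in the "wrong" order; the events still enforce create, save, print.
        print.Start();
        save.Start();
        create.Start();

        create.Join();
        save.Join();
        print.Join();
        return steps;
    }

    static void Main()
    {
        Console.WriteLine(string.Join(" -> ", Run().ToArray()));
    }
}
```

This should always print create -> save -> print. Each step only records itself after waiting for the event that the previous step sets once it has recorded itself.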

Semaphores

A Semaphore inherits from System.Threading.WaitHandle; as such, it has the WaitOne() method. You can also use the static WaitHandle.WaitAny(), WaitHandle.WaitAll() and WaitHandle.SignalAndWait() methods for more complex tasks.

I read somewhere that a Semaphore is like a nightclub. It has a certain capacity, enforced by a bouncer. When it is full, no more people can enter the club until someone leaves, at which point one more person may enter.

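Let's see a simple example, where the Semaphore is created with new Semaphore(2, 5). The initial count of 2 means at most two threads can be inside it concurrently. The maximum count of 5 is the highest value that calls to Release can ever raise the count to.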

```csharp
using System;
using System.Threading;

namespace SemaphoreTest
{
    class Program
    {
        // Initial count 2, maximum count 5.
        static Semaphore sem = new Semaphore(2, 5);

        static void Main(string[] args)
        {
            for (int i = 0; i < 10; i++)
            {
                new Thread(RunThread).Start("T" + i);
            }
            Console.ReadLine();
        }

        static void RunThread(object threadID)
        {
            while (true)
            {
                Console.WriteLine(string.Format(
                    "thread {0} is waiting on Semaphore",
                    threadID));
                sem.WaitOne();
                try
                {
                    Console.WriteLine(string.Format(
                        "thread {0} is in the Semaphore, and is now Sleeping",
                        threadID));
                    Thread.Sleep(100);
                    Console.WriteLine(string.Format(
                        "thread {0} is releasing Semaphore",
                        threadID));
                }
                finally
                {
                    // Always give the slot back, even if the work throws.
                    sem.Release();
                }
            }
        }
    }
}
```

Which results in something similar to this:

This example does show a working example of how a Semaphore can be used to limit the number of concurrent threads, but it's not a very useful example. I will now outline some partially completed code, where we can use a Semaphore to limit the number of threads trying to access a database with a limited number of connections available. The database can only accept a maximum of three concurrent connections. As I say, this code is incomplete, and does not work in its current state; it's for demonstration purposes only, and is not part of the attached demo app.

```csharp
using System;
using System.Threading;
using System.Data;
using System.Data.SqlClient;

namespace SemaphoreTest
{
    class RestrictedDBConnectionStringAccessUsingSemaphores
    {
        // Initial count and maximum count are both 3: at most three
        // threads can hold a connection at any one time.
        static Semaphore sem = new Semaphore(3, 3);

        static void Main(string[] args)
        {
            new Thread(RunCustomersThread).Start("ReadCustomersFromDB");
            new Thread(RunOrdersThread).Start("ReadOrdersFromDB");
            new Thread(RunProductsThread).Start("ReadProductsFromDB");
            new Thread(RunSuppliersThread).Start("ReadSuppliersFromDB");
            Console.ReadLine();
        }

        static void RunCustomersThread(object threadID)
        {
            sem.WaitOne();
            try
            {
                using (new SqlConnection("<SOME_DB_CONNECT_STRING>"))
                {
                }
            }
            finally
            {
                sem.Release(); // release even if the database work throws
            }
        }

        static void RunOrdersThread(object threadID)
        {
            sem.WaitOne();
            try
            {
                using (new SqlConnection("<SOME_DB_CONNECT_STRING>"))
                {
                }
            }
            finally
            {
                sem.Release();
            }
        }

        static void RunProductsThread(object threadID)
        {
            sem.WaitOne();
            try
            {
                using (new SqlConnection("<SOME_DB_CONNECT_STRING>"))
                {
                }
            }
            finally
            {
                sem.Release();
            }
        }

        static void RunSuppliersThread(object threadID)
        {
            sem.WaitOne();
            try
            {
                using (new SqlConnection("<SOME_DB_CONNECT_STRING>"))
                {
                }
            }
            finally
            {
                sem.Release();
            }
        }
    }
}
```

From this small example, it should be clear that the Semaphore only allows a maximum of three threads into the guarded section at once, because both the initial count and the maximum count are set to 3 in the Semaphore constructor. So we can be sure that the number of database connections is also kept within the limit.
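If you want to check the "at most three threads" claim rather than take it on trust, here is a small sketch. It counts how many threads are inside a Semaphore(3, 3) at the same time and records the peak. SemaphorePeak is a made-up name, and Thread.Sleep stands in for the database work.

```csharp
using System;
using System.Threading;

public static class SemaphorePeak
{
    // Runs 'threadCount' threads through a Semaphore that admits 'limit' at a
    // time, and returns the highest number seen inside it at once.
    public static int PeakConcurrency(int limit, int threadCount)
    {
        var sem = new Semaphore(limit, limit);
        object counterLock = new object();
        int inside = 0, peak = 0;

        var threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(() =>
            {
                sem.WaitOne();
                try
                {
                    lock (counterLock)
                    {
                        inside++;
                        if (inside > peak) peak = inside;
                    }
                    Thread.Sleep(50); // simulated database work
                    lock (counterLock) inside--;
                }
                finally
                {
                    sem.Release();
                }
            });
            threads[i].Start();
        }

        foreach (Thread t in threads)
            t.Join();
        return peak;
    }

    static void Main()
    {
        Console.WriteLine("Peak concurrency: {0}", PeakConcurrency(3, 10));
    }
}
```

With ten threads queuing up, the peak will usually be exactly 3. It can never exceed 3, because a fourth thread cannot get past WaitOne until one of the others has called Release.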

Mutex

A Mutex works in much the same way as the lock statement (which we will look at in the Critical sections section below), so I won't harp on about it too much. The main advantage a Mutex has over lock statements and the Monitor class is that a named Mutex can work across multiple processes. This provides a computer-wide lock rather than an application-wide one.

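Sasha Goldshtein, the technical reviewer of this article, also pointed out that a named Mutex will not, by default, work across Terminal Services sessions. Mutex names are local to the current session unless you prefix them with "Global\".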

Application Single Instance

One of the most common uses of a Mutex is, unsurprisingly, to ensure that only one instance of an application is actually running.

Let's see some code. This code ensures a single instance of an application. Any new instance will wait 5 seconds (in case the currently running instance is in the process of closing) before assuming there is already a previous instance of the application running, and exiting.

```csharp
using System;
using System.Threading;

namespace MutexTest
{
    class Program
    {
        // A named mutex is visible to every process on the machine.
        static Mutex mutex = new Mutex(false, "MutexTest");

        static void Main(string[] args)
        {
            if (!mutex.WaitOne(TimeSpan.FromSeconds(5)))
            {
                Console.WriteLine("MutexTest already running! Exiting");
                return;
            }

            try
            {
                Console.WriteLine("MutexTest Started");
                Console.ReadLine();
            }
            finally
            {
                mutex.ReleaseMutex();
            }
        }
    }
}
```

So if we start an instance of this app, we get:

When we try and run another copy, we get the following (after a 5 second delay):
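A common variation on this single-instance check uses the Mutex(bool, string, out bool createdNew) constructor overload. It reports whether a mutex with that name already existed, so there is no need to wait at all. Here is a minimal sketch, assuming a hypothetical helper called SingleInstanceCheck. In a real application you would keep the Mutex referenced for the lifetime of the process; otherwise it may be garbage collected and the name freed early.

```csharp
using System;
using System.Threading;

public static class SingleInstanceCheck
{
    // Opens (or creates) the named mutex without taking ownership of it, and
    // returns true only if this call created it.
    public static bool TryCreate(string name, out Mutex mutex)
    {
        bool createdNew;
        mutex = new Mutex(false, name, out createdNew);
        return createdNew;
    }

    static void Main()
    {
        Mutex mutex;
        if (!TryCreate("MutexTest_CreatedNew", out mutex))
        {
            Console.WriteLine("Another instance is already running! Exiting");
            return;
        }

        Console.WriteLine("First instance started");
        Console.ReadLine();
        GC.KeepAlive(mutex); // keep the mutex (and its name) alive until here
    }
}
```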

Critical sections A.K.A. Locks

Locking is a mechanism for ensuring that only one thread can enter a particular section of code at a time. This is done by the use of what are known as locks. The actual locked sections of code are known as critical sections. There are various different ways in which to lock a section of code, which will be covered below.

But before we go on to do that, let's just look at why we might need these "critical sections" of code. Consider the bit of code below:

```csharp
using System;
using System.Threading;

namespace NoLockTest
{
    class Program
    {
        static int item1 = 54, item2 = 21;
        public static Thread T1;
        public static Thread T2;

        static void Main(string[] args)
        {
            T1 = new Thread((ThreadStart)delegate
            {
                DoCalc();
            });

            T2 = new Thread((ThreadStart)delegate
            {
                DoCalc();
            });

            T1.Name = "T1";
            T2.Name = "T2";
            T1.Start();
            T2.Start();
            Console.ReadLine();
        }

        private static void DoCalc()
        {
            item2 = 10;
            if (item1 != 0)
                Console.WriteLine(item1 / item2);
            item2 = 0;
        }
    }
}
```

This code is not thread safe, as it could be run by two threads simultaneously. One thread could set item2 to 0 just as another thread was doing the division, leading to a DivideByZeroException.

Now, this problem may only show itself once in every 500 times you run this code, but this is the nature of threading issues: they only show up once in a while, so they are very hard to find. You really need to think about all the eventualities before you write code, and ensure you have the correct safeguards in place.

Luckily, we can fix this problem using locks (A.K.A. critical sections). Shown below is a revised version of the code above:

```csharp
using System;
using System.Threading;

namespace LockTest
{
    class Program
    {
        static object syncLock = new object();
        static int item1 = 54, item2 = 21;
        public static Thread T1;
        public static Thread T2;

        static void Main(string[] args)
        {
            T1 = new Thread((ThreadStart)delegate
            {
                DoCalc();
            });

            T2 = new Thread((ThreadStart)delegate
            {
                DoCalc();
            });

            T1.Name = "T1";
            T2.Name = "T2";
            T1.Start();
            T2.Start();
            Console.ReadLine();
        }

        private static void DoCalc()
        {
            // Only one thread at a time can be inside this block.
            lock (syncLock)
            {
                item2 = 10;
                if (item1 != 0)
                    Console.WriteLine(item1 / item2);
                item2 = 0;
            }
        }
    }
}
```

In this example, we introduce the first of the possible techniques for creating critical sections: the lock keyword. Only one thread can lock the synchronizing object (syncLock, in this case) at a time. Any contending threads are blocked until the lock is released. Contending threads are held in a "ready queue" and are generally given access on a first come, first served basis, although the CLR does not strictly guarantee this ordering.

Some people use lock(this) or lock(typeof(MyClass)) for the synchronization object. This is a bad idea, as both of these are publicly visible objects. In theory, an external entity could use them for synchronization and interfere with your threads, creating a multitude of interesting problems. It's best to always use a private synchronization object.
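To see why the lock matters, here is a minimal sketch (LostUpdateDemo and its counters are made up for illustration). Several threads increment two shared counters: one without any protection, and one inside a lock on a private object. The ++ operator is a read, an add and a write, so unprotected increments from different threads can overwrite each other.

```csharp
using System;
using System.Threading;

public static class LostUpdateDemo
{
    static readonly object syncLock = new object(); // private lock object
    static int unsafeCount;
    static int safeCount;

    // Returns { unsafeCount, safeCount } after every thread has finished.
    public static int[] Run(int threadCount, int iterations)
    {
        unsafeCount = 0;
        safeCount = 0;
        var threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(() =>
            {
                for (int n = 0; n < iterations; n++)
                {
                    unsafeCount++;                   // increments can be lost
                    lock (syncLock) { safeCount++; } // never lost
                }
            });
            threads[i].Start();
        }
        foreach (Thread t in threads)
            t.Join();
        return new[] { unsafeCount, safeCount };
    }

    static void Main()
    {
        int[] counts = Run(4, 1000000);
        Console.WriteLine("Expected {0}, unlocked {1}, locked {2}",
            4 * 1000000, counts[0], counts[1]);
    }
}
```

On a multi-core machine the unlocked count will usually (but not always) come out lower than expected, which is exactly the "only once in a while" behavior described above. The locked count should always match.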

I now want to briefly talk about the different ways in which you can lock (create a critical section).

Lock keyword

We have already seen the first example, where we used the lock keyword, which we can use to lock on a particular object. This is a fairly common approach.

It's worth pointing out that the lock keyword is really just a shortcut for working with the Monitor class, as shown below.

Monitor class

The System.Threading namespace contains a class called Monitor, which can do exactly the same as the lock keyword. If we consider the same thread safe code snippet that we used above with the lock keyword, you should see that Monitor does the same job.

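```csharp
using System;
using System.Threading;

namespace MonitorTest
{
    class Program
    {
        static object syncLock = new object();
        static int item1 = 54, item2 = 21;

        static void Main(string[] args)
        {
            // Two threads run DoCalc concurrently, as in the lock example.
            new Thread(DoCalc).Start();
            new Thread(DoCalc).Start();
            Console.ReadLine();
        }

        private static void DoCalc()
        {
            Monitor.Enter(syncLock);
            try
            {
                item2 = 10;
                if (item1 != 0)
                    Console.WriteLine(item1 / item2);
                item2 = 0;
            }
            finally
            {
                // Always release the lock, even if the division throws.
                Monitor.Exit(syncLock);
            }
        }
    }
}
```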

Where the lock keyword is just syntactic sugar for the following Monitor code:

```csharp
Monitor.Enter(syncLock);
try
{
    // critical section code goes here
}
finally
{
    Monitor.Exit(syncLock);
}
```

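In other words, a lock block compiles to this pattern. Strictly, this is the expansion produced by the C# 3 compiler in Visual Studio 2008. From C# 4 onwards, the compiler calls the Monitor.Enter(object, ref bool lockTaken) overload inside the try block, and only calls Monitor.Exit if the lock was actually taken.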
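One thing the lock keyword cannot do is give up after a timeout, but Monitor can. Here is a minimal sketch (the class and method names are hypothetical) using the Monitor.TryEnter(object, TimeSpan, ref bool) overload, which was added in .NET 4. On .NET 3.5 you would use the Monitor.TryEnter(object, TimeSpan) overload instead, which returns a bool. The lockTaken flag ensures that Monitor.Exit is only called if the lock was actually acquired.

```csharp
using System;
using System.Threading;

public static class MonitorTryEnterDemo
{
    static readonly object syncLock = new object();

    // Tries to enter the critical section within 'timeout'; returns false if
    // the lock could not be acquired in time.
    public static bool TryDoWork(TimeSpan timeout)
    {
        bool lockTaken = false;
        try
        {
            Monitor.TryEnter(syncLock, timeout, ref lockTaken);
            if (!lockTaken)
                return false;
            // ... critical section code goes here ...
            return true;
        }
        finally
        {
            if (lockTaken)
                Monitor.Exit(syncLock);
        }
    }

    // Holds the lock on another thread, and checks that TryDoWork times out.
    public static bool TimesOutWhileLockIsHeld()
    {
        var holding = new ManualResetEvent(false);
        var release = new ManualResetEvent(false);
        var holder = new Thread(() =>
        {
            lock (syncLock)
            {
                holding.Set();     // tell the main thread we own the lock
                release.WaitOne(); // keep owning it until told to let go
            }
        });
        holder.Start();
        holding.WaitOne();

        bool acquired = TryDoWork(TimeSpan.FromMilliseconds(100));
        release.Set();
        holder.Join();
        return !acquired;
    }

    static void Main()
    {
        Console.WriteLine("Uncontended TryDoWork: {0}", TryDoWork(TimeSpan.FromSeconds(1)));
        Console.WriteLine("Timed out while held elsewhere: {0}", TimesOutWhileLockIsHeld());
    }
}
```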

MethodImpl.Synchronized attribute

The last method relies on the use of an attribute which you can use to adorn a method to say that it should be treated as synchronized. Let's see this:

```csharp
using System;
using System.Runtime.CompilerServices;

namespace MethodImplSynchronizedTest
{
    class Program
    {
        static int item1 = 54, item2 = 21;

        static void Main(string[] args)
        {
            DoWork();
        }

        // Only one thread at a time can be executing DoWork.
        [MethodImpl(MethodImplOptions.Synchronized)]
        private static void DoWork()
        {
            if (item1 != 0)
                Console.WriteLine(item1 / item2);
            item2 = 0;
            Console.ReadLine();
        }
    }
}
```

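This simple example shows that you can use System.Runtime.CompilerServices.MethodImplAttribute to mark a method as synchronized (a critical section). Be aware of what it locks on: the instance (this) for an instance method, or the Type object for a static method. These are exactly the kinds of publicly visible objects that the lock(this) advice above warns against.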

One thing to note is that if you lock a whole method, you are missing the chance for better concurrency, as performance will be little better than that of a single-threaded model running the method. For this reason, you should try to keep critical sections around only the code that reads or writes fields shared between multiple threads.

Of course, there are some occasions where you may need to mark the entire method as a critical section, but this is your call. Just be aware that locks should generally be as small (granularity) as possible.

Miscellaneous objects

There are a few extra classes (and one keyword) that I feel are worth a mention; these are discussed below:

Interlocked

"A statement is Atomic if it executes as a single indivisible instruction. Strict atomicity precludes any possible preemption. In C#, a simple read or assignment on a field of 32 bits or less is atomic (assuming a 32-bit CPU). Operations on larger fields are non-atomic, as are statements that combine more than one read/write operation."

--Threading in C#, Joseph Albahari

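Consider the following code. Only x = 3 is guaranteed to be atomic here. z = 3 and myVar = z write and read a 64-bit long, which is not atomic on a 32-bit CPU. y += x and x++ each combine more than one read/write operation.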

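```csharp
using System;
using System.Threading;

namespace AtomicTest
{
    class AtomicTest
    {
        static int x, y;
        static long z;

        static void Test()
        {
            long myVar;
            x = 3;     // atomic: a 32-bit write
            z = 3;     // not atomic on a 32-bit CPU: a 64-bit write
            myVar = z; // not atomic on a 32-bit CPU: a 64-bit read
            y += x;    // not atomic: read y, read x, then write y
            x++;       // not atomic: read x, add 1, then write x
        }
    }
}
```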

One way to fix this would be to wrap the non-atomic operations in a lock statement. There is, however, a .NET class that provides a simpler and faster approach: the Interlocked class. Interlocked is also safer than a lock statement in one respect: it never blocks, so it can never cause a deadlock.
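Here is a minimal sketch of that fix. The AtomicFixed class and its Run method are illustrative, not from the original. The statements from AtomicTest are rewritten with Interlocked and then hammered from several threads to check that no updates are lost. One assumption to flag: y += x is replaced by adding a constant, so that the final totals are predictable. Interlocked.Add(ref y, x) would still read x separately, so that statement as a whole would not be atomic.

```csharp
using System;
using System.Threading;

public static class AtomicFixed
{
    static int x, y;
    static long z;

    // The statements from AtomicTest.Test(), each made atomic.
    static void Test()
    {
        Interlocked.Exchange(ref z, 3);       // z = 3, atomic even for a 64-bit long
        long myVar = Interlocked.Read(ref z); // myVar = z, atomic 64-bit read
        Interlocked.Add(ref y, 2);            // y += 2 as one atomic step
        Interlocked.Increment(ref x);         // x++ as one atomic step
    }

    // Runs Test() 'iterations' times on each of 'threadCount' threads and
    // returns the final { x, y } totals.
    public static int[] Run(int threadCount, int iterations)
    {
        x = 0;
        y = 0;
        z = 0;
        var threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(() =>
            {
                for (int n = 0; n < iterations; n++)
                    Test();
            });
            threads[i].Start();
        }
        foreach (Thread t in threads)
            t.Join();
        return new[] { x, y };
    }

    static void Main()
    {
        int[] totals = Run(4, 100000);
        Console.WriteLine("x = {0}, y = {1}", totals[0], totals[1]);
    }
}
```

Because every update is atomic, this should always print x = 400000, y = 800000, no matter how the four threads interleave.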

MSDN states:

"The methods of this class help protect against errors that can occur when the scheduler switches contexts while a thread is updating a variable that can be accessed by other threads, or when two threads are executing concurrently on separate processors. The members of this class do not throw exceptions."

Here is a small example using the Interlocked class:

```csharp
using System;
using System.Threading;

namespace InterlockedTest
{
    class Program
    {
        static long currentValue;

        static void Main(string[] args)
        {
            Interlocked.Increment(ref currentValue);
            Console.WriteLine(String.Format(
                "The value of currentValue is {0}",
                Interlocked.Read(ref currentValue)));

            Interlocked.Decrement(ref currentValue);
            Console.WriteLine(String.Format(
                "The value of currentValue is {0}",
                Interlocked.Read(ref currentValue)));

            Interlocked.Add(ref currentValue, 5);
            Console.WriteLine(String.Format(
                "The value of currentValue is {0}",
                Interlocked.Read(ref currentValue)));

            Console.ReadLine();
        }
    }
}
```

Which gives us the following results:

The Interlocked class also offers various other methods such as:

- CompareExchange(location1, value, comparand): If comparand and the value in location1 are equal, then value is stored in location1. Otherwise, no operation is performed. The compare and exchange operations are performed as an atomic operation. The return value of CompareExchange is the original value in location1, whether or not the exchange takes place.
- Exchange(location1, value): Sets a location to a specified value and returns the original value, as an atomic operation.

These two Interlocked methods are the building blocks for lock-free algorithms and data structures that would otherwise have to be protected by heavier locks (such as Monitor, Mutex etc.).
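A classic example is a lock-free "update the maximum" operation built from a CompareExchange retry loop. This is a sketch of that general pattern (LockFreeMax is a made-up name). Read the current value and compute the new one. Only store it if nobody changed the location in the meantime; otherwise, try again.

```csharp
using System;
using System.Threading;

public static class LockFreeMax
{
    // Atomically sets 'location' to Math.Max(location, value) and returns
    // the resulting value, without taking any lock.
    public static int UpdateMax(ref int location, int value)
    {
        int initial, computed;
        do
        {
            initial = location;                  // snapshot the current value
            computed = Math.Max(initial, value);
            if (computed == initial)
                return initial;                  // nothing to change
        }
        // Store 'computed' only if 'location' still holds 'initial';
        // if another thread got in first, loop and try again.
        while (Interlocked.CompareExchange(ref location, computed, initial) != initial);
        return computed;
    }

    static int sharedMax;

    // Each thread offers a different range of values; returns the final max.
    public static int ConcurrentMax(int threadCount, int valuesPerThread)
    {
        sharedMax = int.MinValue;
        var threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            int start = i * valuesPerThread;
            threads[i] = new Thread(() =>
            {
                for (int v = start; v < start + valuesPerThread; v++)
                    UpdateMax(ref sharedMax, v);
            });
            threads[i].Start();
        }
        foreach (Thread t in threads)
            t.Join();
        return sharedMax;
    }

    static void Main()
    {
        Console.WriteLine("Max seen: {0}", ConcurrentMax(4, 1000));
    }
}
```

No thread ever blocks here. A thread whose CompareExchange loses the race simply re-reads the location and retries, which is what makes the algorithm lock-free.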

Volatile

The volatile keyword can be applied to a shared field. It indicates that the field might be modified by something outside the current thread's control, such as the operating system, the hardware, or a concurrently executing thread.

MSDN states:

"The system always reads the current value of a volatile object at the point it is requested, even if the previous instruction asked for a value from the same object. Also, the value of the object is written immediately on assignment.

The volatile modifier is usually used for a field that is accessed by multiple threads without using the lock statement to serialize access. Using the volatile modifier ensures that one thread retrieves the most up-to-date value written by another thread."
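A typical use is a stop flag that one thread polls while another thread sets it. Here is a minimal sketch (the names are made up). Without volatile, the JIT compiler is allowed to hoist the read of stopRequested out of the loop, in which case the worker might never see the change. Note that volatile only affects how the field itself is read and written. It does not make compound operations such as count++ atomic; for those you still need Interlocked or a lock.

```csharp
using System;
using System.Threading;

public static class VolatileStopFlag
{
    // volatile: each read in the loop below is a fresh read of the field,
    // so it cannot be cached in a register.
    static volatile bool stopRequested;

    // Starts a worker that spins until stopRequested is set, sets it from
    // this thread, and returns whether the worker finished within 'timeout'.
    public static bool WorkerStops(TimeSpan timeout)
    {
        stopRequested = false;
        long spins = 0;
        var worker = new Thread(() =>
        {
            while (!stopRequested)
                spins++; // busy work; only the worker touches 'spins'
        });
        worker.Start();

        Thread.Sleep(100);    // let the worker run for a while
        stopRequested = true; // the worker should notice this promptly
        return worker.Join(timeout);
    }

    static void Main()
    {
        Console.WriteLine("Worker stopped: {0}", WorkerStops(TimeSpan.FromSeconds(5)));
    }
}
```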

ReaderWriterLockSlim

It is often the case that instances of a Type are thread safe in terms of read operations, but not for updates. Although this problem could be remedied with the use of the lock statement, it could become quite restrictive if there are many reads but not many writes. The ReaderWriterLockSlim class is designed to help out in this area.

The ReaderWriterLockSlim class (this is new to .NET 3.5) provides two kinds of locks: a read lock and a write lock. A write lock is exclusive, whereas a read lock is compatible with other read locks.

Put plainly, a thread holding a write lock blocks all other threads trying to obtain a read or write lock. If no thread currently holds a write lock, any number of threads may obtain a read lock.

The main methods that the ReaderWriterLockSlim class provides to facilitate these locks are as follows:

- EnterReadLock (*)
- ExitReadLock
- EnterWriteLock (*)
- ExitWriteLock

Where * indicates that there is also a safer "Try" version of the method available (TryEnterReadLock, TryEnterWriteLock), which supports a timeout.
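Here is a small sketch of those rules (RwlsRules is a made-up name). While the main thread holds a read lock, a second thread can still get a read lock, but a writer using TryEnterWriteLock with a timeout gives up. The reader and writer run on separate threads for a reason: with the default LockRecursionPolicy.NoRecursion, a thread that already holds a read lock gets a LockRecursionException if it asks for the write lock.

```csharp
using System;
using System.Threading;

public static class RwlsRules
{
    // Runs 'attempt' on a separate thread and returns its result.
    static bool OnOtherThread(Func<bool> attempt)
    {
        bool result = false;
        var t = new Thread(() => { result = attempt(); });
        t.Start();
        t.Join();
        return result;
    }

    // Returns { another reader got in, a writer got in } while a read lock is held.
    public static bool[] WhileReadLockHeld()
    {
        using (var rw = new ReaderWriterLockSlim())
        {
            rw.EnterReadLock();
            try
            {
                bool readerGotIn = OnOtherThread(() =>
                {
                    if (!rw.TryEnterReadLock(TimeSpan.FromMilliseconds(100)))
                        return false;
                    rw.ExitReadLock();
                    return true;
                });
                bool writerGotIn = OnOtherThread(() =>
                {
                    if (!rw.TryEnterWriteLock(TimeSpan.FromMilliseconds(100)))
                        return false;
                    rw.ExitWriteLock();
                    return true;
                });
                return new[] { readerGotIn, writerGotIn };
            }
            finally
            {
                rw.ExitReadLock();
            }
        }
    }

    static void Main()
    {
        bool[] r = WhileReadLockHeld();
        Console.WriteLine("Second reader got in: {0}, writer got in: {1}", r[0], r[1]);
    }
}
```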

Let's see a slightly altered example of this (original example courtesy of Threading in C#, Joseph Albahari).

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

namespace ReaderWriterLockSlimTest
{
    class Program
    {
        static ReaderWriterLockSlim rw = new ReaderWriterLockSlim();
        static List<int> items = new List<int>();
        static Random rand = new Random();

        static void Main(string[] args)
        {
            new Thread(Read).Start("R1");
            new Thread(Read).Start("R2");
            new Thread(Read).Start("R3");
            new Thread(Write).Start("W1");
            new Thread(Write).Start("W2");
        }

        static void Read(object threadID)
        {
            while (true)
            {
                // Take the lock before the try, so that ExitReadLock is only
                // called if EnterReadLock actually succeeded.
                rw.EnterReadLock();
                try
                {
                    Console.WriteLine("Thread " + threadID +
                        " reading common source");
                    foreach (int i in items)
                        Thread.Sleep(10);
                }
                finally
                {
                    rw.ExitReadLock();
                }
            }
        }

        static void Write(object threadID)
        {
            while (true)
            {
                int newNumber = GetRandom(100);
                rw.EnterWriteLock();
                try
                {
                    items.Add(newNumber);
                }
                finally
                {
                    rw.ExitWriteLock();
                }
                // Logging and sleeping happen outside the write lock.
                Console.WriteLine("Thread " + threadID +
                    " added " + newNumber);
                Thread.Sleep(100);
            }
        }

        static int GetRandom(int max)
        {
            // Random is not thread safe, so serialize access to it.
            lock (rand)
                return rand.Next(max);
        }
    }
}
```

Which shows something similar to the following:

It should be noted that there is also a ReaderWriterLock class within the System.Threading namespace. This is what MSDN has to say about the difference between ReaderWriterLockSlim (.NET 3.5) and ReaderWriterLock (.NET 2.0):

ReaderWriterLockSlim is similar to ReaderWriterLock, but it has simplified rules for recursion and for upgrading and downgrading lock state. ReaderWriterLockSlim avoids many cases of potential deadlock. In addition, the performance of ReaderWriterLockSlim is significantly better than ReaderWriterLock. ReaderWriterLockSlim is recommended for all new development.

According to Sasha Goldshtein (Senior consultant and instructor, Sela Group), Jeffrey Richter (author of the famous CLR Via C# book) has written revised versions of the ReaderWriterLock which are supposed to provide better performance. Sasha also informed me that Windows Vista adds yet another reader/writer lock (the Slim Reader/Writer, or SRW, lock) as part of the OS.

I certainly picked the right technical reviewer; thanks, Sasha. Which leads me nicely on to the real thanks below.

Special thanks

I would like to personally thank Sasha Goldshtein (Senior consultant and instructor, Sela Group) for his help with technically reviewing this article.

Sasha Goldshtein is someone I chatted with as a result of the last two articles in this series. He always seemed to be leaving comments about things I had got wrong, or asking questions that I didn't know the answers to. So I approached Sasha and asked him if he would mind being the technical reviewer for the rest of this series, which he kindly agreed to. So thanks, Sasha, from Sacha (me).

We're done

Well, that's all I wanted to say this time. I just wanted to say, I am fully aware that this article borrows a lot of material from various sources; I do, however, feel that it could alert a potential threading newbie to classes/objects that they simply did not know to look up. For that reason, I still maintain there should be something useful in this article. Well, that was the idea anyway. I just hope you agree; if so, tell me, and leave a vote.

Next time

Next time, we will be looking at Thread Pools.

Could I just ask, if you liked this article, could you please vote for it? I thank you very much.

Bibliography

- Threading in C#, Joseph Albahari

- System.Threading MSDN page

- Threading Objects and Features MSDN page

- Visual Basic .NET Threading, Wrox