Contents

- Repository of the metadata for the Contract Model

- Contract Model of the endpoints, bindings, contracts, operations, messages, schemas, WSDL

- Manually creating and editing metadata in the Repository

- Importing contracts from endpoints (WSDL, Mex)

- Importing contracts from assemblies

- Drag & Drop metadata into the Repository

- Compounding metadata for operations, messages, etc. into the Virtual Contract

- Creating Virtual Endpoints

- Compiling schemas, messages, operations, and contracts

- Exporting (generating) metadata for endpoints

- Repository Service to discover metadata (WS-Transfer Get, etc.) hosted by a Windows NT Service

- Built-in client tester driven by the svcutil program

- Export-Import loopback test

- Built-in MMC Framework

- SQL schema for storage

- Implementation using LINQ to SQL, MMC 3.0, and .NetFX 3.5 technologies

The latest Microsoft .NetFX 3.5 release introduced an integration model based on the Windows Communication Foundation (WCF) and Windows Workflow Foundation (WF) technologies. This model is called WorkflowService, and it projects both service connectivity and business activity. In the current .NetFX 3.5, the WorkflowService projection is driven by WCF and WF metadata held in three resources: a config file (the system.serviceModel section group), XOML, and rules files. This metadata can be extended with other resources such as XSLT, WSDL, etc. I recommend reading my recent article VirtualService for ESB, which covers this topic in more detail from the service-side point of view.

From the architecture point of view, services driven by metadata enable mapping a logical business model onto the physical one and managing it. This strategy appears in the Microsoft road map first presented at PDC 2003, and more details about the Oslo project are expected at the upcoming PDC 2008. You may also visit the following link, and read What is Oslo? by Douglas Purdy, Product Unit Manager for Oslo.

This strategy separates the metadata from the implementation layer and the host AppDomains, and puts it into one centralized place known as the Repository. This Knowledge Base can be created and modeled at design time, and then mapped onto physical targets based on the deployment schema. In other words, present technologies such as .NetFX 3.5 integrate the WCF and WF models at the runtime hosting layer, while the upcoming version of the .NetFX is about the integration of metadata. Having a well-structured, integrated metadata model represented by one resource (XAML) will simplify the design and implementation of the modeling tool and the runtime bootstrap. Based on the PDC 2008 sessions (Oslo/WCF/WF topics), we can see that the WorkflowService is going to play a key role in fully manageable services.

From the enterprise point of view, the Repository can easily grow into a large Knowledge Base, and without the right tools it will be very difficult to manage the business models of thousands of endpoints, bindings, services, workflows, XSLT, and other resources. Manageable services driven by metadata are a big challenge for the modeling, simulation, deployment, and runtime processes, and, of course, they require a qualitatively different way of thinking (declarative programming) from designers and developers. This article addresses one portion of this challenge: the Repository of the Contract Model for Manageable Services. Note that we are limited to current technologies such as .NetFX 3.5.

What do Manageable Services and Contract Model mean?

Well, let's start by briefly describing the "legacy" Web Services, and follow up with the VirtualService, which represents a fully manageable service.

Basically, a service can be considered as fully manageable in the business model if its connectivity, behavior, business workflow, and deployment are described by metadata and located in the Enterprise Service Repository.

Unmanageable Service

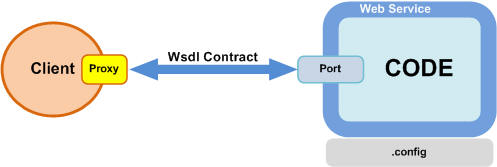

The following picture shows a typical connectivity between a Web Service and its consumer client:

The Web Service is implemented with a hardcoded model for a specific hosting, transport, binding, and message exchange pattern. In addition, the business logic and activities are hardcoded at design time. This model has very limited public metadata, which is mostly held in a local repository such as a config file. Every change in the web service config metadata forces the hosting process to recycle. Using a SoapExtension in the message pipeline, we can customize the encoding, message contract, etc., and publish some metadata for managing the conversation on the fly. Note that such extensions are private, local features of the specific web service.

The advantage of this model is its simplicity (just give the URL address of the port) and its interoperability between different platforms based on the WSDL spec, a well-known XML-formatted contract document for consuming services. The Web Service has a built-in exporter of its metadata, represented by the WSDL document resource. Based on this resource, the client can create a proxy at design time, or dynamically at runtime. This is a great feature of loosely coupled connectivity in the logical connectivity model.

In the end, the Web Service is considered an unmanageable business service. Therefore, we need another communication paradigm for mapping logical connectivity to physical connectivity in an easy and manageable way. This is accomplished very easily in WCF. The following picture shows a WCF Service instead of a Web Service.

The WCF model is a qualitative change in the connectivity model, where each layer can be declaratively described and stored in a metadata resource (such as a config file). Based on the ServiceContract model, the business logic and activities are fully encapsulated from the hosting environment. Hosting a service is fully transparent to the AppDomain and the transport channels. Of course, WCF also supports exporting the metadata, and not just over the HTTP/HTTPS transport.

It looks like the WCF model is a good candidate for a manageable service, doesn't it? Almost; we are very close. The missing part is the orchestration behind the service. In other words, the service is still hardcoded at design time, and its manageability depends on private features. That's the bad news; the good news is that the recently released .NetFX 3.5 integrates the two models into one paradigm, called WorkflowService.

Manageable Service

The following picture shows a manageable service based on the WorkflowService model. The WCF features are extended by the Workflow model, and integrated into the common model driven by metadata located in the config, XOML and rules resources.

Using WorkflowServices for mapping the logical business model onto a physical one gives us the capability to mediate connectivity and orchestrate business activities. As you can see, all the resources are located in the service domain. The current version only supports reading resources from a file system, such as a .config file and XOML/rules files. Therefore, any change in the config file forces the host process to recycle. One solution for hosting services in isolated business domains can be found in my recent article: VirtualService for ESB. Based on this solution, we can centralize metadata in the Repository storage.

The following picture shows the next step of our service modification:

As you can see in the above picture, the services metadata is stored in a database, which is one common place for modeling and managing it. This looks very nice and doable in the picture, but with today's technologies we need to build another infrastructure layer on top of .NetFX 3.5: hosting services in specific AppDomains, a bootstrap for metadata, a service for managing the hosted services, a repository model, tools, etc.

The WorkflowService can be connected to the Enterprise Service Bus in the position of a Connector, with the responsibility for contract mediation, service accessibility, etc.; in other words, for service integration. See the following picture:

OK, let's continue; we have one more bullet in the manageable service description: Untyped Message.

Untyped Message

To enable virtualization of the service and, of course, to increase its manageability, the Service Contract should be virtualized. The virtual service can handle an incoming typed message with an untyped Operation Contract, as transparently as a typed contract would. On the opposite side, when the service talks to another service, the virtual service can generate an outgoing untyped message to an endpoint driven by a typed operation contract. This is a great integration feature, because the virtual service gains full control of the message flow, the exchange pattern, and message mediation.

That is true. The VirtualService (a manageable WorkflowService) driven by metadata can project a Virtual Contract created at design time from existing and/or manually imported contracts, operations, messages, and data contracts. The following picture shows this composition:

In the above example, Contract A represents a Virtual Contract as a result of the composition process of Contracts 1 - N, where the specific operations have been imported and compounded into one new virtual contract. The composition process can mediate data contracts using the XSLT technology (that's an additional resource in the metadata).
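The XSLT mediation of data contracts can be sketched with XslCompiledTransform from System.Xml.Xsl. This is a minimal illustration, not the tool's code: XsltMediator, the urn:contract-1 and urn:virtual namespaces, and the Order/PurchaseOrder shapes are hypothetical, and the XSLT text is assumed to be loaded from the Repository as a string resource.

```csharp
using System.IO;
using System.Xml;
using System.Xml.Xsl;

// Hypothetical helper: applies an XSLT resource (stored as text) to a message
// body, mapping one data contract shape onto another.
public static class XsltMediator
{
    public static string Transform(string xsltText, string inputXml)
    {
        var xslt = new XslCompiledTransform();
        using (var xsltReader = XmlReader.Create(new StringReader(xsltText)))
        {
            xslt.Load(xsltReader);
        }

        // No XML declaration: the result is meant to be a message body.
        var settings = new XmlWriterSettings { OmitXmlDeclaration = true };
        var output = new StringWriter();
        using (var inputReader = XmlReader.Create(new StringReader(inputXml)))
        using (var writer = XmlWriter.Create(output, settings))
        {
            xslt.Transform(inputReader, writer);
        }
        return output.ToString();
    }
}
```

For example, a stylesheet with a template matching a:Order (a = urn:contract-1) that emits b:PurchaseOrder/b:Id (b = urn:virtual), declared with exclude-result-prefixes="a", maps an Order with OrderId 42 onto a PurchaseOrder whose Id is 42.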

The following picture shows an example of the VirtualService with the XOML composition process, where an untyped message is branched by its operation action into separate activities for message mediation. In this example, there are five branches in the service: the first three operations are for business activities, and the other two are for internal features of the service, such as error handling and publishing metadata. Note that these two branches should be included in any virtual service.

An unsupported operation in the virtual service is mostly handled by throwing a specific exception, which returns a Fault Message via the untyped contract. Other features, such as publishing metadata (WSDL or a Mex endpoint), are more challenging tasks for a virtual service. Note that the service has a virtual (generic) contract based on an untyped message contract. The following code snippet shows an example of the virtual contract:

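    using System.ServiceModel;
    using System.ServiceModel.Channels;

    [ServiceContract(Namespace = "urn:rkiss.esb/2008/08")]
    public interface IGenericContract
    {
        // Action = "*" routes every incoming action to this single operation;
        // ReplyAction = "*" leaves the reply action to the returned Message.
        [OperationContract(Action = "*", ReplyAction = "*")]
        Message ProcessMessage(Message message);
    }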

A virtual contract like the one above does not produce metadata describing the composed contract operations and/or data. One solution to this challenge is to create this metadata at design time (modeling) and export it to the virtual service as another resource. In this case, the built-in service for metadata exchange is turned off (the default configuration) and replaced by an operation branch (see the above picture) that simulates it using the WS-Transfer Get operation. This activity simply returns a WSDL resource to the caller.
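The branching of an untyped message by its action, including the metadata and error branches, can be sketched without WCF types. This is a hypothetical illustration: UntypedMessage stands in for System.ServiceModel.Channels.Message, and ActionDispatcher is not part of any library. The WS-Transfer Get/GetResponse and WS-Addressing fault action URIs are the published ones; the fault body follows the SOAP 1.2 Fault element layout.

```csharp
using System;
using System.Collections.Generic;
using System.Xml.Linq;

// Hypothetical stand-in for an untyped message: an action URI plus an XML body.
public sealed class UntypedMessage
{
    public UntypedMessage(string action, XElement body)
    {
        Action = action;
        Body = body;
    }

    public string Action { get; }
    public XElement Body { get; }
}

// Sketch of the branching inside a virtual service: supported actions map to
// branches, WS-Transfer Get returns the design-time WSDL, and any other action
// falls through to a fault branch.
public sealed class ActionDispatcher
{
    public const string TransferGetAction = "http://schemas.xmlsoap.org/ws/2004/09/transfer/Get";
    public const string TransferGetResponseAction = "http://schemas.xmlsoap.org/ws/2004/09/transfer/GetResponse";
    public const string FaultAction = "http://www.w3.org/2005/08/addressing/soap/fault";

    private static readonly XNamespace Soap12 = "http://www.w3.org/2003/05/soap-envelope";

    private readonly Dictionary<string, Func<UntypedMessage, UntypedMessage>> branches =
        new Dictionary<string, Func<UntypedMessage, UntypedMessage>>(StringComparer.Ordinal);

    public ActionDispatcher(XElement publishedWsdl)
    {
        // Metadata branch: return a copy of the WSDL resource produced at design time.
        branches[TransferGetAction] = request =>
            new UntypedMessage(TransferGetResponseAction, new XElement(publishedWsdl));
    }

    public void AddBranch(string action, Func<UntypedMessage, UntypedMessage> branch)
    {
        branches[action] = branch;
    }

    public UntypedMessage Process(UntypedMessage request)
    {
        if (request.Action != null &&
            branches.TryGetValue(request.Action, out Func<UntypedMessage, UntypedMessage> branch))
        {
            return branch(request);
        }

        // Unsupported operation: reply with a SOAP 1.2 style fault body.
        var fault = new XElement(Soap12 + "Fault",
            new XAttribute(XNamespace.Xmlns + "env", Soap12.NamespaceName),
            new XElement(Soap12 + "Code", new XElement(Soap12 + "Value", "env:Sender")),
            new XElement(Soap12 + "Reason",
                new XElement(Soap12 + "Text",
                    new XAttribute(XNamespace.Xml + "lang", "en"),
                    "Unsupported action: " + request.Action)));
        return new UntypedMessage(FaultAction, fault);
    }
}
```

Business branches are registered per action with AddBranch; any other action gets the fault message, which plays the role of the error-handling branch in the XOML workflow.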

As you can see, this concept pushes the challenge of service virtualization to design time, and the bootstrap infrastructure doesn't care who generated this resource, or how, before it was stored in the repository. We can use various tools from the market (such as Altova) to generate this metadata, and compose it manually in a Contract Model for runtime projection. The other way is to create a lightweight tool hosted by MMC. I will describe this MMC Tool in more detail later.

The following picture shows a concept of the manageable service driven by metadata:

The Repository is the central component of this concept, where the metadata for our model (communication, workflow, mediations, etc.) is stored. Basically, there are the following major activities:

- Composition of the metadata by Tool, where Tool can import and create contracts, operations, schemas, bindings, WSDL, XSLT, endpoint, etc. Note, the contract can be imported from the existing endpoint or assembly.

- Pulling up metadata by the VirtualService (bootstrapping).

- Metadata exchange from the well-known endpoint based on the resource topic. For instance, by calling the endpoint net.pipe://localhost/repository/mex?order, we get a metadata exchange resource for the topic order, just as transparently as from any other endpoint. Note that the Tool can also import its own metadata that was created by modeling.
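Extracting the topic from such an address needs only System.Uri. A minimal sketch, assuming the topic is the whole query string (as in ?order); RepositoryAddress.GetTopic is a hypothetical helper name, not part of the tool.

```csharp
using System;

public static class RepositoryAddress
{
    // Hypothetical helper: returns the topic carried in the query part of the
    // Repository endpoint address, or null when no topic is given.
    public static string GetTopic(Uri address)
    {
        if (address == null) throw new ArgumentNullException(nameof(address));

        string query = address.Query;   // "?order", or "" when there is no query
        return query.Length > 1 ? Uri.UnescapeDataString(query.Substring(1)) : null;
    }
}
```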

How does this model work?

Well, I can explain it with the following basic scenario, where I assume that the Tool has already created metadata for the virtual service and that we have an active service in the host environment. Let's say some client wants to perform a business workflow for ordering a book. The client doesn't need to know the connectivity for this business; it only needs to know who knows it (like an artificial intelligence). Therefore, the client calls the well-known endpoint of this Knowledge Base to get it (the Discovery phase). Based on the imported metadata exchange resource, the client has enough information to create a proxy channel for the business endpoint. The proxy can be cached, and recreated from the Knowledge Base (Repository) based on specific needs, such as an expired lifetime, an error, etc.
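The client-side caching of proxies described above can be sketched as a small cache keyed by topic. Assumptions: ProxyCache is hypothetical, the createProxy delegate stands for the discovery step (fetching metadata from the Repository endpoint and building a channel), and the clock is injectable so that expiry can be tested.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical proxy cache: a proxy is reused until its lifetime expires or
// until the caller invalidates it after an error.
public sealed class ProxyCache<TProxy> where TProxy : class
{
    private sealed class Entry
    {
        public TProxy Proxy;
        public DateTime ExpiresUtc;
    }

    private readonly ConcurrentDictionary<string, Entry> entries =
        new ConcurrentDictionary<string, Entry>();
    private readonly Func<string, TProxy> createProxy;  // discovery + channel creation
    private readonly TimeSpan lifetime;
    private readonly Func<DateTime> utcNow;

    public ProxyCache(Func<string, TProxy> createProxy, TimeSpan lifetime, Func<DateTime> utcNow = null)
    {
        this.createProxy = createProxy ?? throw new ArgumentNullException(nameof(createProxy));
        this.lifetime = lifetime;
        this.utcNow = utcNow ?? (() => DateTime.UtcNow);
    }

    public TProxy Get(string topic)
    {
        DateTime now = utcNow();
        if (entries.TryGetValue(topic, out Entry entry) && entry.ExpiresUtc > now)
            return entry.Proxy;

        // Missing or expired: go back to the Repository for fresh metadata.
        var fresh = new Entry { Proxy = createProxy(topic), ExpiresUtc = now + lifetime };
        entries[topic] = fresh;
        return fresh.Proxy;
    }

    // Call after a communication error so the next Get rediscovers the endpoint.
    public void Invalidate(string topic)
    {
        entries.TryRemove(topic, out _);
    }
}
```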

The above described concept can be abstracted in the following picture:

As you can see, there are two models. The first one is about the Contract, which can be recognized by any component, such as a service or a client. This is a logical connectivity model describing "who, how, and what" on the ESB. Note that this article focuses on this model as the first part of the Tool.

The second model is the Application Model. This model describes the behavior of the service, the business workflow, activities, hosting in the AppDomains, messages and service mediations, etc. This is another challenge of service virtualization, part of its business modeling.

The models are logically connected by the ServiceEndpoint metadata, which is a unique resource on the ESB. The ServiceEndpoint is exported by the Contract Model for a specific Address, Binding, and Contract (ABC). By assigning the ServiceEndpoint to the Application Model, we get a common model of the VirtualService.

OK, finally, we have a picture of the ESB abstraction, where the Contract Models represent a Service Bus that is logically centralized in the Repository and physically decentralized: the metadata is bootstrapped by the VirtualService Bootstrap located on the specific hosting machines:

The Repository storage is plugged into the ESB via a Connector Service, with a public endpoint for getting a metadata resource by topic. This endpoint represents all virtual services managed by the Repository. The client should call this endpoint before the first business call, to create a proxy dynamically. Of course, the virtual service also supports the metadata exchange feature, but in this case, the client has full responsibility for managing this physical business endpoint (for example: rerouting, mediation, etc.), like any other endpoint outside of the ESB.

As I mentioned earlier, the Repository Service represents an Enterprise endpoint for discovering metadata. The following picture shows this scenario:

As you can see, the metadata service consumer can also be Visual Studio 2008, a console utility such as svcutil.exe, or any client. This is a great feature for centralizing manageable services, where contracts, operations, data, etc. can be unified and reused in the Contract Model. Another feature is the Model-First concept, where the Contract Model is created prior to the business process located behind the service. The VirtualService can help with the emulation of the business process in a transparent manner during the development cycle.

Summary

The following picture shows a strategy of the Repository in distributed systems. In the Repository, the business model is logically centralized for modeling purposes and physically decentralized for runtime processing. In other words, via the Repository (database), the modeling tools can declaratively describe the service, its connectivity, message mediation, business processing, etc.

OK, that's all for now; let's start digging into the design and implementation of the Contract Model and the MMC Tool.

As I mentioned above, manageable services are driven by metadata (such as connectivity, business activity, etc.) centralized in the Repository. This logically centralized model must be ready for bootstrapping by business AppDomains and physically deployed on the Enterprise network. The challenge of this solution is creating the logical model, including its modeling, simulation, and management. This process goes through many stages, from the development environment to the production one. VirtualServices running on the virtual server enable the modeling process for business simulation and emulation at each stage of the development cycle. At the bottom of this vertical model is the Contract Model, which logically describes connectivity by an Endpoint resource.

The following picture shows an example of the Contract Model diagram, where the Endpoint object represents an abstraction of the service interoperability:

The Contract Model is organized into a data hierarchy of Schemas, Messages, Operations, Contracts, and Endpoints resources. An Endpoint declared by this model can be published by storing its XML-formatted text in a WSDL resource. This WSDL resource can be queried via the Repository service by name, with a sub-query for topic, version, and contract.
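Querying published WSDL resources by name, with optional topic, version, and contract filters, can be sketched with LINQ. The WsdlResource shape and its column names are hypothetical, not the article's SQL schema; with LINQ to SQL, the same predicate could be applied to an IQueryable table so that the filter runs in the database.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical row shape for a published WSDL resource.
public sealed class WsdlResource
{
    public string Name { get; set; }
    public string Topic { get; set; }
    public string Version { get; set; }
    public string Contract { get; set; }
    public string Wsdl { get; set; }
}

public static class WsdlQuery
{
    // Filter by name, then optionally narrow by topic, version and contract.
    // A null filter value means "do not filter on this column".
    public static IEnumerable<WsdlResource> Find(
        IEnumerable<WsdlResource> source, string name,
        string topic = null, string version = null, string contract = null)
    {
        return source.Where(r => r.Name == name
            && (topic == null || r.Topic == topic)
            && (version == null || r.Version == version)
            && (contract == null || r.Contract == contract));
    }
}
```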

Once the Contract Model schema is created in the repository storage and the standard bindings metadata supported by WorkflowServices is loaded, we are ready for mapping logical connectivity onto the physical one. The knowledge base of this repository can be built up by importing metadata from the cloud network, or manually by using the MMC Tool. The following picture shows this scenario:

Based on the imported metadata, such as schemas and/or contracts, we can increase the knowledge of the connectivity model. By compounding operations, messages, etc. into new contracts, we can virtualize our model connectivity. Besides that, we can manage metadata on the fly based on the application behavior. Note that this article's implementation has some limitations in the Contract Model and MMC Tool (for instance, only one contract can be assigned to an endpoint).

The above picture shows the position of the virtual service managed by metadata models such as the Application and Contract Models. The top of this picture shows direct connectivity between the physical ends. By injecting a logical point between them, we can manage business contracts in a compound manner using different message exchange patterns (such as Request/Response, OneWay, etc.). This feature is enabled by using Untyped Contracts. Of course, there is no limitation on using a Typed Contract in the Virtual Service when it is suitable, for instance, for REST/WS-Eventing connectivity to the Enterprise Storage.

To run the above scenario, the Repository must hold a knowledge base of the business workflow, contracts, and the hosting environment. All this information is stored as relational resources in the models. Note that the rational model of the Enterprise Businesses can sit on top of these models.

All resources in the Repository are represented by text and/or XML-formatted text elements. There are no binary serialized CLR objects in the model; therefore, our models (Knowledge Base) must hold enough information to provide runtime support for creating CLR types, instances, AppDomains, business activities, etc. Mapping the metadata to runtime CLR objects is a big challenge. Using "workhorse" classes such as WsdlExporter, WsdlImporter, and XmlSchemaSet from the System.ServiceModel.Description and System.Xml.Schema namespaces, together with LINQ, simplifies the implementation of the Contract Model.

Publishing ServiceEndpoint

The following picture shows the basic process of publishing metadata:

The design concept of exporting the metadata is to create a CLR instance of the System.ServiceModel.Description.ServiceEndpoint class from the metadata represented by textual resources. As the above picture shows, the ABC service endpoint needs an address, which is very easy to create using an EndpointAddress class by passing a Uri address. The challenge starts with a binding type, where an instance of the Binding class is generated based on the XML formatted resource.

The Contract Model preloads all the standard bindings supported by .NetFX 3.5 into the Repository, and the user can add additional bindings by using the embedded XmlNotepad 2007 (at this point, I would like to thank this great CodePlex project for allowing me to embed its assembly into the MMC framework).

OK, back to the above picture, the Binding description.

Binding

The mapping between CLR objects and data is encapsulated in two helper classes located in separate files. The following picture shows their class diagram:

The first class, ConfigHelper, deserializes an XML-formatted resource into a configuration section, in this case the BindingsSection. This deserialization gives us the BindingsSection just as if it had been loaded from the config file.

The following code snippet shows a generic method for deserializing a config section from XML text:

    using System;
    using System.Configuration;
    using System.IO;
    using System.Reflection;
    using System.Xml;

    public static T DeserializeSection<T>(string config) where T : ConfigurationSection, new()
    {
        T cfgSection = new T();

        // The resource is an XML fragment, not a complete config file.
        XmlReaderSettings xmlReaderSettings = new XmlReaderSettings();
        xmlReaderSettings.ConformanceLevel = ConformanceLevel.Fragment;

        // Read the text directly (no ASCII round trip, so non-ASCII characters survive).
        using (XmlReader reader = XmlReader.Create(new StringReader(config), xmlReaderSettings))
        {
            try
            {
                // ConfigurationSection.DeserializeSection is protected internal,
                // so it is invoked via reflection.
                MethodInfo mi = typeof(ConfigurationSection).GetMethod("DeserializeSection",
                    BindingFlags.Instance | BindingFlags.NonPublic);
                mi.Invoke(cfgSection, new object[] { reader });
            }
            catch (Exception ex)
            {
                // Wrap the failure, keeping the original exception as InnerException.
                throw new Exception("...", ex);
            }
        }
        return cfgSection;
    }

With the BindingsSection object created from metadata, we can walk through the standard .NetFX 3.5 bindings or a custom binding. In the end, we have an instance of the binding class for our ServiceEndpoint. The following code snippet shows the helper method:

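    using System.ServiceModel.Channels;
    using System.ServiceModel.Configuration;

    public static Binding CreateBinding(
        string bindingName, string bindingNamespace, string xmlBinding)
    {
        // Wrap the stored binding fragment so it deserializes as a <bindings> section.
        xmlBinding = string.Format("<bindings>{0}</bindings>", xmlBinding);
        BindingsSection bindingSection =
            DeserializeSection<BindingsSection>(xmlBinding);

        // Find the named binding in the section and build the Binding instance.
        Binding binding = CreateEndpointBinding(bindingName, bindingSection);
        if (!string.IsNullOrEmpty(bindingNamespace))
            binding.Namespace = bindingNamespace;
        return binding;
    }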

Next, the third requirement of the ServiceEndpoint is the Contract Description. This is the key part of the implementation, where metadata such as contracts, operations, messages, headers, data types, schemas, etc., described by relational data resources, is mapped to the ContractDescription type.

ContractDescription

The process of mapping metadata into the ContractDescription type begins with defining the DataContract types for the MessageDescription and MessageHeaderDescription objects. We need a schema in order to create the MessageBody or HeaderBody type. Therefore, the schemas are the bottleneck of our Contract Model hierarchy. Schemas can be imported online, dragged and dropped from third-party tools such as Altova, or created interactively using the built-in XmlNotepad 2007 user control. The schemas stored in the Repository and Notepad can be pre-compiled at any time by clicking on the MMC action item.

As I mentioned earlier, the Contract Model enables composing the imported contracts and sharing their schemas, messages, headers, and operations. The imported schemas must be properly collected in the XmlSchemaSet container to avoid duplicates, etc. Each step of the contract composition can be pre-compiled before it is finalized in the Repository.

For this metadata mapping, the static WsdlHelper class has been created to simplify the implementation (see the above class diagram).

It has many helpful methods for processing the metadata, which keep this logic out of the user interface. The following code snippet exports the service endpoint:

    using System;
    using System.Collections.ObjectModel;
    using System.Linq;
    using System.ServiceModel.Description;
    using System.Text;
    using System.Xml;

    private void buttonExport_Click(object sender, EventArgs e)
    {
        StringBuilder sbLog = new StringBuilder();
        LocalRepositoryDataContext repository =
            ((LocalRepositorySnapIn)this.view.SnapIn).Repository;

        #region PART 1: Generate ContractDescription (CLR Types)
        var contractTypes = WsdlHelper.GenerateContractDescriptions(
            repository, selectedEndpoint.contractId, selectedOperations, sbLog);
        ContractDescription contractDescription =
            contractTypes.FirstOrDefault(t => t.Name == selectedContract.Name);
        #endregion

        #region PART 2: Generate ServiceEndpoints
        // binding, endpoint (the EndpointAddress) and policyVersion are not declared in this snippet.
        Collection<ServiceEndpoint> endpoints = new Collection<ServiceEndpoint>()
        {
            new ServiceEndpoint(contractDescription, binding, endpoint)
                { Name = this.selectedEndpoint.name },
        };
        #endregion

        #region PART 3: Generate wsdl
        MetadataSet metadataDocs =
            WsdlHelper.GenerateMetadata(endpoints, policyVersion);
        StringBuilder sb = new StringBuilder();
        // Dispose the writer so that all buffered output is flushed into sb.
        using (XmlWriter writer = XmlWriter.Create(sb))
        {
            metadataDocs.WriteTo(writer);
        }
        #endregion

        xmlNotepadPanelControl.XmlNotepadForm.LoadXmlDocument(sb.ToString());
    }

The above implementation looks very straightforward thanks to the WsdlHelper class. As an example of this helper, the following code snippet creates a set of schemas for an operation:

    using System;
    using System.IO;
    using System.Linq;
    using System.Xml;
    using System.Xml.Schema;

    public static XmlSchemaSet CreateOperationXmlSchemaSet(
        LocalRepositoryDataContext repository,
        Guid? inputMessageId,
        Guid? outputMessageId,
        XmlSchemaSet set)
    {
        if (set == null)
        {
            set = new XmlSchemaSet();
            // Fail fast on any schema error.
            set.ValidationEventHandler += delegate(object sender, ValidationEventArgs args)
            {
                throw args.Exception;
            };
        }

        var im = repository.Messages.FirstOrDefault(m => m.id == inputMessageId);
        if (im != null)
        {
            var om = repository.Messages.FirstOrDefault(m => m.id == outputMessageId);

            if (im.schemaId == null || im.Schema.schema1 == null)
                throw new Exception("Missing schema for input message ...");

            // Add the input message schema unless a schema with the same
            // TargetNamespace and SourceUri is already in the set.
            if (!set.Schemas().Cast<XmlSchema>().Any(s =>
                    s.TargetNamespace == im.Schema.@namespace &&
                    s.SourceUri == im.Schema.sourceUrl))
            {
                set.Add(null, XmlReader.Create(
                    new StringReader(im.Schema.schema1.ToString()))).SourceUri =
                    im.Schema.sourceUrl;
            }

            if (im.headers != null)
                CreateXmlSchemaSetForHeaders(repository, im.headers.ToString(), set);

            // The output message is optional (for example, one-way operations).
            if (om != null && om.schemaId != null)
            {
                if (!set.Schemas().Cast<XmlSchema>().Any(s =>
                        s.TargetNamespace == om.Schema.@namespace &&
                        s.SourceUri == om.Schema.sourceUrl))
                {
                    set.Add(null, XmlReader.Create(
                        new StringReader(om.Schema.schema1.ToString()))).SourceUri =
                        om.Schema.sourceUrl;
                }

                if (om.headers != null)
                    CreateXmlSchemaSetForHeaders(repository, om.headers.ToString(), set);
            }
        }

        ImportSchemas(repository, set);
        return set;
    }

While the above method runs, the XmlSchemaSet accumulates the schemas of all the contract's operations from the repository (identified by the input/output message IDs). The schema lookup is based on the TargetNamespace and SourceUrl, in order to avoid duplicate schemas. Once we have a complete set of schemas at the contract(s) level, we can compile it. This is the step that can break our mapping process. When it happens, an error message pops up with the error description. Note that the problem must be solved before continuing to the next step. That's why each level of the metadata has an action to pre-compile it, which prevents bad metadata resources from being stored in the repository.
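The duplicate check and the pre-compile step can be sketched with XmlSchemaSet alone. A minimal sketch: SchemaCompiler and its methods are hypothetical; unlike the helper above, which throws on the first error, TryCompile collects all error messages through a temporary ValidationEventHandler, so a tool could show them together before anything is stored.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Xml;
using System.Xml.Schema;

public static class SchemaCompiler
{
    // Adds schema text to the set unless a schema with the same TargetNamespace
    // and SourceUri is already there (the same duplicate check as above).
    public static void AddOnce(XmlSchemaSet set, string schemaText, string sourceUri)
    {
        XmlSchema schema;
        using (var reader = XmlReader.Create(new StringReader(schemaText)))
        {
            // With a null handler, malformed schema text throws XmlSchemaException.
            schema = XmlSchema.Read(reader, null);
        }

        bool exists = set.Schemas().Cast<XmlSchema>().Any(s =>
            s.TargetNamespace == schema.TargetNamespace && s.SourceUri == sourceUri);
        if (exists)
            return;

        XmlSchema added = set.Add(schema);
        if (added != null)
            added.SourceUri = sourceUri;
    }

    // Pre-compiles the set and returns the error messages instead of throwing.
    public static IList<string> TryCompile(XmlSchemaSet set)
    {
        var errors = new List<string>();
        ValidationEventHandler handler = (sender, args) =>
        {
            if (args.Severity == XmlSeverityType.Error)
                errors.Add(args.Message);
        };

        set.ValidationEventHandler += handler;
        try
        {
            set.Compile();
        }
        finally
        {
            set.ValidationEventHandler -= handler;
        }
        return errors;
    }
}
```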

Another interesting feature of the WsdlExport class is creating an in-memory assembly of the types described by the schemas. Note that in this version of the article, the assembly is loaded into the default AppDomain. It should be loaded into a separate AppDomain so that it can be unloaded later.

That's all for the description; let's continue by describing a tool that allows us to create the metadata for service endpoints.

esbLocalRepository Tool

The esbLocalRepository is a lightweight tool for creating and managing metadata for the Contract Model. This tool is implemented in the MMC 3.0 framework, and it uses the LINQ to SQL technology for handling resources in the SQL database.

The UI layout design is based on three panels. The left one is dedicated to metadata resource types such as schemas, messages, operations, contracts, and endpoints/WSDL. The right panel is an Action panel for the selected resource. For instance, the operation resource has the following actions: All Operations, Add New Operation, Compile Operation, Delete, Refresh. The central panel is for interactive work with the metadata resource. The relational resources are read-only. The user controls are DataGridView oriented, which allows us to page and filter specific resources from the repository.

The following picture is a screen snippet of adding a new operation ("Add New Operation") to the repository:

The user control hierarchy grows vertically based on relational resources. For instance, the schema resource is at the bottom of this hierarchy, and the endpoint resource is at the top. For a more user-friendly layout of this panel, double-click a row to minimize the client panel to three rows. Conversely, clicking on the TabPage maximizes the panel to show more rows.

For XML-formatted resources, the tool embeds the XmlNotepad 2007 assembly, which allows deriving from its public XmlNotepad.FormMain class for our purposes. The following screen snippet shows a user control for Add Binding, where a customBinding can be dragged and dropped or created interactively:

Speaking of third-party user controls that helped me build this tool, there is one more: the PopupControl. Thanks to this CodeProject assembly, I used the popup control on the combobox to select a specific resource (such as a binding, schema, etc.) in a user-friendly manner.

The Microsoft Management Console 3.0 (MMC 3.0) is a great managed framework for the Windows environment. Compared to version 2.0 (see my old article Remoting Management Console, written in 2003 for managing remoting objects and their hosting), version 3.0 brings qualitative changes for easy development and extensibility. Of course, there is a challenge for the next version, for example, a Pub/Sub notification system with an event-driven architecture, including the broadcasting scenario. Anyway, thanks for this great MMC 3.0 boilerplate, which increased my productivity.

OK, let's make a "Hello World" example to show the capabilities of the tool. This example shows creating a compound (virtual) contract based on the imported metadata from WS-Eventing and Amazon.

Import Contract from URL

Follow these steps:

- Select Contracts on the left panel.

- Select Import Contracts Action on the right panel.

- Insert URL for importing contract. For example: http://schemas.xmlsoap.org/ws/2004/08/eventing/eventing.wsdl

- Click on the Get button. Note, that binding for this connectivity is standard, but it can be changed based on the binding selection (not supported in this version).

You should see contracts, operations. and messages/header in the central panel:

- Select a Contract to import into the Repository, for instance, SubscriptionManager.

- Select Operations for the selected Contract, say, GetStatusOp.

- Click the Import button to import the schemas, messages/headers, and operations for the selected contract.

- Click the Cancel button to clean up the user control.

- Type a new URL, for instance: http://webservices.amazon.com/AWSECommerceService/AWSECommerceService.wsdl.

- Select a Contract (there is only one).

- Select Operations, for instance: CartAdd, CartClear, CartCreate, CartGet, and CartModify.

- Click the Import button. When the metadata has been imported, the Exist column is marked.
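Behind the Get step, the tool has to read the WSDL and find its contracts and their operations. The following is a minimal sketch of that idea, not the tool's actual importer. It assumes the WSDL 1.1 document has already been downloaded into an XDocument (the download is not shown), treats each wsdl:portType as a contract and its wsdl:operation children as operations. WsdlBrowser and ListContracts are hypothetical names used only for illustration.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper (not part of the solution): lists the contracts
// (WSDL 1.1 portTypes) of a WSDL document with their operation names.
public static class WsdlBrowser
{
    static readonly XNamespace Wsdl = "http://schemas.xmlsoap.org/wsdl/";

    // Returns portType name -> operation names, in document order.
    public static Dictionary<string, List<string>> ListContracts(XDocument wsdl)
    {
        return wsdl.Root
            .Elements(Wsdl + "portType")
            .ToDictionary(
                pt => (string)pt.Attribute("name"),
                pt => pt.Elements(Wsdl + "operation")
                        .Select(op => (string)op.Attribute("name"))
                        .ToList());
    }
}
```

Messages and headers could be located in the same way by following the message references of each operation's wsdl:input and wsdl:output.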

Now we should have two contracts in the Repository. Each resource can be examined individually in its own control; be sure to refresh the control after changes. Resources are validated by the Compile action, and any errors pop up in a modal dialog with the error details.
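A minimal sketch of the compile-and-report idea for schemas, using the standard XmlSchemaSet class. Attaching a ValidationEventHandler makes Compile() report problems through the handler instead of throwing, so they can be collected and shown to the user, as the tool does in its popup dialog. SchemaCompiler is a hypothetical name, and this is not the tool's actual compile code.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Xml;
using System.Xml.Schema;

// Hypothetical helper: compiles XSD texts and returns the reported problems
// (an empty list means the schemas compiled cleanly).
public static class SchemaCompiler
{
    public static List<string> Compile(params string[] schemaTexts)
    {
        var errors = new List<string>();
        var set = new XmlSchemaSet();

        // With a handler attached, errors and warnings are reported here
        // instead of being thrown as XmlSchemaException.
        set.ValidationEventHandler += (sender, e) =>
            errors.Add(e.Severity + ": " + e.Message);

        foreach (string text in schemaTexts)
        {
            using (var reader = XmlReader.Create(new StringReader(text)))
            {
                // null: take the target namespace from the schema itself.
                set.Add(null, reader);
            }
        }

        // Compile() resolves type and element references across the set,
        // e.g. it reports a reference to an undeclared type.
        set.Compile();
        return errors;
    }
}
```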

Add New Contract (Compound)

The Compound (or Virtual) Contract is managed by the tool based on the metadata stored in the Repository. The process is very simple: populate the contract description properties and select the operations. Continuing the example above, the following screen snippet should be displayed after clicking the Compile button:

If the metadata compiles, the Finish button is enabled; otherwise, we have to fix the problem described in the error popup dialog and compile again.

OK, so far so good. We have our new contract named VirtualContract. This contract has been composed from two different external metadata sources (WS-Eventing and Amazon).
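Composing a virtual contract boils down to picking operations from contracts already in the Repository and checking that the result is consistent. The sketch below assumes a hypothetical in-memory model: imported contracts are a dictionary from contract name to operation names, and OperationRef and VirtualContractBuilder are illustrative names, not types from the solution. It rejects unknown operations and duplicate operation names, which is one plausible check a compile step could make.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model: an operation reference remembers which imported
// contract it comes from.
public class OperationRef
{
    public string Contract { get; set; }
    public string Name { get; set; }
}

public static class VirtualContractBuilder
{
    // imported: contract name -> operation names already in the Repository.
    // Returns the selected operations, or throws if a selection is unknown
    // or an operation name would appear twice in the virtual contract.
    public static List<OperationRef> Compose(
        IDictionary<string, List<string>> imported,
        IEnumerable<OperationRef> selection)
    {
        var result = new List<OperationRef>();
        var names = new HashSet<string>();
        foreach (OperationRef op in selection)
        {
            List<string> ops;
            if (!imported.TryGetValue(op.Contract, out ops) || !ops.Contains(op.Name))
                throw new ArgumentException("Unknown operation: " + op.Contract + "." + op.Name);
            if (!names.Add(op.Name))
                throw new ArgumentException("Duplicate operation name: " + op.Name);
            result.Add(op);
        }
        return result;
    }
}
```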

Now, we can assign this contract to the endpoint and export its metadata. The following steps show this scenario.

Export Endpoint

The exported endpoint is the final resource in this tool. Exporting creates the endpoint metadata and stores it in the repository for future referencing and publishing. As I mentioned earlier, a service with a generic contract (Action="*") doesn't generate metadata at runtime, and generating it dynamically for an arbitrary contract would be hard.

Back to our tool: after selecting the New Endpoint control and our new VirtualContract with its operations, we see the following screen:

The Export and (of course) Save buttons are disabled because the Address and Binding values are missing. Let's type a unique endpoint address and then click the Binding combobox. The popup control shows all the bindings registered in the Repository. The following screen snippet shows this action:

After that, the Export button is enabled, ready to export this endpoint. When compilation succeeds, the metadata is shown in the Metadata Preview tab and the Save button is enabled to store it in the repository.

The following portion of the screen snippet shows this scenario. You can see more tab pages in this control; for example, Generated Contract Types shows the source code of all the types and service contract(s). Note that the Policy tab is not implemented in this article.
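An exported endpoint ties together the address, binding, and contract. The sketch below shows, under simplifying assumptions, where each of them lands in a WSDL 1.1 document built with LINQ to XML: the contract becomes a portType, the binding references that portType, and the address sits on a port of a service. Types, messages, policy, and SOAP operation details are deliberately omitted, so the result is a skeleton, not the metadata the tool actually exports. WsdlSkeleton, Build, and the service/port naming scheme are hypothetical.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper: builds a WSDL 1.1 skeleton linking contract,
// binding, and address. Not a complete, importable WSDL document.
public static class WsdlSkeleton
{
    static readonly XNamespace Wsdl = "http://schemas.xmlsoap.org/wsdl/";
    static readonly XNamespace Soap12 = "http://schemas.xmlsoap.org/wsdl/soap12/";

    public static XDocument Build(string targetNs, string contract,
        IEnumerable<string> operations, string bindingName, string address)
    {
        return new XDocument(
            new XElement(Wsdl + "definitions",
                new XAttribute("targetNamespace", targetNs),
                new XAttribute(XNamespace.Xmlns + "tns", targetNs),
                // Contract: one portType listing the operation names.
                new XElement(Wsdl + "portType", new XAttribute("name", contract),
                    operations.Select(op => new XElement(Wsdl + "operation",
                        new XAttribute("name", op)))),
                // Binding: refers to the portType by qualified name.
                new XElement(Wsdl + "binding",
                    new XAttribute("name", bindingName),
                    new XAttribute("type", "tns:" + contract)),
                // Address: placed on a port that refers to the binding.
                new XElement(Wsdl + "service",
                    new XAttribute("name", contract + "Service"),
                    new XElement(Wsdl + "port",
                        new XAttribute("name", bindingName + "Port"),
                        new XAttribute("binding", "tns:" + bindingName),
                        new XElement(Soap12 + "address",
                            new XAttribute("location", address))))));
    }
}
```

The chain of name references (port to binding, binding to portType) is what lets a client tool such as svcutil.exe find the contract and the target address from a single document.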

Now we can store the endpoint metadata in the repository under a unique key, which consists of the Name and Topic properties. Clicking the Save button stores the WSDL resource in the repository, and the following feedback screen appears on the Metadata Test tab page:

If the Repository service (hosted in a Windows NT service named LocalRepositoryService) is installed and started, we can run the svcutil.exe test by clicking the Run button.

Note that other optional command arguments can be added to svcutil.exe, or you can just type /? for more help.

One more thing about serialization. The default serializer is the DataContractSerializer. In some cases, we need to use the XmlSerializer, which is why there is a combobox selection. With the AutoSerializer option, the compiling process automatically selects the best serializer. To process raw XML in the contract operation(s), check the XmlFormat checkbox.

The following code snippet shows the logic for selecting the serializer type. Note that the XmlSerializerImport class used in the snippet is implemented in the solution:

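// set: the compiled XmlSchemaSet; code: the resulting CodeCompileUnit.
// XsdDataContractImporter and ImportOptions live in System.Runtime.Serialization.
XsdDataContractImporter importer = new XsdDataContractImporter()
{
    Options = new ImportOptions()
    {
        GenerateSerializable = true,
        EnableDataBinding = false,
        GenerateInternal = false,
        ImportXmlType = false
    }
};

if (serializerOption != null &&
    serializerOption.Serializer == SerializerTypes.DataContractSerializer)
{
    // DataContractSerializer explicitly requested: also import schema types
    // that do not conform to the data contract schema.
    importer.Options.ImportXmlType = true;
    importer.Import(set);
    code = importer.CodeCompileUnit;
}
else if (serializerOption != null &&
    serializerOption.Serializer == SerializerTypes.XmlSerializer)
{
    // XmlSerializer explicitly requested: use the solution's own importer.
    XmlSerializerImport importer2 = new XmlSerializerImport();
    importer2.Import(set);
    code = importer2.CodeCompileUnit;
}
else if (importer.CanImport(set))
{
    // Auto mode: prefer the DataContractSerializer when all schemas fit it.
    importer.Import(set);
    code = importer.CodeCompileUnit;
}
else
{
    // Auto mode fallback: the schemas require the XmlSerializer.
    XmlSerializerImport importer2 = new XmlSerializerImport();
    importer2.Import(set);
    code = importer2.CodeCompileUnit;
}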

Modifying an Imported Contract

An imported contract can be modified by adding more operations or changing its properties. The following screen snippet shows the Modify Contract action, where the imported contract Test2 version 1.0.0.0 is modified by adding one more operation, CartAdd, from another imported contract:

After compiling this new version and clicking the Finish button, the contract Test2 version 1.0.0.1 is stored in the repository. We can export the endpoint for this contract and store it for future discovery by the client or by the Virtual Service (the bootstrapping process).

The Virtual Service driven by the metadata projects this contract into the workflow service, as shown in the following picture:

This simplified XOML virtual service has four operation branches. The first branch processes our additional operation CartAdd, which is not part of the physical Test2 contract. The second branch basically routes the operations of the original contract to the physical endpoint, the third publishes metadata via WS-Transfer, and the last handles unsupported operations.
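The branch selection can be pictured as a dispatch on the SOAP action of the incoming message. The sketch below is a hypothetical illustration of the four branches, not the XOML itself. Only the WS-Transfer Get action URI is a real constant; the sets of added and physical actions (for example, the CartAdd action and the original Test2 actions) are supplied by the caller. The checks run in the same order as the branches described above.

```csharp
using System.Collections.Generic;

// Hypothetical names for the four branches of the virtual service.
public enum Branch { AddedOperation, RouteToPhysical, PublishMetadata, Unsupported }

public static class VirtualServiceRouter
{
    // WS-Transfer (2004/09) Get request action, used for metadata retrieval.
    public const string TransferGet = "http://schemas.xmlsoap.org/ws/2004/09/transfer/Get";

    public static Branch Select(string action,
        ICollection<string> addedActions, ICollection<string> physicalActions)
    {
        if (addedActions.Contains(action))
            return Branch.AddedOperation;   // e.g. CartAdd, handled by the virtual service
        if (physicalActions.Contains(action))
            return Branch.RouteToPhysical;  // original Test2 operations, forwarded
        if (action == TransferGet)
            return Branch.PublishMetadata;  // serve the stored WSDL
        return Branch.Unsupported;          // everything else
    }
}
```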

Basically, the example above shows service mediation in front of the real physical endpoint: based on the metadata, the virtual service extends a real, unmanageable service with additional business logic. Note that the client never sees the real endpoint; in fact, the client doesn't care about its physical location or existence.

One more example shows how to add a new message to the repository.

Add New Message

As you know, importing a contract also imports its schemas, messages, and operations: all the resources are implicitly extracted from the imported metadata into the repository. This is the recommended approach, and it also lets us import contracts from an assembly; the contracts can be created in a contract assembly in Visual Studio and then imported into the repository.

However, the tool can also create a new message (body and headers) explicitly via the Add New Message action. The following screen snippet shows this action:

To create a new message, a schema and a specific type within it have to be selected (see the picture above for the message body and header). This implementation offers no way to type in the message body part by part; in all cases, we need a message contract element, such as a request/response, which is selected by its schema type. Headers are more flexible: besides being imported from a schema, a header can also be typed in manually. When we are ready, clicking the Finish button compiles the message and stores its metadata in the repository for future composition.
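Since the message body has to be an element selected from a schema, a natural check is that the selected name is a global element of the compiled schema set. The following is a minimal sketch with the standard XmlSchemaSet, assuming the schema text is at hand; MessageBodyPicker and FindBodyElement are hypothetical names, not part of the solution.

```csharp
using System.IO;
using System.Xml;
using System.Xml.Schema;

// Hypothetical helper: resolves a message body element by qualified name.
public static class MessageBodyPicker
{
    // Returns the global element {ns}name, or null if the schema has none.
    public static XmlSchemaElement FindBodyElement(string schemaText, string ns, string name)
    {
        var set = new XmlSchemaSet();
        using (var reader = XmlReader.Create(new StringReader(schemaText)))
        {
            set.Add(null, reader);
        }
        // GlobalElements is populated only after compilation.
        set.Compile();
        // Local (nested) elements are not global, so they are not found here.
        return set.GlobalElements[new XmlQualifiedName(name, ns)] as XmlSchemaElement;
    }
}
```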

Summary

The sections above described a few features of esbLocalRepository and gave an overview of how to create a Virtual Contract with the tool. Such a contract is composed of resources such as operations, messages, schemas, types, etc. stored in the Repository. Therefore, the Repository can also be called a Knowledge Base of the Logical Connectivity and Business processing. The Repository has to be educated either implicitly, from existing contracts, or explicitly, in a contract-first fashion.

The following picture shows the position of the Contract Model in the Manageable Service:

As you can see, behind the Contract Model is an Application Model driven by XOML. This is where a virtual contract is projected based on the business model, including service and message mediation such as changing the message exchange pattern.

That's all for the description; now let's look at some uses of the metadata from the Repository.

The primary goal of the Contract Model is to create the metadata for the virtual service in a Model-First fashion. As I mentioned earlier, a service with a generic contract (Action="*") doesn't publish metadata, so we need to provide the metadata in advance. The Contract Model is best used for a Virtual Service together with an Application Model; unfortunately, that is outside the scope of this article.

However, we can prove this concept in a small test using Visual Studio 2008 on the client side and tiny self-hosted generic services on the server side. The following code snippet shows their contracts:

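using System.ServiceModel;
using System.ServiceModel.Channels;

[ServiceContract(Namespace = "urn:rkiss.esb/2008/04")]
public interface IGenericContract
{
    // Action="*": this operation receives every incoming message, whatever its action.
    // ReplyAction="*": the reply's action is taken from the returned Message.
    [OperationContract(Action = "*", ReplyAction = "*")]
    Message ProcessMessage(Message message);
}

[ServiceContract(Namespace = "urn:rkiss.esb/2008/04")]
public interface IGenericOneWayContract
{
    // One-way variant: accepts any action and sends no response message.
    [OperationContract(Action = "*", IsOneWay = true)]
    void ProcessMessage(Message message);
}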

The service has only one piece of business logic: dumping the full message to the console.

Client

The Contract Model represented in the Repository can be consumed via a well-known service endpoint. In this article, that endpoint is configured in the config file as net.pipe://localhost/repository/mex? followed by a simple query on the name of the metadata.

Let's create a Console Application client using WCF. Because this client consumes a service, a proxy must be created. VS2008 has a built-in Add Service Reference feature for creating a proxy transparently.

The following screen snippet shows the utility dialog for importing metadata from the service endpoint:

There is nothing special on the client side: the developer knows the service endpoint where the metadata can be obtained, and VS2008 embeds the source code and all the types into the project for consuming the service via a proxy. Note that the proxy has enough information about the message exchange pattern between the service and the client, including the actual target endpoint address.

Once we have a proxy in the source code, the business layer can begin consuming the service via this proxy in a fully transparent manner like any other object located in the AppDomain.

That's all on the client side. To run the client, we need to launch the target service. In this example, it is an empty service that just shows the received SOAP message. Note that the service is very simple and works for one-way operations only; there is no response message back to the caller.

The following screen snippet shows an example of the client calling the RenewOp operation from WS-Eventing.

The LocalRepositoryConsole solution has been built with the following five projects based on the three layers:

The core of the solution is the LocalRepositoryConsole project, which contains the implementation of the MMC tool for the Contract Model. The solution was developed with Visual Studio 2008 SP1, targeting .NET Framework 3.5 SP1, and uses several technologies (LINQ to SQL, MMC 3.0, and .NetFX 3.5). A third-party folder holds copies of assemblies such as XmlNotepad 2007 and PopupControl. For test purposes, the solution uses the MS svcutil.exe program from its standard installation path; modify the path to match your settings, if necessary.

Since the solution requires an installation process with SQL, MMC, etc., the following steps will help you deploy the solution on your box.

- Download and unzip the included source into your folder.

- Create a local database named LocalRepository in SQLEXPRESS; the solution uses the following connection string:

connectionString = "Data Source=localhost\sqlexpress;Initial Catalog=LocalRepository;Integrated Security=True"

- Add InstallUtil.exe to VS2008 as an external tool (Tools/External Tools) named installutil, for installing and uninstalling assemblies. Use the following command and arguments:

Command: C:\Windows\Microsoft.NET\Framework\v2.0.50727\InstallUtil.exe

Arguments: $(BinDir)$(TargetName)$(TargetExt)

- Open the solution by using VS2008.

- In the Server Explorer, add a database connection to the LocalRepository database.

- Compile the solution.

- In LocalRepositoryConsole, open the file CreateLocalRepository.sql and click on Execute SQL. This script will create all the tables and schemas for our repository.

- Open the file LoadingLocalRepository.sql and execute the script. It preloads metadata, such as the standard bindings, into the repository.

- Set LocalRepositoryWindowsService as the StartUp Project. Now click installutil under External Tools to install this Windows NT service. Note that the service must be started manually: open Computer Management, go to Services, find the service named LocalRepositoryService, and click Start.

- Set LocalRepositoryConsole as the StartUp Project, go to External Tools, and click installutil to install the snap-in assembly.

- OK, now it's time for MMC. Open the Windows Vista Start menu and type mmc. You should see an empty Console Root in MMC 3.0.

- Select File/Add or Remove Snap-ins.

- Add the esbLocalRepository snap-in.

- Now the Contract Model tool is ready to be used. Be sure to save the console before you close it.

- You can explore your repository and work with the schemas, messages, etc. After this evaluation period, the repository can be purged with the ResetLocalRepository script.

Note that, as I mentioned earlier, this implementation is not a production version; it is an initial version that demonstrates the concept of the Contract Model for a Virtual Service. Many features are missing, such as deleting constrained resources, better merging of schemas within the same service, schema comparison, policy, compounding endpoints, etc.

The logical connectivity model is based on the Contract Model metadata, which describes service endpoints. From the modeling point of view, this model is logically centralized in the Repository and physically decentralized across the host processes. Current hardware technology lets us deploy applications on virtual guest machines of a physical host system. For instance, we can create clusters of virtual servers for the front end, middle tier, and back-end databases, and all these virtual servers can be hosted on one physical server with strong hardware resources. As you can see, the installation of these servers can be part of the modeling, too. The business processes are virtually decentralized and logically centralized using the Contract Model. This article described the Contract Model, which is a very small part of the modeling challenge. The next challenge is creating an Application Model that describes the hosting of the VirtualService, its activation, mediation, and business processing (using a XAML-activated WorkflowService).

We will hear more details about the modeling strategy at the Microsoft PDC 2008 conference, where the Oslo project and its technologies will be introduced. See you there.

References: