Introduction

This article describes the internals of C# volatile fields. I've seen a lot of discussions on the web about volatile fields, so I did some research of my own in this area, and here are some thoughts on it.

Volatile fields and memory barriers: A look inside

The two main purposes of C# volatile fields are:

- Introduce memory barriers for all access operations to these fields. To improve performance, CPUs keep frequently accessed data in the CPU cache. In multi-threaded applications, this can cause problems. For instance, imagine one thread that constantly reads a boolean field (the read thread) and another that updates it (the write thread). If the OS runs these two threads on different CPUs, the write thread may change the value of the field in the CPU1 cache while the read thread keeps reading the value from the CPU2 cache. In other words, the read thread will not see the change made by the write thread until the stale copy in the CPU2 cache is invalidated. The situation can be even worse if both threads update the value.
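The read-thread/write-thread scenario can be sketched in C# as follows (C# 9 top-level statements). The class name `FlagDemo`, the 100 ms delay and the poll counter are illustrative assumptions, not part of any API. The flag is declared `volatile`, so the polling loop re-reads it on every iteration and exits once the main thread, acting as the write thread, sets it.

```csharp
using System;
using System.Threading;

// Run the scenario once and report how many times the read thread polled.
long polls = FlagDemo.Run();
Console.WriteLine($"Read thread stopped after {polls} polls.");

// Hypothetical class for illustration: one thread polls a boolean field,
// another thread updates it.
class FlagDemo
{
    // Declared volatile, so each iteration of the read thread performs a
    // fresh read of the field instead of reusing a value read earlier.
    private static volatile bool _stop;

    public static long Run()
    {
        _stop = false;
        long polls = 0;

        // Read thread: keeps polling the flag until it observes the update.
        var reader = new Thread(() =>
        {
            while (!_stop)
            {
                polls++;
            }
        });

        reader.Start();
        Thread.Sleep(100);   // let the read thread spin for a while

        // Write thread (here, the main thread) publishes the update.
        _stop = true;

        reader.Join();       // returns once the read thread has seen _stop == true
        return polls;
    }
}
```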

Volatile fields introduce memory barriers. A read of a volatile field has acquire semantics, meaning no later memory access can be moved before it. A write has release semantics, meaning no earlier memory access can be moved after it. The JIT compiler also may not keep the field's value in a register between reads. This does not mean that the CPU bypasses its cache. It means that reads and writes become visible to other processors in a predictable order.

Nowadays, CPU architectures such as x86 and x64 have cache coherency: any change in the cache of one processor is propagated to the caches of the other processors. Their ordinary loads and stores also already provide acquire and release ordering. As a result, the JIT compiler for the x86 and x64 platforms generates essentially the same machine code for volatile and non-volatile fields, except for the reordering restrictions described in the second item below. Multi-core CPUs usually have several levels of cache. The first level is private to each core, while a later level (L2 or L3) is typically shared between cores. Architectures with a weak memory model, such as Itanium, let the CPU reorder loads and stores much more freely. On those architectures, the volatile keyword and memory barriers play a significant role in the design of multi-threaded applications. Therefore, I'd recommend always using volatile fields or memory barriers, even on x86 and x64 CPUs. Otherwise, you tie your application to a particular CPU architecture.
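The ordering that volatile enforces can be sketched with a hypothetical `Publisher` class (the names and the value 42 are illustrative). The write thread stores an ordinary field and then sets a volatile flag. The read thread waits for the flag and only then reads the data. A volatile write has release semantics, so the earlier write to `_data` cannot move after it. A volatile read has acquire semantics, so the later read of `_data` cannot move before it. A reader that sees the flag as `true` therefore also sees the data.

```csharp
using System;
using System.Threading;

int observed = Publisher.Run();
Console.WriteLine($"Reader observed {observed}");

// Hypothetical class illustrating the publish/consume pattern.
class Publisher
{
    private static int _data;             // ordinary, non-volatile field
    private static volatile bool _ready;  // volatile flag that "publishes" _data

    public static int Run()
    {
        _data = 0;
        _ready = false;
        int observed = -1;

        var reader = new Thread(() =>
        {
            // Volatile read (acquire): the read of _data below cannot move above it.
            while (!_ready)
            {
                Thread.SpinWait(1);
            }
            // _ready was read as true, so the write of 42 to _data is visible here.
            observed = _data;
        });
        reader.Start();

        _data = 42;      // 1. write the payload
        _ready = true;   // 2. volatile write (release): step 1 cannot move after it

        reader.Join();
        return observed;
    }
}
```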

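Note: you can also introduce memory barriers without the volatile keyword. Thread.VolatileRead/Thread.VolatileWrite (or, since .NET Framework 4.5, Volatile.Read/Volatile.Write) can replace the volatile keyword for individual accesses. Thread.MemoryBarrier emits a full fence. The C# lock statement (Monitor.Enter/Monitor.Exit) also acts as a barrier.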
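For comparison, here is a sketch of the same hand-off implemented three ways, none of which puts the volatile keyword on the flag. The class `BarrierVariants` and its helper methods are hypothetical. `Volatile.Read`/`Volatile.Write` (class `System.Threading.Volatile`) are used instead of `Thread.VolatileRead`/`Thread.VolatileWrite` because, unlike the `Thread` methods, they have a `bool` overload.

```csharp
using System;
using System.Threading;

Console.WriteLine(BarrierVariants.RunWithVolatileClass());
Console.WriteLine(BarrierVariants.RunWithMemoryBarrier());
Console.WriteLine(BarrierVariants.RunWithLock());

// Hypothetical class: the same publish/consume hand-off implemented three
// ways, none of which uses the volatile keyword on the flag.
static class BarrierVariants
{
    private static int _data;
    private static bool _ready;                  // plain field, not volatile
    private static readonly object _gate = new object();

    // 1. Volatile.Read / Volatile.Write: acquire/release on individual accesses.
    public static int RunWithVolatileClass()
    {
        Reset();
        return RunHandOff(
            publish: () => { _data = 42; Volatile.Write(ref _ready, true); },
            isReady: () => Volatile.Read(ref _ready));
    }

    // 2. Explicit full fences with Thread.MemoryBarrier.
    public static int RunWithMemoryBarrier()
    {
        Reset();
        return RunHandOff(
            publish: () => { _data = 42; Thread.MemoryBarrier(); _ready = true; },
            isReady: () => { bool r = _ready; Thread.MemoryBarrier(); return r; });
    }

    // 3. The lock statement: Monitor.Enter/Monitor.Exit also act as barriers.
    public static int RunWithLock()
    {
        Reset();
        return RunHandOff(
            publish: () => { lock (_gate) { _data = 42; _ready = true; } },
            isReady: () => { lock (_gate) { return _ready; } });
    }

    private static void Reset() { _data = 0; _ready = false; }

    // Starts a reader that waits until isReady() returns true, then reads _data.
    private static int RunHandOff(Action publish, Func<bool> isReady)
    {
        int observed = -1;
        var reader = new Thread(() =>
        {
            while (!isReady()) Thread.SpinWait(1);
            observed = _data;
        });
        reader.Start();
        publish();
        reader.Join();
        return observed;
    }
}
```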

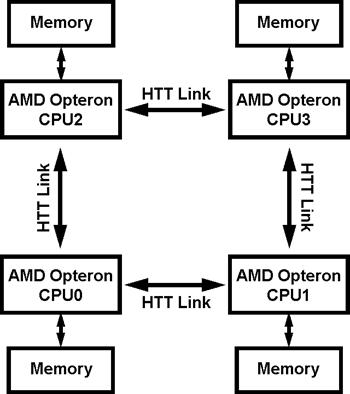

The image shows two CPU architectures: Itanium and AMD's Direct Connect architecture. In AMD's Direct Connect architecture, all processors are connected with each other, which keeps their memory views coherent. In the Itanium architecture, the CPUs are not connected with each other directly; they communicate with RAM through the system bus.

- Prevent instruction reordering. For instance, consider the following loop:

```csharp
while (true)
{
    if (myField)
    {
        // react to the change made by another thread
    }
}
```

If myField is not volatile, the JIT compiler may, for performance reasons, reorder the instructions as follows, so that myField is read only once:

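```csharp
// myField is read only once, before the loop starts, so a change made
// later by another thread is never observed by the running loop.
if (myField)
{
    while (true)
    {
        // react to the change made by another thread
    }
}
else
{
    while (true)
    {
    }
}
```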

If myField is changed from a separate thread, this makes a significant difference. After the transformation, myField is read only once, so the running loop may never observe the update. Declaring myField as volatile prevents this transformation. The lock statement (Monitor.Enter/Monitor.Exit) is usually recommended for such cases, but if the block only reads or writes a single field, a volatile field typically performs significantly better than the Monitor class.
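To get a feel for the cost difference, here is a rough single-threaded micro-benchmark sketch. The class `FieldAccessCost` and the iteration count are illustrative assumptions. It reads one `bool` field in a tight loop, first through a volatile field and then inside a `lock` statement, and prints the elapsed times. Timings depend on the hardware, runtime and JIT, so no expected output is given. An uncontended lock is also the best case for `Monitor`. For careful measurements, a dedicated harness such as BenchmarkDotNet is more appropriate.

```csharp
using System;
using System.Diagnostics;

const int Iterations = 10_000_000;
var (volatileCount, volatileMs) = FieldAccessCost.MeasureVolatile(Iterations);
var (lockedCount, lockedMs) = FieldAccessCost.MeasureLock(Iterations);
Console.WriteLine($"volatile: {volatileCount} reads in {volatileMs} ms");
Console.WriteLine($"lock:     {lockedCount} reads in {lockedMs} ms");

// Hypothetical class for illustration. Each method reads a single bool field
// in a tight loop, once through a volatile field and once under a lock.
static class FieldAccessCost
{
    private static volatile bool _volatileFlag = true;
    private static bool _lockedFlag = true;
    private static readonly object _gate = new object();

    public static (long count, long ms) MeasureVolatile(int iterations)
    {
        long count = 0;
        var sw = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
        {
            if (_volatileFlag) count++;          // volatile read, no lock
        }
        sw.Stop();
        return (count, sw.ElapsedMilliseconds);
    }

    public static (long count, long ms) MeasureLock(int iterations)
    {
        long count = 0;
        var sw = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
        {
            lock (_gate)                         // Monitor.Enter ... Monitor.Exit
            {
                if (_lockedFlag) count++;
            }
        }
        sw.Stop();
        return (count, sw.ElapsedMilliseconds);
    }
}
```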

Special thanks

I would like to thank Stefan Repas from Microsoft for providing some helpful information on this topic.