Introduction

Every now and then, I am driven nuts by websites that make me click through dozens of pages to do relatively simple things I need to do regularly, or that insist I view their content interactively on their pages rather than letting me select some content and download it for later viewing.

An example of this is YouTube, and the number of downloaders available on the Internet is testament to the demand for the ability to grab the videos so they can be watched offline either on the PC or on iPods and other more convenient devices.

While it would be simple to just create such a program and upload it here, I thought it would be more useful if I walked you through the process of creating such a program, as it really is a combination of activities, only one of which is coding.

Before I get into this, I should warn you that the program accompanying this article will inevitably work as is for only a short time. The code depends on YouTube continuing to work exactly as it does now (Jan 2009). This is, of course, unrealistic, and I don't intend to keep revisiting and updating this article to keep it working. When YouTube changes, I leave it as an exercise for the reader to use the methods below to work out what changes are needed to get it working again.

What the article does include that will remain useful is a number of utility functions and coding patterns for quickly implementing this sort of robot.

Preliminaries

Before we commence, I want to point out that I have written this article based on a machine that uses Internet Explorer. It is possible, even probable, that all of this can also be done with Opera, Firefox, etc., but as I don't use these browsers, I have not documented how you would use those tools instead of IE.

Step 1 - Capture A Sample

Before we open up our coding tools, we have some work to do with our browser. We need to reverse engineer how the web site in question interacts with our browser.

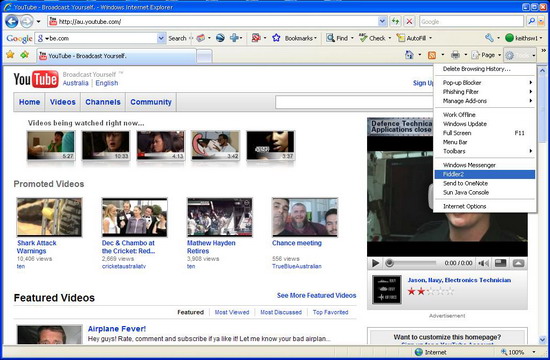

The tool I use for this is a free web debugging proxy named Fiddler. You will need to download and install this tool on your machine. For a full description of what it is and how it works, please see the tool's website. With IE, Fiddler will just work. With other browsers, you may need to spend some time reading the website to work out how to get it to work.

Our first step is to observe what happens when we watch a video on YouTube. To do this, we follow these steps ...

- Open up your browser.

- Clear the browser cache, which will make it easier to see what is going on. On IE 7, go to the Tools item on the command bar and select the Internet Options menu item. On the General tab, click the Delete button in the Browsing history section, and then click the Delete files button in the dialog box that appears.

- Start up Fiddler (in IE 7, you can find it under Tools/Fiddler2 in the command bar).

- Clear any capture data when Fiddler starts, by using the Edit/Remove/All Sessions menu item.

- Navigate to http://www.youtube.com/.

- Find a video you are interested in.

- Right-click the video thumbnail, select Copy Shortcut from the context menu, and save this shortcut in a text document. This URL is our starting URL.

- Watch the video in its entirety ... choosing a short video helps here.

- Stop Fiddler capturing data, using the File/Capture Traffic menu item.

- Select all the captured traffic using the Edit/Select All menu item.

- Save the traffic to a file using the File/Save/Session(s)/In ArchiveZip menu item for future reference.

Step 2 - Investigation

The first thing that can be observed by right-clicking on the video in the browser is that YouTube uses Adobe Flash to play videos.

Flash videos are typically either streamed or downloaded .FLV files. A quick look in the browser cache shows that we do indeed have a fairly large FLV file, which is our video. This is the file we want our tool to download.

Our next step is to go back to Fiddler and find the request which downloaded the .FLV file, and more specifically, take a close look at the URL. This is the URL we need to access:

http://v24.cache.googlevideo.com/get_video?origin=mia-v348.mia.youtube.com&video_id=zgDp4CW_ZI0&ip=124.171.9.247&region=255&signature=6C8F763CCEA43043AF5A30AC135E7CEE57FC45CC.535A50B0D535A581E22E6B7C6DA67C8FFB2DC3D5&sver=2&expire=1232543080&key=yt1&ipbits=0

Now, we go to the start of the Fiddler log and try to find our starting URL. This should be the same as the URL we found when we copied the shortcut behind the video thumbnail. This is our starting point. We just have to work out how to get from here to the .FLV file's URL.

http://au.youtube.com/watch?v=zgDp4CW_ZI0

Looking at the requests between the original shortcut and the FLV file, we see another interesting request.

http://au.youtube.com/get_video?video_id=zgDp4CW_ZI0&t=OEgsToPDskL4WlMPA0C8xypYnj1Q6IIE&el=detailpage&ps=

This is interesting because it seems to refer to the same video as the FLV request, but on a different server and with different (and simpler) parameters.

Before we go on, I need to introduce you to Fiddler's right hand panel. This panel allows you to inspect the web request made and the response received. If you click on the URL above and select the Inspectors tab (red circle), you can see the request data (pink circle) and the response data (green circle). Spend some time here familiarising yourself as we will be spending some time here in the next few steps.

Drawing your attention to the Raw tab in the response, we can see this request resulted in an HTTP/1.1 status code of 303 (See Other). This code means that the website has told your browser to look for the result at a different location. You can see this location 6 lines down, prefixed by Location:, and fortunately for us, it is that long, complicated target URL.

To test this, we can take the shorter URL:

http://au.youtube.com/get_video?video_id=zgDp4CW_ZI0&t=OEgsToPDskL4WlMPA0C8xypYnj1Q6IIE&el=detailpage&ps=

and put it into our browser address bar; a few seconds later, the file download box appears. A bit of "try it and see" experimenting with this URL further shows that it can be shortened to:

http://au.youtube.com/get_video?video_id=zgDp4CW_ZI0&t=OEgsToPDskL4WlMPA0C8xypYnj1Q6IIE

and it will still work fine. This simpler URL is now our new target.

Our next step is to work out how to get the components of this URL. Breaking it down, we have a number of elements:

- http://au.youtube.com/ - This is the server to contact. This appears to be the same as our original shortcut, so we should be able to get it from there.

- get_video? - This seems fairly static, so we can hardcode this.

- video_id=zgDp4CW_ZI0& - This was the v parameter in the original shortcut, so once again, we should be able to get it from there.

- t=OEgsToPDskL4WlMPA0C8xypYnj1Q6IIE - Last one, and what do you know, we are stuck.
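The first three elements can be recovered from the shortcut alone. As a minimal sketch, assuming the shortcut always has the form shown above, the System.Uri class can split it into its parts. The ShortcutParts class and its method names are hypothetical and are not part of the code attached to this article.

```csharp
using System;

static class ShortcutParts
{
    // Returns the scheme and host with a trailing slash,
    // e.g. "http://au.youtube.com/".
    public static string GetServer(Uri shortcut)
    {
        return shortcut.GetLeftPart(UriPartial.Authority) + "/";
    }

    // Returns the value of the named query-string parameter,
    // or null if the parameter is not present.
    public static string GetQueryValue(Uri shortcut, string name)
    {
        string query = shortcut.Query.TrimStart('?');
        foreach (string pair in query.Split('&'))
        {
            int eq = pair.IndexOf('=');
            if (eq > 0 && pair.Substring(0, eq) == name)
            {
                return Uri.UnescapeDataString(pair.Substring(eq + 1));
            }
        }
        return null;
    }
}
```

Calling GetServer and GetQueryValue(shortcut, "v") on the sample shortcut http://au.youtube.com/watch?v=zgDp4CW_ZI0 yields the server and video ID used in the target URL. Unlike a pattern anchored to the end of the string, this approach keeps working if the shortcut carries extra parameters after v.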

To find the source for our t parameter, we need to go back to Fiddler and look at the response to the original shortcut.

Looking closely at the raw response, we see it is an HTML file which we can search.

Fortunately, this string is in the file on the following line:

var fullscreenUrl = '/watch_fullscreen?fs=1&creator=Hughsnews&ad_channel_code=invideo_overlay_480x70_cat23%2Cafv_overlay&ad_host_tier=26386&vq=None&iv_storage_server=http%3A%2F%2Fwww.google.com%2Freviews%2Fy%2F&invideo=True&tk=T9FA4SUtLTJB11M7ykBfjJ6LZPH-uvK6bGXgUFekX58jdOAq2gMjZg%3D%3D&plid=AARg-MTEDBrh1UjAAAAC0QDIAQA&iv_module=http%3A%2F%2Fs.ytimg.com%2Fyt%2Fswf%2Fiv_module-vfl71609.swf&afv=True&ad_video_pub_id=ca-pub-6219811747049371&sdetail=p%253A%2F&sourceid=y&ad_host=ca-host-pub-5485660253720840&fmt_map=6%2F720000%2F7%2F0%2F0&hl=en&cust_p=jguoYeSIhbDqThmQSvK-wA&BASE_YT_URL=http%3A%2F%2Fau.youtube.com%2F&ad_module=http%3A%2F%2Fs.ytimg.com%2Fyt%2Fswf%2Fad-vfl73792.swf&video_id=zgDp4CW_ZI0&l=368&sk=piJP2atRgcLHGIk_GGpRyKlDkSN0urx0C&t=OEgsToPDskL4WlMPA0C8xypYnj1Q6IIE&title=Airplane Fever!';

So now, we have everything we need to build a robot. Our new robot needs to follow these steps:

- Accept the shortcut.

- Extract the server name and the v parameter.

- Retrieve the webpage the shortcut refers to.

- Search the returned page for the line containing our t parameter.

- Build the URL and request it.

- When we get the redirection, follow it.

- Save the FLV file returned.

The Code

The code attached to the article basically implements the robot steps identified above. I don't plan to discuss the code here, as it is in the source files and clearly documented.

Of more interest is the RobotUtility.cs file as it contains a set of small but useful functions which can be reused in any similar robot. The functions here are not exhaustive, and if you plan to build robots for other websites, I am sure you will want to extend it with other functions.

The functions and their use can be summarised as follows:

string GetPage(Uri url)

This function gets the text returned by a URL in a string.
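The body of GetPage is in the attached source rather than in this article. As a rough illustration only, a function with this signature could be written with System.Net.WebClient as below. The PageFetcher class name is hypothetical, and the actual RobotUtility implementation may differ. Note that recent .NET versions mark WebClient as obsolete in favour of HttpClient, so expect a compiler warning there.

```csharp
using System;
using System.Net;

static class PageFetcher
{
    // Hypothetical stand-in for GetPage: downloads the resource at
    // 'url' and returns its body as a string.
    public static string GetPage(Uri url)
    {
        using (WebClient client = new WebClient())
        {
            return client.DownloadString(url);
        }
    }
}
```

A call such as PageFetcher.GetPage(new Uri(shortcut)) would return the HTML of the watch page, ready to be searched for the t parameter.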

string ExtractValue(string tosearch, string regex)

This function is useful for extracting substrings from a string, but the regex string must follow two strict rules:

- It must contain a capture group named val.

- It must find what you are looking for in the first match.
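To make the two rules concrete, here is a minimal sketch of how such a function could be written with System.Text.RegularExpressions. The ValueExtractor class name is hypothetical, and returning an empty string when nothing matches is my assumption here; the attached source may handle that case differently.

```csharp
using System.Text.RegularExpressions;

static class ValueExtractor
{
    // Returns the text captured by the group named "val" in the first
    // match of 'regex' within 'tosearch'. Returns an empty string when
    // there is no match (an assumption made for this sketch).
    public static string ExtractValue(string tosearch, string regex)
    {
        Match match = Regex.Match(tosearch, regex);
        return match.Success ? match.Groups["val"].Value : string.Empty;
    }
}
```

For example, applying the pattern "&t=(?'val'.*?)&" to the fullscreenUrl line found during the investigation captures everything between the first "&t=" and the next "&", which is the t parameter.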

Uri GetRedirect(Uri url)

This function requests the URL, and returns the new URL that this one redirects to.
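One way to read the Location header of a 303 response, like the one seen in Fiddler, is to switch off automatic redirection on an HttpWebRequest. The following is a sketch under that assumption, not the attached implementation. The RedirectResolver class name is hypothetical, and returning the original URL when no Location header is present is a choice made for this example. Recent .NET versions flag WebRequest.Create as obsolete, so expect a compiler warning there.

```csharp
using System;
using System.Net;

static class RedirectResolver
{
    // Hypothetical stand-in for GetRedirect: requests 'url' without
    // following redirects and returns the address in the Location header.
    public static Uri GetRedirect(Uri url)
    {
        HttpWebRequest request = (HttpWebRequest)WebRequest.Create(url);
        request.AllowAutoRedirect = false; // we want to see the 3xx reply itself

        using (HttpWebResponse response = (HttpWebResponse)request.GetResponse())
        {
            string location = response.Headers["Location"];
            if (string.IsNullOrEmpty(location))
            {
                return url; // no redirect offered (assumption for this sketch)
            }
            // Resolves relative Location values against the requested URL.
            return new Uri(url, location);
        }
    }
}
```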

void GetFile(Uri url, string file)

This function requests the URL, and saves the resulting data in the named file.
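For completeness, a function with this signature can be sketched in a few lines with WebClient.DownloadFile. As with the other sketches, the FileFetcher class name is hypothetical and the attached source may do this differently, for example by streaming the response in chunks to report progress. WebClient is marked obsolete in recent .NET versions.

```csharp
using System;
using System.Net;

static class FileFetcher
{
    // Hypothetical stand-in for GetFile: downloads the resource at
    // 'url' and writes it to the path given by 'file'. The target
    // directory is assumed to exist already.
    public static void GetFile(Uri url, string file)
    {
        using (WebClient client = new WebClient())
        {
            client.DownloadFile(url, file);
        }
    }
}
```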

Putting it all Together

Using these functions, it is possible to implement the robot steps described above as follows:

string shortcut = tbURL.Text;

string server = RobotUtility.Utility.ExtractValue(shortcut, "^(?'val'.*?)watch");

string v = RobotUtility.Utility.ExtractValue(shortcut, "v=(?'val'.*?)$");

string page = RobotUtility.Utility.GetPage(new Uri(shortcut));

string t = RobotUtility.Utility.ExtractValue(page, "&t=(?'val'.*?)&");

string flvurl = server + "get_video?video_id=" + v + "&t=" + t;

Uri redirect = RobotUtility.Utility.GetRedirect(new Uri(flvurl));

string file = tbDestination.Text + "\\" + v + ".flv";

RobotUtility.Utility.GetFile(redirect, file);

Conclusion

When you need to do something in bulk, or you want to evade an overly restrictive website, a robot can save you huge amounts of time and frustration.