Introduction

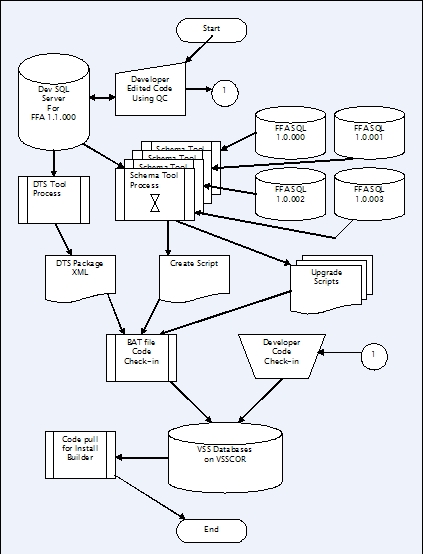

The challenge of developing code for any small or large development project that uses a SQL database, is that often the code and database schema change or get modified over the duration of the SDLC from what was previously delivered to the customer.

It is not always easy to ensure that the previously delivered schema stays in sync with the code unless rigorous project process controls are in place. I have worked on several projects, and every one had a different approach to ensuring that code and database schema stay in sync.

This tool which I call the SQL Schema Tool or SST, was created to assist in handling the problems of comparing or providing update scripts that work against the issue of synchronizing a customer database schema from a previous version when migrating to a new version.

One way that we can deliver schema changes for an existing database, is to create a new database with the updated schema and then use some ETL process to migrate data from the old database into the new one. However my experience with customers is that they are reluctant to have to swap out a production database for an entirely new one.

My approach to the problem using SST, was to provide a way to capture the delivered customer's schema as an XML snapshot that describes the schema. This snapshot of the customer's database schema can then be compared to the current development database schema without requiring active connections and provide an update SQL script to run against the customer database.

Current Features

- Schema XML snapshots, for portable SQL schema generation/compare without a database

- Schema generation – with selectable object types

- Schema compare – with selectable object types

- DTS package XML Snapshots, for creating portable DTS packages

- Two Command-line tools for the above listed functions. This should allow ant build or other automation tools to compare or generate schema updates automatically.

- Data compare – currently of individual tables only

- Data export – CVS and XLS, plan to add text file output for SQL

insert statements - Reports for data compare, Schema; tables, views, functions, and sprocs

- XML tree browser with XPath search of XML snapshots generated by the tools

- Run generated SQL against selected DB without switching to yet another tool

- Logging of all events, actions and errors - both SQL related and application type errors.

- Custom User Defined XSLT transformations can be automatically applied to the generated SQL Schema and Data XML output.

Background

I worked on the QueryCommander SQL Editor project at one point. Some of the design approaches used in SST come from my experiences with working in code base.

The SQL Schema Tool or SST started life as a command line project to get DTS packages out of the database as XML. Additional code was added later to get schema changes made against development databases that did not exist in the QA databases and create update scripts to run against QA databases during the continuous build process.

The schema tool was then turned into a common set of classes that could be called from either a command line or a Winform GUI application. I had decided to try and sell the software, but never seemed to generate much interest. Therefore, I am writing this article and giving my code away under the attached license as well as any license used by the libraries contained within the code.

Using the Code

The code was written and tested against Microsoft SQL Server 2000 and SQL Server 2005. I have separated the classes such that it should be an easy modification to add support for other vendor databases, such as MYSQL or DB2, Oracle; although the DTS Package classes would only work with SQL 2000.

Architecture of the schema module has been designed to deal with the database at the object level. This allows individual groups of objects to be selected for the compare or generate schema operations. It also allows the user of the application to narrow their focus to the exact objects of a database that are in flux, or changing often.

When you examine the output of the XML snapshot, you can see this approach by noting the XML nodes or elements are grouped by object type, and sorted by object name.

All principal output from the schema module is in the form of XML, that is in turn transformed into the appropriate output, by the use of XSLT and XML transformations.

The SQL Schema Tool is designed to consider a master or source database, which is the current or latest database schema for use in a project. The destination or target database is considered by SST to be the older schema, or the schema that needs updating.

Major Design Considerations

- Keep intact any data that exists in the target database

- Ability to make schema comparisons in a fully or partially disconnected mode by the use of saving a XML schema snapshot for the source database, target database or both

- Use XSLT to transform XML into appropriate objects

Shown below is a simplified sequence diagram of the SQLSchemaTool class.

The SQLSchemaTool class uses the SQLObjects namespace and the classes: Tables, Views, Sprocs, etc. that serialize the schema of those objects as XML. The code for the objects in the database makes use of Generics where appropriate.

public static string SerializeDB(

string SQLServer,

string DBName,

string UID,

string PWD,

string SQLfile,

bool Translate,

bool Primary,

object threaded,

byte objectsToSerialize,

string CustomXSLT,

string delimTableNames)

{

_threaded = threaded;

string _serverDB = SQLServer + ":" + DBName;

string outputFile = string.Format

(_OUTPUTFILE, SQLServer.Replace("\\", "_").Replace(":", "-"),

DBName.Replace("\\", "_").Replace(":", "-"));

try

{

SQLMethods.SQLConnections _connections;

if (UID != null && PWD != null)

{

_connections = new SQLMethods.SQLConnections

(SQLServer, DBName, UID, PWD, false);

}

else

{

_connections = new SQLMethods.SQLConnections(SQLServer, DBName);

}

if (_connections != null && _connections.Count > 0)

{

DataSet _ds = new DataSet("DataBase_Schema");

_ds.EnforceConstraints = false;

DataTable dt = _ds.Tables.Add("Database");

dt.Columns.Add("Name");

dt.Columns.Add("Date");

dt.Columns.Add("Time");

DataRow dr = dt.NewRow();

dr.ItemArray =

new object[] { DBName, DateTime.Now.ToShortDateString(),

DateTime.Now.ToShortTimeString() };

dt.Rows.Add(dr);

Sleep();

if ((objectsToSerialize & Convert.ToByte(_NodeType.DEFAULT)) ==

(int)_NodeType.DEFAULT)

{

SQLObjects.Defaults _defaults = new SQLObjects.Defaults(DBName);

_defaults.GetObject<sqlobjects.defaults>(_connections[0].sqlConnection);

_ds.Merge(_defaults);

}

Sleep();

if ((objectsToSerialize & Convert.ToByte(_NodeType.RULE)) ==

(int)_NodeType.RULE)

{

SQLObjects.Rules _rules = new SQLObjects.Rules(DBName);

_rules.GetObject<sqlobjects.rules>(_connections[0].sqlConnection);

_ds.Merge(_rules);

}

Sleep();

if ((objectsToSerialize & Convert.ToByte(_NodeType.UDDT)) ==

(int)_NodeType.UDDT)

{

SQLObjects.UDDTs _uddts = new SQLObjects.UDDTs(DBName);

_uddts.GetObject<sqlobjects.uddts>(_connections[0].sqlConnection);

_ds.Merge(_uddts);

}

Sleep();

if ((objectsToSerialize & Convert.ToByte(_NodeType.TABLE)) ==

(int)_NodeType.TABLE)

{

SQLObjects.Tables _tables = new SQLObjects.Tables(DBName);

if (!string.IsNullOrEmpty(delimTableNames))

{

_tables.GetObject<sqlobjects.tables>(_connections[0].sqlConnection,

Primary, delimTableNames);

}

else

{

_tables.GetObject<sqlobjects.tables>

(_connections[0].sqlConnection, Primary);

}

_ds.Merge(_tables);

}

Sleep();

if ((objectsToSerialize & Convert.ToByte(_NodeType.VIEW)) ==

(int)_NodeType.VIEW)

{

SQLObjects.Views _views = new SQLObjects.Views(DBName);

_views.GetObject<sqlobjects.views>(_connections[0].sqlConnection);

_ds.Merge(_views);

}

Sleep();

if ((objectsToSerialize & Convert.ToByte(_NodeType.SPROC)) ==

(int)_NodeType.SPROC)

{

SQLObjects.Sprocs _sprocs = new SQLObjects.Sprocs(DBName);

_sprocs.GetObject<sqlobjects.sprocs>(_connections[0].sqlConnection);

_ds.Merge(_sprocs);

}

Sleep();

if ((objectsToSerialize & Convert.ToByte(_NodeType.FUNCTION)) ==

(int)_NodeType.FUNCTION)

{

SQLObjects.Funcs _funcs = new SQLObjects.Funcs(DBName);

_funcs.GetObject<sqlobjects.funcs>(_connections[0].sqlConnection);

_ds.Merge(_funcs);

}

Sleep();

if ((objectsToSerialize & Convert.ToByte(_NodeType.TRIGGER)) ==

(int)_NodeType.TRIGGER)

{

SQLObjects.Triggers _triggers = new SQLObjects.Triggers(DBName);

_triggers.GetObject<sqlobjects.triggers>(_connections[0].sqlConnection);

_ds.Merge(_triggers);

}

Sleep();

if (File.Exists(outputFile)) File.Delete(outputFile);

XmlDataDocument xmlData = new XmlDataDocument(_ds);

XmlDocument xmlDoc = new XmlDocument();

xmlDoc.LoadXml(xmlData.OuterXml);

Sleep();

SortDependencies(_serverDB, VIEWPATH, VIEWDEP, ref xmlDoc);

SortDependencies(_serverDB, FUNCPATH, FUNCDEP, ref xmlDoc);

SortDependencies(_serverDB, SPROCPATH, SPROCDEP, ref xmlDoc);

foreach (Char c in Path.GetInvalidFileNameChars())

{

outputFile = outputFile.Replace(c, '_');

}

foreach (Char c in Path.GetInvalidPathChars())

{

outputFile = outputFile.Replace(c, '_');

}

xmlDoc.Save(outputFile);

GC.Collect();

if (Translate && outputFile != null && outputFile.Trim().Length > 0)

{

string createName = outputFile.ToLower().Replace(".xml", ".sql");

if (SQLfile != null && SQLfile.Length > 0)

{

createName = SQLfile.ToLower().Replace(".xml", ".sql");

}

if (!createName.EndsWith(".sql")) { createName += ".sql"; }

XsltHelper.SQLTransform(outputFile,

XsltHelper.SQLCREATEXSLT, createName);

outputFile += "," + createName;

logger.Info("\nSQL Create Schema has been saved to " +

createName + ".");

}

if (CustomXSLT != null && CustomXSLT.Trim().Length > 0)

{

FileInfo fi = new FileInfo(CustomXSLT);

File.WriteAllText("CustomOutput.XML",

XsltHelper.Transform(xmlDoc.OuterXml, fi));

logger.Info("\nThe Custom XSLT {0},

has been applied and saved as

CustomOutput.XML.", CustomXSLT);

}

}

}

catch (Exception ex)

{

if (ex is System.Data.SqlClient.SqlException)

{

logger.Error("\nSQL Error: {0}, DB Server {1}",

ex.Message, _serverDB);

}

else

{

logger.Error(ERRORFORMAT, ex.Message, ex.Source, ex.StackTrace);

}

}

return outputFile;

}

Once the objects of the database are serialized as XML, then the code can perform XSLT transformations against the XML to make SQL, or other output.

Additionally, if there are two such XML representations of databases, those serialized databases can be compared. The results of that comparison are output as XML which again can be transformed into the necessary SQL to update the target database.

Points of Interest

Here is a screen shot of the XML output from the tool:

Here is a screen shot of the SQL output from the tool after the XSLT transformation get applied:

Here is a screen shot of the HTML difference report created from the tool after the XSLT transformation get applied:

Solution Building

The build process uses the ILMerge to merge the project's DLLs together into a single assembly in the pre/post build commands. See Microsoft Research for the download of this tool. This makes the deployment installer much easier to design.

For the SQLSchemaToolGUI project, edit the pre and post build events which are accessed from the project properties.

There is an initial line for both pre and post build events that has:

del "$(TargetDir)merge.log"

That line should be changed to:

IF EXIST "$(TargetDir)merge.log" del "$(TargetDir)merge.log"

This is required because I first ran the merge.bat file from the commandline, which creates the merge.log file. So I never thought about the fact that the log file would not be there.

The log file is the output log of the .NET iLMerge tool, which the batch file is using to merge all the individual DLLs into the single SSTassemblies.dll file.

TODO

- The tools need lots of testing, I'm sure there are still bugs:)

- Improve SQL object dependency calculations and performance

- Design XSLT to generate base data access classes using .NET - similar to MyGeneration tool

- Create other database provider classes for MySql, DB2, and Oracle

- Enhance Data Compare functionality

Credits

- Weifen Luo - DockPanel Suite is designed to achieve docking capability for MDI forms. It can be used to develop Visual Studio .NET style applications.

- #develop - SharpDevelop is an open source IDE for the .NET platform. SST uses

ICSharpCode.TextEditor. - QueryCommander - The QueryCommander dev team for the ideas and approaches to work with SQL Server.

- NLog - NLog is a .NET logging library designed with simplicity and flexibility in mind.

- WIX - The Windows Installer XML (WiX) is a toolset that builds Windows installation packages from XML source code. The toolset supports a command line environment that developers may integrate into their build processes to build MSI and MSM setup packages.

- XML Diff - The Microsoft XML Diff Tool.

More Information

History

- January 2005 - Finished Command line tool

- June 2007 - Finished GUI version

- Sept 2007 - Started Beta tests

- Current - Decided to give away source for free