Introduction

In the first article, we reviewed how Excellence works. In this article, we will create a sample application that uses Excellence.

Business Scenario

Here is a sample business scenario (all names are fictitious):

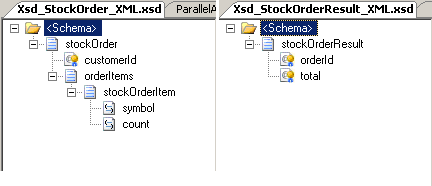

Connexita is a stockbroking company that receives buy orders from its customers via a Web Service (for simplicity, we do not cover selling stocks). Each order contains the customer ID and a list of order items. Our orchestration receives the order and returns its total value based on current prices, so that the final quote can be sent to the customer for confirmation.

Our orchestration needs to get the current price of each stock from a third-party Web Service provided by Stock Services Limited. If the stock is listed on a foreign stock exchange, the orchestration also has to call the same Web Service for the exchange rate in order to calculate the order item price. The Web Service makes this easier by converting the price to local currency itself. Finally, the orchestration calculates the total for all items and returns it along with the order ID, so that the customer can go ahead with the purchase if they are happy with the quote. Just to make things a bit more interesting, I have added two parallel actions so that we can test the parallel scenario.

You might find the scenario a bit woolly, but I chose it because it is simple yet provides enough of a challenge for testing.

Test Setup

We develop the orchestration as we would any other. Then we add the trace outputs. As I mentioned before, these are useful even if you do not do any Unit Testing. First, we define our steps:

public enum StockPurchaseSteps
{
    Connexita_StockPurchase_ReceiveMessage,
    Connexita_StockPurchase_GetRecordCount,
    Connexita_StockPurchase_AssignMarket,
    Connexita_StockPurchase_AssignGetStockPriceRequest,
    Connexita_StockPurchase_SendStockPriceRequest,
    Connexita_StockPurchase_ReceiveGetStockPriceResult,
    Connexita_StockPurchase_ItIsForeignExchange,
    Connexita_StockPurchase_ItIsLocalStock,
    Connexita_StockPurchase_CalculateItemValue,
    Connexita_StockPurchase_AssignExchangeRateRequest,
    Connexita_StockPurchase_SendExchangeRateRequest,
    Connexita_StockPurchase_ReceiveExchangeRateResult,
    Connexita_StockPurchase_IncrementLoopCounter,
    Connexita_StockPurchase_ParallelAction1,
    Connexita_StockPurchase_ParallelAction2,
    Connexita_StockPurchase_AssignOrderResult,
    None
}

As you can see, we use the <Project>_<Orchestration>_<Step> naming convention, which guarantees that step names are unique.

Then, after each shape, we add an Expression shape that outputs the trace. For example:

System.Diagnostics.Trace.WriteLine(System.String.Format("{0}_{1}",
    Connexita.StockPurchase.Helper.StockPurchaseSteps.Connexita_StockPurchase_ReceiveMessage,
    msgOrder.customerId));

For order-item-level trace outputs, we need more context than the customer ID, so we also include the stock symbol:

System.Diagnostics.Trace.WriteLine(System.String.Format("{0}_{1}_{2}",
    Connexita.StockPurchase.Helper.StockPurchaseSteps.Connexita_StockPurchase_AssignGetStockPriceRequest,
    msgOrder.customerId, symbol));
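The same two format strings reappear in every Expression shape and in every Wait() in the tests, so they must stay in sync. One option is a small shared helper that builds the key in one place. The sketch below is a minimal version that assumes a hypothetical `TraceKey` class (not part of the toolkit) and a trimmed copy of the step enum. It relies on the fact that formatting an enum value with `{0}` produces the member name.

```csharp
using System;

// Trimmed copy of the article's step enum (only the members used here).
public enum StockPurchaseSteps
{
    Connexita_StockPurchase_ReceiveMessage,
    Connexita_StockPurchase_AssignGetStockPriceRequest,
    None
}

// Hypothetical helper: builds "<Step>_<customerId>" or "<Step>_<customerId>_<symbol>",
// the same strings the orchestration traces and the tests wait for.
public static class TraceKey
{
    // Order-level steps (before the loop): step name plus customer ID.
    public static string For(StockPurchaseSteps step, string customerId)
    {
        // {0} on an enum value formats as its member name.
        return string.Format("{0}_{1}", step, customerId);
    }

    // Item-level steps (inside the loop): step name, customer ID and stock symbol.
    public static string For(StockPurchaseSteps step, string customerId, string symbol)
    {
        return string.Format("{0}_{1}_{2}", step, customerId, symbol);
    }
}
```

An Expression shape could then call `System.Diagnostics.Trace.WriteLine(TraceKey.For(StockPurchaseSteps.Connexita_StockPurchase_ReceiveMessage, msgOrder.customerId));`, and the test would wait on the same call.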

Now that all the tracing is in place, we can start on the Unit Tests. I personally use NUnit, but pretty much any Unit Test framework will do. You will see that I keep a member variable for the test context, because many of the checks have to be performed on threads other than the one running the test, i.e., in handlers for events that our tests subscribe to. The private class TestContext is defined and initialised for this purpose. Now, let's look at the main test.
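The article does not show TestContext's members. The following is a minimal sketch of the kind of state it holds, inferred from how `_context` is used later (`CustomerId`, `Total`, `Guids`, `ForeignGuids`). It assumes expectation IDs are `Guid`s and uses a hypothetical `OrderItem` shape. Concurrent collections are used because event handlers may touch the context while the test thread is blocked. The failure queue is an added, optional idea: it lets errors seen on other threads be reported from the test thread.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical order item shape (not the toolkit's type).
public class OrderItem
{
    public string Symbol { get; set; }
    public int Quantity { get; set; }
    public double CurrentPrice { get; set; }
}

// Sketch of a context shared between the test thread and event-handler threads.
public class TestContext
{
    public string CustomerId { get; set; }

    // Expected order total, compared against the orchestration's response.
    public double Total { get; set; }

    // Expectation id -> order item, for stock-price and exchange-rate requests.
    public ConcurrentDictionary<Guid, OrderItem> Guids { get; } = new ConcurrentDictionary<Guid, OrderItem>();
    public ConcurrentDictionary<Guid, OrderItem> ForeignGuids { get; } = new ConcurrentDictionary<Guid, OrderItem>();

    // Failures detected on other threads, queued for the test thread to report.
    public ConcurrentQueue<string> Errors { get; } = new ConcurrentQueue<string>();

    public void RecordFailure(string message)
    {
        Errors.Enqueue(message);
    }

    // Call from the test thread, e.g. at the end of the test.
    public void ThrowIfAnyFailures()
    {
        if (!Errors.IsEmpty)
            throw new InvalidOperationException(string.Join(Environment.NewLine, Errors));
    }
}
```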

First of all, we need to make a Web Service call to invoke our orchestration, and for that we first need to build an order. The MessageHelper class helps us create a random list of order items, using randomisation helper classes that inherit from the generic class RandomGeneratorBase. I have included some common implementations of it; most are self-explanatory, but a couple deserve a brief explanation.

The ListRandomGenerator<T> generic class returns a random item from its list of T objects. If we use it with the "unique" option, it removes each item it returns from its internal list, so that it never returns the same item twice. PrioritisedListRandomGenerator<T> is similar, but we can initialise each item in the list with a priority or likelihood: the higher the priority, the more likely that item is to be returned. This is particularly useful for load testing (which is what I originally wrote it for), where options are not equally likely. For example, single, married and divorced are not equally likely values for marital status, so we can initialise them with a priority/likelihood factor.
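Here is a minimal sketch of the two behaviours described above. The toolkit's actual members are not shown in the article, so the stand-in `RandomGeneratorBase<T>` with a single `Next()` method is an assumption. The weighted pick uses the common cumulative-weight technique: choose a point in [0, total weight) and walk the weights until the point falls inside an item's share.

```csharp
using System;
using System.Collections.Generic;

// Stand-in base class (assumed shape: one Next() method).
public abstract class RandomGeneratorBase<T>
{
    protected readonly Random Rng;
    protected RandomGeneratorBase(Random rng) { Rng = rng ?? new Random(); }
    public abstract T Next();
}

// Returns a random item; with unique = true, each returned item is removed,
// so no item is ever returned twice.
public class ListRandomGenerator<T> : RandomGeneratorBase<T>
{
    private readonly List<T> _items;
    private readonly bool _unique;

    public ListRandomGenerator(IEnumerable<T> items, bool unique, Random rng = null) : base(rng)
    {
        _items = new List<T>(items);
        _unique = unique;
    }

    public override T Next()
    {
        if (_items.Count == 0) throw new InvalidOperationException("No items left.");
        int index = Rng.Next(_items.Count);
        T item = _items[index];
        if (_unique) _items.RemoveAt(index);
        return item;
    }
}

// Each item carries a priority (weight): an item with priority 8 is eight times
// as likely to be returned as an item with priority 1.
public class PrioritisedListRandomGenerator<T> : RandomGeneratorBase<T>
{
    private readonly List<KeyValuePair<T, int>> _items = new List<KeyValuePair<T, int>>();
    private int _totalWeight;

    public PrioritisedListRandomGenerator(Random rng = null) : base(rng) { }

    public void Add(T item, int priority)
    {
        if (priority <= 0) throw new ArgumentOutOfRangeException(nameof(priority));
        _items.Add(new KeyValuePair<T, int>(item, priority));
        _totalWeight += priority;
    }

    public override T Next()
    {
        if (_totalWeight == 0) throw new InvalidOperationException("No items added.");
        // Pick a point in [0, totalWeight) and find the item whose share contains it.
        int point = Rng.Next(_totalWeight);
        foreach (var pair in _items)
        {
            if (point < pair.Value) return pair.Key;
            point -= pair.Value;
        }
        throw new InvalidOperationException("Unreachable: weights are inconsistent.");
    }
}
```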

Going back to our Web Service call, we need to invoke the orchestration with an order message. Since our Wait() calls on LogFileResetEvent block, we need to call the Web Service asynchronously. Otherwise, the Web Service call itself would block the test thread and would not return until the opportunity to Wait() for each step had passed. SoapRequester provides an AsyncMakeRequest method for calling the Web Service asynchronously, and we subscribe to the requester's ReceivedResponse event to catch the response. The code that checks the Web Service response and makes sure the totals match is in the event handler:

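void requester_ReceivedResponse(object sender, ReceivedSoapResponseEventArgs e)
{
    // Runs on the requester's thread, not on the thread running the test.
    double actual = double.Parse(
        PseudoXPath.GetValueOfAttribute(e.State.Response, "total"),
        System.Globalization.CultureInfo.InvariantCulture);

    // Compare with a small tolerance: the expected total is a sum of doubles,
    // and the actual one has been round-tripped through XML text.
    Assert.AreEqual(_context.Total, actual, 0.0001);
}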
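SoapRequester's internals are not shown, so here is a minimal sketch of the pattern it follows: start the request off the test thread and raise an event when the response arrives. `AsyncRequesterSketch`, `ResponseEventArgs` and `MakeRequestAsync` are hypothetical names. The actual SOAP call is abstracted as a `Func<string, string>`.

```csharp
using System;
using System.Threading.Tasks;

public class ResponseEventArgs : EventArgs
{
    public ResponseEventArgs(string response) { Response = response; }
    public string Response { get; }
}

// Hypothetical stand-in for an async SOAP requester.
public class AsyncRequesterSketch
{
    // Performs the (blocking) request and returns the response body.
    private readonly Func<string, string> _send;

    public AsyncRequesterSketch(Func<string, string> send) { _send = send; }

    // Raised on a thread-pool thread once the response has been received.
    public event EventHandler<ResponseEventArgs> ReceivedResponse;

    // Returns immediately, leaving the caller free to block in Wait() on traces.
    public Task MakeRequestAsync(string requestXml)
    {
        return Task.Run(() =>
        {
            string response = _send(requestXml);
            ReceivedResponse?.Invoke(this, new ResponseEventArgs(response));
        });
    }
}
```

Because the handler runs on a pool thread, an assertion failure inside it is not raised on the test thread. That is why NUnit reports such failures differently.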

Since the ReceivedResponse handler does not run on the thread running the test, NUnit reports a failure there (if the values do not match) in a different way. It is still obvious enough that it will not be missed. As you can see, I use PseudoXPath, which is just a small Regular Expression tool of mine. It is a lot easier to use than XPath, especially because XPath queries quite commonly run into nasty namespace problems.
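PseudoXPath's source is not shown in the article. The following sketch only illustrates the idea of looking up a value by local name with a regular expression, ignoring any namespace prefix. `PseudoXPathSketch` is a hypothetical name, and it is not a general XML parser: it assumes simple, non-nested element content.

```csharp
using System.Text.RegularExpressions;

// Hypothetical regex helpers in the spirit of PseudoXPath.
public static class PseudoXPathSketch
{
    // Text content of the first <name> or <prefix:name> element, or null if absent.
    public static string GetValueOfElement(string xml, string name)
    {
        string n = Regex.Escape(name);
        var m = Regex.Match(xml,
            @"<(?:[\w.-]+:)?" + n + @"(?:\s[^>]*)?>(?<v>[^<]*)</(?:[\w.-]+:)?" + n + ">");
        return m.Success ? m.Groups["v"].Value : null;
    }

    // Value of the first name="..." or prefix:name='...' attribute, or null if absent.
    public static string GetValueOfAttribute(string xml, string name)
    {
        var m = Regex.Match(xml,
            @"\s(?:[\w.-]+:)?" + Regex.Escape(name) + @"\s*=\s*(?<q>[""'])(?<v>.*?)\k<q>");
        return m.Success ? m.Groups["v"].Value : null;
    }
}
```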

Now, we need to think about the external dependencies of our system. We have two Web Service calls that need to be mocked. I am covering Web Service dependencies here since they are the most complex. I have also developed tools for mocking MQ Series services, but I did not include them in the toolkit because I did not want a dependency on MQ Series; not everyone uses it. If you are interested, though, I can send them to you. I have not included MSMQ tools either, but developing them would be fairly similar to the MQ ones.

So, how can we create a mock Web Service? Some of you might already know about SoapUI, an excellent tool for anyone working with Web Services and WSDLs. You can give it a WSDL, and it will create a mock Web Service for you in a jiffy. While developing, and before setting up Unit Tests, I sometimes use SoapUI's mock Web Service functionality to make sure everything is in place. However, there are two problems with using SoapUI out of the box for our Unit Testing:

- You cannot set up the response in your test. For example, you might want to send back a price of 230 for one stock and a price of 340 for another. SoapUI does allow rule-based responses using XPath, but these are set up outside your test, which is not ideal.

- You cannot check the request sent to SoapUI in your test.

So basically, we have to create our own Web Service mocks in code. The WebServiceMock class provides this facility, and it has been developed so that you can use it alongside SoapUI without having to change any binding or URL for your send ports.

So, we create a mock service by providing the URLs it needs to serve:

WebServiceMock wm =
    new WebServiceMock(new string[] { TestConstants.StockPriceServiceUrl });

The URL has been set up in SoapUI fashion:

public const string StockPriceServiceUrl =
    "http://127.0.0.1:8088/mockStockPriceServiceSoap/";

Please note that WebServiceMock only serves URLs with a slash at the end (a requirement of HttpListener), but SoapUI sets up the URL with no slash at the end. If you want to be able to use both without having to change your send port URLs all the time, you can change the SoapUI URL, but it is not immediately obvious how to do this in SoapUI. First, stop the mock service, press Enter on the service to open the service window, and click the tools icon to the far right of the start and stop icons to open the edit screen. There, you can add the extra slash to the end of the URL.
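On the mock side, one way to avoid the trailing-slash mismatch is to normalise every URL before registering it as an HttpListener prefix, since `HttpListener.Prefixes.Add` rejects prefixes that do not end in "/". This is a minimal sketch with hypothetical helper names. It does not change the send port URL, which still needs the trailing slash as described above.

```csharp
using System;
using System.Net;

public static class ListenerPrefixSketch
{
    // Appends a trailing slash if the URL does not already end with one.
    public static string EnsureTrailingSlash(string url)
    {
        return url.EndsWith("/", StringComparison.Ordinal) ? url : url + "/";
    }

    // Registers the normalised URLs on a new HttpListener (does not start it).
    public static HttpListener CreateListener(params string[] urls)
    {
        var listener = new HttpListener();
        foreach (var url in urls)
            listener.Prefixes.Add(EnsureTrailingSlash(url));
        return listener;
    }
}
```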

Please also bear in mind that you sometimes cannot keep SoapUI open while you run your tests, because SoapUI starts listening on port 8088 as soon as you open it, even before you start any mock service.
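To fail fast with a clear message instead of a confusing listener error, a test setup could first check whether the port is already taken. Here is a rough sketch using `TcpListener`. `PortCheck` is a hypothetical helper, and a loopback bind probe will not detect every kind of conflicting listener.

```csharp
using System.Net;
using System.Net.Sockets;

public static class PortCheck
{
    // Returns false if binding a listener to the loopback port fails,
    // e.g. because SoapUI is already listening on it.
    public static bool IsPortFree(int port)
    {
        var probe = new TcpListener(IPAddress.Loopback, port);
        try
        {
            probe.Start();
            return true;
        }
        catch (SocketException)
        {
            return false;
        }
        finally
        {
            probe.Stop();
        }
    }
}
```

A setup method might then call `Assert.Fail("Port 8088 is in use; is SoapUI open?")` when `PortCheck.IsPortFree(8088)` returns false.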

So, how do we set up the responses in WebServiceMock? Keep in mind that our mock must not only serve two operations (stock price and exchange rate), but must also do so for more than one stock. To achieve this, we set up expectations:

WebServiceExpectation expPrice =
    new WebServiceExpectation(TestConstants.StockPriceServiceUrl,
        new string[] { item.Symbol, TestConstants.GetStockPriceKey },
        MessageHelper.GetStockPriceResponse(item.CurrentPrice));

To create a Web Service expectation, we provide a list of keys and the response it must send back. Keys are substrings that the mock must find in a request, all of them, in order to send back that particular response. Normally, we set up several expectations. The mock service loops through them and replies as soon as it finds an expectation whose keys all match.

For the stock price, we set up the expectation using the order item symbol and the service key, which is just an element name exclusive to each service. This way, each request matches a unique set of keys.
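The matching rule can be sketched as follows: an expectation matches when the URL is the same and every key occurs in the request, and the first match wins. `ExpectationSketch` and `ExpectationMatcher` are hypothetical stand-ins for WebServiceExpectation and the mock's loop. The key "GetStockPrice" below is an assumed value for TestConstants.GetStockPriceKey, whose real value is not shown.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in: a URL, the substrings a request must contain,
// the canned response, and an id that can be used for correlation.
public class ExpectationSketch
{
    public ExpectationSketch(string url, string[] keys, string response)
    {
        Url = url;
        Keys = keys;
        Response = response;
        Id = Guid.NewGuid();
    }

    public Guid Id { get; }
    public string Url { get; }
    public string[] Keys { get; }
    public string Response { get; }

    // True when the URL matches and every key appears somewhere in the request.
    public bool Matches(string url, string request)
    {
        return string.Equals(Url, url, StringComparison.OrdinalIgnoreCase)
            && Keys.All(k => request.IndexOf(k, StringComparison.Ordinal) >= 0);
    }
}

public static class ExpectationMatcher
{
    // First matching expectation in registration order, or null if none matches.
    public static ExpectationSketch FindMatch(
        IEnumerable<ExpectationSketch> expectations, string url, string request)
    {
        return expectations.FirstOrDefault(e => e.Matches(url, request));
    }
}
```

Because keys are plain substrings, a symbol that is contained in another symbol (for example "IBM" and "IBMX") could hit the wrong expectation, so registration order and key choice both matter.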

Now, let's look at how we can set up tests that check the data sent out by BizTalk. Here, we use a concept similar to a correlation ID: for each expected request (i.e., each expectation), we assign a GUID, and when a request received by the mock Web Service matches that expectation, this GUID is passed back to us. All we have to do is keep these GUIDs in our context along with the order item each one is associated with. WebServiceMock has an event called MessageMatchedExpectation, which is raised when an expectation is matched. Again, we can use PseudoXPath on the request to make sure the values have been passed on correctly by BizTalk:

Assert.AreEqual(_context.Guids[e.Expectation.Id].Symbol,
    PseudoXPath.GetValueOfElement(e.Message, "symbol"));

Similarly, for the exchange rate requests that convert the prices, we can write:

Assert.AreEqual(_context.ForeignGuids[e.Expectation.Id].CurrentPrice.ToString(),
    PseudoXPath.GetValueOfElement(e.Message, "price"));

Assert.AreEqual(StockSymbolParser.GetMarket(_context.ForeignGuids[e.Expectation.Id].Symbol),
    PseudoXPath.GetValueOfElement(e.Message, "market"));

So, if all these assertions pass, we can be sure that all the outputs from our orchestration are correct.
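The GUID correlation can be sketched end to end as follows. The test records GUID-to-symbol pairs when it registers expectations. When the mock raises its match event, the handler looks the GUID up and checks the request. `MatchedEventArgs`, `MockSketch` and `CorrelationChecker` are hypothetical names. The symbol check is a simplified substring test that stands in for PseudoXPath.GetValueOfElement.

```csharp
using System;
using System.Collections.Concurrent;

public class MatchedEventArgs : EventArgs
{
    public MatchedEventArgs(Guid expectationId, string message)
    {
        ExpectationId = expectationId;
        Message = message;
    }
    public Guid ExpectationId { get; }
    public string Message { get; }
}

// Hypothetical mock fragment: raises the event when a request matches an expectation.
public class MockSketch
{
    public event EventHandler<MatchedEventArgs> MessageMatchedExpectation;

    public void RaiseMessageMatched(Guid expectationId, string request)
    {
        MessageMatchedExpectation?.Invoke(this, new MatchedEventArgs(expectationId, request));
    }
}

public class CorrelationChecker
{
    private readonly ConcurrentDictionary<Guid, string> _expectedSymbols =
        new ConcurrentDictionary<Guid, string>();

    // Mismatches found on listener threads, for the test thread to inspect.
    public ConcurrentQueue<string> Mismatches { get; } = new ConcurrentQueue<string>();

    // Called by the test when it creates each expectation.
    public void Register(Guid expectationId, string symbol)
    {
        _expectedSymbols[expectationId] = symbol;
    }

    // Subscribed to MessageMatchedExpectation; may run on another thread.
    public void OnMessageMatched(object sender, MatchedEventArgs e)
    {
        string symbol;
        if (!_expectedSymbols.TryGetValue(e.ExpectationId, out symbol))
        {
            Mismatches.Enqueue("Unknown expectation " + e.ExpectationId);
            return;
        }
        // Simplified check: the request must contain <symbol>EXPECTED</symbol>.
        if (e.Message.IndexOf("<symbol>" + symbol + "</symbol>", StringComparison.Ordinal) < 0)
            Mismatches.Enqueue("Expected symbol " + symbol + " for expectation " + e.ExpectationId);
    }
}
```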

Now, let's go back to the essence of Excellence: instrumentation traces. As we covered in part 1, we output a trace for every step in our orchestration. We use a LogFileResetEvent to wait for each and every step, and we fail the test if the trace for any step is not received.
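To make the waiting semantics concrete, here is an in-process sketch of a substring-based Wait()/WaitAll(). It resembles LogFileResetEvent only in spirit: `TraceWaiterSketch` is hypothetical and is fed lines directly rather than reading trace output from the BizTalk host. A key counts as seen once any received line contains it. Each wait gives up after its timeout.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

public class TraceWaiterSketch
{
    private readonly List<string> _lines = new List<string>();
    private readonly object _gate = new object();
    private readonly TimeSpan _timeout;

    public TraceWaiterSketch(TimeSpan timeout) { _timeout = timeout; }

    // Feed one trace line (e.g. from a TraceListener or a log-file tailer).
    public void Add(string line)
    {
        lock (_gate)
        {
            _lines.Add(line);
            Monitor.PulseAll(_gate); // wake any waiting test thread
        }
    }

    public bool Wait(string key)
    {
        return WaitAll(new[] { key }, _timeout);
    }

    // True once every key is contained in some line; false on timeout.
    public bool WaitAll(string[] keys, TimeSpan timeout)
    {
        DateTime deadline = DateTime.UtcNow + timeout;
        lock (_gate)
        {
            while (!AllSeen(keys))
            {
                TimeSpan remaining = deadline - DateTime.UtcNow;
                if (remaining <= TimeSpan.Zero || !Monitor.Wait(_gate, remaining))
                    return AllSeen(keys);
            }
            return true;
        }
    }

    private bool AllSeen(string[] keys)
    {
        foreach (var key in keys)
        {
            bool found = false;
            foreach (var line in _lines)
            {
                if (line.IndexOf(key, StringComparison.Ordinal) >= 0) { found = true; break; }
            }
            if (!found) return false;
        }
        return true;
    }
}
```

One consequence of substring matching is that a key for customer "C4" is also satisfied by a trace for customer "C42". Customer IDs of fixed length, or GUIDs, avoid this.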

The Wait substring for all steps before the loop is set up using just the customer ID. Steps inside the loop, however, include both the customer ID and the stock symbol. So, for the first two steps of the orchestration, we set up these two Wait() statements:

if (!debug.Wait(System.String.Format("{0}_{1}",
        Connexita.StockPurchase.Helper.StockPurchaseSteps.Connexita_StockPurchase_ReceiveMessage,
        _context.CustomerId)))
    Assert.Fail("Timed out on Connexita_StockPurchase_ReceiveMessage");

if (!debug.Wait(System.String.Format("{0}_{1}",
        Connexita.StockPurchase.Helper.StockPurchaseSteps.Connexita_StockPurchase_GetRecordCount,
        _context.CustomerId)))
    Assert.Fail("Timed out on Connexita_StockPurchase_GetRecordCount");
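Every step repeats the same "format key, wait, fail with step name" pattern. Here is a minimal sketch of a helper that removes the repetition. `ITraceWaiter`, `StepWaits` and `RecordingWaiter` are hypothetical names. The helper throws `TimeoutException` to stay framework-neutral; in an NUnit test, it could call `Assert.Fail` instead.

```csharp
using System;

// Trimmed copy of the article's step enum.
public enum StockPurchaseSteps
{
    Connexita_StockPurchase_ReceiveMessage,
    Connexita_StockPurchase_GetRecordCount,
    Connexita_StockPurchase_AssignMarket
}

// Abstraction over whatever provides Wait(string), e.g. LogFileResetEvent.
public interface ITraceWaiter
{
    bool Wait(string key);
}

public static class StepWaits
{
    // Builds "<Step>_<customerId>" or "<Step>_<customerId>_<symbol>" and waits for it.
    public static void WaitForStep(ITraceWaiter waiter, StockPurchaseSteps step,
        string customerId, string symbol = null)
    {
        string key = symbol == null
            ? string.Format("{0}_{1}", step, customerId)
            : string.Format("{0}_{1}_{2}", step, customerId, symbol);
        if (!waiter.Wait(key))
            throw new TimeoutException("Timed out on " + step);
    }
}

// Test double for illustration: records the last key and returns a preset result.
public class RecordingWaiter : ITraceWaiter
{
    public string LastKey;
    public bool Result = true;

    public bool Wait(string key)
    {
        LastKey = key;
        return Result;
    }
}
```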

Since our test loops over the order items in the same way as the orchestration does, we can put the Wait() statements inside the loop in the same order in which the orchestration executes the steps. For example:

if (!debug.Wait(System.String.Format("{0}_{1}_{2}",
        Connexita.StockPurchase.Helper.StockPurchaseSteps.Connexita_StockPurchase_AssignMarket,
        _context.CustomerId, item.Symbol)))
    Assert.Fail("Timed out on Connexita_StockPurchase_AssignMarket");

Depending on whether the stock is local or foreign, we wait for different steps towards the end of the loop. This condition mirrors the one in the orchestration.

if (item.IsLocal())
{
    if (!debug.Wait(System.String.Format("{0}_{1}_{2}",
            Connexita.StockPurchase.Helper.StockPurchaseSteps.Connexita_StockPurchase_ItIsLocalStock,
            _context.CustomerId, item.Symbol)))
        Assert.Fail("Timed out on Connexita_StockPurchase_ItIsLocalStock");

    ….

Finally, we set up our parallel steps check:

if (!debug.WaitAll(new string[]
    {
        System.String.Format("{0}_{1}",
            Connexita.StockPurchase.Helper.StockPurchaseSteps.Connexita_StockPurchase_ParallelAction1,
            _context.CustomerId),
        System.String.Format("{0}_{1}",
            Connexita.StockPurchase.Helper.StockPurchaseSteps.Connexita_StockPurchase_ParallelAction2,
            _context.CustomerId)
    }, ParallelAction.MaxDelayInMS * 2))
    Assert.Fail("One of the parallel actions did not finish.");

There isn't much happening in the parallel actions: each one just calls DoAction(), which simply waits for a random span of time. This should suffice, however, to show how parallel shapes can be handled and Unit Tested using Excellence.
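Here is a minimal sketch of what such a DoAction() could look like. It assumes a hypothetical `ParallelActionSketch` class and a `MaxDelayInMS` of 500, since the article does not give the real value. Each branch waits at most MaxDelayInMS. A WaitAll() timeout of `MaxDelayInMS * 2` therefore leaves room for both branches to finish even if they run one after the other.

```csharp
using System;
using System.Threading;

public static class ParallelActionSketch
{
    // Assumed value; the article does not state MaxDelayInMS.
    public const int MaxDelayInMS = 500;

    // Random is not thread-safe, so each thread that calls DoAction gets its own instance.
    private static readonly ThreadLocal<Random> Rng =
        new ThreadLocal<Random>(() => new Random(Guid.NewGuid().GetHashCode()));

    // A random delay in the range [0, MaxDelayInMS] milliseconds.
    public static int NextDelay()
    {
        return Rng.Value.Next(MaxDelayInMS + 1);
    }

    // The whole "work" of a parallel branch: sleep for a random span of time.
    public static void DoAction()
    {
        Thread.Sleep(NextDelay());
    }
}
```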

Well, that was all! Unit Testing an orchestration that has conditions, loops and parallel shapes, and that both exposes and calls Web Services, was as simple as that.

There are just a few final points to mention. First, NUnit's console window shows various information output by LogFileResetEvent, which is especially helpful for debugging parallel WaitAll() calls. Also, it is best to include only one key per parallel branch, usually for the branch's last step. For example, if your parallel shape has three branches, call WaitAll() with three keys, one per branch. This keeps the test simple. I have also seen occasional synchronization oddities with WaitAll(); they are rare, but they do occur. I would appreciate it if you let me know should you come across these minor bugs.

History

- 9th March, 2009: Initial post

- 24th March, 2009: Updated source code