Introduction

For those developers who come from a red-green-refactor – solid Unit Testing –background, BizTalk development could be a disappointing revelation.

I myself was never a purist Unit Tester as I believed that value in Unit-Testing is achieved in common-sense and moderate approach rather than the extreme one, but I was startled to see how cumbersome BizTalk testing was. Everything from compilation to deployment to restarting the host is slow, dependencies to external services are numerous, and instrumentation not easily achievable. You actually cannot see what is happening. With time and experience, however, we find ways to overcome some of the problems, but BizTalk testing could generally be annoying and inefficient.

In the same habit of normal C# development, I started looking for BizTalk Unit Testing frameworks, and I was pleased to find Kevin Smith’s BizUnit. After studying and working with it for a bit, I realised that although it improves the quality of development, it has a lot to be desired. First of all, granularity of the tests is at the level of outputs of the system. For a complex orchestration, we usually need more leverage than just the output of the system, since many steps could be happening before any message is outputted from the orchestration, and if something goes wrong, it is not immediately visible what went wrong. Also, I failed to find an easy means to handle parallel or multi-message scenarios. The configuration based approach for Unit Testing – I believe – is as good as pure code approach, while I personally did not find it particularly useful, and prefer pure code for its flexibility – at the end of the day, we are writing Unit Tests which usually compiles and runs on the developer’s machine.

So, I started writing a mini-framework for Unit Testing which could provide more leverage, and now after a year and writing Unit Tests for more than a few projects using it, I believe it is worth sharing it. Excellence has these features/advantages:

- Shape level granularity for tests

- Shape level error reporting which saves time with debugging the orchestration

- Ability to handle parallel or multi-message scenarios

- Access to intermediary values in the orchestration – if you wish to expose them

- Enforcing an instrumentation paradigm which is useful in development as well as after deployment

- A suite of tools for mocking various systems and services that can be easily expanded

- Randomisation tools for simulating flavours of real life cases in production

- Ability to test outputs from the system – similar to BizUnit

- Extensible – similar to BizUnit

Tracing and Excellence

Just before getting into further details, it might be useful – for those purists out there – to know that Excellence, like BizUnit, is not a true Unit-Testing framework as it is not designed to test a unit of code as such. It is perhaps rather system testing, or some might call it integration testing, but the unit we deal here is usually an orchestration – including the orchestrations it calls. The level of isolation that is often easily achievable in code Unit Testing is impossible in BizTalk as it would be pure black magic – if ever possible to invoke a shape on its own.

Excellence is built upon an instrumentation paradigm where after every shape in the BizTalk orchestration, we output a trace entry using System.Diagnostic.Trace. I believe most BizTalk developers are quite familiar with trace outputs which are similar to Console.WriteLine() or good old MessageBox for debugging. Traces are cheap, and can be safely left in production code as the performance hit is normally in the viewing entries and not writing them.

Each trace entry will consist of two elements: Shape ID (Step ID) and Context ID. I have conveniently used the <Project>_<Orchestration>_<ShapeNameOrAction> naming convention for my shape IDs, but you could use your own. I normally define all shape IDs as an enumeration and use the name (and not the integer value) of the enumeration for tracing. Context ID is the familiar concept of “which message we are processing now”. This would depend on the project, but it is useful to include a hierarchy of information in the context, e.g., <CustomerId>_<OrderId>_<OrderItemId>, rather than just the <OrderItemId>. This usually proves invaluable for debugging in development as well as finding and resolving problems in production.

Now, it is important to understand what System.Diagnostic.Trace really does. This object exposes static methods to output trace entries. Its behaviour can be controlled via the <system.diagnostic> section of the configuration file. A call to Write() or WriteLine() will loop through all the Listeners defined for the Trace. By default, only one listener is defined: DefaultTraceListener. This class is a thin wrapper around the native Windows API OutputDebugString:

[DllImport("kernel32.dll", CharSet=CharSet.Auto)]

public static extern void OutputDebugString(string message);

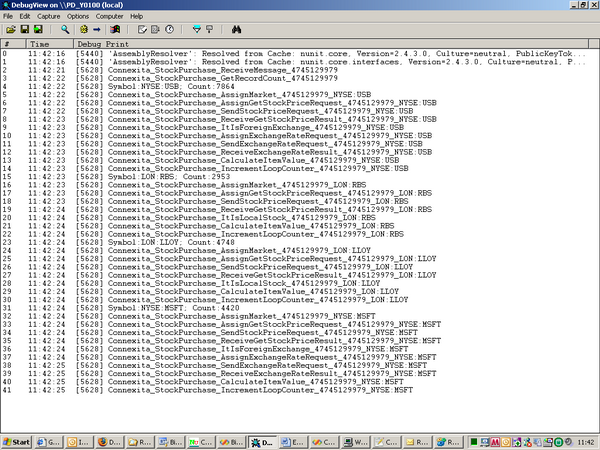

This output can be seen using Sysinternal’s DebugView which is a very useful tool, and as we will see, essential to Excellence.

You could use the <system.diagnostic> section of the configuration file to add more listeners, e.g., TextFileListener or EventLogListener. Alternatively, you can use the static Listeners property of the Trace class to add listeners.

I doubt if you would ever want to disable harmless default trace outputs, but if ever you need to (e.g., on a production server where resources are scarce), you could simply do that using this configuration:

<system.diagnostics>

<trace>

<listeners>

<remove name="Default" />

</listeners>

</trace>

</system.diagnostics>

This means that using trace is absolutely safe to be left in the production code as you can disable absolutely any trace output, including the default one.

So, the idea behind Excellence is simple: to set up the test so that for each shape, we wait for the right string output (which has the shape ID and context ID combined as one string), and when we receive it, we wait for the next, and so on. If we do not receive the expected trace output after a timeout period, we find out that something has gone wrong exactly on that shape, and the test fails.

Capturing Traces

Now, how can we access the trace outputs of a different process? Well, if DebugView can do it, so can we. First, we cover the process debugging which is the natural way to programmatically access debug outputs, and then we discuss what finally was used. So, please skip this section if you are not that interested in how Windows debug output works.

So, how do we access another process’ debugs? This is actually achieved by debugging the process:

- Find the process ID of BTSNTSvc.exe that runs the orchestrations. If you have one, then it would be easy to loop through

Process.GetProcesses() and find the only process that has ProcesssName == “BTSNTSvc.exe”. If you have more than one host (quite likely), it is a bit tricky, and you might have to provide it as a parameter to your tests. - Calling the

DebugActiveProcess API to start debugging the process. - Calling

DebugSetProcessKillOnExit so that after we stop the debugging, the process carries on. - We create a loop in which we call

WaitForDebugEvent with a timeout in order to receive debug messages. One of these messages is OUTPUT_DEBUG_STRING_EVENT, which we have sent using System.Diagnostic.Trace, and will read the string message by creating a buffer for the message and copying the string. After this, we call ContinueDebugEvent to carry on. - At the end of debugging, we call

DebugActiveProcessStop.

Easy? Well, not quite. First of all, resources and samples on the Win32 Debug are pretty limited and sketchy, and doing it in managed code is actually black art. One of the main headaches was defining structures in C#. None of the samples I found ever worked, and with some jiggery-pokery, I got it working for normal managed code. I tested it with a console application, and yes, it was indeed working.

Then, I tried it on BizTalk, and there were problems. First of all, debugging critical processes requires a privilege which threads normally do not have. But, this is easy to achieve:

Process.EnterDebugMode();

And, once you have finished:

Process.LeaveDebugMode();

The main problem, however, was that after a few seconds into debugging BTSNTSvc.exe, it would crash. I think the problem was defining the DEBUG_EVENT structure. One of the main problems with it is that .NET is strongly typed and WaitForDebugEvent is not. It is a catch-all for various debug information, and each receiving structure has a different size. So, when we call WaitForDebugEvent, we do not know what type the next debug event will be to use the appropriate message. My solution (which was probably not the best) was to define one common structure which would cover all scenarios. This works fine until an exception is thrown. BizTalk always throws two exceptions when it wakes up for the first time (you could see these in the DebugView window), and this was the cause of the crash. In any case, I would appreciate if someone could point out where the problem is and how to fix it.

If you have skipped the previous section, now is the time to join in. So, if debugging the BTSNTSvc.exe process is not as much fun as we had hoped for, what is the solution? The solution comes with the DebugView tool itself: it has a feature to save the output to a text file, and we can simply read the file and look for trace entries. All we have to do is to make sure that, before we run the tests, DebugView is open and we are saving the output to a predefined text file, which is trivial to do. You would get to the habit of keeping DebugView while developing and testing anyway; the only thing to remember (which has caught me a few times and will probably catch you) is to enable the option to save to the log file. When you do that, you would see a green arrow on the disk icon; this is a good indicator to get used to.

So, the idea is to have a ResetEvent – similar to AutoResetEvent – which can wait for a particular trace entry and block the current thread until you either find the entry or it times out. The IInstrumentationResetEvent interface defines the common behaviour of such reset events, namely the one that debugs and gets the trace outputs, and the other that looks through a log file to find the entry specified by the test. An alternative could be a similar ResetEvent which interrogates the event log for a specific entry.

So, basically, you could create your tests, and use an implementation of IInstrumentationResetEvent generated from the factory, and based on your scenario or preference, use different sources (debug, file, event log, etc). IInstrumentationResetEvent exposes the familiar Wait() method which takes a timeout and returns a boolean – in a similar fashion as WaitOne(). AutoResetEvent takes a timeout and returns a boolean. The difference is AutoResetEvent will wait for Set() to be called on it, but IInstrumentationResetEvent objects will do the Set() when it finds a matching entry, and for this reason, Wait() accepts a substring to match in the entries it interrogates. WaitAll() is similar, with the only difference being it accepts a string array and does not return until it finds all the substrings, each in one or the other entry. This is used for Unit-Testing parallel scenarios, and also in the case where we are testing a non-serial multi-message most likely in a disassembler pipeline which breaks a message into several messages and each message is processed separately and quite likely in parallel.

A Basic Unit Test

As you will see with part two of the article, a basic Unit Test (for the first shape of the orchestration) will be:

using (LogFileResetEvent debug =

new LogFileResetEvent(TestConstants.LogFileName,

TestConstants.PollingInterval,

TestConstants.StepTimeOut))

{

if (!debug.Wait(System.String.Format("{0}_{1}",

Connexita.StockPurchase.Helper.StockPurchaseSteps.

Connexita_StockPurchase_ReceiveMessage,

_context.CustomerId)))

Assert.Fail("Timed out on Connexita_StockPurchase_ReceiveMessage");

}

So in the code above, after setting up the test to invoke the orchestration, we create an instance of the LogFileResetEvent class and wait for an entry which is made up of the Step ID/Shape ID and the Context ID. The Wait() method is called using a default timeout, and we wait for a substring. If LogFileResetEvent finds the substring in the entries before it times out, it returns true. Otherwise, it returns false and the test will fail.

In the same fashion, you could output internal orchestration variables (not so useful for the whole message and best be used from primitive types) inside the orchestration and use the Wait() method for the expected value. For example, if we are interested in a variable called count, we wait for “count:4” if we have written a trace output inside the orchestration that uses the string formatting “count:{0}” and we expect the count value to be 4. This is for intermediary variables that are not directly outputted from the orchestration; otherwise, we could use the tools to set the test expectation.

The snippet below shows how to use WaitAll() for testing parallel sections of the orchestration:

if(!debug.WaitAll(new string[]

{

System.String.Format("{0}_{1}",

Connexita.StockPurchase.Helper.StockPurchaseSteps.

Connexita_StockPurchase_ParallelAction1,

_context.CustomerId),

System.String.Format("{0}_{1}",

Connexita.StockPurchase.Helper.StockPurchaseSteps.

Connexita_StockPurchase_ParallelAction2,

_context.CustomerId)

}, ParallelAction.MaxDelayInMS * 2))

Assert.Fail("One of the parallel actions did not finish.");

Here, we set the expectation so that WaitAll() only returns when it has found both the ParallelAction1 and ParallelAction2 entries in the trace, or it has timed out, in which case the test fails. This will not tell you which parallel action did not finish, but it would be fairly easy to find out from the trace itself.

So basically, there is no limit to what you can do with tracing while these traces can be equally used for troubleshooting production issues. In order to save the trace outputs on your server, all you have to do is to add another listener to store your trace outputs for you:

<system.diagnostics>

<trace autoflush="true" indentsize="4">

<listeners>

<add name="eventLog"

type="System.Diagnostics.EventLogTraceListener"

initializeData="TraceLog" />

</listeners>

</trace>

</system.diagnostics>

The configuration snippet above will output your traces to the Application event log, as well as the normal debug output which can be seen using DebugView. Using these traces, you could exactly see what has happened to each message. This could also help you with understanding performance problems and bottlenecks, especially if the bottleneck is in a code called from the orchestration, in which case HAT would not give you much information.

So, in brief, we have discussed the Excellence BizTalk Unit Testing framework which provides the paradigm and the tools to effectively Unit Test your orchestrations. It offers a few crucial advantages over existing frameworks, and is easy to set up and use.

In the next article, we cover an example of how to use this framework in practice.