Introduction

I was writing an article on determining the fastest algorithm for sorting and while I was writing I got influenced by the many sorting algorithms and I devised

an algorithm for sorting. It's called Simo because that's the name of someone who is very special to my heart :)

How it works

It is a recursive sorting algorithm that repeats the following steps:

- Get the average value of numbers in the array

- Divide the array into two arrays (array 1 and array 2) and the average value will be chosen as the pivot.

- Any value smaller than the pivot will be in first array and any value larger than the pivot will be in the second array.

- Repeat the same algorithm on array 1 and array 2 till you reach the ending condition

For optimization purposes:

- The ending condition is not a normal ending for a recursive function but it uses some conditions that I devised through try and error, these conditions raise the performance alot.

- If the array size is less than 15 an Insertion Sort is used to decrease overhead, 15 has been found empirically

as the optimal cutoff value in 1996.

The algorithm

This is how the algorithm works:

low is the index of the first element and high is the index of last element.

template <class T>

void simoSort(T a[],int low,int high)

{

if(high-low>15)

{

double average=0;

int n = (high - low + 1);

for (int i = low; i <= high; i++)

average+=a[i];

average/=n;

int k=low;

for(int i = low;i<=high; i++)

if(a[i]<average)

{

int tempValue = a[i];

a[i] = a[k];

a[k] = tempValue;

k++;

}

if(low<k-1 && k-1!=high)

simoSort(a,low,k-1);

if(high>k && low!=k)

simoSort(a,k,high);

}

else

{

for (int i = low + 1; i <= high; i++) {

int value = a[i];

int j;

for (j = i - 1; j >= low && a[j] > value; j--) {

a[j + 1] = a[j];

}

a[j + 1] = value;

}

}

}

Example

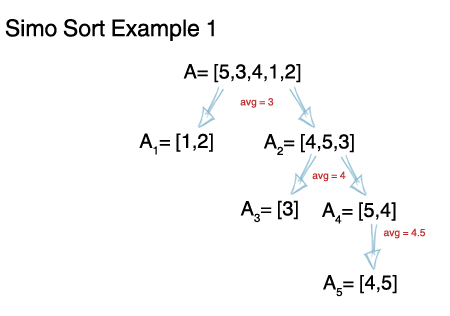

"Example 1" shows how the algorithm works in a normal case.

"Example 2" shows how the algorithm works in its best case scenario where elements of the array have little variance between them.

In the case where there where only 2 numbers "1" and "0" the algorithm had a complexity of O(n)

Note: When the algorithm stops in the shown figures it means a leaf has reached an ending condition.

Complexity

The Search is Stable and has an upper bound space Complexity of O(1)

After doing the time complexity analysis calculations.

- The Average and Worst case scenarios are of (5/6)*nlogn complexity which maps to O(nlogn)

- The Best Case is O(n) as shown in Example 2.

If any one can prove these values wrong please tell me and I will modify them, thanks.

The algorithm is currently faster than quick sort and bucket sort when the variance of the elements is low(not more than 5 different elements)

according to the I benchmark that I made, and I'm currently writing an article that will compare all sorting algorithms including this one.

Limitations

The current version of the algorithm only works on integers. it does not work on chars or double values.

History

1.0 (10 March 2012)

I hope that this article would at least slightly help those who are interested in this issue. Feel free to tell me any comments on the algorithm :)