This article demonstrates texture based rendering. It starts off with the 2D texture approach and explains the issues if we use it, and ends with the 3D texture technique.

Introduction

I was a Win32 application developer and one fine day, I was asked to work in a volume rendering project. I started learning OpenGL, but learning volume rendering was difficult. The reason is that for volume rendering, you get a lot of theories to read but nothing related to a working code explaining why. This article is my effort to fill that gap.

This will give a step by step explanation of the basic concepts of volume rendering techniques. There are different types of volume rendering techniques like Raycasting and texture based rendering. This article demonstrates texture based rendering. It starts off with the 2D texture approach and explains the issues if we use it, and ends with the 3D texture technique. Though I'm using OpenGL to explain, one can easily create it in DirectX as the concept is the same.

There are a lot of techniques (like Depth mixing, MPR, LUT, transfer function, cropping, rendering using shader, etc.) that are used along with a volume rendering project. In fact, those make a volume rendering project complete. To keep this short, I am planning to include those in another article.

Background

A basic knowledge of OpenGL is needed to follow the article as I am not deeply explaining each of the OpenGL APIs. The data attached along with the article doesn't have any headers which makes it easy to work with. The dimension (256x256x109 8 bit) information is hard coded in the application. We can get more test data from here. But that has to be converted to raw data.

The sample application uses 3D textures. So to run this application, the machine should have support for 3D textures. Which means an OpenGL version 1.2 or greater.

We can get the glew library from here. GIF images in this article are created using this application.

Using the Application

A file open dialog will be shown at the start up of the application. We have to select the sample data. We can rotate the image using mouse movements if the left button is pressed.

Using the Code

The attached source will only contain the 3D texture based approach.

CRawDataProcessor - Responsible for reading the data from the file and converting it to textureCTranformationMgr - Handles the transformation and keeps it in a matrixCRendererHelper - Does the OpenGL initialization and volume rendering

What is this Raw Data?

Raw data is nothing but continuous 2D frames. Below is a snapshot of the slices obtained by opening the attached raw data. These 2D frames will be from different positions of the Z axis.

Setting Up OpenGL

There is nothing special that we are doing here other than the usual steps to initialize OpenGL. I am calling the Initialize function from Dialog::OnCreate to initialize. The Resize function handles the setting up of the Ortho projection. As dialog::OnSize will be called in the start up and resize, OnSize is the place where we can set up the ortho. Render() will be called from OneraseBackground() to draw the scene. Glew.h and glew32.lib (along with a supporting graphics card) are needed when we move to 3D textures. This is because the OpenGL version shipped with Windows doesn’t support specifications greater than version 1.1.

As I mentioned, we are using Orthogonal projection with the following values:

- Left = -1

- Right = +1

- Top = +1

- Bottom = -1

- Near = -1

- Far = +1

The above values may slightly change in the Resize function as we are keeping the aspect ratio. Check the resize code. However, we don’t have to bother about the aspect ratio inside the rendering code.

bool CRendererHelper::Initialize( HDC hContext_i )

{

PIXELFORMATDESCRIPTOR stPixelFormatDescriptor;

memset( &stPixelFormatDescriptor, 0, sizeof( PIXELFORMATDESCRIPTOR ));

stPixelFormatDescriptor.nSize = sizeof( PIXELFORMATDESCRIPTOR );

stPixelFormatDescriptor.nVersion = 1;

stPixelFormatDescriptor.dwFlags =

PFD_DOUBLEBUFFER | PFD_SUPPORT_OPENGL | PFD_DRAW_TO_WINDOW ;

stPixelFormatDescriptor.iPixelType = PFD_TYPE_RGBA;

stPixelFormatDescriptor.cColorBits = 24;

stPixelFormatDescriptor.cDepthBits = 32;

stPixelFormatDescriptor.cStencilBits = 8;

stPixelFormatDescriptor.iLayerType = PFD_MAIN_PLANE ;

int nPixelFormat = ChoosePixelFormat( hContext_i,

&stPixelFormatDescriptor );

if( nPixelFormat == 0 )

{

AfxMessageBox( _T( "Error while Choosing Pixel format" ));

return false;

}

if( !SetPixelFormat( hContext_i, nPixelFormat, &stPixelFormatDescriptor ))

{

AfxMessageBox( _T( "Error while setting pixel format" ));

return false;

}

m_hglContext = wglCreateContext( hContext_i );

if( !m_hglContext )

{

AfxMessageBox( _T( "Rendering Context Creation Failed" ));

return false;

}

BOOL bResult = wglMakeCurrent( hContext_i, m_hglContext );

if( !bResult )

{

AfxMessageBox( _T( "wglMakeCurrent Failed" ));

return false;

}

glClearColor( 0.0f,0.0f, 0.0f, 0.0f );

glewInit(); if(GL_TRUE != glewGetExtension("GL_EXT_texture3D"))

{

AfxMessageBox( _T( "3D texture is not supported !" ));

return false;

}

return true;

}

void CRendererHelper::Resize( int nWidth_i, int nHeight_i )

{

GLdouble AspectRatio = ( GLdouble )(nWidth_i) / ( GLdouble )(nHeight_i );

glViewport( 0, 0, nWidth_i, nHeight_i );

glMatrixMode( GL_PROJECTION );

glLoadIdentity();

if( nWidth_i <= nHeight_i )

{

glOrtho( -dOrthoSize, dOrthoSize, -( dOrthoSize / AspectRatio ) ,

dOrthoSize / AspectRatio, 2.0f*-dOrthoSize, 2.0f*dOrthoSize );

}

else

{

glOrtho( -dOrthoSize * AspectRatio, dOrthoSize * AspectRatio,

-dOrthoSize, dOrthoSize, 2.0f*-dOrthoSize, 2.0f*dOrthoSize );

}

glMatrixMode( GL_MODELVIEW );

glLoadIdentity();

}

2D Texture Based Approach

What is Texture?

To those who don’t know what a texture is, think like this, texture is a structure like BITMAP. And there are some functions provided to manipulate a structure.

The main steps involved in using a texture are:

- Generate the texture using

glGenTextures. - Bind the texture and set the texture behavior for zoom in and zoom out, as well as for out of bound conditions (texture coordinates beyond 0-1).

- Load the data to the texture.

- While drawing the vertex, specify the texture coordinates that have to be mapped.

Once the data is loaded into the texture, the actual dimension is no longer needed because the texture coordinates are always specified by 0-1. Whatever may be the size of the texture, it is 1. The advantage is that we just have to map the coordinates and OpenGL will take care of all the scaling needed.

glTexImage2D is the function used to load data to the two dimensional texture. The last parameter to the function is the buffer which holds the data. Below is what happens at the time of glTexImage2D.

I am creating 109 (number of 2D slices of the attached sample data) 2D textures to store each of these frames. Here is the code to read the file and create the textures. A volume image is nothing but 2D frames stacked in Z direction. So let us arrange those 2D textures. Like this:

InitTextures2D() creates as many textures as the number of slices. And loads each texture with each slice.Draw() arranges the textures in the z axis.

bool InitTextures2D( LPCTSTR lpFileName_i )

{

CFile Medfile;

if( !Medfile.Open(lpFileName_i ,CFile::modeRead ))

{

AfxMessageBox( _T( "Failed to read the raw data" ));

return false;

}

m_uImageCount = 109;

m_uImageWidth = 256;

m_uImageHeight = 256;

m_puTextureIDs = new int[m_uImageCount] ;

char* chBuffer = new char[ 256 * 256 ];

glGenTextures(m_uImageCount,(GLuint*)m_puTextureIDs );

for( int nIndx = 0; nIndx < m_uImageCount; ++nIndx )

{

Medfile.Read(chBuffer, m_uImageWidth*m_uImageHeight);

glBindTexture( GL_TEXTURE_2D, m_puTextureIDs[nIndx] );

glTexEnvi(GL_TEXTURE_ENV, GL_TEXTURE_ENV_MODE, GL_REPLACE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, m_uImageWidth, m_uImageHeight , 0,

GL_LUMINANCE, GL_UNSIGNED_BYTE,(GLvoid *) chBuffer);

glBindTexture( GL_TEXTURE_2D, 0 );

}

delete[] chBuffer;

return true;

}

void Render()

{

float fFrameCount = m_uImageCount;

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT );

glEnable(GL_DEPTH_TEST);glMatrixMode(GL_MODELVIEW):

glLoadIdentity();

glEnable(GL_TEXTURE_2D);

for(int nIndx=0; nIndx <m_uImageCount;++nIndx)

{

glBindTexture(GL_TEXTURE_2D,m_puTextureIds[nIndx]);

glBegin(GL_QUADS);

MAP_2DTEXT(nIndx);

glEnd();

glBindTexture(GL_TEXTURE_2D,0);

}

}

What Does the MAP_2DTEXT Macro Do?

#define MAP_2DTEXT( TexIndex ) \

glTexCoord2f(0.0f, 0.0f); \

glVertex3f(-dViewPortSize,-dViewPortSize,(TexIndex *2*dViewPortSize/fFrameCount)-1.0f);\

glTexCoord2f(1.0f, 0.0f); \

glVertex3f(dViewPortSize,-dViewPortSize,(TexIndex *2*dViewPortSize/fFrameCount)-1.0f);\

glTexCoord2f(1.0f, 1.0f); \

glVertex3f(dViewPortSize,dViewPortSize,(TexIndex *2*dViewPortSize/fFrameCount)-1.0f);\

glTexCoord2f(0.0f, 1.0f); \

glVertex3f(-dViewPortSize,dViewPortSize,(TexIndex *2*dViewPortSize/fFrameCount)-1.0f);

What does the above code do? It simply specifies the texture coordinate and the corresponding vertex of the quad. The texture coordinate (0,0) is mapped to the vertex (-1,-1) and the texture coordinate (1,1) mapped to the vertex(1,1). (0,1) gets mapped to (-1,1) and (1,0) to (1,-1). This is repeated for each quad that is drawn in the z axis. See in the for loop, the texture that we are binding every time is different. As the for loop is from 0-109, the z position in the vertex needs to have some conversion to make it range from (-1) to (+1).

Are we seeing anything? Yea that’s the first slice.

This is because; we started arranging the frames from -1 to +1 in Z axis and due to the depth test property, we are seeing what is near to us (see the ortho).

Applying Alpha

The next step is to remove those black colors from our frames, isn’t it? In OpenGL, there is a feature called Alpha test. That means, we can specify an alpha value and alpha criteria.

glEnable( GL_ALPHA_TEST );

glAlphaFunc( GL_GREATER, 0.05f );

This means OpenGL will draw the pixel only if its alpha value is greater than 0.05f. But our data doesn’t have any alpha value. Let’s revisit the texture creation and add the alpha value.

for( int nIndx = 0; nIndx < m_uImageCount; ++nIndx )

{

Medfile.Read(chBuffer, m_uImageWidth*m_uImageHeight);

glBindTexture( GL_TEXTURE_2D, m_puTextureIDs[nIndx] );

glTexEnvi(GL_TEXTURE_ENV, GL_TEXTURE_ENV_MODE, GL_REPLACE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

for( int nIndx = 0; nIndx < m_uImageWidth*m_uImageHeight; ++nIndx )

{

chRGBABuffer[nIndx*4] = chBuffer[nIndx];

chRGBABuffer[nIndx*4+1] = chBuffer[nIndx];

chRGBABuffer[nIndx*4+2] = chBuffer[nIndx];

chRGBABuffer[nIndx*4+3] = 255;

if( chBuffer[nIndx] < 20 )

{

chRGBABuffer[nIndx*4+3] = 0;

}

}

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, m_uImageWidth, m_uImageHeight , 0,

GL_RGBA, GL_UNSIGNED_BYTE,(GLvoid *) chRGBABuffer );

glBindTexture( GL_TEXTURE_2D, 0 );

}

void Render()

{

float fFrameCount = m_uImageCount;

glClear( GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT );

glEnable( GL_ALPHA_TEST );

glAlphaFunc( GL_GREATER, 0.5f );

glEnable( GL_DEPTH_TEST );

glMatrixMode( GL_MODELVIEW );

glLoadIdentity();

glEnable(GL_TEXTURE_2D);

for ( int nIndx = 0; nIndx < m_uImageCount; nIndx++ )

{

glBindTexture( GL_TEXTURE_2D, m_puTextureIDs[nIndx]);

glBegin(GL_QUADS);

MAP_2DTEXT( nIndx );

glEnd();

glBindTexture( GL_TEXTURE_2D, 0 );

}

}

We can check the pixel value and set its alpha value as 0 (fully transparent) and for all other pixel values it can be set as 255. This temporary buffer is supplied to the texture. Check the internal property of the texture and the type of the buffer in the glTexImage2D is changed. Render again.

If we have different levels of alpha, then we can play with glAlphaFunc giving different values as the alpha criteria. So for that, we are simply making the alpha data same as the luminance data here. This means a pixel with luminance value 0 will have its alpha as 0, a pixel with 255 will get alpha as 255, and a pixel with a value in between will get the alpha in between.

Now if we try increasing the value of the second parameter in glAlphaFunc, we can see something like this:

Apply Blend

Even though we have managed to get an overview of the data, it still doesn't look good. When we view an object in the real world, the rays will pass through the object according to its transparency and we will get a mix of colors till that. How do we achieve this? Remember Blending in OpenGL? Yeah, we can enable blending and draw each frame.

Remember to remove the depth test.

void CRendererHelper::Render()

{

float fFrameCount = m_uImageCount;

glClear( GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT );

glEnable(GL_BLEND);

glBlendFunc( GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA );

glMatrixMode( GL_MODELVIEW );

glLoadIdentity();

glRotated( mfRotation, 0, 1.0,0 );

glEnable(GL_TEXTURE_2D);

for ( int nIndx = 0; nIndx < m_uImageCount; nIndx++ )

{

glBindTexture( GL_TEXTURE_2D, m_puTextureIDs[nIndx]);

glBegin(GL_QUADS);

MAP_2DTEXT( nIndx );

glEnd();

glBindTexture( GL_TEXTURE_2D, 0 );

}

}

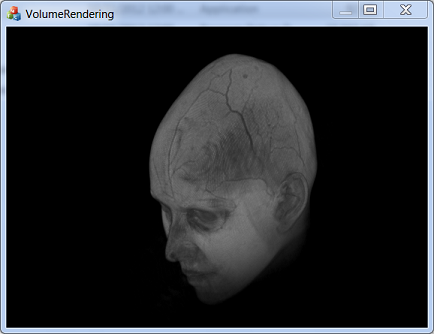

Now this looks pretty good, isn't it?

Let's Do Some Transformations on the Image Now

Rotation is simple with OpenGL. Use glRotate.

glMatrixMode( GL_MODELVIEW );

glLoadIdentity();

glRotated( mfRotation, 0, 1.0,0 );

Did you notice some issues here while rotating?

Rotation is Not Proper after 90 Degrees

An 180 degree image looks exactly like the horizontal flipped image of the image without any rotation. It was okay if we were drawing only a 2 D surface, but for 3D data, this is wrong.

Why this behavior? We are mapping the textures to the quad, and rotation is applied to the quad. We are using blending to see the data and there is no depth test.

Let us consider a case where there are no rotations. We are drawing 109 quads in order. The quad drawn at -1 of the z axis maps the 0texture. This is first drawn on the frame buffer. Then on top of this, the texture at 1st index and so on. As there is no depth test enabled, whatever is drawn gets blended.

Consider an 180 degree rotation.

The drawing and mapping code is same. But we are applying rotation. Now when the first quad is drawn at z axis -1, the rotation gets applied and it goes rotating and will be positioned at the +1 axis and it will be horizontally flipped. The texture at 1st index will go to the positive counter part of the axis flipped (imagine looking at an image from the other side of the paper). Like this, all the textures get flipped and blended resulting in a horizontally flipped image.

Screen Goes Blank When It Comes 90 Degree

As it rotates reaching 90 degree, the image gradually loses details and goes blank at 90 degrees. Same for 270 degrees. See the images.

Why is this Happening?

As we are rotating the quads, we are not getting enough samples to blend whenever it gets rotated. As it reaches 90 degrees, all the quads we are drawing become parallel to our eyes. In other words, we are not drawing anything in the Y-Z plane. So when the X-Y plane rotates 90 degrees, there is nothing we can see as OpenGL doesn’t draw the edge of the quad.

To get the actual image at 180 degree rotation, we need to have the texture at the other end drawn first.

How Do We Fix This?

Texture Rotations

OpenGL supports a matrix called texture matrix. We can apply transformations in the texture matrix, and it will get affected during texture mapping. But with 2D textures, it is of no use.

Move to 3D Textures

3D textures have the advantage of z axis. Like the width and height gets normalized to 1 in a 2D texture, the number of slices or the z axis also gets normalized to 1 in a 3D texture. How will this resolve our transformation with 2D textures?

Let us change the quad mapping code and transformations.

#define MAP_3DTEXT( TexIndex ) \

glTexCoord3f(0.0f, 0.0f, ((float)TexIndex+1.0f)/2.0f); \

glVertex3f(-dViewPortSize,-dViewPortSize,TexIndex);\

glTexCoord3f(1.0f, 0.0f, ((float)TexIndex+1.0f)/2.0f); \

glVertex3f(dViewPortSize,-dViewPortSize,TexIndex);\

glTexCoord3f(1.0f, 1.0f, ((float)TexIndex+1.0f)/2.0f); \

glVertex3f(dViewPortSize,dViewPortSize,TexIndex);\

glTexCoord3f(0.0f, 1.0f, ((float)TexIndex+1.0f)/2.0f); \

glVertex3f(-dViewPortSize,dViewPortSize,TexIndex);

bool CRendererHelper::InitTextures3D( LPCTSTR lpFileName_i )

{

CFile Medfile;

if( !Medfile.Open(lpFileName_i ,CFile::modeRead ))

{

AfxMessageBox( _T( "Failed to read the raw data" ));

return false;

}

m_uImageCount = 109;

m_uImageWidth = 256;

m_uImageHeight = 256;

m_puTextureIDs = new int[m_uImageCount] ;

char* chBuffer = new char[ m_uImageWidth*m_uImageHeight *m_uImageCou ];

char* chRGBABuffer = new char[ m_uImageWidth*m_uImageHeight*m_uImageHeight*4 ];

glGenTextures(1,(GLuint*)&mu3DTex );

Medfile.Read(chBuffer, m_uImageWidth*m_uImageHeight*m_uImageCount);

glBindTexture( GL_TEXTURE_3D, mu3DTex );

glTexEnvi(GL_TEXTURE_ENV, GL_TEXTURE_ENV_MODE, GL_REPLACE);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_BORDER);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_BORDER);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_BORDER);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

for( int nIndx = 0; nIndx < m_uImageWidth*m_uImageHeight*m_uImageCount; ++nIndx )

{

chRGBABuffer[nIndx*4] = chBuffer[nIndx];

chRGBABuffer[nIndx*4+1] = chBuffer[nIndx];

chRGBABuffer[nIndx*4+2] = chBuffer[nIndx];

chRGBABuffer[nIndx*4+3] = chBuffer[nIndx];

}

glTexImage3D(GL_TEXTURE_3D, 0, GL_RGBA,

m_uImageWidth, m_uImageHeight , m_uImageCount, 0,

GL_RGBA, GL_UNSIGNED_BYTE,(GLvoid *) chRGBABuffer );

glBindTexture( GL_TEXTURE_3D, 0 );

delete[] chBuffer;

delete[] chRGBABuffer;

return true;

}

void CRendererHelper::Render()

{

float fFrameCount = m_uImageCount;

glClear( GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT );

glEnable( GL_ALPHA_TEST );

glAlphaFunc( GL_GREATER, 0.03f );

glEnable(GL_BLEND);

glBlendFunc( GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA );

glMatrixMode( GL_TEXTURE );

glLoadIdentity();

glTranslatef( 0.5f, 0.5f, 0.5f );

glRotated( mfRotation, 0, 1.0,0 );

glTranslatef( -0.5f,-0.5f, -0.5f );

glEnable(GL_TEXTURE_3D);

glBindTexture( GL_TEXTURE_3D, mu3DTex );

for ( float fIndx = -1.0f; fIndx <= 1.0f; fIndx+=0.003f )

{

glBegin(GL_QUADS);

MAP_3DTEXT( fIndx );

glEnd();

}

}

Now the rotation is applied to the texture matrix. And quad is mapped with the corresponding z axis position of the 3D textures. So when we apply rotation, the quad is never transformed. The quad gets drawn in the XY plane all the time. The 2D plane of the texture it maps gets rotated. So for a 180 degree rotation, the quad drawn at the -1 Z axis maps the texture coordinate 1 in the texture z axis. As the 109 planes are drawn parallel, there will always be 109 pixels to be blended in any direction. Irrespective of the number of slices, we can draw more planes and map the corresponding z position of the 3D texture because of the interpolation property of the texture.

When we use texture rotation, we should use the texture property to be GL_CLAMP_TO_BORDER to get a correct image. Because when the texture coordinate gets rotated, texture coordinates may even be beyond 0-1. We don’t want to emit data when the coordinate is beyond that.

We have 256 pixels in the x axis, 256 in the y axis, and 109 in the z axis. See what happens when we rotate the image in y direction for 90 degrees. Now it is the z side being shown as the x axis. We are mapping the values from -1 to +1 in all the axes. So the 109 pixel is now mapped to -1 to +1 in axis and we can see that that side is looking bigger compared to the other side. We should scale that to get the correct size. Usually for the actual data, we will get the pixel per mm value in x, y, and z so that we can scale accordingly. But here it's just raw data. So I am using the dimension itself for scaling. Here, I have made the width to be mapped to -1 - +1 and all other sides are scaled according to the width.

Also, I have applied a negative scale in the y axis to avoid the flip which we get because of gltexImage3D (see the gltexImage2D data transfer). This flip can be achieved by mapping the texture upside down also.

glMatrixMode( GL_TEXTURE );

glLoadIdentity();

glTranslatef( 0.5f, 0.5f, 0.5f );

glScaled( (float)m_pRawDataProc->GetWidth()/(float)m_pRawDataProc->GetWidth(),

-1.0f*(float)m_pRawDataProc->GetWidth()/(float)(float)m_pRawDataProc->GetHeight(),

(float)m_pRawDataProc->GetWidth()/(float)m_pRawDataProc->GetDepth());

glMultMatrixd( m_pTransformMgr->GetMatrix());

glTranslatef( -0.5f,-0.5f, -0.5f );

Points of Interest

If you want to play with data of different dimension and type (LUMINANCE 16 etc.), then changes have to be made in CVolumeRenderingDlg::OnInitDialog and in CRawDataProcessor::ReadFile. If the dimension is other than the order of 2, then pixel alignment has to be corrected before doing glTexImage3D.

History

- 26th October, 2012 - Initial version

- 31st October, 2012 - Updated article

- 30th June, 2012 - Fixed this mistake in the code. Thanks to Englishclive for pointing it out.