Introduction

Image classification takes me back to my college days, when I attended many AI courses and played with it for the first time. At that time it was a very experimental field: to use it in a project, you often had to implement a lot of things yourself and fight with the code before you had something to test.

A few years ago, when I had the opportunity to play with it again, I found a very different scenario. Everything that was “experimental” in my college days is now “standard”, and academic topics are now just libraries. When I discovered OpenCV, TensorFlow, and Keras, I was amazed but scared at the same time. You can put together a few lines of code copied from the internet and have a trained network without knowing anything about AI. That’s the power of progress.

In this article, I wanted to go a step further. In most cases, the image classification process with AI is much the same. You have some samples (images, in our case), each one labeled with a description of its content. For example, you may have 1000 animal images, and you must know what each one is. Then you have to design a model that is able to learn from the samples the rule to decide what is what. After that, you need to train the model, maybe making more than one attempt while tuning the parameters. This process is quite long, even though hardware progress makes it nothing compared to my college days. So relax and wait until the training process finishes. Finally, you have a trained model that is able to predict what is in an image based on what you taught it.

Well, why not create a web application to manage all this stuff? Imagine a tool that allows you to upload images and define their labels. In this application, you can also define the model and start the training. In the end, you can download the model, or consume it through web services by just sending an image and getting back the result.

In this scenario, you do not need a dev environment to play with AI. Just open the browser, define the model, click the training button and wait for the result.

Basically, that’s the project I built for this article and, because it sits on top of Keras, I called it KerasUI.

By the way, I will also take the opportunity to introduce image classification to newcomers.

Article Roadmap

- Image classification explained to my grandma

- Keras UI: How It Works

- Tutorial

- Points of Interest

Note: This project is part of the image classification contest on CodeProject. Special thanks to Ryan Peden for the article "Cat or not", where I learnt the basics of managing the training process and found the images to test the tool.

Image Classification Explained to My Grandma

The Image Classification problem is the task of assigning to an image one label from a fixed set of categories. This is one of the core problems in Computer Vision that, despite its simplicity, has a large variety of practical applications. In plain words, what you want is that if you give an image of a dog to the computer, it tells you “it’s a dog”.

This is a problem that can be solved using artificial intelligence and computer vision. Computer vision helps to manipulate and preprocess images to get them into a form that the computer can use (from a bitmap to a matrix of relevant values). Once you have the input in a good form, you can apply an algorithm to predict the result.

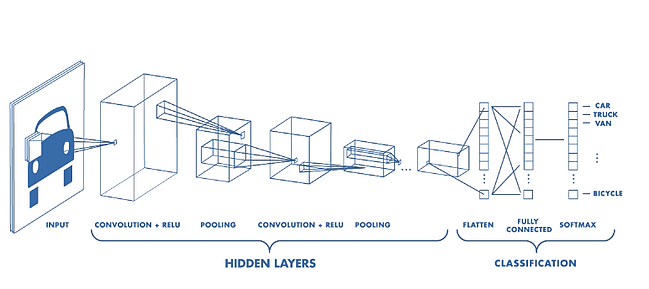

The most common solution nowadays is to use a CNN (Convolutional Neural Network). This kind of neural network is very convenient for image processing and is trained against the dataset you provide.

A dataset is just a list of samples, each one labeled. The main idea is that you teach the machine how to decide by example. Usually, the dataset is divided into a training set, a test set, and a validation set. This is because you want to train the network, then test how it works on separate data until it works as expected. Finally, if you want objective feedback, you must use data the network has never seen: the validation set. (Many texts use the names "test" and "validation" the other way round.) This is required mostly because if you always train the network on the same data, it will drive the error down on those samples but will only work with the samples you provided. So, if you feed it something a little bit different, you won’t get a good result. That’s called overfitting and is something to avoid, because it means the network didn’t abstract the rules but just repeated what you told it. Think about a math expression such as 2*5+10: it’s like remembering that the result is 20 instead of being able to evaluate it.
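As a minimal sketch of the split described above, the following snippet shuffles a list of labeled samples and cuts it into three parts. The function name split_dataset, the 70/15/15 proportions, and the file names are assumptions made for illustration only; KerasUI itself does not contain this code.

```python
import random


def split_dataset(samples, train=0.7, test=0.15, seed=42):
    """Shuffle the samples and split them into training, test and validation lists."""
    if not 0 < train + test < 1:
        raise ValueError("train + test must leave room for a validation set")
    shuffled = list(samples)
    # A seeded generator makes the split reproducible between runs
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_test = round(len(shuffled) * test)
    training = shuffled[:n_train]
    testing = shuffled[n_train:n_train + n_test]
    validation = shuffled[n_train + n_test:]  # whatever is left
    return training, testing, validation


# Hypothetical dataset: 100 (file name, label) pairs
samples = [("img_%03d.png" % i, "cat" if i % 2 else "dog") for i in range(100)]
training, testing, validation = split_dataset(samples)
print(len(training), len(testing), len(validation))  # 70 15 15
```

Shuffling before cutting matters: if the samples are stored label by label, an unshuffled split could leave a whole category out of the training set.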

CNN: The Convolutional Neural Networks

The architecture of a CNN (Source)

The Input

In image classification, we start from... images! It is not hard to understand that an image is a two-dimensional matrix (width * height) composed of pixels. Each pixel is composed of 3 different values in RGB: red, green and blue. To use a CNN, it is convenient to separate the 3 different layers, so the final input matrix that represents your image will be image_size x image_size x 3. Of course, if you have a black & white image, you don't need 3 layers but only one, so you'll have image_size x image_size x 1. If you also consider that your dataset is composed of N items, the whole input matrix will be N x image_size x image_size x 3. The size must be the same for all images, and it cannot be full HD, to avoid an excessively long processing time. There are no written rules for this; it is often a compromise: 256x256 may be a good value in some cases, while in others you will need more resolution.
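To get a feeling for the numbers involved, here is a small sketch that computes the input shape and the amount of values it holds. The helper names input_shape and value_count are hypothetical and only serve this example.

```python
def input_shape(n_images, image_size, channels):
    """Shape of the input matrix: N x image_size x image_size x channels."""
    return (n_images, image_size, image_size, channels)


def value_count(shape):
    """Total number of values stored in a matrix of the given shape."""
    total = 1
    for dim in shape:
        total *= dim
    return total


rgb = input_shape(1000, 256, 3)   # 1000 color images
gray = input_shape(1000, 256, 1)  # the same images in black & white
print(rgb, value_count(rgb))      # (1000, 256, 256, 3) 196608000
print(gray, value_count(gray))    # (1000, 256, 256, 1) 65536000
```

Dropping the color channels divides the input by three, which is one reason grayscale conversion is a common preprocessing step when color carries little information.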

The Convolution

Images contain many details, hints, and shades that are not relevant for the network. All that detail can confuse the training, so the main idea is to simplify the image while keeping all the data that carries information. This is intuitive and easy to say, but how does it work in practice? The CNN uses a convolutional step, the core of this method, to reduce the size of the image while keeping its most relevant parts. The convolution layer has this name because it computes the convolution between a sliding piece of the matrix and a filter. The filter and the piece of the matrix being analyzed have the same size. The filter is called the kernel. To make the matrix size suitable for the kernel size, the matrix can be padded with zeroes on every side. Each kernel multiplication produces a single scalar value, and this is what makes the size drop: for example, a 4x4 kernel moved in steps of 4 over a 32x32 matrix (1024 elements) produces an 8x8 output (64 elements). The size of the kernel affects the final result, and it is often better to keep the kernel small and chain multiple convolutional layers, possibly with some pooling layers in between (I'll speak about pooling later).
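The sliding-window idea can be sketched in a few lines of plain Python. This is an illustration only, assuming a square image, a square kernel and no padding; Keras uses optimized implementations, and the name convolve2d is made up for this example.

```python
def convolve2d(image, kernel, stride=1):
    """Slide the kernel over the image (no padding) and return the feature map."""
    k = len(kernel)
    out_size = (len(image) - k) // stride + 1
    output = []
    for row in range(out_size):
        out_row = []
        for col in range(out_size):
            # Multiply the kernel with the piece of image under it and sum up:
            # every kernel position produces one scalar
            total = 0
            for i in range(k):
                for j in range(k):
                    total += image[row * stride + i][col * stride + j] * kernel[i][j]
            out_row.append(total)
        output.append(out_row)
    return output


image = [[1] * 32 for _ in range(32)]  # 32x32 matrix, 1024 elements
kernel = [[1] * 4 for _ in range(4)]   # 4x4 kernel

overlapping = convolve2d(image, kernel, stride=1)
print(len(overlapping), len(overlapping[0]))  # 29 29
tiled = convolve2d(image, kernel, stride=4)
print(len(tiled), len(tiled[0]))              # 8 8
```

The output size depends on the stride: moving one pixel at a time barely shrinks the matrix, while moving by the kernel size reduces it sharply.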

The Convolution operation. The output matrix is called Convolved Feature or Feature Map. Source

The Pooling

The pooling layer is used to reduce the matrix size. There are many approaches, but the basic idea is this: take a set of adjacent values and keep only one. The most common algorithm is max pooling, where you simply take the biggest element of the set.
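Max pooling is simple enough to be written by hand. The sketch below assumes non-overlapping square windows and a matrix whose sides are multiples of the window size; max_pool is a hypothetical helper, not part of Keras or KerasUI.

```python
def max_pool(matrix, size=2):
    """Keep the biggest value of every non-overlapping size x size window."""
    rows = len(matrix) // size
    cols = len(matrix[0]) // size
    return [
        [
            max(matrix[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


feature_map = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 1],
    [3, 4, 5, 6],
]
print(max_pool(feature_map))  # [[6, 4], [7, 9]]
```

A 4x4 matrix becomes 2x2: three values out of four are discarded, but the strongest signal of each area survives.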

The Max pooling operation. Source, from O'Reilly media

The Fully Connected Layer

The final step is the neural network. Up to this last step, we have only done "deterministic" operations, just algebraic computation. In this step, we have the real artificial intelligence. Everything done before had the sole purpose of generating data that the network can understand.
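As a rough sketch of what this last step computes, here is one fully connected layer followed by a softmax, written in plain Python. The weights, biases and input values are arbitrary numbers chosen for the example; in a real network they are learnt during training.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: every output neuron sees every input value."""
    return [
        sum(x * w for x, w in zip(inputs, neuron_weights)) + bias
        for neuron_weights, bias in zip(weights, biases)
    ]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(values)
    exps = [math.exp(v - highest) for v in values]  # shifted for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


features = [0.5, 1.0, -1.0]                     # flattened output of the previous layers
weights = [[0.2, 0.8, -0.5], [-0.3, 0.1, 0.9]]  # one row of weights per label
biases = [0.0, 0.1]
scores = softmax(dense(features, weights, biases))
print(scores)  # two probabilities, the first one being the larger
```

With two labels, the two outputs can be read as "how likely is label 0" and "how likely is label 1".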

Keras UI: How It Works

The process for training a neural network is often the same. I can summarize it in:

- Define the dataset

- Build a model (network configuration, preprocess layers...)

- Train

- Check whether it works; if not, go back to the first step

It isn't a deterministic process: you may need to iterate many times before having something that works. In most cases, people build scripts and run them manually until they are satisfied. This approach works but has some limitations:

- You work locally, so you consume your own physical resources. You may be limited by your hardware, or you may be forced to keep the computer on all night until the training finishes.

- It is hard for a colleague to run their own tests, maybe because the whole dataset or the trained model is on your PC.

So, my idea was to create a web application that can manage this process, leaving you free to change the model definition as if you were on your local machine. With a solution like this, you can start the training, shut down the PC and go out for a walk, because all the load is on the server side. You can even use a cheap PC or a smartphone to train a neural network.

There is another big benefit in using a system like this. Although the training process is long, heavy and not deterministic, the final result is just a model with weights (a single file, using Keras). So why bother with a server if, once training is over, the pain is over too? The problem is that you would have to embed the model into your app. Maybe that is good enough for now, but what about the future? You may want to deliver a better model, or relieve the user's device of the prediction load.

That's why, when designing KerasUI, I included the possibility to expose the model via API. In this way, you can send the image and get back the result. All the computation happens on the server side, so you can change it as you want without any impact on the caller.

Usage

- Run standalone.bat or sh standalone.bat (this will install the requirements, apply the migrations and run the server; the same script works on UNIX and Windows)

- Create the admin user using python manage.py createsuperuser

- Open http://127.0.0.1:8000/

How to Manage Dataset

Keras UI allows uploading dataset items (images) into the web application. You can do it one by one, or add a zip file with many images in one shot. It manages multiple datasets, so you can keep things separate. After you have loaded the images, you can click the training button and run the training process. This will train the model you have defined without any interaction from you. You will get back the training result and, if you are finicky, you can go to the log file and see what the system printed.

How to Test Using Web UI

To keep testing painless, I provided a simple form where you can upload your image and get the result.

How to Use Django API UI or Postman to Test API

All you have seen until now in the web UI can be replicated using API.

API Usage

This application uses OAuth2 to authenticate requests, so the first step is to get a token. Django OAuth Toolkit (the oauth2_provider app) supports many flows; in this example, I used the password flow. Please remember that you have to create the OAuth2 application first (it is not created by default at first run).

POST to http://127.0.0.1:8000/o/token/

Headers:

Authorization: Basic czZCaGRSa3F0MzpnWDFmQmF0M2JW
Content-Type: application/x-www-form-urlencoded

Body:

grant_type:password
username:admin
password:admin2019!

Response:

{
    "access_token": "h6WeZwYwqahFDqGDRr6mcToyAm3Eae",
    "expires_in": 36000,
    "token_type": "Bearer",
    "scope": "read write",
    "refresh_token": "eg97atDWMfqC1lYKW81XCvltj0sism"
}

The prediction API accepts either a JSON post or a form post. In a JSON post, the image is sent as a base64 string. Offering both ways to consume the service is useful because it supports different scenarios: you can link it to a form, call it directly with tools like wget or curl, or use it from your application.

POST http://127.0.0.1:8000/api/test/

Headers:

Content-Type:application/json
Authorization:Bearer <token>

Body:

{
    "image":"<base 64 image>",
    "dataset":1
}

The response:

{
    "result": "<LABEL PREDICTED>"
}
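The two calls above can be sketched with the Python standard library alone. The URLs, header names and JSON fields are the ones shown in this section; the function names, the client id and secret placeholders, and the cat.jpg file are assumptions for illustration. The value after "Basic" is simply the base64 encoding of client_id:client_secret.

```python
import base64
import json
import urllib.parse
import urllib.request

BASE_URL = "http://127.0.0.1:8000"


def token_request(client_id, client_secret, username, password):
    """Build the OAuth2 password-flow request for /o/token/."""
    credentials = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    body = urllib.parse.urlencode(
        {"grant_type": "password", "username": username, "password": password}
    ).encode()
    return urllib.request.Request(
        BASE_URL + "/o/token/",
        data=body,
        headers={
            "Authorization": "Basic " + credentials,
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


def prediction_request(token, image_bytes, dataset_id):
    """Build the JSON request for /api/test/ with the image as a base64 string."""
    payload = {"image": base64.b64encode(image_bytes).decode(), "dataset": dataset_id}
    return urllib.request.Request(
        BASE_URL + "/api/test/",
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json", "Authorization": "Bearer " + token},
        method="POST",
    )


def send(request):
    """Send a request to a running server and decode the JSON answer."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode())


# Usage against a running KerasUI instance (placeholders must be replaced):
# token = send(token_request("<client id>", "<client secret>", "admin", "<password>"))
# with open("cat.jpg", "rb") as f:
#     print(send(prediction_request(token["access_token"], f.read(), 1)))
```

Keeping request building separate from sending makes it easy to inspect what would go over the wire without a server at hand.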

You can check the full postman file for more information or test it directly.

Tutorial

This chapter is a walkthrough of the technical part, to explain how the project is built and how it works. The project is built on top of Django, so it follows Django guidelines and there is nothing out of the ordinary.

What is Django

I'll cover the most important points, assuming you can follow along even if you don't know Django yet. For those new to Django, all you need to know is that it is mostly a RAD framework that lets you define the data model at a high level (i.e., you can say that there is an "email" or "image" field). The model is mapped directly to the database structure and automatically bound to the UI. Of course, you can configure almost everything by implementing classes or altering templates. Everything that is not related to data can be managed manually by creating custom routes/actions.

Well, I fear I have been too simplistic, and I hope I haven't made too many enemies among Django supporters. ;-)

Anyway, you can discover it here.

The Stack

The project stack:

- Python

- django framework

- keras, tensorflow,numpy

- SQLite (or another database you like)

Tools used:

- Visual Studio code

- Postman

- A web browser

Project Setup

As I mentioned, the project is based on Django, so the first thing to do is to create a Django project using CLI.

This requires Django to be installed on the system, which we can do with pip.

pip install Django

Now we have Django onboard, just create the project.

django-admin startproject kerasui

This command will produce the following structure:

root/
    manage.py
    kerasui/
        __init__.py
        settings.py
        urls.py
        wsgi.py

These files are:

- The outer kerasui/ root directory is just a container for your project. The inner kerasui/ directory is the actual Python package for your project. Its name is the Python package name you’ll need to use to import anything inside it (e.g. kerasui.urls).

- manage.py: A command-line utility that lets you interact with this Django project in various ways. You can read all the details about manage.py in Django-admin and manage.py.

- __init__.py: An empty file that tells Python that this directory should be considered a Python package. If you’re a Python beginner, read more about packages in the official Python docs.

- kerasui/settings.py: Settings/configuration for this Django project. Django settings will tell you all about how settings work.

- kerasui/urls.py: The URL declarations for this Django project; a “table of contents” of your Django-powered site. You can read more about URLs in URL dispatcher.

- kerasui/wsgi.py: An entry-point for WSGI-compatible web servers to serve your project. See how to deploy with WSGI for more details.

Run It

To check if all works, just run Django with the built-in server (in production, we will use WSGI interface to integrate with our favorite web server):

python manage.py runserver

You can also set up Visual Studio Code to run Django.

This is the launch configuration for Django:

{
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Python: Django",
            "type": "python",
            "request": "launch",
            "program": "${workspaceFolder}\\kerasui\\manage.py",
            "args": [
                "runserver",
                "--noreload",
                "--nothreading"
            ],
            "django": true
        }
    ]
}

Settings Configuration

Here is the basic part of the configuration, which tells Django:

- To use OAuth 2 and session authentication, so that a regular web user logs in and uses the web site and the REST sandbox, while an API user gets a token and queries the API services

- To use SQLite (you can change this to move to any other DB)

- To add all the Django modules (and our two custom ones: management UI and API)

- To enable CORS

import os

INSTALLED_APPS = [
    'python_field',
    'django.contrib.admin',
    'django.contrib.auth',
    'django.contrib.contenttypes',
    'django.contrib.sessions',
    'django.contrib.messages',
    'django.contrib.staticfiles',
    'oauth2_provider',
    'corsheaders',
    'rest_framework',
    'management',
    'api',
]

MIDDLEWARE = [
    'django.middleware.security.SecurityMiddleware',
    'django.contrib.sessions.middleware.SessionMiddleware',
    # CorsMiddleware goes above any middleware that can generate responses,
    # such as CommonMiddleware
    'corsheaders.middleware.CorsMiddleware',
    'django.middleware.common.CommonMiddleware',
    'django.contrib.auth.middleware.AuthenticationMiddleware',
    'django.contrib.messages.middleware.MessageMiddleware',
    'django.middleware.clickjacking.XFrameOptionsMiddleware',
]

ROOT_URLCONF = 'kerasui.urls'

REST_FRAMEWORK = {
    'DEFAULT_AUTHENTICATION_CLASSES': (
        'rest_framework.authentication.SessionAuthentication',
        'rest_framework.authentication.BasicAuthentication',
        'oauth2_provider.contrib.rest_framework.OAuth2Authentication',
    ),
    'DEFAULT_PERMISSION_CLASSES': (
        'rest_framework.permissions.IsAuthenticated',
    ),
    'DEFAULT_PAGINATION_CLASS': 'rest_framework.pagination.LimitOffsetPagination',
    'PAGE_SIZE': 10,
}

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.sqlite3',
        'NAME': os.path.join(BASE_DIR, 'db.sqlite3'),
    }
}

First Run

Django uses a migration system that produces migration files from the models you defined. To apply the migrations, you just need to run the migrate command (and makemigrations to create the migration files from the models).

The user database starts empty, so you need to create the admin user to log in. This is done with the createsuperuser command.

python manage.py migrate

python manage.py createsuperuser

How It's Built

The app is separated into 3 modules:

- Management part: the web UI, the models and all the core stuff

- Background worker: a Django command that can be executed in the background and is used to train models against the dataset

- API: this part exposes an API to interact with the application from outside. For example, it allows adding an item to a dataset from a third-party application. The most common usage, however, is to send an image and get the prediction result

Management

To create an app on Django:

python manage.py startapp management

This will create the main files for you. In this module, we mostly work with the models and their representation:

- models.py: Here are all the models with their field specifications. These class definitions are all that is needed to have working CRUD over the entities

- admin.py: This layer describes how to show and edit data with forms.

The Data Model

Our data model is very simple. Assuming that we want to train only one model per dataset (this may be a limit if you want to reuse a dataset with multiple models...), we have:

- DataSet: This contains the model, the model settings, and the name of the dataset.

- DataSetItem: This contains the dataset items, so one image per row with the label attached.

Here is just a sample of the models and their representation:

# admin.py
import threading
from django import forms
from django.conf import settings
from django.contrib import admin
from django.core.management import call_command
from .models import DataSet

class DataSetForm(forms.ModelForm):
    # The model definition is edited as free Python text in a large textarea
    process = forms.CharField(
        widget=forms.Textarea(attrs={'rows': 40, 'cols': 115}),
        initial=settings.PROCESS_TEMPLATE)
    model_labels = forms.CharField(initial="[]")

    class Meta:
        model = DataSet
        fields = ['name', 'process', 'epochs', 'batchSize', 'verbose', 'model_labels', 'model']

def train(modeladmin, request, queryset):
    # Admin action: start one background training per selected dataset
    for dataset in queryset:
        DataSetAdmin.train_async(dataset.id)

class DataSetAdmin(admin.ModelAdmin):
    list_display = ('name', 'epochs', 'batchSize', 'verbose', 'progress')
    form = DataSetForm
    actions = [train]
    change_list_template = "dataset_changelist.html"

    @staticmethod
    def train(datasetid):
        call_command('train', datasetid)

    @staticmethod
    def train_async(datasetid):
        t = threading.Thread(target=DataSetAdmin.train, args=(datasetid,))
        t.daemon = True  # replaces the deprecated t.setDaemon(True)
        t.start()

admin.site.register(DataSet, DataSetAdmin)

# models.py
from django.conf import settings
from django.core.validators import MaxValueValidator, MinValueValidator
from django.db import models

class DataSet(models.Model):
    name = models.CharField(max_length=200)
    process = models.CharField(max_length=5000, default=settings.PROCESS_TEMPLATE)
    # path_model_name is a helper defined elsewhere in the project
    model = models.ImageField(upload_to=path_model_name, max_length=300,
                              db_column='modelPath', blank=True, null=True)
    batchSize = models.IntegerField(validators=[MaxValueValidator(100),
                                                MinValueValidator(1)], default=10)
    epochs = models.IntegerField(validators=[MaxValueValidator(100),
                                             MinValueValidator(1)], default=10)
    verbose = models.BooleanField(default=True)
    progress = models.FloatField(default=0)
    model_labels = models.CharField(max_length=200)

    def __str__(self):
        return self.name

Django follows a code-first approach, so we need to run python manage.py makemigrations to generate the migration files that will be applied to the database.

python manage.py makemigrations

Background Worker

The background worker is the part of the application that runs on a separate thread and manages a long-running process, in this case the network training. Django supports "commands", which are designed to be called from the shell or from code. To create a command, you need little more than a file containing a Command class, placed in a special folder called "management/commands" inside an app.

To create the background worker, we need a module to host it, and I used the management module. Inside it, we need to create a management folder with a commands subfolder (sorry for the name, which is the same as the main module; I hope it is not confusing). Each file in it can be run via python manage.py <commandname> or from code via call_command.

In our case, we start the command on a background thread from a regular Django admin action.

This is the relevant part:

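class DataSetAdmin(admin.ModelAdmin):
    actions = [train]

    @staticmethod
    def train(datasetid):
        # Runs the "train" management command synchronously
        call_command('train', datasetid)

    @staticmethod
    def train_async(datasetid):
        # Runs the command on a background thread, so the request returns at once
        t = threading.Thread(target=DataSetAdmin.train, args=(datasetid,))
        t.daemon = True  # replaces the deprecated t.setDaemon(True)
        t.start()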
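The pattern used by train_async can be tried outside Django with the standard library only. In this sketch, the train function is a stand-in that sleeps instead of calling call_command, and the log list is a hypothetical way to observe the result; neither belongs to KerasUI.

```python
import threading
import time


def train(dataset_id, log):
    """Stand-in for call_command('train', dataset_id): pretend to work, then report."""
    time.sleep(0.1)
    log.append(f"dataset {dataset_id} trained")


def train_async(dataset_id, log):
    """Start the training on a daemon thread and return immediately."""
    worker = threading.Thread(target=train, args=(dataset_id, log), daemon=True)
    worker.start()
    return worker


log = []
worker = train_async(1, log)
# The caller is free to do something else here while the thread works
worker.join()  # only needed in this demo, to wait for the result
print(log)     # ['dataset 1 trained']
```

One consequence of the daemon flag is worth keeping in mind: a daemon thread dies with the process that started it, so restarting the web server interrupts a training in progress.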

API

The API is created in a separate app, to keep things isolated and clearer. This also helps to separate concerns and avoid drowning in a big pot of files.

python manage.py startapp api

Basically, all CRUD models can be exposed via the API; however, you need to specify how to serialize them:

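from rest_framework import serializers
# Assumption: Base64ImageField comes from the django-extra-fields package
from drf_extra_fields.fields import Base64ImageField
# Assumption: the models live in the management app
from management.models import DataSet, DataSetItem

class DataSetItemSerializer(serializers.HyperlinkedModelSerializer):
    image = Base64ImageField()
    dataset = serializers.PrimaryKeyRelatedField(many=False, read_only=True)

    class Meta:
        model = DataSetItem
        fields = ('label', 'image', 'dataset')

class DataSetSerializer(serializers.HyperlinkedModelSerializer):
    class Meta:
        model = DataSet
        fields = ('name', 'process')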

You also need to create the ViewSets (the mapping between the model and the data presentation):

from rest_framework import viewsets

class DataSetItemViewSet(viewsets.ModelViewSet):
    queryset = DataSetItem.objects.all()
    serializer_class = DataSetItemSerializer

class DataSetViewSet(viewsets.ModelViewSet):
    queryset = DataSet.objects.all()
    serializer_class = DataSetSerializer

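Finally, you need to define all the routes and map each viewset to a URL. This is enough to consume the models through the API.

# url() was removed in Django 4.0; on newer versions use re_path from django.urls
from django.conf.urls import url, include
from django.contrib.staticfiles.urls import staticfiles_urlpatterns
from rest_framework import routers
from api import views  # assumed location of the viewsets

router = routers.DefaultRouter()
router.register(r'users', views.UserViewSet)
router.register(r'datasetitem', views.DataSetItemViewSet)
router.register(r'dataset', views.DataSetViewSet)
router.register(r'test', views.TestItemViewSet, basename='test')

urlpatterns = [
    url(r'^', include(router.urls)),
    url(r'^api-auth/', include('rest_framework.urls', namespace='rest_framework')),
]

urlpatterns += staticfiles_urlpatterns()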

The Training

This is the core part of the application. While everything else is little more than a regular web application, this part is closer to artificial intelligence, and here the cool part starts.

The algorithm is very easy:

- Take all images from the dataset.

- Normalize them and add to a labeled list.

- Create the model as specified in the dataset record.

- Train it.

The part that has to be handled smartly is how to let users write their own custom model and train it. My idea was to let them write their own Python code, which is then used during the training process. This may not be the best choice in terms of security, because a user could write anything in it, including malicious code. That's true, but this is not a SaaS project, so we can assume that only trusted people have administrator access. As a consumer, instead, you only query the saved model, so that side of the system is safe.

This is the piece of code that queries dataset items and loads images:

import numpy as np
from PIL import Image
from keras.utils import to_categorical

def load_data(self, datasetid):
    self.stdout.write("loading images")
    train_data = []
    images = DataSetItem.objects.filter(dataset=datasetid)
    # Distinct labels of this dataset only, sorted so that the order is stable
    labels = [x['label'] for x in images.values('label').distinct().order_by('label')]
    for image in images:
        image_path = image.image.path
        if "DS_Store" not in image_path:
            # Position of the label in the list, turned into a one-hot vector
            index = labels.index(image.label)
            label = to_categorical([index], len(labels))
            img = Image.open(image_path)
            img = img.convert('L')  # grayscale, one channel
            # Image.LANCZOS replaces Image.ANTIALIAS, which was removed in Pillow 10
            img = img.resize((self.IMAGE_SIZE, self.IMAGE_SIZE), Image.LANCZOS)
            train_data.append([np.array(img), np.array(label[0])])
    return train_data

Take a look at:

labels = [x['label'] for x in images.values('label').distinct().order_by('label')]
label = to_categorical([index], len(labels))

This assigns an order to all the labels, i.e., ["CAT","DOG"]; then to_categorical converts the positional index to the one-hot representation. In simpler words, this makes CAT=[1,0] and DOG=[0,1].
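The one-hot convention is easy to reproduce without Keras. The function below is a hand-written illustration of the idea, not the to_categorical implementation.

```python
def one_hot(label, labels):
    """Return a vector with a 1 at the position of the label and 0 elsewhere."""
    vector = [0] * len(labels)
    vector[labels.index(label)] = 1
    return vector


labels = ["CAT", "DOG"]
print(one_hot("CAT", labels))  # [1, 0]
print(one_hot("DOG", labels))  # [0, 1]
```

The vector has one position per label, which is also why the last layer of the network needs exactly len(labels) outputs.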

To train the model:

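model = Sequential()
# Runs the Python snippet stored in the dataset record, which adds layers to "model"
exec(dataset.process)
# The output layer is added here so that its size always matches the number of labels
model.add(Dense(len(labels), activation='softmax'))
model.fit(training_images, training_labels,
          batch_size=dataset.batchSize, epochs=dataset.epochs, verbose=dataset.verbose)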

Note that dataset.process is the Python model definition you entered in the web admin, and you can tune it as much as you want. The last layer is added outside the user code to be sure that it matches the size of the label array.
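To show the mechanism in isolation, here is a sketch that runs without Keras. FakeSequential is a hypothetical stand-in that only records the layers it receives, and the layer tuples replace real Keras layer objects. Unlike the snippet above, this sketch passes an explicit namespace to exec, so the user code sees only the names we hand over.

```python
class FakeSequential:
    """Hypothetical stand-in for keras.models.Sequential: it only records layers."""

    def __init__(self):
        self.layers = []

    def add(self, layer):
        self.layers.append(layer)


def build_model(process_source, label_count):
    model = FakeSequential()
    # The user-provided code runs with "model" as its only predefined name
    exec(process_source, {"model": model})
    # The output layer is appended afterwards, sized on the number of labels
    model.add(("Dense", label_count, "softmax"))
    return model


# What a user might type in the "process" field (layer tuples are placeholders)
process = """
model.add(("Conv2D", 32, (3, 3)))
model.add(("MaxPooling2D", (2, 2)))
model.add(("Flatten",))
"""

model = build_model(process, label_count=2)
print(len(model.layers))  # 4
print(model.layers[-1])   # ('Dense', 2, 'softmax')
```

Passing a namespace keeps names tidy, but it is not a sandbox: the executed code can still import modules and do anything the server process can do, which is why access must stay limited to trusted administrators.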

The fit method trains on all the data passed to it. Keras sets part of the data aside for validation only when asked to, for example through the validation_split argument, and the call above does not do that. For now this is enough; in the future, we can plan to let the user choose the percentage of data to use in each part, or mark items one by one.

Finally, we store the trained model:

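datasetToSave = DataSet.objects.get(pk=datasetid)
datasetToSave.progress = 100
datasetToSave.model_labels = json.dumps(labels)
# Keras saves to a path, so write a temporary file and hand it to the Django file field
temp_file_name = str(uuid.uuid4()) + '.h5'
model.save(temp_file_name)
# The "with" block closes the file before it is removed
with open(temp_file_name, mode='rb') as model_file:
    datasetToSave.model.save('weights.h5', File(model_file))
os.remove(temp_file_name)
datasetToSave.save()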

Note that I also save the label order, because it must be the same one used at training time to match the one-hot convention.

The Prediction

There is a common method that, given the sample and the dataset, retrieves the model, loads it and makes the prediction. This is the piece of code:

def predict(image_path, datasetid):
    dataset = DataSet.objects.get(pk=datasetid)
    model = load_model(dataset.model.path)
    labels = json.loads(dataset.model_labels)
    img = Image.open(image_path)
    img = img.convert('L')
    # Image.LANCZOS replaces Image.ANTIALIAS, which was removed in Pillow 10
    img = img.resize((256, 256), Image.LANCZOS)
    # predict() returns one row of scores per input image; we sent a single image
    scores = model.predict(np.array(img).reshape(-1, 256, 256, 1))[0]
    # The index of the highest score is the position of the predicted label
    idx = int(np.argmax(scores))
    return labels[idx]

The model is loaded using load_model(modelpath) and the labels come from the database. The model outputs a list of values, one per label; the index of the highest value is chosen and used to retrieve the label assigned to that network output at training time.
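The last step, going from the network output back to a label, can be sketched without Keras. The nested list mimics the shape of a prediction for a single image (one row of scores per image); pick_label and the sample scores are made up for this example.

```python
import json


def pick_label(prediction, stored_labels):
    """Map the output for one image to the label saved at training time."""
    scores = prediction[0]  # first (and only) image of the batch
    best = max(range(len(scores)), key=scores.__getitem__)
    return json.loads(stored_labels)[best]


# Labels as stored in the model_labels field, in training order
stored = json.dumps(["CAT", "DOG"])
print(pick_label([[0.08, 0.92]], stored))  # DOG
print(pick_label([[0.75, 0.25]], stored))  # CAT
```

This only works if the label order used here is the one used for the one-hot vectors during training, which is why the order is saved together with the model.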

Points of Interest

About the Project and the Idea of Creating a UI for Keras

This project is just a toy. To become a real product, it would need a lot of work and, as a potential user, I would think twice before choosing an on-prem solution, considering how well cloud solutions work and how cheap they are for the quality of the service.

The project was an excuse to play again with neural networks and to learn and experiment with Keras and artificial intelligence.

About CNN, AI, and Image classification

Since I took my first steps in this field, in 2008, there have been relevant changes. My first feeling, as an amateur, is that the whole process is now "deterministic". By using standard technologies and good documentation, it is easier to make a network work. Experience is still important, and I don't want to compare AI with a regular database read/write operation, but the abundance of material, tutorials and guides allows a newbie to get something working.

The credit for this goes to the big players, as usual. They make AI accessible to developers by sharing their libraries, maybe just to let us know it's easier to consume all the stuff from them as a service. ;-)

History

- 13th May, 2019: Initial version