Introduction

The implementation of this filter is based on my BaseClasses.NET library, which is described in my previous post (Pure .NET DirectShow Filters in C#). This article shows how to implement an encoder filter with it. The implementation uses the NVIDIA CUDA Video Encoder API.

Implementation

The filter is implemented the same way as described in the previous article: it uses TransformFilter as a base class and overrides its methods. Encoding settings can be configured via the IH264Encoder interface:

[ComVisible(true)]

[System.Security.SuppressUnmanagedCodeSecurity]

[Guid("089C95D9-7DAE-4916-BC52-54B45D3925D2")]

[InterfaceType(ComInterfaceType.InterfaceIsIUnknown)]

public interface IH264Encoder

{

[PreserveSig]

int get_Bitrate([Out] out int plValue);

[PreserveSig]

int put_Bitrate([In] int lValue);

[PreserveSig]

int get_RateControl([Out] out rate_control pValue);

[PreserveSig]

int put_RateControl([In] rate_control value);

[PreserveSig]

int get_MbEncoding([Out] out mb_encoding pValue);

[PreserveSig]

int put_MbEncoding([In] mb_encoding value);

[PreserveSig]

int get_Deblocking([Out,MarshalAs(UnmanagedType.Bool)] out bool pValue);

[PreserveSig]

int put_Deblocking([In,MarshalAs(UnmanagedType.Bool)] bool value);

[PreserveSig]

int get_GOP([Out,MarshalAs(UnmanagedType.Bool)] out bool pValue);

[PreserveSig]

int put_GOP([In,MarshalAs(UnmanagedType.Bool)] bool value);

[PreserveSig]

int get_AutoBitrate([Out,MarshalAs(UnmanagedType.Bool)] out bool pValue);

[PreserveSig]

int put_AutoBitrate([In,MarshalAs(UnmanagedType.Bool)] bool value);

[PreserveSig]

int get_Profile([Out] out profile_idc pValue);

[PreserveSig]

int put_Profile([In] profile_idc value);

[PreserveSig]

int get_Level([Out] out level_idc pValue);

[PreserveSig]

int put_Level([In] level_idc value);

[PreserveSig]

int get_SliceIntervals([Out] out int piIDR,[Out] out int piP);

[PreserveSig]

int put_SliceIntervals([In] ref int piIDR,[In] ref int piP);

}

All encoder settings can be configured through that interface. For an example of how to use it, see the filter's property page implementation.

NVIDIA CUDA Encoder API

First I made the interop declarations for the CUDA Video Encoder API:

[DllImport("nvcuvenc.dll")]

public static extern int NVCreateEncoder(out IntPtr pNVEncoder);

[DllImport("nvcuvenc.dll")]

public static extern int NVDestroyEncoder(IntPtr hNVEncoder);

[DllImport("nvcuvenc.dll")]

public static extern int NVIsSupportedCodec(IntPtr hNVEncoder, uint dwCodecType);

[DllImport("nvcuvenc.dll")]

public static extern int NVIsSupportedCodecProfile(IntPtr hNVEncoder, uint dwCodecType, uint dwProfileType);

[DllImport("nvcuvenc.dll")]

public static extern int NVSetCodec(IntPtr hNVEncoder, uint dwCodecType);

[DllImport("nvcuvenc.dll")]

public static extern int NVGetCodec(IntPtr hNVEncoder, out uint pdwCodecType);

[DllImport("nvcuvenc.dll")]

public static extern int NVIsSupportedParam(IntPtr hNVEncoder, NVVE_EncodeParams dwParamType);

[DllImport("nvcuvenc.dll")]

public static extern int NVSetParamValue(IntPtr hNVEncoder, NVVE_EncodeParams dwParamType, IntPtr pData);

[DllImport("nvcuvenc.dll")]

public static extern int NVGetParamValue(IntPtr hNVEncoder, NVVE_EncodeParams dwParamType, IntPtr pData);

[DllImport("nvcuvenc.dll")]

public static extern int NVSetDefaultParam(IntPtr hNVEncoder);

[DllImport("nvcuvenc.dll")]

public static extern int NVCreateHWEncoder(IntPtr hNVEncoder);

[DllImport("nvcuvenc.dll")]

public static extern int NVGetSPSPPS(IntPtr hNVEncoder, IntPtr pSPSPPSbfr,

int nSizeSPSPPSbfr, out int pDatasize);

[DllImport("nvcuvenc.dll")]

public static extern int NVEncodeFrame(IntPtr hNVEncoder,

[MarshalAs(UnmanagedType.LPStruct)] NVVE_EncodeFrameParams pFrmIn, uint flag, IntPtr pData);

[DllImport("nvcuvenc.dll")]

public static extern int NVGetHWEncodeCaps();

[DllImport("nvcuvenc.dll")]

public static extern void NVRegisterCB(IntPtr hNVEncoder, NVVE_CallbackParams cb, IntPtr pUserdata);

The NVRegisterCB function registers the encoder callbacks passed in the NVVE_CallbackParams structure. For that we need to declare a delegate for each callback method.

public delegate IntPtr PFNACQUIREBITSTREAM(ref int pBufferSize, IntPtr pUserdata);

public delegate void PFNRELEASEBITSTREAM(int nBytesInBuffer, IntPtr cb, IntPtr pUserdata);

public delegate void PFNONBEGINFRAME([MarshalAs(UnmanagedType.LPStruct)] NVVE_BeginFrameInfo pbfi, IntPtr pUserdata);

public delegate void PFNONENDFRAME([MarshalAs(UnmanagedType.LPStruct)] NVVE_EndFrameInfo pefi, IntPtr pUserdata);

[StructLayout(LayoutKind.Sequential)]

public struct NVVE_CallbackParams

{

public IntPtr pfnacquirebitstream;

public IntPtr pfnreleasebitstream;

public IntPtr pfnonbeginframe;

public IntPtr pfnonendframe;

}

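In the filter we declare static methods matching those delegates and keep the delegate instances in class fields. This is the major point: the delegates must live at class scope, not in local variables, otherwise the garbage collector may collect them while the encoder still holds their function pointers.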

private CUDA.PFNACQUIREBITSTREAM m_fnAcquireBitstreamDelegate = new CUDA.PFNACQUIREBITSTREAM(AcquireBitstream);

private CUDA.PFNRELEASEBITSTREAM m_fnReleaseBitstreamDelegate = new CUDA.PFNRELEASEBITSTREAM(ReleaseBitstream);

private CUDA.PFNONBEGINFRAME m_fnOnBeginFrame = new CUDA.PFNONBEGINFRAME(OnBeginFrame);

private CUDA.PFNONENDFRAME m_fnOnEndFrame = new CUDA.PFNONENDFRAME(OnEndFrame);

CUDA.NVVE_CallbackParams _callback = new CUDA.NVVE_CallbackParams();

_callback.pfnacquirebitstream = Marshal.GetFunctionPointerForDelegate(m_fnAcquireBitstreamDelegate);

_callback.pfnonbeginframe = Marshal.GetFunctionPointerForDelegate(m_fnOnBeginFrame);

_callback.pfnonendframe = Marshal.GetFunctionPointerForDelegate(m_fnOnEndFrame);

_callback.pfnreleasebitstream = Marshal.GetFunctionPointerForDelegate(m_fnReleaseBitstreamDelegate);

CUDA.NVRegisterCB(m_hEncoder, _callback, Marshal.GetIUnknownForObject(this));
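
The lifetime rule for callback delegates can be illustrated in isolation. The following is only a minimal sketch, not the filter's code: FrameCallback, OnFrame and CallbackLifetimeSketch are hypothetical names, and the call through the pointer is simulated in managed code instead of coming from nvcuvenc.dll.

```csharp
using System;
using System.Runtime.InteropServices;

public static class CallbackLifetimeSketch
{
    // Hypothetical callback signature, standing in for the PFN* delegates.
    public delegate int FrameCallback(int frameNumber);

    // Stored in a static field: as long as this reference exists, the GC
    // cannot collect the delegate, so the function pointer stays valid.
    private static readonly FrameCallback s_callback = OnFrame;

    private static int OnFrame(int frameNumber)
    {
        return frameNumber + 1;
    }

    // The pointer that would be handed to unmanaged code.
    public static IntPtr GetCallbackPointer()
    {
        return Marshal.GetFunctionPointerForDelegate(s_callback);
    }

    // Simulates unmanaged code calling back through the pointer.
    public static int InvokeThroughPointer(IntPtr pfn, int frameNumber)
    {
        FrameCallback thunk = (FrameCallback)Marshal.GetDelegateForFunctionPointer(
            pfn, typeof(FrameCallback));
        return thunk(frameNumber);
    }
}
```

If the delegate were created in a local variable and only its pointer were stored, nothing would keep it reachable after the method returns, and a later callback from native code could crash the process.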

Configuring Encoder

The main methods for opening, configuring and closing the encoder:

protected HRESULT OpenEncoder()

protected HRESULT ConfigureEncoder()

protected HRESULT CloseEncoder()

I put the configuration parameters into a dedicated structure:

protected struct Config

{

public uint Profile;

public CUDA.NVVE_FIELD_MODE Fields;

public CUDA.NVVE_RateCtrlType RateControl;

public bool bCabac;

public int IDRPeriod;

public int PPeriod;

public long Bitrate;

public bool bGOP;

public bool bAutoBitrate;

public bool bDeblocking;

}

The encoder is configured through a single API, NVSetParamValue. It sets any parameter and accepts the value as an IntPtr argument. Here is an example of using it:

IntPtr _ptr = IntPtr.Zero;

int[] aiSize = new int[2];

aiSize[0] = _bmi.Width;

aiSize[1] = Math.Abs(_bmi.Height);

_ptr = Marshal.AllocCoTaskMem(Marshal.SizeOf(typeof(int)) * aiSize.Length);

Marshal.Copy(aiSize,0,_ptr,aiSize.Length);

hr = (HRESULT)CUDA.NVSetParamValue(m_hEncoder, CUDA.NVVE_EncodeParams.NVVE_OUT_SIZE, _ptr);

Marshal.FreeCoTaskMem(_ptr);
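
Since every parameter goes through the same allocate, copy, call and free sequence, it may be convenient to wrap it in a helper that frees the memory even when the call throws. This is a sketch only: ParamMarshalSketch and WithIntArray are hypothetical names that are not part of the filter, and the encoder call is passed in as a delegate so the helper does not depend on nvcuvenc.dll.

```csharp
using System;
using System.Runtime.InteropServices;

public static class ParamMarshalSketch
{
    // Copies 'values' into unmanaged memory, passes the pointer to 'call'
    // and always releases the memory afterwards. Returns the result of 'call'.
    public static int WithIntArray(int[] values, Func<IntPtr, int> call)
    {
        IntPtr ptr = Marshal.AllocCoTaskMem(sizeof(int) * values.Length);
        try
        {
            Marshal.Copy(values, 0, ptr, values.Length);
            return call(ptr);
        }
        finally
        {
            // Runs on both the normal and the exceptional path.
            Marshal.FreeCoTaskMem(ptr);
        }
    }
}
```

With such a helper the example above could be written as WithIntArray(new[] { _bmi.Width, Math.Abs(_bmi.Height) }, p => CUDA.NVSetParamValue(m_hEncoder, CUDA.NVVE_EncodeParams.NVVE_OUT_SIZE, p)).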

Receiving and delivering samples

First we override the OnReceive method. In this method we fill the NVVE_EncodeFrameParams structure and call the NVEncodeFrame API. Note: we do not call the base class OnReceive method.

public override int OnReceive(ref IMediaSampleImpl _sample)

{

{

AMMediaType pmt;

if (S_OK == _sample.GetMediaType(out pmt))

{

SetMediaType(PinDirection.Input,pmt);

Input.CurrentMediaType.Set(pmt);

pmt.Free();

}

}

CUDA.NVVE_EncodeFrameParams _params = new CUDA.NVVE_EncodeFrameParams();

_params.Height = m_nHeight;

_params.Width = m_nWidth;

_params.Pitch = m_nWidth;

if (m_SurfaceFormat == CUDA.NVVE_SurfaceFormat.YUY2 || m_SurfaceFormat == CUDA.NVVE_SurfaceFormat.UYVY)

{

_params.Pitch *= 2;

}

_params.PictureStruc = CUDA.NVVE_PicStruct.FRAME_PICTURE;

_params.SurfFmt = m_SurfaceFormat;

_params.progressiveFrame = 1;

_params.repeatFirstField = 0;

_params.topfieldfirst = 0;

_sample.GetPointer(out _params.picBuf);

_params.bLast = m_evFlush.WaitOne(0) ? 1 : 0;

HRESULT hr = (HRESULT)CUDA.NVEncodeFrame(m_hEncoder, _params, 0, m_dptrVideoFrame);

m_evReady.Reset();

return hr;

}

Samples are delivered inside the encoder callbacks. In AcquireBitstream we request a new sample from the output pin allocator and return its buffer pointer:

private static IntPtr AcquireBitstream(ref int pBufferSize, IntPtr pUserData)

{

H264EncoderFilter pEncoder = (H264EncoderFilter)Marshal.GetObjectForIUnknown(pUserData);

pEncoder.m_pActiveSample = IntPtr.Zero;

pBufferSize = 0;

HRESULT hr = (HRESULT)pEncoder.Output.GetDeliveryBuffer(out pEncoder.m_pActiveSample,null,null, AMGBF.None);

IntPtr pBuffer = IntPtr.Zero;

if (SUCCEEDED(hr))

{

IMediaSampleImpl pSample = new IMediaSampleImpl(pEncoder.m_pActiveSample);

{

AMMediaType pmt;

if (S_OK == pSample.GetMediaType(out pmt))

{

pEncoder.SetMediaType(PinDirection.Output, pmt);

pEncoder.Output.CurrentMediaType.Set(pmt);

pmt.Free();

}

}

pSample.SetSyncPoint(pEncoder.m_bSyncSample);

pBufferSize = pSample.GetSize();

pSample.GetPointer(out pBuffer);

}

return pBuffer;

}

In the OnBeginFrame callback routine we save the settings for the sample:

private static void OnBeginFrame(CUDA.NVVE_BeginFrameInfo pbfi, IntPtr pUserData)

{

H264EncoderFilter pEncoder = (H264EncoderFilter)Marshal.GetObjectForIUnknown(pUserData);

pEncoder.m_bSyncSample = (pbfi != null && pbfi.nPicType == CUDA.NVVE_PIC_TYPE_IFRAME);

}

The ReleaseBitstream method sets the sample times and delivers the sample:

private static void ReleaseBitstream(int nBytesInBuffer, IntPtr cb, IntPtr pUserData)

{

H264EncoderFilter pEncoder = (H264EncoderFilter)Marshal.GetObjectForIUnknown(pUserData);

IMediaSampleImpl pSample = new IMediaSampleImpl(pEncoder.m_pActiveSample);

pEncoder.m_pActiveSample = IntPtr.Zero;

if (pSample.IsValid)

{

long _start, _stop;

_start = pEncoder.m_rtPosition;

_stop = _start + pEncoder.m_rtFrameRate;

pEncoder.m_rtPosition = _stop;

pSample.SetTime(_start, _stop);

pSample.SetActualDataLength(nBytesInBuffer);

pEncoder.Output.Deliver(ref pSample);

pSample._Release();

}

}
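
The timestamp logic in ReleaseBitstream is a simple running counter. Judging by how it is used, m_rtFrameRate appears to hold the duration of one frame in 100-nanosecond DirectShow reference time units; that reading is an assumption. The sketch below isolates the arithmetic in a hypothetical FrameClock class that is not part of the filter.

```csharp
using System;

public sealed class FrameClock
{
    // DirectShow reference time is expressed in 100-nanosecond units.
    public const long UnitsPerSecond = 10000000;

    private readonly long _frameDuration;
    private long _position;

    public FrameClock(long frameDuration)
    {
        _frameDuration = frameDuration;
    }

    // Builds a clock from a frame rate given as a fraction, e.g. 30000/1001.
    public static FrameClock FromFrameRate(int numerator, int denominator)
    {
        return new FrameClock(UnitsPerSecond * denominator / numerator);
    }

    public long FrameDuration
    {
        get { return _frameDuration; }
    }

    // Returns the times for the next sample and advances the position,
    // mirroring the _start/_stop computation in ReleaseBitstream.
    public void Next(out long start, out long stop)
    {
        start = _position;
        stop = start + _frameDuration;
        _position = stop;
    }
}
```

For a 25 FPS stream the frame duration is 400000 units, so successive samples get the times 0 to 400000, 400000 to 800000 and so on, in the order the encoder emits them.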

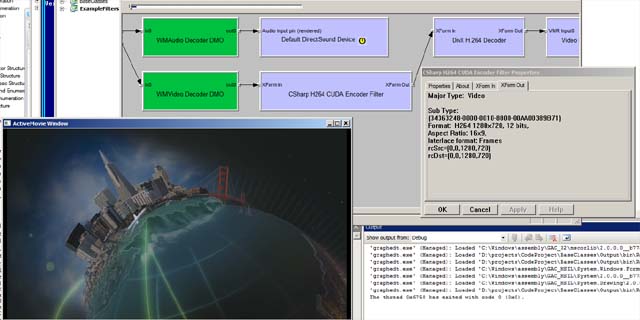

Filter Overview

Property page with available settings to configure.

The filter supports the following media types as input: NV12, YV12, UYVY, YUY2, YUYV and IYUV, with the VideoInfo and VideoInfo2 formats. For planar types the picture width should be 16-aligned; otherwise the type is rejected, because the filter does not provide its own allocator.
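
The width rule can be expressed as a small check. This is a sketch under assumptions, not the filter's actual media type validation: AlignmentSketch and IsWidthAccepted are hypothetical names, and the sketch assumes that packed formats have no width restriction, which the text above does not state either way.

```csharp
using System;

public static class AlignmentSketch
{
    // Planar types (NV12, YV12, IYUV) need a width that is a multiple of 16.
    // Packed types are accepted as-is here; that part is an assumption.
    public static bool IsWidthAccepted(int width, bool isPlanar)
    {
        if (width <= 0)
        {
            return false;
        }
        return !isPlanar || (width % 16) == 0;
    }
}
```

For example, a 1280-pixel-wide NV12 frame passes the check, while a 1366-pixel-wide one does not.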

The output types of the filter are H264 with the VideoInfo and VideoInfo2 formats, and AVC1 with the Mpeg2Video format and a NALU length size of 4. I made the filter connectable to the Avi Mux filter along with common mp4, mts, flv and mkv multiplexers. I set the timestamps in media samples according to DTS, not PTS; that is not an issue for good decoders, and it allows embedding the video into AVI even if a closed GOP is used with a large IDR period.
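
The difference between the H264 and AVC1 output types lies in how NAL units are framed: H264 streams separate them with start codes (00 00 01 or 00 00 00 01), while AVC1 prefixes each one with its length, here 4 bytes in big-endian order. How the filter performs this conversion is not shown in this article; the following is an independent sketch of the general technique, with NaluSketch and its methods being hypothetical names.

```csharp
using System;
using System.Collections.Generic;

public static class NaluSketch
{
    // Converts start-code-delimited NAL units into NAL units that are
    // prefixed with a 4-byte big-endian length.
    public static byte[] AnnexBToLengthPrefixed(byte[] data)
    {
        List<byte> output = new List<byte>();
        int codeLength;
        int pos = FindStartCode(data, 0, out codeLength);
        while (pos >= 0)
        {
            int nalStart = pos + codeLength;
            int nextLength;
            int next = FindStartCode(data, nalStart, out nextLength);
            int nalEnd = next >= 0 ? next : data.Length;
            int length = nalEnd - nalStart;

            // 4-byte big-endian length prefix.
            output.Add((byte)(length >> 24));
            output.Add((byte)(length >> 16));
            output.Add((byte)(length >> 8));
            output.Add((byte)length);
            for (int k = nalStart; k < nalEnd; k++)
            {
                output.Add(data[k]);
            }

            pos = next;
            codeLength = nextLength;
        }
        return output.ToArray();
    }

    // Returns the index of the next start code at or after 'from',
    // or -1 if there is none; 'length' receives 3 or 4.
    private static int FindStartCode(byte[] data, int from, out int length)
    {
        for (int i = from; i + 2 < data.Length; i++)
        {
            if (data[i] != 0 || data[i + 1] != 0) continue;
            if (data[i + 2] == 1) { length = 3; return i; }
            if (data[i + 2] == 0 && i + 3 < data.Length && data[i + 3] == 1)
            {
                length = 4;
                return i;
            }
        }
        length = 0;
        return -1;
    }
}
```

The sketch copies the payload bytes unchanged and does not handle streams that contain no start code at all, in which case it returns an empty array.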

I get good performance with this filter: it works fine with 4% CPU usage on 30 FPS playback of 1280x720 video. That figure is for a graph that performs VC-1 decoding, H264 encoding, decoding and preview.

History

- 15-07-2012 - Initial version.