Introduction

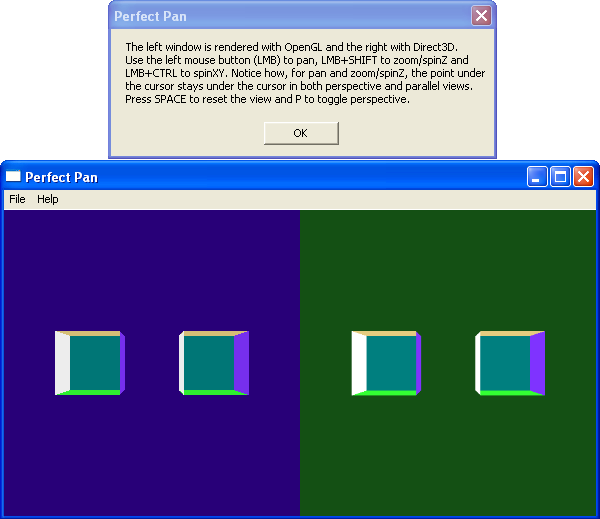

Most readers will be familiar with ‘grab’ panning of 2D images, as in Adobe Acrobat®, where the mouse grabs the image and drags it around so that the point under the cursor stays under the cursor. In 3D, a number of programs provide this intuitive interaction for parallel (orthographic) views, but I don’t know of any that implement it for perspective views. Some 3D programs do move objects around on a construction-type plane so that the point on the plane stays under the mouse. The panning technique shown here is for more general use.

General Viewing Parameters

The demo program is derived from the one in A New Perspective on Viewing, which proposes a set of general viewing parameters. All interactive motion algorithms need viewing parameters to transform screen-based mouse motion into virtual space motion. From the previous article, the seven general viewing parameters that specify the view volume in view space are:

struct TViewVolume { float hw, hh, zn, zf, iez, tsx, tsy; };

A view-to-world rotate/translate transformation, which positions the view volume in world space, completes the view specification.

The Panning Algorithm

Parallel view panning is relatively simple: it only needs to scale the mouse pixel motion to virtual space. In perspective views, items closer to the eye-point pan faster across the screen than items further away, so keeping the picked point under the cursor requires the physical pixel motion to be mapped onto the virtual plane that passes through the picked point.

The z-buffer value read at the cursor position is the picked point's screen space z-coordinate. It is provided as a value between 0.0 (front) and 1.0 (back). A reverse transformation maps it from screen (depth buffer) space to view space. The demo's source code shows how to read the depth buffer value in both OpenGL and Direct3D. The derivation of the reverse transformation formulae is given as a comment in the OnPicked() method in Main.cpp.

float m33 = -(1-vv.zf*vv.iez) / (vv.zn-vv.zf);

ViewZ = (ScreenZ+m33*vv.zn) / (ScreenZ*vv.iez+m33);

MotionZ = ViewZ;
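As a rough illustration, the reverse transformation can be wrapped in a small function and checked against the front and back planes. The helper names ScreenZToViewZ and ViewZToScreenZ are hypothetical and not part of the demo. The forward mapping is simply the formula above solved for ScreenZ, included only as a round-trip check.

```cpp
#include <cassert>

// The seven general viewing parameters, as declared in the article.
struct TViewVolume { float hw, hh, zn, zf, iez, tsx, tsy; };

// Maps a depth buffer value (0.0 = front plane, 1.0 = back plane) to a
// view space z-coordinate using the article's formula.
// Hypothetical helper name, not taken from the demo source.
inline float ScreenZToViewZ(const TViewVolume& vv, float ScreenZ)
{
    assert(vv.zn != vv.zf);  // front and back planes must differ
    const float m33 = -(1.0f - vv.zf * vv.iez) / (vv.zn - vv.zf);
    return (ScreenZ + m33 * vv.zn) / (ScreenZ * vv.iez + m33);
}

// The same relation solved for ScreenZ (an assumption derived from the
// formula above, used here only to verify the round trip).
inline float ViewZToScreenZ(const TViewVolume& vv, float ViewZ)
{
    assert(vv.zn != vv.zf);
    const float m33 = -(1.0f - vv.zf * vv.iez) / (vv.zn - vv.zf);
    return m33 * (ViewZ - vv.zn) / (1.0f - ViewZ * vv.iez);
}
```

With iez = 0 (a parallel view) the mapping reduces to a linear interpolation between zn and zf, so the same function serves both kinds of view.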

A pixel-to-view-rectangle scale factor is calculated and used to map the physical mouse 2D point to a virtual 2D point in the view space z=0 plane. The 2D point is then projected onto the z=MotionZ plane.

RECT rect;

GetClientRect( hWnd, &rect );

float PixelToViewRectFactor = vv.hw * 2.0f / rect.right;

ViewX = ScreenX * PixelToViewRectFactor - vv.hw;

ViewY = -(ScreenY * PixelToViewRectFactor - vv.hh);

ViewX += -ViewX*MotionZ*vv.iez + vv.tsx*MotionZ;

ViewY += -ViewY*MotionZ*vv.iez + vv.tsy*MotionZ;

ViewZ = MotionZ;
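The mapping above can be collected into one function, which makes it easier to call for both the move-from and move-to mouse positions. This is only a sketch: the ViewPoint type and the ScreenToViewOnPlane name are hypothetical, and the client width is passed in directly rather than read with GetClientRect. Each axis is projected using its own coordinate, which is what projecting along the line of sight requires.

```cpp
#include <cassert>

// The seven general viewing parameters, as declared in the article.
struct TViewVolume { float hw, hh, zn, zf, iez, tsx, tsy; };

// Hypothetical plain 3D point type used only for this sketch.
struct ViewPoint { float x, y, z; };

// Maps a mouse position in pixels to a view space point on the
// z = MotionZ plane. ClientWidth is the window client width in pixels.
inline ViewPoint ScreenToViewOnPlane(const TViewVolume& vv,
                                     float ScreenX, float ScreenY,
                                     int ClientWidth, float MotionZ)
{
    assert(ClientWidth > 0);
    const float PixelToViewRectFactor = vv.hw * 2.0f / ClientWidth;

    // Pixel position to a point on the view space z = 0 plane
    // (screen y grows downwards, view y grows upwards).
    float ViewX = ScreenX * PixelToViewRectFactor - vv.hw;
    float ViewY = -(ScreenY * PixelToViewRectFactor - vv.hh);

    // Project the z = 0 point onto the z = MotionZ plane.
    ViewX += -ViewX * MotionZ * vv.iez + vv.tsx * MotionZ;
    ViewY += -ViewY * MotionZ * vv.iez + vv.tsy * MotionZ;
    return { ViewX, ViewY, MotionZ };
}
```

For example, with hw = 2, iez = 0.1 and MotionZ = 5, a pixel at the right edge of the window maps to x = 1 rather than x = 2, because the plane is halfway to the eye-point and the view cross section there is half as wide.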

rect.right is the window’s client area width in pixels. vv.hw is the HalfWidth general viewing parameter that specifies half the width of the rectangular cross section of the viewing volume at the z=0 view space plane. vv.hh is similarly the HalfHeight general viewing parameter.

Notice how the calculations handle both parallel (iez=0) and perspective views. These formulae are examples of how the general viewing parameters often don’t require code to differentiate between parallel and perspective views. See A New Perspective on Viewing for information on the general viewing parameters.

For each successive mouse move, the move-from and move-to 3D view space points are calculated, then used to update the ViewToWorld translation.

ViewToWorld.trn -= (MovedTo - MovedFrom) * ViewToWorld.rot;

ViewToWorld.trn is the translation portion of the view-to-world transformation, and is a point in world space. Likewise, ViewToWorld.rot is the 3x3 rotation portion. The view space delta is calculated by subtracting the two points, then transformed to world space by multiplying by the ViewToWorld rotation. Finally, the world space delta is subtracted from the ViewToWorld translation, so the view moves opposite to the drag and the picked point stays under the cursor. Overloaded operators implement the vector subtraction and the vector-times-matrix operation in the line above.
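For readers without SpatialMath.h to hand, the update can be sketched with minimal stand-in types. Vec3, Mat3, Xform, Identity and PanView are hypothetical names, and the row-vector-times-matrix convention is an assumption based on the operand order in the line above. The real SpatialMath types may differ.

```cpp
// Hypothetical stand-ins for the SpatialMath types used by the demo.
struct Vec3 { float x, y, z; };
struct Mat3 { float m[3][3]; };          // row-major 3x3 rotation
struct Xform { Mat3 rot; Vec3 trn; };    // view-to-world transformation

inline Mat3 Identity()
{
    Mat3 r = {};
    r.m[0][0] = r.m[1][1] = r.m[2][2] = 1.0f;
    return r;
}

inline Vec3 operator-(const Vec3& a, const Vec3& b)
{
    return { a.x - b.x, a.y - b.y, a.z - b.z };
}

// Row vector times matrix (assumed convention).
inline Vec3 operator*(const Vec3& v, const Mat3& r)
{
    return { v.x * r.m[0][0] + v.y * r.m[1][0] + v.z * r.m[2][0],
             v.x * r.m[0][1] + v.y * r.m[1][1] + v.z * r.m[2][1],
             v.x * r.m[0][2] + v.y * r.m[1][2] + v.z * r.m[2][2] };
}

inline Vec3& operator-=(Vec3& a, const Vec3& b)
{
    a.x -= b.x; a.y -= b.y; a.z -= b.z;
    return a;
}

// Moves the view opposite to the view space drag so the picked point
// stays under the cursor.
inline void PanView(Xform& ViewToWorld, const Vec3& MovedFrom, const Vec3& MovedTo)
{
    ViewToWorld.trn -= (MovedTo - MovedFrom) * ViewToWorld.rot;
}
```

MovedFrom and MovedTo would both be view space points on the same z=MotionZ plane, so the delta has no z component and the pan never changes the distance to the picked point.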

The code that reads the z-buffer value is a little convoluted: the demo program redraws the screen, reads the depth buffer after the redraw, and passes the value back through a callback. This technique is useful for quick-response programs that clear the back buffer and z-buffer straight after a redraw to minimize the time between reading the latest input values and showing the updated image.

Each mouse move event is converted into a delta mouse movement to allow other motion sources, such as animated movements, to work seamlessly with the mouse. The panning algorithm will permit other motion sources to move the point under the cursor away from the cursor, but the interaction still makes intuitive sense to the user.

Zoom

The zoom/spinZ interaction also keeps the picked point under the cursor. This type of interaction is similar to the 2D multi-touch screen interaction using two fingers where one finger is fixed at the center of the window. The view space origin's z-coordinate needs to be moved to match the picked point’s z-coordinate, which requires the view size to be adjusted. All the other calculations are similar to those for panning.

if (ISPERSPECTIVE(vv.iez) && ISPRESSED(GetKeyState( VK_SHIFT )))

{

float ez = 1/vv.iez;

HalfViewSize *= (ez-ViewZ)/ez;

AVec3f Delta = CVec3f(0,0,ViewZ);

Delta = Delta * ViewToWorld.rot;

ViewToWorld.trn += Delta;

ViewZ = 0;

InvalidateRect( hWnd, NULL, FALSE );

}

The SpatialMath vector and matrix types and overloaded operators are used to simplify the code. See SpatialMath.h for more information.
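The view size adjustment follows from similar triangles: the cross section of a perspective view volume shrinks in proportion to the distance from the eye-point. A minimal sketch of just that scaling step, with the hypothetical helper name RescaleHalfViewSize, is:

```cpp
#include <cassert>

// Returns the half view size measured at the plane z = ViewZ, given the
// half view size at the z = 0 plane. Perspective views only (iez != 0).
// Hypothetical helper name, not taken from the demo source.
inline float RescaleHalfViewSize(float HalfViewSize, float iez, float ViewZ)
{
    assert(iez != 0.0f);            // a parallel view has no eye-point
    const float ez = 1.0f / iez;    // eye-point z in view space
    return HalfViewSize * (ez - ViewZ) / ez;
}
```

For example, with the eye-point at z = 10 and the picked point at z = 5, a half view size of 2 becomes 1, since the picked point's plane is halfway between the origin and the eye-point.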

Most users prefer zoom as a separate action from spin. For instance, a vertical mouse motion can be used to zoom the view in and out or to scale an object up and down. The use of a view space z-coordinate is just as applicable to this type of zoom.

Conclusion

With the perfect panning and zooming motion algorithms appearing relatively simple, it is surprising that these algorithms aren’t already in widespread use. The simplicity derives from the use of the general viewing parameters, and it can prove difficult to implement these algorithms with other sets of viewing parameters.

Perfect panning in perspective views is an intuitive and subconscious way of adjusting the rate of virtual motion so the movement of the 2D image matches the movement of the mouse. Users will love the direct control provided by perfect panning.

History

- October 12, 2009: Initial post.