Singleton Pattern: a widely used pattern for situations where only one instance of a class should ever be created, with a single, global point of access to it.

Let's demonstrate the pattern using a simple example. I used to wonder how load balancer servers work, so I decided to write an application that demonstrates both things in one go.

When a client initiates a request, the load balancer checks the load on each server and redirects the request to the server with the least load. We will implement the same thing in a C# sample.
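The routing rule described above (send the request to the server with the fewest in-flight requests) can be sketched for any number of servers. This is a minimal illustration and not part of the sample: the `LeastLoaded` class and its `Pick` method are hypothetical names, and ties go to the lowest index, mirroring the `<=` comparison the sample's ServeServer() method uses for its two servers.

```csharp
using System;

// Hypothetical helper (not part of the sample): returns the index of the
// least-loaded server, given the number of active requests on each server.
public static class LeastLoaded
{
    public static int Pick(int[] activeRequests)
    {
        if (activeRequests == null || activeRequests.Length == 0)
            throw new ArgumentException("At least one server is required.", nameof(activeRequests));

        int best = 0;
        for (int i = 1; i < activeRequests.Length; i++)
        {
            // Strictly-less comparison keeps the earlier server on ties.
            if (activeRequests[i] < activeRequests[best])
                best = i;
        }
        return best;
    }
}
```

For example, `LeastLoaded.Pick(new[] { 5, 4 })` returns 1 (the second server), which is the decision described later for the screenshot with 5 and 4 requests in process.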

Step 1: Create a web application, so that we can verify the behavior from multiple instances of the application (for example, several browser windows).

Step 2: Create classes representing the servers. I created two servers, "Server1" and "Server2".

Each server keeps a count of the requests it is currently serving and has a method that takes a while to execute.

```csharp
using System;
using System.Threading;

namespace SingletonSample
{
    public interface IServers
    {
        void ServeRequest();
        void EndRequest(IAsyncResult ar);
    }

    public class Server1 : IServers
    {
        delegate void LoadWaitDelegate();

        // Requests Server1 is currently serving (shared by all Server1 objects).
        public static int ServiceCount = 0;

        public void ServeRequest()
        {
            // Interlocked keeps the count correct when requests run on several threads.
            Interlocked.Increment(ref ServiceCount);
            LoadWaitDelegate delWaitMethod = new LoadWaitDelegate(DelayMethod);
            // Run DelayMethod on a thread-pool thread; EndRequest is called when it finishes.
            delWaitMethod.BeginInvoke(EndRequest, delWaitMethod);
        }

        public void EndRequest(IAsyncResult ar)
        {
            LoadWaitDelegate delWaitMethod = (LoadWaitDelegate)ar.AsyncState;
            delWaitMethod.EndInvoke(ar);
            Interlocked.Decrement(ref ServiceCount);
        }

        private void DelayMethod()
        {
            // Simulate a request that takes 4 seconds to process.
            Thread.Sleep(4000);
        }
    }

    public class Server2 : IServers
    {
        delegate void LoadWaitDelegate();

        // Requests Server2 is currently serving (shared by all Server2 objects).
        public static int ServiceCount = 0;

        public void ServeRequest()
        {
            Interlocked.Increment(ref ServiceCount);
            LoadWaitDelegate delWaitMethod = new LoadWaitDelegate(DelayMethod);
            delWaitMethod.BeginInvoke(EndRequest, delWaitMethod);
        }

        public void EndRequest(IAsyncResult ar)
        {
            LoadWaitDelegate delWaitMethod = (LoadWaitDelegate)ar.AsyncState;
            delWaitMethod.EndInvoke(ar);
            Interlocked.Decrement(ref ServiceCount);
        }

        private void DelayMethod()
        {
            // Simulate a request that takes 3 seconds to process.
            Thread.Sleep(3000);
        }
    }
}
```

As you can see, DelayMethod() takes 4000 ms (Server1) or 3000 ms (Server2) to execute, so it is invoked asynchronously. Once it has completed, the EndRequest() method is called, which decrements the count of requests the server is currently serving.
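Delegate.BeginInvoke/EndInvoke, used above, is supported only on .NET Framework (which ASP.NET Web Forms targets); on .NET Core and .NET 5+ it throws PlatformNotSupportedException. If you port the sample, a task-based version of the same idea might look like the sketch below. It is an assumption-laden illustration, not the author's code: `TaskServer` is a hypothetical class, the delay is a constructor parameter instead of two separate classes, and the count is per object (so the same object must be reused), whereas the original uses a static field.

```csharp
using System.Threading;
using System.Threading.Tasks;

// Hypothetical task-based server (not from the original sample).
public class TaskServer
{
    private readonly int _delayMs;
    private int _serviceCount;   // requests currently in progress on this object

    public TaskServer(int delayMs)
    {
        _delayMs = delayMs;
    }

    public int ServiceCount => Volatile.Read(ref _serviceCount);

    // Starts serving a request; the returned task completes when the request is done.
    public Task ServeRequestAsync()
    {
        // Counted synchronously, before the method returns to the caller.
        Interlocked.Increment(ref _serviceCount);
        return ServeCoreAsync();
    }

    private async Task ServeCoreAsync()
    {
        try
        {
            // Simulated work, like DelayMethod() in the sample.
            await Task.Delay(_delayMs).ConfigureAwait(false);
        }
        finally
        {
            // Equivalent of EndRequest(): one fewer request in progress.
            Interlocked.Decrement(ref _serviceCount);
        }
    }
}
```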

Step 3: Now we need to create the load balancer class. But before creating it, we need to consider one point:

"We need to have only one instance of Load Balancer class."

This is where the Singleton pattern comes into the picture. The code below shows how I implemented it.

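```csharp
namespace SingletonSample
{
    public class LoadBalancer
    {
        // The single instance, created on first access.
        private static LoadBalancer _ldBal;

        // Counts constructor calls, so we can check that it stays at 1.
        public static int LoadbalancerInstances = 0;

        // Private constructor: no other class can call "new LoadBalancer()".
        private LoadBalancer()
        {
            LoadbalancerInstances++;
        }

        // The only access point: creates the instance on first use, then reuses it.
        public static LoadBalancer LdBal
        {
            get
            {
                if (_ldBal == null)
                {
                    _ldBal = new LoadBalancer();
                }
                return _ldBal;
            }
        }

        // Returns a server whose load is less than or equal to the other's.
        public IServers ServeServer()
        {
            return Server1.ServiceCount <= Server2.ServiceCount
                ? (IServers)new Server1()
                : (IServers)new Server2();
        }
    }
}
```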

Some key points:

- The default constructor of the class must be private, so that no other class can create an instance with new.

- The class must be instantiated only once, through a static property or method (here, the LdBal property).

- Specific to our load balancer, the ServeServer() method returns the server whose current load is less than or equal to the other's; on a tie, Server1 is chosen.
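Stripped of the load-balancing logic, these key points reduce to the skeleton below. It is a generic sketch: the `Singleton` class name, the `Instance` property and the `CreatedCount` counter are illustrative, not from the sample, and `sealed` is an extra common precaution. Like the first version of LoadBalancer, it is not yet thread-safe.

```csharp
// Illustrative skeleton of the key points (not thread-safe yet).
public sealed class Singleton
{
    private static Singleton _instance;

    // Counts constructor calls so the "only once" rule can be checked.
    public static int CreatedCount { get; private set; }

    // Private constructor: code outside the class cannot write "new Singleton()".
    private Singleton()
    {
        CreatedCount++;
    }

    // The only way to obtain the object: created on first access, reused afterwards.
    public static Singleton Instance
    {
        get
        {
            if (_instance == null)
            {
                _instance = new Singleton();
            }
            return _instance;
        }
    }
}
```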

Step 4: Now create a web form with a list box that displays all the messages from the server.

```html
<%@ Page Language="C#" AutoEventWireup="true" CodeBehind="DefaultPage.aspx.cs" Inherits="SingletonSample.DefaultPage" %>

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/1999/xhtml">
<head runat="server">
    <title>Test Load Balancer</title>
</head>
<body>
    <form id="form1" runat="server">
        <div style="height:80%;width:80%">
            <table style="height:100%;width:100%">
                <tr>
                    <td>
                        <label>Messages from Load Balancer :</label>
                    </td>
                </tr>
                <tr>
                    <td>
                        <asp:ListBox ID="lbMessages" runat="server" Height="130px" Width="300px"
                            Font-Bold="True" Font-Names="Cambria" Font-Size="Smaller"></asp:ListBox>
                    </td>
                </tr>
                <tr>
                    <td>
                        <label>Time Stamp :</label><asp:Label ID="lblTimestamp" runat="server"
                            Font-Names="Calibri" Font-Size="Small"></asp:Label>
                    </td>
                </tr>
            </table>
        </div>
    </form>
</body>
</html>
```

Step 5: In the code-behind, all we do is get the LoadBalancer instance and submit the request. We log the relevant messages to the list box to see how the flow and the logic work.

```csharp
using System;
using System.Web.UI.WebControls;

namespace SingletonSample
{
    public partial class DefaultPage : System.Web.UI.Page
    {
        LoadBalancer _loadBalancer;
        IServers _server;
        string _serverName;

        protected void Page_Load(object sender, EventArgs e)
        {
            // Get the single LoadBalancer instance (created on the first request only).
            _loadBalancer = LoadBalancer.LdBal;
            AddMessage("No of Loadbalancer instances :" + LoadBalancer.LoadbalancerInstances.ToString());
            AddMessage("Current count Server1 : " + Server1.ServiceCount.ToString() +
                       " Requests; Server2 : " + Server2.ServiceCount.ToString() + " Requests.");

            // Let the load balancer pick the less loaded server.
            _server = _loadBalancer.ServeServer();
            // Class name without the namespace, e.g. "Server1".
            _serverName = _server.GetType().Name;
            AddMessage("Server allocated by Load Balancer :" + _serverName);
            ShowCountMessage(_serverName);

            AddMessage(_serverName + " serving current request");
            _server.ServeRequest();   // returns immediately; the work continues asynchronously
            ShowCountMessage(_serverName);

            lblTimestamp.Text = DateTime.Now.TimeOfDay.ToString();
        }

        // Logs how many requests the given server is currently handling.
        private void ShowCountMessage(string strServerName)
        {
            AddMessage("No of Requests with " + strServerName + " now :" +
                (strServerName == "Server1" ? Server1.ServiceCount : Server2.ServiceCount).ToString());
        }

        // Appends one line to the list box on the page.
        private void AddMessage(string strMsg)
        {
            lbMessages.Items.Add(new ListItem(strMsg));
        }
    }
}
```

Step 6: Now run the application and look at the output. Before looking at it, let's recap what we wrote.

Each page request sends a request to one of the server objects, and that server serves it. Serving a request takes 4000 ms (Server1) or 3000 ms (Server2). Meanwhile, when a second request comes in, the load balancer compares the loads and sends the request to the less loaded server.
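To see the routing decision in isolation, the sketch below replays a burst of requests that arrive faster than any of them finishes, applying the same `<=` comparison as ServeServer(). It is a simplification under stated assumptions: it uses plain counters instead of the real Server1/Server2 classes (`BurstSimulation` is a hypothetical name), and no request completes during the burst.

```csharp
using System.Collections.Generic;

// Hypothetical simulation: no request finishes during the burst,
// so each allocation simply increments the chosen server's counter.
public static class BurstSimulation
{
    public static List<string> Allocate(int requests, int server1Count, int server2Count)
    {
        var allocations = new List<string>();
        for (int i = 0; i < requests; i++)
        {
            // Same rule as LoadBalancer.ServeServer(): ties go to Server1.
            if (server1Count <= server2Count)
            {
                server1Count++;
                allocations.Add("Server1");
            }
            else
            {
                server2Count++;
                allocations.Add("Server2");
            }
        }
        return allocations;
    }
}
```

Starting from zero, `Allocate(4, 0, 0)` alternates Server1, Server2, Server1, Server2, and `Allocate(1, 5, 4)` picks Server2. In the real application requests also finish (Server2 faster than Server1), so the observed sequence can differ.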

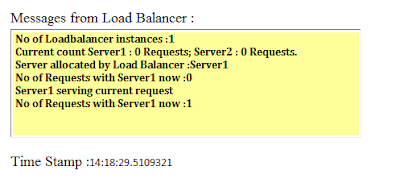

I took the screenshots while refreshing the page continuously, thus submitting requests rapidly. Observe the time stamp as well.

First Request

After rapid submission of requests

Here you can see that Server1 has 5 requests in process and Server2 has 4, so the load balancer chose Server2 to serve the current request. And regardless of how many requests are made, there is only one instance of the load balancer.

Now leave the form idle for a while, then submit again: all the earlier requests have been served, and both servers start again from zero.

With this, we have implemented a simple LoadBalancer class using the Singleton pattern. Now, Big Brains will ask a question:

"What if multiple threads are involved and two first requests are submitted at the same time?"

Ans: Yes, it's a valid question. In that case our code can create two instances of the LoadBalancer class, defeating the whole idea behind it.

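Next question: why can this problem happen with threading and not with regular execution?

Ans: In regular (single-threaded) execution, callers run one after another, so the null check and the assignment in LdBal always complete before the next caller reads _ldBal. With multiple threads, these steps can interleave: two threads can both read _ldBal as null before either of them assigns it, and each then calls the constructor. This is a race condition. ASP.NET serves requests on thread-pool threads, so it can happen even in our sample when the first two requests arrive together.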
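The race can be reproduced deterministically. In the sketch below, a hypothetical test-only `Gate` hook (a Barrier used purely for the demonstration) pauses each thread between the null check and the assignment, which is exactly the window described above. Because both threads pass the check before either assigns the field, the constructor runs twice. `RacySingleton` and `Gate` are illustrative names, not part of the sample.

```csharp
using System.Threading;

// Illustrative, deliberately unsafe singleton (same shape as the first LoadBalancer).
public sealed class RacySingleton
{
    private static RacySingleton _instance;
    private static int _constructed;

    // Hypothetical test-only hook: if set, each caller waits here after the
    // null check, forcing two threads into the check-then-create window together.
    public static Barrier Gate;

    public static int ConstructedCount => Volatile.Read(ref _constructed);

    private RacySingleton()
    {
        Interlocked.Increment(ref _constructed);
    }

    public static RacySingleton Instance
    {
        get
        {
            if (_instance == null)
            {
                // Window between "check" and "create": another thread can also be here.
                Gate?.SignalAndWait();
                _instance = new RacySingleton();
            }
            return _instance;
        }
    }
}
```

Without the barrier the window is tiny, so the bug shows up only occasionally under load, which is what makes it easy to miss.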

Resolution: locking. A lock statement allows only one thread at a time to execute the enclosed block, so the null check and the creation can no longer interleave across threads, and only one instance of the LoadBalancer class is created.
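The simplest form of this resolution takes the lock on every access. The sketch below (`LockedSingleton` is an illustrative name, not from the sample) is correct, but it pays for a lock even after the instance exists; the double-checked LoadBalancer in the article avoids that cost by testing for null before taking the lock.

```csharp
using System.Threading;

// Illustrative: lock on every access (simple and thread-safe, but always locks).
public sealed class LockedSingleton
{
    private static readonly object SyncRoot = new object();
    private static LockedSingleton _instance;
    private static int _constructed;

    public static int ConstructedCount => Volatile.Read(ref _constructed);

    private LockedSingleton()
    {
        Interlocked.Increment(ref _constructed);
    }

    public static LockedSingleton Instance
    {
        get
        {
            // Only one thread at a time can run the check-and-create step.
            lock (SyncRoot)
            {
                if (_instance == null)
                {
                    _instance = new LockedSingleton();
                }
                return _instance;
            }
        }
    }
}
```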

So, for multithreaded code, we need to modify the instantiation part of the LoadBalancer class to use a locking mechanism.

Below are the changes made in the LoadBalancer class to handle multithreading: a syncRoot lock object and a second null check inside the lock.

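```csharp
using System;

namespace SingletonSample
{
    public class LoadBalancer
    {
        // volatile: the assignment completes before other threads can read
        // a non-null reference to the instance.
        private static volatile LoadBalancer _ldBal;

        // Private lock object; no code outside this class can lock on it.
        private static readonly object syncRoot = new Object();

        public static int LoadbalancerInstances = 0;

        private LoadBalancer()
        {
            LoadbalancerInstances++;
        }

        public static LoadBalancer LdBal
        {
            get
            {
                // First check: skip the lock once the instance exists.
                if (_ldBal == null)
                {
                    lock (syncRoot)
                    {
                        // Second check: another thread may have created the
                        // instance while this one was waiting for the lock.
                        if (_ldBal == null)
                        {
                            _ldBal = new LoadBalancer();
                        }
                    }
                }
                return _ldBal;
            }
        }

        public IServers ServeServer()
        {
            return Server1.ServiceCount <= Server2.ServiceCount
                ? (IServers)new Server1()
                : (IServers)new Server2();
        }
    }
}
```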

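OK, we have implemented locking. But why not lock on the instance variable itself? Why do we lock on a separate syncRoot object, and why is the instance variable volatile?

Reason: This double-checked approach ensures that only one instance is created, and only when the instance is needed; the outer null check also avoids taking the lock on every access once the instance exists. We cannot lock on the instance variable itself, because it is null until the first instance is created, and locking on a null reference throws ArgumentNullException. The instance variable is declared volatile to ensure that the assignment to it completes before the instance can be accessed by other threads. Lastly, the code locks on a private syncRoot object rather than on the type itself (which any other code could also lock on), to avoid deadlocks.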
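On .NET Framework 4 and later, the same guarantees (lazy creation, a single instance, safe publication to other threads) are also available through `System.Lazy<T>`, whose `ExecutionAndPublication` mode runs the factory at most once. The sketch below is an alternative to hand-written double-checked locking, not the approach used in this article; `LazyLoadBalancer` is a hypothetical name and it omits the load-balancing logic.

```csharp
using System;
using System.Threading;

// Hypothetical alternative to double-checked locking, using System.Lazy<T>.
public sealed class LazyLoadBalancer
{
    // ExecutionAndPublication: the factory runs at most once, even under contention.
    private static readonly Lazy<LazyLoadBalancer> _instance =
        new Lazy<LazyLoadBalancer>(() => new LazyLoadBalancer(),
                                   LazyThreadSafetyMode.ExecutionAndPublication);

    private static int _constructed;

    public static int ConstructedCount => Volatile.Read(ref _constructed);

    private LazyLoadBalancer()
    {
        Interlocked.Increment(ref _constructed);
    }

    // Created on first access; later calls return the same object.
    public static LazyLoadBalancer Instance => _instance.Value;
}
```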


Was this helpful? Kindly let me know your comments or questions.