Introduction

Let's continue exploring the world of DirectShow. In this article I describe how to develop demultiplexers. I cover the aspects required to build this kind of filter and provide an example with a code walkthrough based on my C# class library, BaseClasses.NET, which is described in the article "Pure .NET DirectShow Filters in C#". This is the first part, and here I review only splitter filters that have an input pin. In the second part I review source filters.

Background

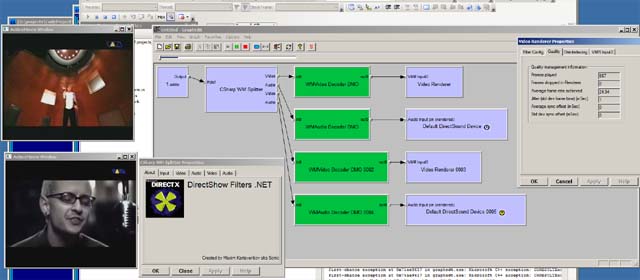

The main purpose of DirectShow splitter filters (also called demultiplexers) is to handle media file formats: parse the file and provide separate data streams that downstream filters can understand and process. That doesn't sound too hard. Let's look at a GraphEdit screenshot:

Here we see the standard AVI Splitter, which comes with the system. The splitter has one input pin, connected to the "File Source Async" filter with the loaded file. There are two output pins because the file contains audio and video data. These pins are created automatically when the input pin is connected and removed when it is disconnected. Once the graph starts playing, the splitter reads (or receives) data on its input pin, parses it, and delivers each part to the appropriate output pin. Let's figure out how this works.

How does the Filter Graph decide which splitter to use?

First, start the GraphEdit application and try to render any media file. For different file types the filter graph selects different splitters. If no splitter for the given file type is registered in the system, you cannot play the file using DirectShow. Moreover, if you load a file manually with the "File Source Async" filter, its output pin shows different media types for different files. For example, in the graph above it shows the major type "Stream" and the subtype "Avi", and if I load an MPEG-1 file it shows the "MPEG1System" subtype. This happens because these media types are registered in the system. You can create your own file format and register it, so that the "File Source Async" filter reports your subtype GUID. To do that, you write the registration information into the system registry during filter registration. Note that in this article I cover only the registration of splitter filters; registration of source filters is described in the second part. Media types are registered under the following registry subkey:

HKCR\Media Type\{E436EB83-524F-11CE-9F53-0020AF0BA770}\{ Subtype GUID }

The first GUID is the major type provided as output; {E436EB83-524F-11CE-9F53-0020AF0BA770} is MEDIATYPE_Stream, the usual choice. The subtype GUID is your custom type GUID. The registry values under this key describe how to identify your file type and which source filter should handle it. The "Source Filter" value is a string containing the CLSID of the source filter to use for this file type; in most cases it is "File Source Async". The other values are named "0", "1", and so on. There must be at least one of them, otherwise your media type cannot be detected. Each such value is a check-bytes string that helps identify your type, in the form "offset, length, mask, value", where mask and value are hexadecimal byte strings. When rendering a file, the Filter Graph reads "length" bytes starting at "offset", performs a bitwise AND with "mask", and compares the result with "value"; if they match, it loads the source filter and sets the media type on it. A single string can contain several offset, length, mask, value groups; in that case all of the groups must match (logical AND). The next screenshot shows how the registration looks.
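To make the check-bytes rule concrete, here is a small C# sketch that evaluates one such string against the first bytes of a file. It is not the Filter Graph Manager's code, and CheckBytes is a hypothetical name. It assumes hexadecimal mask and value fields, treats an empty mask as all 0xFF bytes, handles only non-negative offsets, and requires every group in the string to match.

```csharp
using System;
using System.Globalization;

public static class CheckBytes
{
    // True only if every "offset,length,mask,value" group in the string matches (logical AND).
    public static bool Matches(string sequence, byte[] file)
    {
        string[] parts = sequence.Split(',');
        if (parts.Length % 4 != 0) throw new FormatException("Expected groups of four fields.");

        for (int i = 0; i < parts.Length; i += 4)
        {
            int offset = int.Parse(parts[i].Trim(), CultureInfo.InvariantCulture);
            int length = int.Parse(parts[i + 1].Trim(), CultureInfo.InvariantCulture);
            byte[] mask = ParseHex(parts[i + 2].Trim(), length, 0xFF);  // empty mask: compare all bits
            byte[] value = ParseHex(parts[i + 3].Trim(), length, 0x00);

            // Only non-negative offsets inside the buffer are handled in this sketch.
            if (offset < 0 || offset + length > file.Length) return false;

            for (int k = 0; k < length; k++)
            {
                if ((file[offset + k] & mask[k]) != value[k]) return false;
            }
        }
        return true;
    }

    // Converts a hex string into exactly 'length' bytes; an empty string yields 'fill' bytes.
    private static byte[] ParseHex(string hex, int length, byte fill)
    {
        byte[] result = new byte[length];
        if (hex.Length == 0)
        {
            for (int k = 0; k < length; k++) result[k] = fill;
            return result;
        }
        if (hex.Length != length * 2) throw new FormatException("Hex field length mismatch: " + hex);
        for (int k = 0; k < length; k++)
            result[k] = byte.Parse(hex.Substring(k * 2, 2), NumberStyles.HexNumber, CultureInfo.InvariantCulture);
        return result;
    }
}
```

For example, every ASF file (.asf, .wmv, .wma) starts with the ASF Header Object GUID 75B22630-668E-11CF-A6D9-00AA0062CE6C, stored on disk as the bytes 30 26 B2 75 8E 66 CF 11 ..., so the string "0,16,,3026B2758E66CF11A6D900AA0062CE6C" matches it.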

In the BaseClasses.NET library I added an attribute that you can place on your splitter filter or parser class so that your media type is registered together with the filter:

```csharp
[ComVisible(false)]
[AttributeUsage(AttributeTargets.Class, AllowMultiple = true)]
public class RegisterMediaType : Attribute
```

The attribute provides the following constructor overloads:

```csharp
public RegisterMediaType(string _subtype, string _sequence)
public RegisterMediaType(string _filter, string _subtype, string _sequence)
public RegisterMediaType(string _filter, string _majortype, string _subtype, string _sequence)
```

Once the file source is loaded and its media type is set, the Filter Graph can connect the splitter to it. If you load the source filter and connect the filters manually, this registration is not required.

Basic filter architecture

As we already discussed, a splitter gets data from its input pin and delivers it to downstream filters via its output pins. Each output pin should have its own thread, because the data read from the file has to be split and delivered separately for each stream. If the file has an index or another structure that allows fast access to its content, we may not need a separate thread for splitting: each pin thread then reads its own data. Otherwise, the filter has an additional common thread that reads the data, divides it into chunks (for video these are usually called frames, for audio packets), and appends each chunk to the queue of the corresponding output pin. Meanwhile, each pin thread takes chunks from its queue and delivers them to the downstream filter. In this part we review the second model, with the common parser thread. The model looks like this:

Not too complex, is it? Initially the filter has one input pin. When the Filter Graph Manager connects to it, the filter validates whether it can handle the data from the connected filter. If it is connected to "File Source Async", we query the IAsyncReader interface from the connected pin and use its SyncRead or SyncReadAligned methods. With these two methods we can read data at any position in the stream and check its format. If the data is not suitable, we reject the connection with an appropriate error code. Otherwise we read the file until we have identified all the streams it contains (for example two streams, audio and video), create an output pin for each stream, and prepare the output media types we can offer. Pins that are not mandatory can be given names that start with the "~" character. The Filter Graph Manager skips such pins during automatic rendering, but you can still render them manually. This is useful for subpictures and other streams that are not essential, because the Filter Graph Manager tries to render every output pin whose name does not start with "~"; if any of them cannot be connected, the filter is dropped and the Graph Manager looks for another filter.
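A minimal sketch of the pin-naming rule, assuming one output pin per discovered stream. StreamInfo, PinNaming and the "Kind index" naming scheme are hypothetical; AutoRendered simply lists the pins that automatic rendering would try to connect.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical description of a stream found while parsing the file.
public sealed record StreamInfo(string Kind, bool Optional);

public static class PinNaming
{
    // One output pin per stream; optional streams get a "~" prefix.
    public static List<string> CreatePinNames(IEnumerable<StreamInfo> streams)
    {
        var names = new List<string>();
        int index = 0;
        foreach (StreamInfo s in streams)
        {
            string name = s.Kind + " " + index++;
            names.Add(s.Optional ? "~" + name : name);
        }
        return names;
    }

    // The pins that automatic rendering would try to connect.
    public static List<string> AutoRendered(IEnumerable<string> pinNames) =>
        pinNames.Where(n => !n.StartsWith("~", StringComparison.Ordinal)).ToList();
}
```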

Along with this model, we have to handle the major DirectShow tasks of splitters and file sources.
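The common-parser-thread model described above can be sketched with BlockingCollection<T>: the calling thread plays the parser role and routes each chunk into the queue of its track, while one thread per output pin drains its own queue. Packet, DemuxModel and the queue capacity of 16 are hypothetical; a real pin thread would deliver IMediaSample objects downstream instead of recording timestamps.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;

// Hypothetical chunk of demultiplexed data: track index, start time (100-ns units), payload.
public sealed record Packet(int Track, long Start, byte[] Data);

public static class DemuxModel
{
    // Returns, per track, the start times in the order the pin threads delivered them.
    public static List<long>[] Run(IEnumerable<Packet> file, int trackCount)
    {
        var queues = new BlockingCollection<Packet>[trackCount];
        var delivered = new List<long>[trackCount];
        var pinThreads = new Thread[trackCount];

        for (int t = 0; t < trackCount; t++)
        {
            // Bounded queue: the parser blocks when a pin falls behind.
            var queue = new BlockingCollection<Packet>(boundedCapacity: 16);
            var sink = new List<long>();
            queues[t] = queue;
            delivered[t] = sink;

            // Output pin thread: takes chunks until the parser marks the queue complete.
            pinThreads[t] = new Thread(() =>
            {
                foreach (Packet p in queue.GetConsumingEnumerable())
                    sink.Add(p.Start); // a real pin would deliver the sample downstream here
            });
            pinThreads[t].Start();
        }

        // Parser role (the calling thread): read chunks in file order and route them by track.
        foreach (Packet p in file)
            queues[p.Track].Add(p);

        // End of data: completing the queues lets the pin threads finish.
        foreach (BlockingCollection<Packet> q in queues)
            q.CompleteAdding();
        foreach (Thread th in pinThreads)
            th.Join();
        foreach (BlockingCollection<Packet> q in queues)
            q.Dispose();
        return delivered;
    }
}
```

The bounded queue is a design choice: when a pin's consumer is slow, Add blocks and the parser is throttled instead of reading the whole file into memory.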

Major tasks of the splitter

You may ask: "What else is required from a splitter?" Actually, quite a lot. Here I review the major tasks; some additional ones I'll describe in the next article.

Seeking

Every media player application lets you seek, that is, set the playback position. This functionality is implemented in the splitter. In addition, the splitter reports the duration of the current media file and takes care of timestamping according to the playback rate. The only thing the splitter does not provide is the current playback position: it provides the start and stop positions, but not the current one. The current position is reported by the renderer, and the application sees the position of only one stream (output pin), usually the audio one, because the audio renderer is normally the preferred clock provider during playback. Still, our filter must implement the IMediaSeeking interface on each output pin. So how does the filter decide which pin to use for seeking? You can choose any connected pin, preferably the one whose stream has the longest duration.
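A minimal sketch of choosing the seeking pin, assuming a hypothetical PinState record with durations in 100-ns units. Because each renderer passes IMediaSeeking calls upstream, every output pin receives them; acting only on the selected pin is one common way to move the file position once per request.

```csharp
#nullable enable
using System.Collections.Generic;

// Hypothetical view of an output pin: name, connection state, stream duration (100-ns units).
public sealed record PinState(string Name, bool Connected, long Duration);

public static class SeekingMaster
{
    // Any connected pin may act as the seeking pin; prefer the longest stream.
    public static PinState? Select(IEnumerable<PinState> pins)
    {
        PinState? best = null;
        foreach (PinState p in pins)
        {
            if (!p.Connected) continue;
            if (best == null || p.Duration > best.Duration) best = p;
        }
        return best;
    }

    // Seek requests arriving on the other pins are accepted but not applied.
    public static bool ShouldApplySeek(PinState caller, PinState? master) =>
        master != null && ReferenceEquals(caller, master);
}
```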

New Segments

When a pin becomes active, it should notify the downstream filter that the following media samples belong to a new segment. What does that mean? For example, when we start playing the file, before delivering any samples we send a NewSegment notification with the start and stop positions and the current playback rate. If SetPositions or SetRate was not called before playback started, the NewSegment notification carries 0, MAXLONGLONG and 1.0 as the start, stop and rate values. Whenever you seek in the graph (set a new start position, change the stop position, or change the rate), a new NewSegment notification with the new settings is sent after the seek operation has been performed.

Flushing

Flushing means that a filter should discard all the samples it has received and clean up so that it is ready to receive new data. For example, my decoder collects reference data to build complete output frames; when it receives the BeginFlush notification, it clears that reference data and discards any incoming samples until it receives EndFlush. The BeginFlush and EndFlush notifications are also sent by the splitter, and this happens when seeking. When the user sets a new position, we send BeginFlush on all pins, stop all threads (the pin threads and the demuxer thread), and then send EndFlush, so the downstream filters are ready to receive data from the new position.
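The order of notifications around a seek can be sketched as follows. SplitterSeekSketch and OutputPinLog are hypothetical and only record what each pin would send downstream; stopping the threads is reduced to a flag. The defaults 0, MAXLONGLONG and 1.0 are the NewSegment values mentioned in the New Segments section.

```csharp
using System.Collections.Generic;

// Records what one output pin would send downstream (hypothetical helper).
public sealed class OutputPinLog
{
    public readonly List<string> Events = new List<string>();
    public void DeliverBeginFlush() => Events.Add("BeginFlush");
    public void DeliverEndFlush() => Events.Add("EndFlush");
    public void DeliverNewSegment(long start, long stop, double rate) =>
        Events.Add("NewSegment(" + start + "," + stop + "," + rate + ")");
}

// Hypothetical splitter state showing the notification order around a seek.
public sealed class SplitterSeekSketch
{
    public const long MaxLongLong = long.MaxValue;   // MAXLONGLONG

    private readonly List<OutputPinLog> _pins;
    public long Start { get; private set; } = 0;
    public long Stop { get; private set; } = MaxLongLong;
    public double Rate { get; private set; } = 1.0;
    public bool Running { get; private set; }

    public SplitterSeekSketch(List<OutputPinLog> pins) => _pins = pins;

    // Starting (or restarting) always opens a new segment before any sample is sent.
    public void Run()
    {
        Running = true;
        foreach (OutputPinLog p in _pins) p.DeliverNewSegment(Start, Stop, Rate);
    }

    public void SetPositions(long start, long stop)
    {
        bool wasRunning = Running;
        if (wasRunning)
        {
            foreach (OutputPinLog p in _pins) p.DeliverBeginFlush(); // downstream drops queued data
            Running = false;                                          // stop pin and demux threads
            foreach (OutputPinLog p in _pins) p.DeliverEndFlush();   // downstream accepts data again
        }
        Start = start;
        Stop = stop;
        if (wasRunning) Run();                                        // NewSegment with the new values
    }
}
```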

End Of Stream

When the splitter has no more data for a stream, it sends the EndOfStream notification on that pin. This tells the connected filters not to expect any more data from that pin, and any data sent over the pin afterwards should be discarded. Consider a file with two streams of different durations, for example 10 and 5 seconds. After 7 seconds of playback you seek to 2 seconds. Will the 5-second stream be rendered again? The answer is yes. It works as follows: after the seek, since we have already sent EndOfStream on that pin, we do not flush it, as there should be no data pending; we change the position and send another notification, NewSegment, which informs the downstream filter that data is available again.

Windows Media Splitter Filter implementation

In the previous article I described the classes used to create splitter filters and included a basic sample. Here I review the implementation of a Windows Media file splitter using the Windows Media Format (WMF) SDK. The class library makes this type of filter easy to build, and the filter itself looks very simple:

```csharp
[ComVisible(true)]
[Guid("A7EABBF9-F348-4879-9AB9-29A0B0F80CF4")]
[AMovieSetup(true)]
[PropPageSetup(typeof(AboutForm))]
public class WMSplitterFilter : BaseSplitterFilterTemplate<WMParser>
{
    public WMSplitterFilter()
        : base("CSharp WM Splitter")
    {
    }
}
```

We only need to concentrate on supporting the file format; everything else is already implemented in the base classes. So we implement our own classes based on the FileParser and DemuxTrack classes, which were described in the previous article.

Implementing parser

The major tasks of our parser are:

- Check the input file: can we play it or not?

- Initialize the tracks.

- Demultiplex the data and put it into the track queues.

- Support seeking.

Opening the file

First we create a reader object by calling the WMCreateReader function. Then we query the IWMReaderAdvanced2 interface from the IWMReader object. IWMReaderAdvanced2 exposes the OpenStream method, which we call to open the media data. This method takes three arguments: an IStream object (we use the opened BitStreamReader, since it supports that interface); an IWMReaderCallback interface for asynchronous status notifications, which we implement in our parser class; and context data, which we don't use. After the call, the stream is opened asynchronously and the result is reported to the callback, so we just wait on an event:

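```csharp
protected override HRESULT CheckFile()
{
    // Create the WMF reader object (no certificate, dwRights = 0).
    HRESULT hr = (HRESULT)WMCreateReader(IntPtr.Zero, 0, out m_pWMReader);
    if (SUCCEEDED(hr) && m_pWMReader != IntPtr.Zero)
    {
        IWMReaderAdvanced2 pReaderAdvanced = (IWMReaderAdvanced2)Marshal.GetObjectForIUnknown(m_pWMReader);
        // Reset before opening so the Opened notification cannot be missed.
        m_evAsyncNotify.Reset();
        hr = (HRESULT)pReaderAdvanced.OpenStream(m_Stream, this, IntPtr.Zero);
        if (SUCCEEDED(hr))
        {
            // OnStatus(Status.Opened) stores the result and signals the event.
            m_evAsyncNotify.WaitOne();
            hr = m_hrAsyncResult;
        }
        if (SUCCEEDED(hr))
        {
            // Sample delivery is paced by our DeliverTime calls (see OnTime).
            pReaderAdvanced.SetUserProvidedClock(true);
        }
        pReaderAdvanced = null;
    }
    return hr;
}
```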

In the callback's OnStatus method we just store the result and signal the corresponding event:

```csharp
public int OnStatus(Status Status, int hr, AttrDataType dwType, IntPtr pValue, IntPtr pvContext)
{
    switch (Status)
    {
        // Completion of OpenStream, Stop and Close: store the result and wake the waiting thread.
        case Status.Opened:
        case Status.Stopped:
        case Status.Closed:
            m_hrAsyncResult = (HRESULT)hr;
            m_evAsyncNotify.Set();
            break;
        // Playback started: grant the first time period to the user-provided clock.
        case Status.Started:
            OnTime(m_rtPosition, pvContext);
            break;
        // End of data or an error: let the demux thread finish.
        case Status.EndOfStreaming:
        case Status.Error:
            m_hEOS.Set();
            break;
    }
    return NOERROR;
}
```

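Note that CheckFile calls SetUserProvidedClock. We do this because the filter does not necessarily work in real time: timing is based on the graph clock, and the reader advances only when we call DeliverTime in the OnTime handler.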
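The open-and-wait pattern used by CheckFile and OnStatus can be tried without WMF. In this sketch FakeAsyncReader is a hypothetical stand-in that reports its HRESULT from a worker thread, and, unlike the filter code, the wait has a timeout so that a lost callback cannot hang the caller.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for an asynchronous open: reports its HRESULT from a worker thread,
// the way the WMF reader calls OnStatus(Status.Opened, hr, ...).
public sealed class FakeAsyncReader
{
    public void OpenStream(Action<int> onOpened, int resultToReport) =>
        Task.Run(() => { Thread.Sleep(10); onOpened(resultToReport); });
}

public sealed class BlockingOpener
{
    private readonly ManualResetEvent _evAsyncNotify = new ManualResetEvent(false);
    private int _hrAsyncResult;

    // Counterpart of the Opened branch in OnStatus: store the result first, then signal.
    private void OnOpened(int hr)
    {
        _hrAsyncResult = hr;
        _evAsyncNotify.Set();
    }

    public int Open(FakeAsyncReader reader, int simulatedHr, TimeSpan timeout)
    {
        _evAsyncNotify.Reset();                          // reset before starting the operation
        reader.OpenStream(OnOpened, simulatedHr);
        if (!_evAsyncNotify.WaitOne(timeout))
            return unchecked((int)0x800705B4);           // HRESULT_FROM_WIN32(ERROR_TIMEOUT)
        return _hrAsyncResult;                           // written before Set, so visible here
    }
}
```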

Loading tracks

Once the file is opened, we query its profile and extract the configuration of each stream. From that configuration we create a track and add it to the output list:

```csharp
if (m_pWMReader == IntPtr.Zero) return E_UNEXPECTED;
HRESULT hr;
IWMProfile pProfile = (IWMProfile)Marshal.GetObjectForIUnknown(m_pWMReader);
int nStreams;
hr = (HRESULT)pProfile.GetStreamCount(out nStreams);
if (SUCCEEDED(hr))
{
    if (nStreams > 0)
    {
        for (int i = 0; i < nStreams; i++)
        {
            IWMStreamConfig pStreamConfig;
            hr = (HRESULT)pProfile.GetStream(i, out pStreamConfig);
            if (SUCCEEDED(hr))
            {
                WMTrack pTrack = new WMTrack(this, i, ref pStreamConfig);
                pStreamConfig = null;
                if (S_OK == pTrack.InitTrack())
                {
                    m_Tracks.Add(pTrack);
                    if (m_rtDuration < pTrack.TrackDuration)
                    {
                        m_rtDuration = pTrack.TrackDuration;
                    }
                }
                else
                {
                    pTrack.Dispose();
                    pTrack = null;
                }
            }
        }
    }
    else
    {
        hr = E_INVALIDARG;
    }
}
pProfile = null;
```

Each track has its own initialization, where we set up the track and check whether it can be used for streaming. We also need to initialize the m_rtDuration variable to support seeking. The file duration can be retrieved as the "Duration" attribute via the IWMHeaderInfo interface:

```csharp
IWMHeaderInfo pHeader = (IWMHeaderInfo)Marshal.GetObjectForIUnknown(m_pWMReader);
AttrDataType _type;
long _duration = 0;
short wBytes = (short)Marshal.SizeOf(_duration);
IntPtr _ptr = Marshal.AllocCoTaskMem(wBytes);
try
{
    // Stream number 0 selects a file-level attribute; "Duration" is a QWORD in 100-ns units.
    short wStreamNum = 0;
    if (S_OK == pHeader.GetAttributeByName(ref wStreamNum, "Duration", out _type, _ptr, ref wBytes))
    {
        // Check the returned size before reading the 64-bit value.
        if (wBytes == Marshal.SizeOf(_duration))
        {
            _duration = Marshal.ReadInt64(_ptr);
            m_rtDuration = _duration;
        }
    }
}
finally
{
    Marshal.FreeCoTaskMem(_ptr);
}
pHeader = null;
```
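Reading the attribute boils down to interpreting eight bytes as a 64-bit value in 100-ns units, the same unit as DirectShow REFERENCE_TIME and .NET TimeSpan ticks, so no scaling is needed. The sketch below simulates the buffer that GetAttributeByName fills; DurationSketch is a hypothetical helper.

```csharp
using System;
using System.Runtime.InteropServices;

public static class DurationSketch
{
    public const long Units = 10_000_000;   // 100-ns units per second (UNITS)

    // Reads a QWORD attribute value only if exactly 8 bytes were returned.
    public static bool TryReadQword(IntPtr buffer, short bytesReturned, out long value)
    {
        value = 0;
        if (bytesReturned != sizeof(long)) return false;
        value = Marshal.ReadInt64(buffer);
        return true;
    }

    // REFERENCE_TIME and TimeSpan ticks are both 100 ns, so the value maps directly.
    public static string Format(long referenceTime) =>
        TimeSpan.FromTicks(referenceTime).ToString(@"hh\:mm\:ss\.fff");
}
```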

Demultiplexing

We use one common thread for demultiplexing, but the actual processing happens in the WMF SDK's own threads, and we receive the results through callbacks. In the overridden OnDemuxStart we call IWMReader.Start to begin processing the data:

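```csharp
public override HRESULT OnDemuxStart()
{
    // Slot 10 of the IWMReader vtable is Start.
    // Arguments: start position, duration 0 (play to the end), rate 1.0, no context.
    IUnknownImpl _unknown = new IUnknownImpl(m_pWMReader);
    WMStartProc _proc = _unknown.GetProcDelegate<WMStartProc>(10);
    return (HRESULT)_proc(m_pWMReader, m_rtPosition, 0, 1, IntPtr.Zero);
}
```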

Because of threading issues, I call this single method through a function-pointer wrapper here. I think that is easier than wrapping the whole interface: I use only three methods of the IWMReader interface, so a complete wrapper wasn't required. The demultiplexer thread itself just waits for the stop or EOS event, since the WMF SDK delivers output packets from its own threads:

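```csharp
public override HRESULT ProcessDemuxPackets()
{
    // Samples arrive on WMF SDK threads; this thread only waits for EOS (index 0) or quit.
    if (0 == WaitHandle.WaitAny(new WaitHandle[] { m_hEOS, m_hQuit }))
    {
        return S_FALSE; // end of stream reported by OnStatus
    }
    return NOERROR;
}
```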

The m_hQuit event is the global stop notification. The m_hEOS event is set when the WM callback reports end of stream (see the OnStatus implementation above). We also override OnDemuxStop and call IWMReader.Stop there:

```csharp
public override HRESULT OnDemuxStop()
{
    // Slot 11 of the IWMReader vtable is Stop.
    IUnknownImpl _unknown = new IUnknownImpl(m_pWMReader);
    WMStopProc _proc = _unknown.GetProcDelegate<WMStopProc>(11);
    return (HRESULT)_proc(m_pWMReader);
}
```
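The numbers passed to GetProcDelegate are vtable slots. Every COM interface starts with the three IUnknown methods, so a method's slot is 3 plus its zero-based position in the derived interface: in IWMReader, Start and Stop come after Open, Close, GetOutputCount, GetOutputProps, SetOutputProps, GetOutputFormatCount and GetOutputFormat, which gives slots 10 and 11. Likewise DeliverTime is the third IWMReaderAdvanced method (slot 5) and GetBufferAndLength the fifth INSSBuffer method (slot 7). The sketch below shows the general technique, as an assumption about how such a helper can work rather than the BaseClasses.NET implementation of IUnknownImpl: it builds a fake object with a vtable in unmanaged memory, reads the function pointer from a slot, and calls it through a delegate. FakeStopProc is hypothetical.

```csharp
using System;
using System.Runtime.InteropServices;

public static class VtableCallSketch
{
    // COM methods use stdcall on x86 and receive the interface pointer ('this') first.
    [UnmanagedFunctionPointer(CallingConvention.StdCall)]
    public delegate int FakeStopProc(IntPtr pThis);

    // Slot = 3 (QueryInterface, AddRef, Release) + zero-based index in the derived interface.
    public static int SlotOf(int indexInInterface) => 3 + indexInInterface;

    // Reads the function pointer stored in the given vtable slot and wraps it in a delegate.
    public static T GetProc<T>(IntPtr pUnk, int slot) where T : Delegate
    {
        IntPtr vtbl = Marshal.ReadIntPtr(pUnk);                    // first field of a COM object
        IntPtr fn = Marshal.ReadIntPtr(vtbl, slot * IntPtr.Size);  // entry 'slot' of the table
        return Marshal.GetDelegateForFunctionPointer<T>(fn);
    }

    // Builds a fake object whose slot 11 points to a managed method, then calls it by slot.
    public static int Demo()
    {
        int calls = 0;
        FakeStopProc impl = p => { calls++; return 0; };           // returns S_OK
        IntPtr fnPtr = Marshal.GetFunctionPointerForDelegate(impl);

        const int slot = 11;                                        // e.g. IWMReader::Stop
        IntPtr vtbl = Marshal.AllocHGlobal((slot + 1) * IntPtr.Size);
        IntPtr obj = Marshal.AllocHGlobal(IntPtr.Size);
        try
        {
            for (int i = 0; i <= slot; i++) Marshal.WriteIntPtr(vtbl, i * IntPtr.Size, IntPtr.Zero);
            Marshal.WriteIntPtr(vtbl, slot * IntPtr.Size, fnPtr);
            Marshal.WriteIntPtr(obj, vtbl);

            int hr = GetProc<FakeStopProc>(obj, slot)(obj);
            GC.KeepAlive(impl);                                     // keep the thunk alive during the call
            return hr == 0 ? calls : -1;
        }
        finally
        {
            Marshal.FreeHGlobal(obj);
            Marshal.FreeHGlobal(vtbl);
        }
    }
}
```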

Because we provide the clock ourselves, during processing we receive the IWMReaderCallbackAdvanced.OnTime notification, which means that the reader has reached the time we granted previously. We just advance the granted time by another period (one second, UNITS) by calling IWMReaderAdvanced.DeliverTime:

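```csharp
public int OnTime(long cnsCurrentTime, IntPtr pvContext)
{
    IUnknownImpl _unknown = new IUnknownImpl(m_pWMReader);
    IntPtr pReaderAdvanced;
    Guid _guid = typeof(IWMReaderAdvanced).GUID;
    _unknown._QueryInterface(ref _guid, out pReaderAdvanced);
    if (pReaderAdvanced == IntPtr.Zero)
    {
        return unchecked((int)0x80004002); // E_NOINTERFACE
    }
    _unknown = new IUnknownImpl(pReaderAdvanced);
    // Slot 5 of the IWMReaderAdvanced vtable is DeliverTime.
    WMDeliverTimeProc _proc = _unknown.GetProcDelegate<WMDeliverTimeProc>(5);
    // Let the reader deliver samples for one more second.
    cnsCurrentTime += UNITS;
    int hr = _proc(pReaderAdvanced, cnsCurrentTime);
    // Release the reference added by QueryInterface.
    _unknown._Release();
    return hr;
}
```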
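The handshake between OnTime and DeliverTime can be simulated to see how playback is paced. FakeClockedReader is a hypothetical stand-in for the WMF reader; it assumes that DeliverTime(t) releases only samples with timestamps strictly earlier than t. ClockPump models the chain OnStatus(Started), OnTime(start), DeliverTime(start + UNITS), OnTime, and so on.

```csharp
using System.Collections.Generic;

// Hypothetical reader that honours a user-provided clock.
public sealed class FakeClockedReader
{
    public const long Units = 10_000_000;              // one second in 100-ns units
    private readonly long[] _sampleTimes;               // sorted sample timestamps
    private int _next;
    public readonly List<long> Delivered = new List<long>();

    public FakeClockedReader(long[] sampleTimes) => _sampleTimes = sampleTimes;
    public bool Finished => _next >= _sampleTimes.Length;

    // Counterpart of IWMReaderAdvanced::DeliverTime: release samples earlier than the grant.
    public void DeliverTime(long grantedUntil)
    {
        while (!Finished && _sampleTimes[_next] < grantedUntil)
            Delivered.Add(_sampleTimes[_next++]);       // OnStreamSample would fire here
    }
}

public static class ClockPump
{
    // Each loop iteration stands for one OnTime callback granting one more second.
    // Returns how many grants were needed to drain the reader.
    public static int Run(FakeClockedReader reader, long start)
    {
        long granted = start;
        int grants = 0;
        while (!reader.Finished)
        {
            granted += FakeClockedReader.Units;         // cnsCurrentTime += UNITS
            reader.DeliverTime(granted);
            grants++;
        }
        return grants;
    }
}
```

The one-second step is a trade-off: a larger step means fewer callbacks, while a smaller one keeps the reader from running far ahead of the pin queues.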

We receive stream samples, which means they are raw, exactly as stored in the file (usually compressed). The reader can also be configured to deliver uncompressed samples; you can add that functionality yourself and extend the filter :). When a new sample is ready, the reader calls the IWMReaderCallbackAdvanced.OnStreamSample callback method, where we add the sample data to the queue of the corresponding track:

```csharp
// WM_SF_CLEANPOINT: the sample is a key frame (clean point).
private const int WM_SF_CLEANPOINT = 0x1;

public int OnStreamSample(short wStreamNum, long cnsSampleTime, long cnsSampleDuration, int dwFlags, IntPtr pSample, IntPtr pvContext)
{
    // Route the sample to the track with the matching stream number.
    foreach (WMTrack _track in m_Tracks)
    {
        if (_track.StreamNum == wStreamNum)
        {
            PacketData _data = new WMPacketData(pSample);
            _data.Start = cnsSampleTime;
            _data.Stop = cnsSampleTime + cnsSampleDuration;
            _data.SyncPoint = (dwFlags & WM_SF_CLEANPOINT) != 0;
            _track.AddToCache(ref _data);
            break;
        }
    }
    return NOERROR;
}
```
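If a file has many streams, the linear search over m_Tracks can be replaced by a dictionary lookup. This sketch uses hypothetical SamplePacket and TrackQueue types and the WM_SF_CLEANPOINT flag (0x1) to mark sync points; the lock reflects that the callback runs on WMF SDK threads while pin threads would dequeue concurrently.

```csharp
using System.Collections.Generic;

public sealed class SamplePacket
{
    public long Start;
    public long Stop;
    public bool SyncPoint;
}

public sealed class TrackQueue
{
    public readonly Queue<SamplePacket> Cache = new Queue<SamplePacket>();
}

public sealed class SampleRouter
{
    private const int WM_SF_CLEANPOINT = 0x1;          // sample is a key frame
    private readonly Dictionary<short, TrackQueue> _byStream = new Dictionary<short, TrackQueue>();

    // Tracks are registered before streaming starts, so later lookups never race with Add.
    public void AddTrack(short streamNumber, TrackQueue track) => _byStream.Add(streamNumber, track);

    // Returns false if no track was created for the stream (for example a deselected stream).
    public bool OnStreamSample(short wStreamNum, long cnsSampleTime, long cnsSampleDuration, int dwFlags)
    {
        if (!_byStream.TryGetValue(wStreamNum, out TrackQueue track)) return false;
        var packet = new SamplePacket
        {
            Start = cnsSampleTime,
            Stop = cnsSampleTime + cnsSampleDuration,
            SyncPoint = (dwFlags & WM_SF_CLEANPOINT) != 0,
        };
        // Pin threads would lock the same queue when dequeuing.
        lock (track.Cache) track.Cache.Enqueue(packet);
        return true;
    }
}
```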

The pSample argument is an INSSBuffer interface pointer. We should hold it until the sample has been processed and release it afterwards. This is necessary because the allocator tries to fill any available buffer, and if we don't hold the buffers, processing never pauses. I may describe how to work with allocators in another article. So we use the WMPacketData class to hold the sample; it initializes the fields of the base PacketData class from the INSSBuffer:

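```csharp
public WMPacketData(IntPtr pBuffer)
{
    NSBuffer = pBuffer;
    if (pBuffer != IntPtr.Zero)
    {
        // Keep the INSSBuffer alive until the packet is processed; it must be released afterwards.
        Marshal.AddRef(pBuffer);
        IUnknownImpl _buffer = new IUnknownImpl(pBuffer);
        // Slot 7 of the INSSBuffer vtable is GetBufferAndLength.
        GetBufferAndLengthProc _proc = _buffer.GetProcDelegate<GetBufferAndLengthProc>(7);
        IntPtr _ptr;
        _proc(pBuffer, out _ptr, out Size);
        // Copy the sample bytes into a managed array.
        Buffer = new byte[Size];
        Marshal.Copy(_ptr, Buffer, 0, Size);
    }
}
```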

We call the INSSBuffer.GetBufferAndLength method to obtain the data pointer and length.
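Holding the buffer until the sample is processed is plain reference counting. In this sketch IRefCountedBuffer and FakeBuffer are hypothetical stand-ins for an INSSBuffer pointer (with real COM pointers, Marshal.AddRef and Marshal.Release play these roles), and HeldPacket takes one reference when the packet is queued and drops it exactly once when disposed. Since WMPacketData copies the bytes into a managed array, the reference is not needed to read the data; it only keeps the buffer away from the reader's allocator.

```csharp
using System;
using System.Threading;

public interface IRefCountedBuffer
{
    int AddRef();
    int Release();
}

// Hypothetical buffer that starts with the reader's own reference.
public sealed class FakeBuffer : IRefCountedBuffer
{
    private int _refs = 1;
    public int RefCount => Volatile.Read(ref _refs);
    public int AddRef() => Interlocked.Increment(ref _refs);
    public int Release() => Interlocked.Decrement(ref _refs);
}

public sealed class HeldPacket : IDisposable
{
    private IRefCountedBuffer _buffer;

    public HeldPacket(IRefCountedBuffer buffer)
    {
        _buffer = buffer;
        _buffer.AddRef();                           // keep the buffer out of the reader's free pool
    }

    // Called after the packet has been delivered downstream; a second call does nothing.
    public void Dispose()
    {
        IRefCountedBuffer b = Interlocked.Exchange(ref _buffer, null);
        b?.Release();
    }
}
```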

Seeking

The seeking implementation is very simple here. We override the SeekToTime method of the base class and just store the position in a class variable:

```csharp
public override HRESULT SeekToTime(long _time)
{
    m_rtPosition = _time;
    return base.SeekToTime(_time);
}
```

Note: we know that all threads are stopped at this point. So when processing starts again, we just pass the stored position to IWMReader.Start, which is called in the overridden OnDemuxStart.

Implementing track

In the track we only need to perform additional initialization and provide the media type for the connection, since the processing is done in the parser.

Track Initialization

In each track we need to set the type of the media data (audio or video) and retrieve the stream number, which the callbacks use to decide which track a sample belongs to:

```csharp
hr = (HRESULT)m_pStreamConfig.GetStreamNumber(out m_wStreamNum);
if (FAILED(hr)) return hr;
{
    Guid _type;
    hr = (HRESULT)m_pStreamConfig.GetStreamType(out _type);
    if (FAILED(hr)) return hr;
    if (_type == MediaType.Video)
    {
        m_Type = TrackType.Video;
    }
    if (_type == MediaType.Audio)
    {
        m_Type = TrackType.Audio;
    }
}
```
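The audio and video major types compared above are FOURCC-based GUIDs of the form XXXXXXXX-0000-0010-8000-00AA00389B71, where XXXXXXXX is the little-endian FOURCC: 'vids' for video and 'auds' for audio. The sketch derives both and classifies a stream type; MajorTypes and TrackKind are hypothetical names mirroring the article's MediaType and TrackType, and any other type (for example script or file-transfer streams) maps to Unknown.

```csharp
using System;

public enum TrackKind { Unknown, Video, Audio }

public static class MajorTypes
{
    // Builds the FOURCC-based GUID: FOURCC bytes packed little-endian into Data1.
    public static Guid FromFourCC(string fourcc)
    {
        if (fourcc.Length != 4) throw new ArgumentException("FOURCC must have 4 characters.");
        uint data1 = (uint)fourcc[0] | (uint)fourcc[1] << 8 | (uint)fourcc[2] << 16 | (uint)fourcc[3] << 24;
        return new Guid(data1, 0x0000, 0x0010, 0x80, 0x00, 0x00, 0xAA, 0x00, 0x38, 0x9B, 0x71);
    }

    public static readonly Guid Video = FromFourCC("vids");   // 73646976-0000-0010-8000-00AA00389B71
    public static readonly Guid Audio = FromFourCC("auds");   // 73647561-0000-0010-8000-00AA00389B71

    public static TrackKind Classify(Guid streamType) =>
        streamType == Video ? TrackKind.Video :
        streamType == Audio ? TrackKind.Audio :
        TrackKind.Unknown;
}
```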

Additionally, we configure sample delivery for the track:

```csharp
WMParser pParser = (WMParser)m_pParser;
IWMReaderAdvanced2 pReaderAdvanced = (IWMReaderAdvanced2)Marshal.GetObjectForIUnknown(pParser.WMReader);
StreamSelection _selected;
hr = (HRESULT)pReaderAdvanced.GetStreamSelected(m_wStreamNum, out _selected);
if (SUCCEEDED(hr))
{
    if (_selected == StreamSelection.Off)
    {
        m_Type = TrackType.Unknown;
    }
    else
    {
        hr = (HRESULT)pReaderAdvanced.SetReceiveStreamSamples(m_wStreamNum, true);
        ASSERT(hr == S_OK);
        hr = (HRESULT)pReaderAdvanced.SetAllocateForStream(m_wStreamNum, false);
        ASSERT(hr == S_OK);
        int dwSize = 0;
        if (SUCCEEDED(pReaderAdvanced.GetMaxStreamSampleSize(m_wStreamNum, out dwSize)))
        {
            m_lBufferSize = dwSize;
        }
    }
}
pReaderAdvanced = null;
```

In the code above we set the media type to Unknown if the stream is not selected in the file; this way the stream does not appear as an output pin of the filter. Otherwise we request raw stream samples for the stream and tell the reader not to ask us for sample buffers, so it uses its default allocator. Finally, we get the maximum sample size for the stream and use it as the buffer size.

Implementing GetMediaType

An output pin is created for each track, and to perform the connection we have to provide an output media type. This is done in the track's GetMediaType method. To obtain the type information we use the IWMMediaProps interface and its GetMediaType method. We call this method twice: the first time to obtain the required size and the second time to fill the buffer. The WMF SDK's WM_MEDIA_TYPE structure has the same layout as AM_MEDIA_TYPE, so we can use our managed AMMediaType class to marshal the allocated data:

```csharp
public override HRESULT GetMediaType(int iPosition, ref AMMediaType pmt)
{
    if (iPosition < 0) return E_INVALIDARG;
    if (iPosition > 0) return VFW_S_NO_MORE_ITEMS;
    IWMMediaProps pMediaProps = (IWMMediaProps)m_pStreamConfig;
    // First call: query the size of the WM_MEDIA_TYPE together with its format block.
    int cType = 0;
    pMediaProps.GetMediaType(IntPtr.Zero, ref cType);
    if (cType <= 0) return E_UNEXPECTED;
    IntPtr pMediaType = Marshal.AllocCoTaskMem(cType);
    try
    {
        // Second call: fill the buffer, then marshal it as AM_MEDIA_TYPE (same layout).
        pMediaProps.GetMediaType(pMediaType, ref cType);
        pmt.Set((AMMediaType)Marshal.PtrToStructure(pMediaType, typeof(AMMediaType)));
    }
    finally
    {
        Marshal.FreeCoTaskMem(pMediaType);
    }
    return S_OK;
}
```
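The "ask for the size, allocate, call again" pattern is worth isolating, because the error handling and the freeing of the buffer are easy to get wrong. GetBlob is a hypothetical stand-in that behaves like IWMMediaProps::GetMediaType in this respect: with a null buffer it only reports the required size. The error code it returns for a short buffer is an assumption of the sketch.

```csharp
using System;
using System.Runtime.InteropServices;

public static class TwoCallPattern
{
    private static readonly byte[] Source = { 1, 2, 3, 4, 5, 6 };

    // Hypothetical API: a null buffer means "report the required size only".
    public static int GetBlob(IntPtr buffer, ref int size)
    {
        if (buffer == IntPtr.Zero) { size = Source.Length; return 0; }   // S_OK, size only
        if (size < Source.Length) return unchecked((int)0x8007007A);     // HRESULT_FROM_WIN32(ERROR_INSUFFICIENT_BUFFER)
        Marshal.Copy(Source, 0, buffer, Source.Length);
        size = Source.Length;
        return 0;
    }

    public static byte[] Read()
    {
        int size = 0;
        Marshal.ThrowExceptionForHR(GetBlob(IntPtr.Zero, ref size));    // first call: size
        IntPtr p = Marshal.AllocCoTaskMem(size);
        try
        {
            Marshal.ThrowExceptionForHR(GetBlob(p, ref size));         // second call: data
            var result = new byte[size];
            Marshal.Copy(p, result, 0, size);
            return result;
        }
        finally
        {
            Marshal.FreeCoTaskMem(p);                                  // always free, even on failure
        }
    }
}
```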

Filter notes

The resulting filter demultiplexes Windows Media files such as ASF, WMV and WMA. I didn't find any problems with it. The package includes two versions of the filter: a splitter and a source. Here is what else you can try with this filter:

- Deliver uncompressed samples along with the compressed ones.

- Implement the filter as a network source that receives and plays Windows Media network streams.

History

08-10-2012 - Initial version.