Introduction

This is the second part of a description of how to create DirectShow demultiplexers. Here I review how to implement source filters, using an AVI file source filter written in C# as the example. The example is based on my class library, BaseClasses.NET, which is described in the article "Pure .NET DirectShow Filters in C#".

Background

Source filters are similar to splitters, but they do not have an input pin. Instead, they load a file, stream or URL through the IFileSourceFilter interface. Once the user calls the Load method of that interface, the filter validates the input file and creates the output pins. During playback the filter reads and parses data directly from the file and delivers it downstream.

How does the Filter Graph decide which source filter to use?

In the previous article we reviewed splitter filter registration. Source filters also store their information in the system registry. They can be registered by file extension or by protocol.

Registering protocol

Protocol registration is used mostly for streaming. During a RenderFile call the Filter Graph automatically decides which source filter to use for the specified protocol. The registration is stored under the following registry subkey:

HKCR\<your protocol>\Extensions

Here <your protocol> is the protocol name, such as "http", "https", "mms" or even your own. Under the "Extensions" subkey each file extension is stored as a value name, with the string GUID of your source filter as its data. If the filter works for any extension, a "Source Filter" value containing the GUID is placed directly under the <your protocol> key. Here is the screenshot for the http protocol.
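To make the layout concrete, here is a minimal sketch that only computes the registry entries described above (key path relative to HKCR, value name, value data). The class and method names are hypothetical and this is not how the class library's attribute is implemented; it just mirrors the two cases: one value per extension under `<protocol>\Extensions`, or a single "Source Filter" value directly under the protocol key.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: computes protocol registration entries without
// touching the registry.
public static class ProtocolRegistrationSketch
{
    public static List<(string KeyPath, string ValueName, string Data)> GetEntries(
        string protocol, Guid filterClsid, params string[] extensions)
    {
        // Registry GUID strings use the braced form, e.g. {C55996E5-...}.
        string clsid = filterClsid.ToString("B").ToUpperInvariant();
        var entries = new List<(string KeyPath, string ValueName, string Data)>();
        if (extensions.Length == 0)
        {
            // Filter handles any extension: "Source Filter" under HKCR\<protocol>.
            entries.Add((protocol, "Source Filter", clsid));
        }
        else
        {
            foreach (string ext in extensions)
            {
                // Each extension is a value name under HKCR\<protocol>\Extensions.
                entries.Add((protocol + @"\Extensions", ext, clsid));
            }
        }
        return entries;
    }
}
```

On Windows, each entry could then be written with `Microsoft.Win32.Registry.ClassesRoot.CreateSubKey(KeyPath).SetValue(ValueName, Data)`; keeping the computation separate makes the layout easy to check without administrator rights.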

In the class library I made an attribute that makes this registration easy:

```csharp
[ComVisible(false)]
[AttributeUsage(AttributeTargets.Class, AllowMultiple = true)]
public class RegisterProtocolExtension : Attribute
```

The following constructors are available:

```csharp
public RegisterProtocolExtension(string _protocol)
public RegisterProtocolExtension(string _protocol, string _extension)
```

The attribute can be placed on the filter class or on the parser class.

Registering extension

Extension registration works similarly to protocol registration: the Filter Graph checks the extension of the file, finds the entry in the registry and loads the source filter if one is specified. The extension is registered under the following registry subkey:

HKCR\Media Type\Extensions\<your extension>

<your extension> must include the leading dot, for example ".mp3". Under that subkey the main value, named "Source Filter", contains the filter's GUID. Two other optional GUID values, named "Media Type" and "SubType", may also be present; those values are passed to the filter during the RenderFile call. Here is how it looks:

The class library also handles this with another attribute:

```csharp
[ComVisible(false)]
[AttributeUsage(AttributeTargets.Class, AllowMultiple = true)]
public class RegisterFileExtension : Attribute
```

Its constructors are:

```csharp
public RegisterFileExtension(string _extension)
public RegisterFileExtension(string _extension, string _MediaType, string _SubType)
```

If neither a protocol entry nor an extension entry is found, the Filter Graph uses the "File Source (Async)" filter as the source.
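The lookup order described in this section can be sketched as a small resolver. This is a simplified model under stated assumptions: the dictionaries stand in for the HKCR entries (keys in lowercase), the extension is taken from the last path segment, and any other mechanisms the real Filter Graph Manager may use are ignored. All names are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Simplified model: protocol entry, then extension entry, then File Source (Async).
public static class SourceFilterResolverSketch
{
    public const string FileSourceAsync = "File Source (Async)";

    // Extension of the last path segment, lowercase, including the dot ("" if none).
    static string GetExtension(string url)
    {
        int slash = Math.Max(url.LastIndexOf('/'), url.LastIndexOf('\\'));
        string name = url.Substring(slash + 1);
        int dot = name.LastIndexOf('.');
        return dot >= 0 ? name.Substring(dot).ToLowerInvariant() : "";
    }

    public static string Resolve(
        string url,
        IDictionary<string, IDictionary<string, string>> protocols, // protocol -> (".ext" or "Source Filter") -> filter
        IDictionary<string, string> extensions)                      // ".ext" -> filter
    {
        string ext = GetExtension(url);
        int sep = url.IndexOf("://", StringComparison.Ordinal);
        if (sep > 0)
        {
            string protocol = url.Substring(0, sep).ToLowerInvariant();
            if (protocols.TryGetValue(protocol, out var entries))
            {
                // HKCR\<protocol>\Extensions\<.ext> first, then HKCR\<protocol>\Source Filter.
                if (ext.Length > 0 && entries.TryGetValue(ext, out var byExt)) return byExt;
                if (entries.TryGetValue("Source Filter", out var anyExt)) return anyExt;
            }
        }
        // HKCR\Media Type\Extensions\<.ext>
        if (ext.Length > 0 && extensions.TryGetValue(ext, out var filter)) return filter;
        return FileSourceAsync;
    }
}
```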

Basic Filter Architecture

In the current example we use a different model, in which each pin reads data from the file in its own thread. Each output therefore works asynchronously and delivers samples as soon as possible; it is as if each pin reads its own data from the file.

The black arrows show data being read from the file. This architecture is much simpler than the one in the previous article and easier to implement, because a queue is not required (the current sample does not use one). Output pins are created once the file is loaded. Everything essential for file source filters is the same as described in the previous article, so here we review only some additional features.
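A minimal sketch of the "one reading thread per output pin" model, assuming a hypothetical `ITrackSource` interface in place of the class library's track and pin classes: every track gets its own thread that pulls packets and hands them straight to a delivery callback, with no queue in between.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical stand-in for a track/output pin pair.
public interface ITrackSource
{
    string Name { get; }
    // Returns the next packet, or null at end of stream.
    byte[] GetNextPacket();
}

public static class PerPinThreadsSketch
{
    // Starts one thread per track and waits until all tracks reach end of stream.
    public static void Run(IEnumerable<ITrackSource> tracks, Action<string, byte[]> deliver)
    {
        var threads = new List<Thread>();
        foreach (var track in tracks)
        {
            var t = track;
            var thread = new Thread(() =>
            {
                // Each pin reads its own data and delivers it immediately.
                byte[] packet;
                while ((packet = t.GetNextPacket()) != null)
                {
                    deliver(t.Name, packet);
                }
            });
            threads.Add(thread);
            thread.Start();
        }
        foreach (var thread in threads) thread.Join();
    }
}

// In-memory track used to exercise the sketch.
public sealed class CountingTrack : ITrackSource
{
    private int m_remaining;
    public CountingTrack(string name, int packets) { Name = name; m_remaining = packets; }
    public string Name { get; }
    public byte[] GetNextPacket() => m_remaining-- > 0 ? new byte[] { 1 } : null;
}
```

Because `deliver` is called from several threads at once, anything it touches must be thread-safe; in a real filter that role is played by each pin's own downstream connection.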

Loading the file

A file source filter must implement the IFileSourceFilter interface. It is used to pass a file path, URL or other string to the source filter, optionally together with a media type. The media type is usually set by the Graph Manager for the "File Source (Async)" filter from the registry entries described above; for "File Source (Async)" that type appears on the output pin. If we use our own file source, passing a media type is not necessary. Once the user calls Load, the filter checks the given file and decides whether it can handle it. If it can't, it should return an error; otherwise it loads the tracks and initializes the output pins. Validation is called twice: on the first call the BitStreamReader object in the parser is not initialized (null), which covers the case where you handle the file directly in the parser; if the first call fails, the base class initializes the reader object and calls validation a second time.
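The two-pass validation can be sketched as follows. The names (`ParserSketch`, `CheckFile`, `Reader`, `createReader`) are hypothetical and the real base class in BaseClasses.NET may be structured differently; the sketch only shows the order of the two calls.

```csharp
using System;

public abstract class ParserSketch
{
    // Stand-in for the parser's BitStreamReader; null on the first pass.
    public object Reader { get; private set; }

    // Returns true if the parser can handle the file.
    protected abstract bool CheckFile(string fileName);

    public bool Load(string fileName, Func<string, object> createReader)
    {
        // Pass 1: no reader yet, so the parser may open the file itself
        // (the AVI parser does this with AVIFileOpen).
        Reader = null;
        if (CheckFile(fileName)) return true;
        // Pass 2: the base class creates the reader and asks again.
        Reader = createReader(fileName);
        return CheckFile(fileName);
    }
}

// Example parser that only accepts the file once a reader exists.
public sealed class ReaderOnlyParser : ParserSketch
{
    public int Calls { get; private set; }

    protected override bool CheckFile(string fileName)
    {
        Calls++;
        return Reader != null;
    }
}
```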

Opening progress

Additionally, source filters can implement the IAMOpenProgress interface (it is mostly implemented by file source filters). Applications can use this interface to check the progress of an open operation. For example, if media is opened from the network, or opening the file otherwise takes time, the application can query the progress from a separate thread and/or abort the operation. The class library has only a brief implementation of this interface; modify it for your own needs.
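As a sketch of what such an implementation has to coordinate, here is a thread-safe progress object shaped after the two IAMOpenProgress methods (QueryProgress and AbortOperation). It is a hypothetical helper, not the class library's implementation and not a COM interface: the opening code reports progress and polls for an abort request, while the application queries or aborts from another thread.

```csharp
using System;
using System.Threading;

public sealed class OpenProgressSketch
{
    private long m_total;
    private long m_current;
    private int m_aborted; // 0 = running, 1 = abort requested

    // Called by the opening code.
    public void Report(long current, long total)
    {
        Interlocked.Exchange(ref m_total, total);
        Interlocked.Exchange(ref m_current, current);
    }

    // Mirrors IAMOpenProgress::QueryProgress.
    public void QueryProgress(out long total, out long current)
    {
        total = Interlocked.Read(ref m_total);
        current = Interlocked.Read(ref m_current);
    }

    // Mirrors IAMOpenProgress::AbortOperation.
    public void AbortOperation()
    {
        Interlocked.Exchange(ref m_aborted, 1);
    }

    public bool IsAborted => Volatile.Read(ref m_aborted) == 1;
}

public static class OpenWithProgressSketch
{
    // Simulated open: processes 'chunks' steps, stopping early on abort.
    // Returns the number of chunks processed.
    public static int Open(OpenProgressSketch progress, int chunks)
    {
        for (int i = 0; i < chunks; i++)
        {
            if (progress.IsAborted) return i;
            // ... read or parse one chunk of the file here ...
            progress.Report(i + 1, chunks);
        }
        return chunks;
    }
}
```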

Implementing stream selection

Stream selection allows an application to enable or disable a specific audio output when a file contains several audio tracks. The mechanism is available through the IAMStreamSelect interface, which lets an application retrieve audio track information and the number of available tracks, and enable or disable tracks for playback. In the class library BaseSplitter implements this interface, so it is supported automatically and can be used by applications. In track implementations you can specify the name of an audio track and its LCID, which is used to display the track's language. Most player applications support this interface. The tricky part of enabling or disabling a track is that we need to seek a short distance backward and then forward again. This is required because the pin currently selected for seeking may have been disabled, and the filter graph has to reselect it.

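```csharp
if (IsActive && dwFlags != AMSTREAMSELECTENABLEFLAGS.DISABLE)
{
    try
    {
        // "as" yields null instead of throwing if the graph does not expose IMediaSeeking.
        IMediaSeeking _seeking = FilterGraph as IMediaSeeking;
        if (_seeking != null)
        {
            long _current;
            _seeking.GetCurrentPosition(out _current);
            // Seek 100 ms back (never before the start), then return to the
            // original position so the graph reselects the seeking pin.
            long _nudged = Math.Max(0, _current - UNITS / 10);
            _seeking.SetPositions(_nudged, AMSeekingSeekingFlags.AbsolutePositioning, null, AMSeekingSeekingFlags.NoPositioning);
            _seeking.SetPositions(_current, AMSeekingSeekingFlags.AbsolutePositioning, null, AMSeekingSeekingFlags.NoPositioning);
        }
    }
    catch
    {
        // Best effort: a failed re-seek must not fail the stream selection itself.
    }
}
```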
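The enable flags themselves follow simple group rules: disable one stream, enable only this stream within its group, or enable every stream in its group. Here is a sketch of those rules on an in-memory track list, assuming a hypothetical `StreamSelectTrack` type; the flag values mirror AMSTREAMSELECTENABLEFLAGS (0 = disable, 1 = enable, 2 = enable all), and the group number is whatever the filter assigns (for example, all audio tracks in one group).

```csharp
using System;
using System.Collections.Generic;

public enum SelectFlags { Disable = 0, Enable = 1, EnableAll = 2 }

// Hypothetical track descriptor.
public sealed class StreamSelectTrack
{
    public StreamSelectTrack(string name, int group) { Name = name; Group = group; }
    public string Name { get; }
    public int Group { get; }
    public bool Enabled { get; set; }
}

public static class StreamSelectSketch
{
    // Returns false for an invalid index (E_INVALIDARG in COM terms).
    public static bool Enable(IList<StreamSelectTrack> tracks, int index, SelectFlags flags)
    {
        if (index < 0 || index >= tracks.Count) return false;
        int group = tracks[index].Group;
        switch (flags)
        {
            case SelectFlags.Disable:
                tracks[index].Enabled = false;
                break;
            case SelectFlags.Enable:
                // Enable only this stream within its group, disable the others.
                for (int i = 0; i < tracks.Count; i++)
                    if (tracks[i].Group == group) tracks[i].Enabled = (i == index);
                break;
            case SelectFlags.EnableAll:
                // Enable every stream in the group of this stream.
                for (int i = 0; i < tracks.Count; i++)
                    if (tracks[i].Group == group) tracks[i].Enabled = true;
                break;
        }
        // A real filter would issue the small backward/forward seek here
        // when a stream was enabled.
        return true;
    }
}
```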

Here is how stream selection looks for our example in the GraphStudio application:

AVI Source Filter Implementation

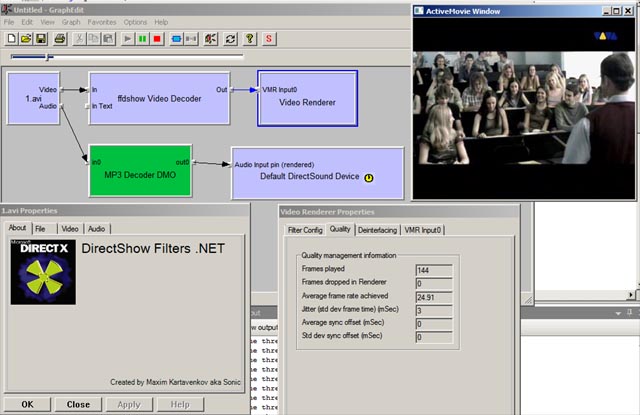

With the model described above we can easily implement an AVI source filter. We use the VFW API, as it is sufficient for our sample. AVI has an index table, so samples in a track can be accessed very quickly and from separate threads, which means our model works well. Here is how our filter looks:

```csharp
[ComVisible(true)]
[Guid("C55996E5-89C1-4a03-93FB-2985EC8CBC0E")]
[AMovieSetup(true)]
[PropPageSetup(typeof(AboutForm))]
[RegisterFileExtension(".avx")]
public class AVISourceFilter : BaseSourceFilterTemplate<AVIParser>
{
    public AVISourceFilter()
        : base("CSharp AVI Source")
    {
    }
}
```

I added the attribute that registers a file extension and associates it with our filter, but instead of ".avi" I specified ".avx". So if you change the extension of an AVI file to ".avx" and render it in GraphEdit, or play it in any other application, our filter will be used. As in the previous article, we concentrate on implementing support for the file format.

Implementing parser

Since the current implementation does not use a common demultiplexing thread and each track works on its own, the parser only checks the file and initializes the tracks. Reading packets, as well as seeking, is performed directly in the tracks.

Opening the file

As already mentioned, we use the IFileSourceFilter interface to load the file. All the work is done in the base class; in the parser we only have to override the same method as before, CheckFile of the base parser class. In that method we check a file, instead of a stream as in the previous article.

```csharp
protected override HRESULT CheckFile()
{
    if (String.IsNullOrEmpty(m_sFileName)) return E_INVALIDARG;
    return (HRESULT)AVIFileOpen(out m_pAviFile, m_sFileName, AVIOpenFlags.Read, null);
}
```

This is very simple: if the AVIFileOpen API succeeds we can play the file, otherwise we can't. Note: we must check whether the m_sFileName variable is initialized, because initialization can be performed twice, in different ways.

Loading tracks

The next step after the file is checked is loading the tracks. The AVI file handle was initialized by the call to CheckFile. We use the AVIFileInfo API to get information about the file, including the number of streams, and then create a track for each stream obtained with AVIFileGetStream.

```csharp
AVIFILEINFO _info = new AVIFILEINFO();
hr = (HRESULT)AVIFileInfo(m_pAviFile, _info, Marshal.SizeOf(_info));
if (hr.Failed) return hr;
if (_info.dwStreams == 0)
{
    return E_UNEXPECTED;
}
for (int i = 0; i < (int)_info.dwStreams; i++)
{
    IntPtr _stream;
    // fccType = 0: take the i-th stream regardless of its type.
    if (S_OK == AVIFileGetStream(m_pAviFile, out _stream, 0, i))
    {
        AVITrack _track = new AVITrack(this, _stream);
        if (S_OK == _track.InitTrack())
        {
            m_Tracks.Add(_track);
        }
        else
        {
            // Streams that fail to initialize are dropped from playback.
            _track.Dispose();
            _track = null;
        }
    }
}
```

As in the previous example, we put the additional initialization of the track into its own method. Here we only check the result and drop the stream from playback if initialization fails. Additionally, we calculate the duration of the media:

```csharp
m_rtDuration = 0;
if (_info.dwRate != 0)
{
    // dwLength is in dwRate/dwScale units; convert it to 100-ns reference time.
    m_rtDuration = (long)((double)UNITS * _info.dwLength * _info.dwScale / _info.dwRate);
}
```
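The conversion is worth spelling out: AVI lengths are counted in units of dwRate/dwScale per second, so the length in seconds is length × scale / rate, and multiplying by UNITS (10,000,000 per second) gives DirectShow reference time. A small sketch of that arithmetic, with a hypothetical class name, using decimal so that the intermediate product cannot overflow:

```csharp
using System;

public static class AviTimeSketch
{
    // 100-ns units per second (DirectShow reference time).
    public const long UNITS = 10000000;

    // length is expressed in units of rate/scale per second.
    public static long ToReferenceTime(uint length, uint scale, uint rate)
    {
        if (rate == 0) return 0;
        // Multiply before dividing; decimal keeps UNITS * length * scale exact.
        return (long)((decimal)UNITS * length * scale / rate);
    }
}
```

For example, 1500 frames at 25 fps (scale 1, rate 25) give 600,000,000 units, which is 60 seconds. Without the UNITS factor the result would be 60, which DirectShow would interpret as 6 microseconds.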

Implementing track

In the current implementation most of the functionality is located in the track. Each track performs the following tasks:

- Initialization

- Providing the media type for the connection

- Seeking

- Reading data from the file and preparing packets.

Track initialization

In the additional track initialization we prepare information about the duration of the track. We also query the recommended buffer size. Based on the stream type FOURCC we initialize the output type: audio or video.

```csharp
if (m_pAviStream == IntPtr.Zero) return E_UNEXPECTED;
AVISTREAMINFO _info = new AVISTREAMINFO();
HRESULT hr = (HRESULT)AVIStreamInfo(m_pAviStream, _info, Marshal.SizeOf(_info));
if (hr.Failed) return hr;
m_iBufferSize = (int)_info.dwSuggestedBufferSize;
m_nCurrentSample = AVIStreamStart(m_pAviStream);
if (_info.dwRate != 0)
{
    m_rtOffset = (long)((double)(UNITS * _info.dwStart * _info.dwScale) / _info.dwRate);
    m_rtDuration = (long)((double)(UNITS * _info.dwLength * _info.dwScale) / _info.dwRate);
}
if (_info.fccType == streamtypeVIDEO)
{
    m_Type = TrackType.Video;
}
if (_info.fccType == streamtypeAUDIO)
{
    m_Type = TrackType.Audio;
}
if ((int)(_info.dwFlags & AVIStreamInfoFlags.DISABLED) != 0)
{
    m_bEnabled = false;
}
if (_info.wLanguage != 0)
{
    m_lcid = MAKELCID(MAKELANGID(_info.wLanguage, SUBLANG_NEUTRAL), SORT_DEFAULT);
}
```
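For reference, the LCID helpers used above follow the Windows SDK macro definitions: MAKELANGID(p, s) is (s << 10) | p, and MAKELCID(lgid, srtid) is (srtid << 16) | lgid. A sketch of the same arithmetic in C# (the class name is hypothetical; SUBLANG_NEUTRAL and SORT_DEFAULT are both 0):

```csharp
using System;

public static class LcidSketch
{
    public const int SUBLANG_NEUTRAL = 0x00;
    public const int SORT_DEFAULT = 0x0;

    // Sublanguage in bits 10..15, primary language in bits 0..9.
    public static int MAKELANGID(int primary, int sub) => (sub << 10) | primary;

    // Sort ID in bits 16..19, language ID in the low word.
    public static int MAKELCID(int langId, int sortId) => (sortId << 16) | langId;
}
```

With neutral sublanguage and default sort order the LCID is numerically equal to the primary language ID, for example 0x09 (English) stays 0x0009.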

I decided to initialize the output media type here as well, so that the VFW API does not have to be called every time.

```csharp
int cbFormat = 0;
hr = (HRESULT)AVIStreamReadFormat(m_pAviStream, _info.dwStart, IntPtr.Zero, ref cbFormat);
if (hr.Failed) return hr;
IntPtr pFormat = Marshal.AllocCoTaskMem(cbFormat);
try
{
    hr = (HRESULT)AVIStreamReadFormat(m_pAviStream, _info.dwStart, pFormat, ref cbFormat);
    if (hr.Succeeded)
    {
        if (m_Type == TrackType.Audio)
        {
            m_mt.majorType = MediaType.Audio;
            WaveFormatEx _wfx = (WaveFormatEx)Marshal.PtrToStructure(pFormat, typeof(WaveFormatEx));
            m_mt.SetFormat(pFormat, cbFormat);
            m_mt.formatType = FormatType.WaveEx;
            m_mt.sampleSize = _wfx.nBlockAlign;
            m_mt.subType = new FOURCC(_wfx.wFormatTag);
            if (m_iBufferSize < _wfx.nAvgBytesPerSec)
            {
                m_iBufferSize = _wfx.nAvgBytesPerSec;
            }
            if (m_iBufferSize < _wfx.nSamplesPerSec)
            {
                m_iBufferSize = _wfx.nSamplesPerSec << 3;
            }
        }
        if (m_Type == TrackType.Video)
        {
            m_mt.majorType = MediaType.Video;
            VideoInfoHeader _vih = new VideoInfoHeader();
            _vih.BmiHeader = (BitmapInfoHeader)Marshal.PtrToStructure(pFormat, typeof(BitmapInfoHeader));
            _vih.SrcRect.bottom = _vih.BmiHeader.Height;
            _vih.TargetRect.bottom = _vih.BmiHeader.Height;
            _vih.SrcRect.right = _vih.BmiHeader.Width;
            _vih.TargetRect.right = _vih.BmiHeader.Width;
            if (_info.dwRate != 0)
            {
                _vih.AvgTimePerFrame = UNITS * _info.dwScale / _info.dwRate;
            }
            else
            {
                _vih.AvgTimePerFrame = 1;
            }
            if (_vih.BmiHeader.ImageSize == 0)
            {
                _vih.BmiHeader.ImageSize = _vih.BmiHeader.GetBitmapSize();
            }
            if (_vih.BmiHeader.Compression == 0)
            {
                // BI_RGB: the subtype GUID is chosen from the bit depth.
                switch (_vih.BmiHeader.BitCount)
                {
                    case 1: m_mt.subType = MediaSubType.RGB1; break;
                    case 4: m_mt.subType = MediaSubType.RGB4; break;
                    case 8: m_mt.subType = MediaSubType.RGB8; break;
                    // 16-bit BI_RGB bitmaps are 5-5-5; 5-6-5 requires BI_BITFIELDS.
                    case 16: m_mt.subType = MediaSubType.RGB555; break;
                    case 32: m_mt.subType = MediaSubType.RGB32; break;
                    default:
                    case 24: m_mt.subType = MediaSubType.RGB24; break;
                }
            }
            else
            {
                m_mt.fixedSizeSamples = false;
                m_mt.subType = new FOURCC(_vih.BmiHeader.Compression);
            }
            m_mt.SetFormat(_vih);
            // Decoder extradata, if present, follows the BITMAPINFOHEADER.
            int nExtraSize = cbFormat - Marshal.SizeOf(_vih.BmiHeader);
            if (nExtraSize > 0)
            {
                // ToInt64 keeps the pointer arithmetic valid in 64-bit processes.
                IntPtr _ptr = new IntPtr(pFormat.ToInt64() + Marshal.SizeOf(_vih.BmiHeader));
                m_mt.AddFormatExtraData(_ptr, nExtraSize);
            }
            m_mt.sampleSize = _vih.BmiHeader.ImageSize;
            if (m_iBufferSize < _vih.BmiHeader.ImageSize)
            {
                m_iBufferSize = _vih.BmiHeader.ImageSize;
            }
        }
    }
}
finally
{
    // Release the format buffer even if parsing throws.
    Marshal.FreeCoTaskMem(pFormat);
}
```

We read the BitmapInfoHeader structure for video, or the WaveFormatEx structure for audio, by calling AVIStreamReadFormat, and prepare the complete media type from it. Audio type initialization is fairly simple. For video, however, we must handle RGB color spaces: an AVI file can contain uncompressed RGB video, and in that case the compression value (BI_RGB, which is 0) does not map to the subtype GUID, so the subtype is chosen from the bit count. The other important point is that decoder extradata may be stored after the BitmapInfoHeader structure; we have to copy it into the media type separately, because we use a VideoInfoHeader structure as the format.
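For compressed streams, `new FOURCC(...)` produces the usual DirectShow subtype: the 32-bit code (a video FOURCC or an audio WAVE format tag) placed in the first field of the base GUID XXXXXXXX-0000-0010-8000-00AA00389B71. The sketch below reproduces that mapping with `System.Guid`; the class name is hypothetical and this is not the class library's FOURCC type.

```csharp
using System;

public static class FourCCSketch
{
    // Packs a 4-character code into a little-endian DWORD (first char = lowest byte).
    public static uint FromString(string code)
    {
        if (code == null || code.Length != 4)
            throw new ArgumentException("A FOURCC must have exactly 4 characters.", nameof(code));
        return (uint)code[0] | ((uint)code[1] << 8) | ((uint)code[2] << 16) | ((uint)code[3] << 24);
    }

    // Builds the subtype GUID XXXXXXXX-0000-0010-8000-00AA00389B71.
    public static Guid ToSubtype(uint fourcc) =>
        new Guid(fourcc, 0x0000, 0x0010, 0x80, 0x00, 0x00, 0xAA, 0x00, 0x38, 0x9B, 0x71);
}
```

Applied to BI_RGB (0), this would produce 00000000-0000-0010-8000-00AA00389B71, which is not any of the RGB subtypes; that is why the code above selects RGB subtypes from the bit count instead.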

Implementing GetMediaType

Here we simply return a copy of the AMMediaType structure that we saved during track initialization.

```csharp
public override HRESULT GetMediaType(int iPosition, ref AMMediaType pmt)
{
    if (iPosition < 0) return E_INVALIDARG;
    if (iPosition > 0) return VFW_S_NO_MORE_ITEMS;
    pmt.Set(m_mt);
    return NOERROR;
}
```

Seeking

Seeking has to be implemented in each track rather than in the parser; the different model we use also requires this. In the base parser each track receives the seek notification, and all threads are known to be stopped while that method is called. With VFW, media data in an AVI file is read by sample index, so we keep a variable that stores the index of the current sample. Since seeking is performed by time, we use AVIStreamTimeToSample to convert the time (in milliseconds, hence the division of the 100-ns value by 10000) to a sample index.

```csharp
public override HRESULT SeekTrack(long _time)
{
    if (_time <= 0)
    {
        m_nCurrentSample = AVIStreamStart(m_pAviStream);
    }
    else
    {
        m_nCurrentSample = AVIStreamTimeToSample(m_pAviStream, (int)(_time / 10000));
        // Move back to the nearest key frame. AVIStreamFindSample returns -1
        // when nothing is found; 0 is a valid key frame index.
        int _temp = AVIStreamFindSample(m_pAviStream, m_nCurrentSample, AVIStreamFindFlags.FIND_PREV | AVIStreamFindFlags.FIND_KEY);
        if (_temp != -1)
        {
            m_nCurrentSample = _temp;
        }
        long _current = AVIStreamSampleToTime(m_pAviStream, m_nCurrentSample);
        if (_current != -1)
        {
            _current *= 10000;
            _time = _current;
        }
    }
    return base.SeekTrack(_time);
}
```

After seeking, playback must always start from a key frame, so we adjust the resulting sample index with the AVIStreamFindSample API.
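The seek logic can be checked without VFW by simulating it on an in-memory key-frame table. This sketch assumes a constant frame rate and uses plain integer arithmetic for the time/sample conversions; `FindPrevKey` plays the role of AVIStreamFindSample with FIND_PREV | FIND_KEY (returning -1 when nothing is found), and all names are hypothetical.

```csharp
using System;

public static class KeyFrameSeekSketch
{
    // Nearest key frame at or before 'sample', or -1 if there is none.
    public static int FindPrevKey(bool[] isKey, int sample)
    {
        for (int i = Math.Min(sample, isKey.Length - 1); i >= 0; i--)
            if (isKey[i]) return i;
        return -1;
    }

    // Returns the sample to start from and the adjusted time in 100-ns units.
    public static (int Sample, long Time) Seek(bool[] isKey, int scale, int rate, long time100ns)
    {
        if (rate <= 0 || scale <= 0) throw new ArgumentOutOfRangeException(nameof(rate));
        if (time100ns <= 0) return (0, 0);
        long ms = time100ns / 10000;                          // 100 ns -> ms
        int sample = (int)(ms * rate / (1000L * scale));      // time -> sample index
        int key = FindPrevKey(isKey, sample);
        if (key != -1) sample = key;                          // 0 is a valid key frame
        long startMs = (long)sample * scale * 1000 / rate;    // sample -> ms
        return (sample, startMs * 10000);                     // ms -> 100 ns
    }
}
```

With a key frame every 10 frames at 25 fps, seeking to 1.5 s lands on frame 37, moves back to key frame 30 and reports 1.2 s; seeking to 0.2 s lands on key frame 0, which a check against 0 instead of -1 would wrongly ignore.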

Reading data and preparing packets

Preparing packets and delivering them is much simpler than in the previous example, because here we simply read the data and deliver it without putting packets into a queue. So we override the GetNextPacket method, which in the base implementation extracts the next packet to deliver from the queue.

```csharp
public override PacketData GetNextPacket()
{
    PacketData _data = new PacketData();
    _data.Buffer = new byte[m_iBufferSize];
    int nSamples = 0;
    // -1 (AVISTREAMREAD_CONVENIENT) lets VFW read as many samples as fit in the buffer.
    HRESULT hr = (HRESULT)AVIStreamRead(m_pAviStream, m_nCurrentSample, -1, _data.Buffer, m_iBufferSize, out _data.Size, out nSamples);
    // A failed read, or a read that returns no samples, ends the stream.
    if (hr.Failed || nSamples == 0) return null;
    int _temp = AVIStreamFindSample(m_pAviStream, m_nCurrentSample, AVIStreamFindFlags.FIND_NEXT | AVIStreamFindFlags.FIND_KEY);
    if (_temp != -1 && _temp < m_nCurrentSample + nSamples)
    {
        _data.SyncPoint = true;
    }
    _data.Start = AVIStreamSampleToTime(m_pAviStream, m_nCurrentSample);
    if (_data.Start != -1) _data.Start *= 10000;
    m_nCurrentSample += nSamples;
    _data.Stop = AVIStreamSampleToTime(m_pAviStream, m_nCurrentSample);
    if (_data.Stop != -1) _data.Stop *= 10000;
    return _data;
}
```

We use the AVIStreamRead API to read the data, passing the current sample index and the prepared buffer. Afterwards we advance the current sample index by the number of samples read. The most important thing is to set the key frame flag correctly, as this is essential when delivering compressed data. For that we use the same API as for seeking: AVIStreamFindSample.
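The packet bookkeeping can likewise be modelled in memory. In this sketch (hypothetical names, constant rate assumed) timestamps are derived from sample indexes at millisecond resolution, like AVIStreamSampleToTime, and then scaled to 100-ns units; a packet is marked as a sync point when the next key frame at or after its first sample falls inside the samples it contains, which is the rule used in GetNextPacket.

```csharp
using System;

public static class PacketTimingSketch
{
    // Nearest key frame at or after 'sample', or -1 if there is none.
    public static int FindNextKey(bool[] isKey, int sample)
    {
        for (int i = Math.Max(sample, 0); i < isKey.Length; i++)
            if (isKey[i]) return i;
        return -1;
    }

    // Sample index -> ms (truncated) -> 100-ns units.
    public static long SampleToTime100ns(int sample, int scale, int rate) =>
        (long)sample * scale * 1000 / rate * 10000;

    // Start/stop times and sync flag for a packet of 'count' samples starting at 'first'.
    public static (long Start, long Stop, bool SyncPoint) Describe(bool[] isKey, int first, int count, int scale, int rate)
    {
        int key = FindNextKey(isKey, first);
        bool sync = key != -1 && key < first + count;
        return (SampleToTime100ns(first, scale, rate), SampleToTime100ns(first + count, scale, rate), sync);
    }
}
```

Note the millisecond granularity: at 29.97 fps (scale 1001, rate 30000) the first frame boundary comes out as 330,000 rather than roughly 333,667 units, so timestamps derived this way carry up to 1 ms of rounding error.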

Filter notes

The resulting filter reads AVI files and delivers data over each output pin in its own thread. It shows how easy it is to make this type of filter and how to use a model without a common demultiplexing thread. Note: this implementation does not mean that all file source filters work the same way; most of them use the model described in the previous article, and this is just an example of another approach. You can try modifying the filter to deliver uncompressed samples.

History

13-10-2012 Initial Version.