Introduction

This article makes an experimental comparison on the performance of the different methods to pass structured data to SQL Server Stored Procedures from ADO.NET.

Background

It is very common that programmers will want to pass some structured data to Stored Procedures from ADO.NET. For example, if a client application needs to update the scores for thousands of students, it is better to bundle the information in some structured way and pass it to a SQL Server Stored Procedure parameter and let the Stored Procedure process the information in a batch. Compared with calling a Stored Procedure thousands of times, this approach has at least two clear advantages:

- Reduce network traffic and therefore improve performance.

- By processing the information together in the Stored Procedure, it is much easier to implement the transaction in the Stored Procedure to make sure the updates either succeed or fail for all.

Although it is possible to implement transactions using ADO.NET, when passing the information to SQL Server on a record by record basis, some database objects may be locked by the long going transaction and other users' access to these objects is blocked. By bundling the records and sending them to the Stored Procedure in a structured way, the Stored Procedure can preprocess the data in a form that is best suited for the database update and therefore reduce the time needed to lock the database objects.

The subject of this article has been long discussed by programmers. A CodeProject article: Passing an array or DataTable into a Stored Procedure gave a very good introduction on it. The performance comparison between passing the information in an array and calling the Stored Procedure multiple times clearly favors the batch processing method.

With the introduction of the XML data type in SQL Server 2005 and the introduction of the user defined Table data type in SQL Server 2008, we have more options to pass structured data to SQL Server. In general, we have at least three methods:

- In the good old days, we passed structured data to Stored Procedures as

Text or VARCAHR by separating pieces of information with special characters. - Passing the information in XML format.

- Passing the information as Table Valued Parameters.

This is no doubt that the above three methods all have significant performance advantages over passing information on a record by record basis, but what is the performance comparison among these methods themselves? This article is intended to answer this question by an experiment, and the result shows an impressive performance difference.

To make the comparison practically meaningful, the time measured for the comparison includes the following:

- The time to prepare structured data to a form suitable for passing to the Stored Procedure in the client program.

- The time spent to upload the data to SQL Server as a Stored Procedure parameter.

- The time spent by the SQL Server Stored Procedure to process data to a form that is easy to be used by the Stored Procedure. In this experiment, it is the time to process the data into a temporary table or some equivalent data type.

In this experiment, the Stored Procedures will return the data uploaded to the client program in exactly the same way. This time is also included in the measurement for a more pleasing user experience when conducting the experiment. Since all the Stored Procedures return the same data, the time spent should be similar and should not change the result of the experiment.

This article includes the complete C# code and SQL scripts to setup the test. Interested readers can download the code and set up the environment to repeat the test themselves. If you are not familiar with how to pass structured data to SQL Server Stored Procedures, the code that comes with this article can also serve as a good reference. The client C# code is written in .NET 3.5 in Visual Studio 2008, and the SQL Server version is 2008.

The Data to Pass to SQL Server Stored Procedures

To make the comparison, we need to have some test data to pass to the SQL Server Stored Procedures. I choose the test data based on two criteria:

- All the three methods will be using exactly the same set of data.

- The data volume needs to be large enough so the performance difference is visible.

A singleton class is created to store the data to pass to the Stored Procedure so it will not be changed during the experiment.

using System;

using System.Data;

namespace SQLSPPerformanceComparison

{

class DataSourceToUpload : DataTable

{

private static DataSourceToUpload _thisInstance = null;

private DataSourceToUpload() : base()

{

int NoOfRowsToSend =

System.Convert.ToInt32(

System.Configuration.ConfigurationSettings.AppSettings[

"NoOfRowsOfDataInTheTest"]);

Columns.Add("ID", System.Type.GetType("System.Int32"));

Columns.Add("Name", System.Type.GetType("System.String"));

Columns.Add("Score", System.Type.GetType("System.Int32"));

Random rd = new Random();

for (int Idex = 1; Idex <= NoOfRowsToSend; Idex++)

{

DataRow row = NewRow();

Rows.Add(row);

row["ID"] = Idex;

row["Name"] = "Student Name No. " + Idex.ToString();

row["Score"] = 60 + rd.NextDouble() * 40;

}

}

public static DataSourceToUpload GetInstance()

{

if (_thisInstance == null)

{

_thisInstance = new DataSourceToUpload();

}

return _thisInstance;

}

}

}

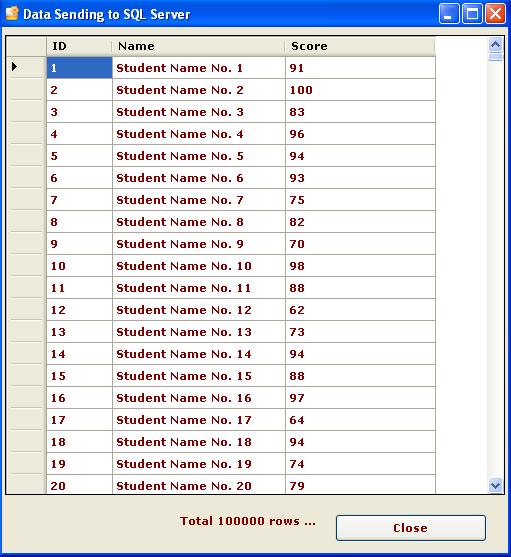

This class represents a DataTable. The DataTable is filled with dummy test data simulating a list of students with ID, Name, and Score. This class reads from the "App.config" file for the number of rows to fill in the DataTable. In my configuration, the DataTable is 100000 rows. The following picture shows the top 20 rows of the DataTable.

The ADO.NET Utility

All the SQL Server connections in this experiment go through the following utility class:

using System;

using System.Data;

using System.Data.SqlClient;

namespace SQLSPPerformanceComparison

{

class SQLServerUtility

{

public static DataTable GetADataTable(SqlCommand cmd)

{

SqlConnection connection = new SqlConnection();

connection.ConnectionString =

System.Configuration.ConfigurationSettings.AppSettings[

"SQLServerConnectionString"];

cmd.Connection = connection;

cmd.CommandTimeout = 0;

connection.Open();

DataTable aTable = new DataTable();

SqlDataAdapter adapter = new SqlDataAdapter();

adapter.SelectCommand = cmd;

adapter.Fill(aTable);

return aTable;

}

}

}

The public, static method "GetADataTable" obtains the connection string from the "App.config" file. It takes a SqlCommand input and calls SQL Server to obtain a DataTable. For each method in the comparison, we will prepare a SqlCommand object and use this method to send the data to SQL Server.

Passing Data as Text Separated by Special Characters

The following code is used for testing passing data as Text to the Stored Procedure. Each data item is separated by a special character. This method of communicating with Stored Procedures has been widely used in the good old days for the last couple of years. The choice of separating characters in this article is different from most articles introducing this technique. To avoid the need to escape the data content passing to the Stored Procedure, two ASCII codes, 0 and 1, are used as the separators. These two ASCII codes are not printable, so they will not be confused with any string or integer content in the data.

private DataTable TestWithTextType()

{

char RowSeparator = System.Convert.ToChar(0);

char ItemSeparator = System.Convert.ToChar(1);

StringBuilder SB = new StringBuilder();

foreach (DataRow row in DataToUpload.Rows)

{

int ID = System.Convert.ToInt32(row["ID"]);

string Name = System.Convert.ToString(row["Name"]);

int Score = System.Convert.ToInt32(row["Score"]);

SB.Append(ID.ToString());

SB.Append(ItemSeparator);

SB.Append(Name);

SB.Append(ItemSeparator);

SB.Append(Score.ToString());

SB.Append(RowSeparator);

}

SqlCommand cmd = new SqlCommand();

cmd.CommandText = "UploadByTextType";

cmd.Parameters.Add("@DataInTextType",

SqlDbType.Text).Value = SB.ToString();

cmd.CommandType = CommandType.StoredProcedure;

DataTable aTable = SQLServerUtility.GetADataTable(cmd);

return aTable;

}

The data to upload comes from the global variable "DataToUpload" which references the single instance of the class "DataSourceToUpload" mentioned earlier. The Stored Procedure that receives the data is generated by the following script:

IF EXISTS (SELECT * FROM sys.objects WHERE

object_id = OBJECT_ID(N'[dbo].[UploadByTextType]') AND

type in (N'P', N'PC'))

DROP PROCEDURE [dbo].[UploadByTextType];

GO

CREATE PROCEDURE [dbo].[UploadByTextType]

@DataInTextType AS TEXT

AS

BEGIN

DECLARE @T AS TABLE([ID] INT, [Name] VARCHAR(250), [Score] INT)

DECLARE @RowSeparator AS INT; DECLARE @ItemSeparator AS INT;

DECLARE @P AS INT

DECLARE @DataLength AS INT

DECLARE @Item AS INT

DECLARE @C AS VARCHAR(1)

DECLARE @ID AS VARCHAR(20)

DECLARE @Name AS VARCHAR(250)

DECLARE @Score AS VARCHAR(20)

SET @RowSeparator = 0; SET @ItemSeparator = 1

SET @P = 1; SET @Item = 1; SET @ID = ''; SET @Name = ''; SET @Score = ''

SET @DataLength = DATALENGTH(ISNULL(@DataInTextType, ''));

WHILE @P <= @DataLength

BEGIN

SET @C = SUBSTRING(@DataInTextType, @P, 1)

IF ASCII(@C) = @RowSeparator BEGIN

INSERT INTO @T VALUES(CAST(@ID AS INT), @Name, CAST(@Score AS INT));

SET @Item = 1; SET @ID = ''; SET @Name = ''; SET @Score = '';

END ELSE

BEGIN

IF @Item = 1

BEGIN IF ASCII(@C) = @ItemSeparator BEGIN SET @Item = @Item + 1 END

ELSE BEGIN SET @ID = @ID + @C END END

ELSE IF @Item = 2

BEGIN IF ASCII(@C) = @ItemSeparator BEGIN SET @Item = @Item + 1 END

ELSE BEGIN SET @Name = @Name + @C END END

ELSE IF @Item = 3

BEGIN IF ASCII(@C) = @ItemSeparator BEGIN SET @Item = @Item + 1 END

ELSE BEGIN SET @Score = @Score + @C END END

END

SET @P = @P + 1

END;

SELECT * FROM @T

END;

GO

This Stored Procedure parses the data from the ADO.NET client and restores it into a temporary table, and returns it to the calling ADO.NET client.

Passing Data in XML Format

With the introduction of the XML type in SQL Server 2005, we can send structured data to Stored Procedures as XML. The following is the C# code at the client side:

private DataTable TestWithXMLType()

{

System.Text.UTF8Encoding AEncoder = new UTF8Encoding();

System.IO.MemoryStream ms = new System.IO.MemoryStream();

System.Xml.XmlTextWriter tw =

new System.Xml.XmlTextWriter(ms, new System.Text.ASCIIEncoding());

tw.WriteStartDocument();

tw.WriteStartElement("DATAS");

foreach (DataRow row in DataToUpload.Rows)

{

int ID = System.Convert.ToInt32(row["ID"]);

string Name = System.Convert.ToString(row["Name"]);

int Score = System.Convert.ToInt32(row["Score"]);

tw.WriteStartElement("DATA");

tw.WriteElementString("ID", ID.ToString());

tw.WriteElementString("Name", Name);

tw.WriteElementString("Score", Score.ToString());

tw.WriteEndElement();

}

tw.WriteFullEndElement();

tw.WriteEndDocument();

tw.Flush();

tw.Close();

string strXML = AEncoder.GetString(ms.ToArray());

SqlCommand cmd = new SqlCommand();

cmd.CommandText = "UploadByXMLType";

cmd.Parameters.Add("@DataInXMLType", SqlDbType.Text).Value = strXML;

cmd.CommandType = CommandType.StoredProcedure;

DataTable aTable = SQLServerUtility.GetADataTable(cmd);

return aTable;

}

The Stored Procedure is generated by the following script:

IF EXISTS (SELECT * FROM sys.objects WHERE

object_id = OBJECT_ID(N'[dbo].[UploadByXMLType]') AND type in (N'P', N'PC'))

DROP PROCEDURE [dbo].[UploadByXMLType];

GO

CREATE PROCEDURE [dbo].[UploadByXMLType]

@DataInXMLType AS TEXT

AS

BEGIN

DECLARE @I int

DECLARE @XML AS XML

SET @XML = CAST(@DataInXMLType AS XML)

EXEC sp_xml_preparedocument @I OUTPUT, @XML

SELECT *

FROM OPENXML (@I, '/DATAS/DATA', 1) WITH

([ID] INT 'ID', [Name] VARCHAR(250) 'Name', [Score] INT 'Score')

EXEC sp_xml_removedocument @I

END;

GO

Passing Data as Table Valued Parameters

With the introduction of Table Valued Parameters in SQL Server 2008, we can pass an ADO.NET DataTable to SQL Server as a Stored Procedure parameter. The following is the client side .NET code. Although the data saved in the singleton class "DataSourceToUpload" is a DataTable by itself, the following code regenerates a DataTable. In real world applications, the data to be sent may not always in the DataTable format. Counting the time spent on generating the DataTable makes the comparison more practically meaningful.

private DataTable TestWithSQLTableType()

{

DataTable TableToUpload = new DataTable();

TableToUpload.Columns.Add("ID",

System.Type.GetType("System.Int32"));

TableToUpload.Columns.Add("Name",

System.Type.GetType("System.String"));

TableToUpload.Columns.Add("Score",

System.Type.GetType("System.Int32"));

foreach (DataRow row in DataToUpload.Rows)

{

int ID = System.Convert.ToInt32(row["ID"]);

string Name = System.Convert.ToString(row["Name"]);

int Score = System.Convert.ToInt32(row["Score"]);

DataRow aNewRow = TableToUpload.NewRow();

aNewRow["ID"] = ID;

aNewRow["Name"] = Name;

aNewRow["Score"] = Score;

TableToUpload.Rows.Add(aNewRow);

}

SqlCommand cmd = new SqlCommand();

cmd.CommandText = "UploadByTableType";

cmd.Parameters.AddWithValue("@DataInTableType", TableToUpload);

cmd.CommandType = CommandType.StoredProcedure;

DataTable aTable = SQLServerUtility.GetADataTable(cmd);

return aTable;

}

On the SQL Server side, we need to first create a user defined Table type and use it as the Stored Procedure parameter type.

IF EXISTS (SELECT * FROM sys.objects

WHERE object_id = OBJECT_ID(N'[dbo].[UploadByTableType]')

AND type in (N'P', N'PC'))

DROP PROCEDURE [dbo].[UploadByTableType];

GO

IF EXISTS (SELECT * FROM sys.types st JOIN sys.schemas ss

ON st.schema_id = ss.schema_id

WHERE st.name = N'TStudentScore' AND ss.name = N'dbo')

DROP TYPE [dbo].[TStudentScore];

GO

CREATE TYPE [dbo].[TStudentScore] AS TABLE(

[ID] [int] NULL,

[Name] [varchar](250) NULL,

[Score] [int] NULL

);

GO

CREATE PROCEDURE dbo.UploadByTableType

@DataInTableType AS TStudentScore READONLY

AS

BEGIN

SELECT * FROM @DataInTableType

END;

GO

Run the Test Application

To run the test application, you will need an instance of SQL Server 2008, and you will need enough permissions to run the above SQL scripts to generate the Stored Procedures and the user defined Table type.

You will also need to check the content in the application's "App.config" file.

="1.0"="utf-8"

<configuration>

<appSettings>

<add key="SQLServerConnectionString"

value="Data Source=localhost;Initial Catalog=Experiment;Integrated Security=SSPI;"/>

<add key="NoOfRowsOfDataInTheTest"

value="100000"/>

</appSettings>

</configuration>

Two values need to be configured. One is the connection string to connect to SQL Server. You will need to make sure the connection string has enough credentials to access the Stored Procedures and the user defined Table type. In my experiment, the login in the connection string is the server administrator, so I have unlimited access. The other value to configure is the number of rows of test data used for the comparison. I set this value to 100000 in my experiment.

If you have Visual Studio 2008, you can download the source code and compile it to run. If you do not have Visual Studio, the article comes with a compiled EXE. You can simply change the application's configuration file and run this EXE program.

Averaging the time spent by each method 10 times, I get the following result:

| Method | Time Spent (seconds) |

Pass as Text separated by special characters | 33 |

Pass as XML | 8 |

Pass as DataTable | 0.89 |

The difference is very impressive. Passing as XML is over 4 times faster than passing as Text separated by special characters, while passing as DataTable is almost 10 times faster than passing as XML. The following picture shows the result when uploading the data in DataTable format.

What Makes the Difference so Big?

To answer this question, I did another experiment. I changed the three Stored Procedures by issuing the following SQL script, so the Stored Procedures do not parse the information but make an immediate return.

ALTER PROCEDURE [dbo].[UploadByTextType]

@DataInTextType AS TEXT

AS

BEGIN

SELECT 'A' AS A

END;

GO

ALTER PROCEDURE dbo.UploadByTableType

@DataInTableType AS TStudentScore READONLY

AS

BEGIN

SELECT 'A' AS A

END;

GO

ALTER PROCEDURE [dbo].[UploadByXMLType]

@DataInXMLType AS TEXT

AS

BEGIN

SELECT 'A' AS A

END;

GO

Repeating the experiment, I get the following result:

| Method | Time Spent (seconds) |

Pass as Text separated by special characters | 0.2 |

Pass as XML | 0.43 |

Pass as DataTable | 0.57 |

This result is not surprising. The length of the XML string is longer than the length of the text string for the same amount of information, and the DataTable is a more complex object than an XML string. Summarizing the results from the two experiments, we can come to the following conclusion:

- The time spent to send information to SQL Server alone is relatively short compared to the time for the Stored Procedures to process the information to an easy to use form. In this article, we programmed the Stored Procedures to transform the information into a temporary table or equivalent when necessary, which is the most common form in practice.

- Sending structured data in a form that is easy for the Stored Procedure to use, such as XML, and particularly

DataTable, we can significantly improve the overall application performance.

More on Passing Data as Table Valued Parameters

The huge performance advantage by passing structured data as a DataTable to the Stored Procedures is demonstrated. But this is not the end of the story. If we create a new login to the SQL Server database, assign execution permission to the Stored Procedures, and change the "App.config" to use this login, the application fails immediately when sending the DataTable to the database. This is because we created a user defined Table type, and we will need to the grant execution permission to this user defined type to the login. I am not sure why Microsoft added this level of security. It seems to be an unnecessary maintenance penalty when we want to work in an efficient way.

Conclusion

This article made a comparison on the time spent to pass structure data to SQL Server stored procedures by different methods. We can find that the performance difference is very impressive if we want the information to be transformed into an easy to use form in the Stored Procedure. When data volume is small, this difference should not affect the overall application significantly. When the data volume becomes larger, a proper choice of the methods becomes necessary.

Performance is never the only reason when choosing among different methods, but it definitely deserves to be one of the reasons, and the choice is yours.

Limitations of this Experiment

It seems that this experiment has answered my questions and my choice is made, but it still has limitations.

- My entire test is performed on a development SQL Server located on the same computer as the client program, which is rarely the case in a production environment. What would the result be when the client and server are located on different computers?

- Are all my implementations for the methods the most efficient implementations?

To answer these questions, further tests are needed. If you are interested in conducting more extensive tests, I am eager to know your findings, and your feedback is appreciated.

History

This is the first revision of this article.